Practical advice on partitioning data: generating PartitionKey and RowKey for Azure Table Storage

Hello. This is the final article in the series “The internal structure and architecture of the AtContent.com service”. In it we share the best practices we have gathered while working with Azure Table Storage and with the platform as a whole. The article can serve as a starting point for designing typical data structures and will help you use Windows Azure resources more efficiently.

Horizontal scaling relies heavily on data sharing and the Windows Azure platform is no exception. One of the components of the platform is the Azure Storage Table - NoSQL database with unlimited growth. But many developers ignore it in favor of familiar and familiar SQL. In this case, quite often tasks are solved using the Azure Storage Table much more efficiently than using Azure SQL.

')

Here you will find practice and application scenarios for Azure Storage Tables.

The most common use case is storage lists. It fits nicely into the Azure Storage Tables architecture. Lists can be very different, but the most frequently used ones are records by date.

The first thing you should pay attention to when working is how values are selected from the table. There are several features here:

It is always necessary to remember about sampling in parts, because Table Storage can return any number of records and a continuation token. Although most often this happens when there are more than 1000 entries. The SDK has a mechanism that allows you to process it automatically, so you should not worry too much about it. It is necessary to remember only that each request is an additional transaction. If you have a 2001 entry in the sample, there will be at least 3 calls to the repository.

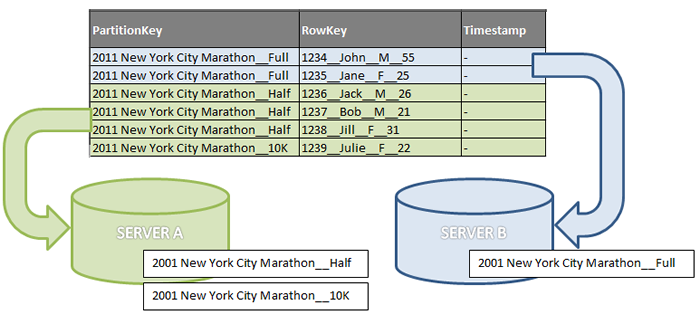

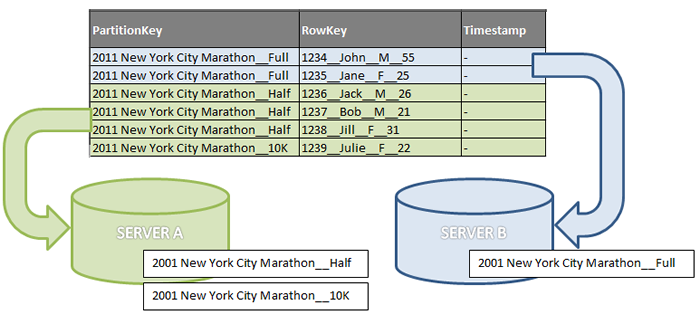

Also need to remember about PartitionKey. This is a very important key, which is responsible for the separation of data into sections. If you do not specify it in the request, then the cloud storage will have to poll all sections and then collect the data in one set. In this case, different sections can be located on different servers. And it will not add performance to your requests. In addition, it will increase the number of transactions to the repository.

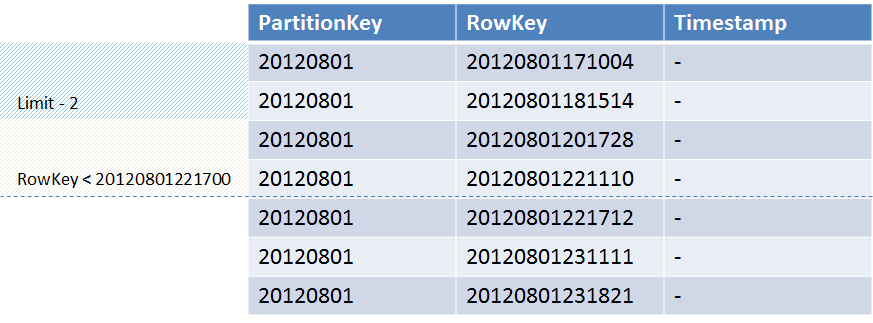

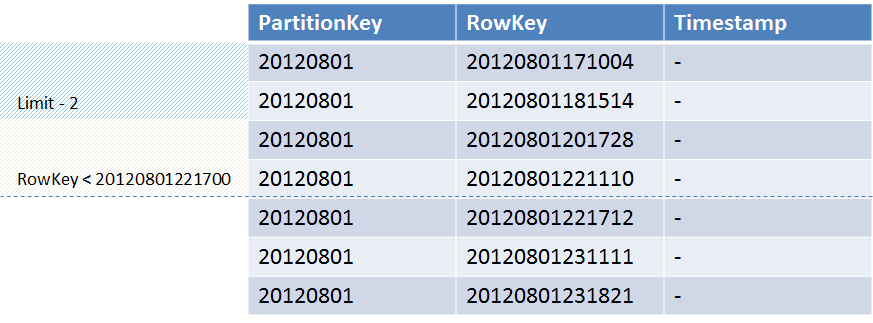

And finally, the least obvious feature is the selection of records by condition. Experimentally it was found that if you specify the condition “less than” or “less than or equal to” for RowKey, then the selected values will go from the minimum to the one that will satisfy the condition. In this case, the order of the returned entries will be from smaller to larger. That is, if you have 10,000 records in a table that satisfy the condition, and you only need the first 100, for which the RowKey is less than a certain value, then the storage will select 100 records starting from the minimum RowKey value.

As an example, consider a table in which several records with the key RowKey from a date. And we need to select 2 records for which RowKey is less than 20120801221700. We expect that the repository will return records with the keys “20120801221110” and “20120801201728”. But in accordance with the sorting order, the repository will return the values “20120801171004” and “20120801181514”.

This can be overcome using an inverted key. So, in the case of a date, it is DateTime.MaxValue - CurrentDateTime. Then, the sample will use the opposite condition and it will work correctly.

When designing an application, you should be guided by the recommendations that Microsoft makes regarding the Storage Table ( http://msdn.microsoft.com/en-us/library/windowsazure/hh508997.aspx ). The meaning of these recommendations is very simple. Design your applications so that there are not too many partition keys, and not too few. And also, that there were not too many entries in each section. It is desirable that they are divided into sections evenly. If it is possible to predict the load on the sections, then the sections that will be subject to greater load should be filled with a smaller number of records. Based on the Storage Tables architecture, partitions that are under heavy load are automatically replicated. And the smaller the partition size is, the faster replication will occur.

During development, we faced the problem of selecting partition keys for a table in which data is stored for identifying and authenticating users. Since we identify the user primarily by email, it was necessary to choose a system according to which they would be divided into sections. After conducting a small experiment, it was found that a good uniform distribution gives MD5 hashing and the subsequent separation of part of the bits from the hash to determine the partition number.

Such a simple code will allow you to get the necessary number of bits from the MD5 hash and use it as a section number:

This method, like many others, is available as part of CPlaseEngine, a link to which can be found at the end of the article.

In consequence, we extended this practice to other parts of our system, and it showed itself from a very good side. Although in some cases partitioning is more profitable by user ID. Or on the part of the user ID. This allows you to build queries to select data related to a specific user. In this case, requests are effective in the sense that one has to choose from one section.

As you can see earlier, often the key for recording is associated with some date. Most often, the date of creation. In our system, this is very common. And it is very effective for records, of which after you need to get a list starting from some date.

Naturally, the recording key must be unique. This uniqueness should be for a pair of “partition key” - “write key”. Therefore, when generating a key, it is safest to add a few random characters to it.

If you plan to select records in the form of lists, then the general recommendation for generating a record key is to associate it with the parameter with which you will build the list. So, for example, if you build a list of values by date, then it is best to use the date when generating the key.

The practice of dividing by key hash can be applied not only in relation to Table Storage. It extends very well to the file system. When caching entities from Table Storage to the cache on an instance, we also use a hash partition from the file path.

Horizontal scaling is closely related to the separation of data and the more efficiently you will share the data - the more efficient the system will work. Windows Azure as a platform provides a very powerful infrastructure for building reliable, scalable, fault-tolerant services. But at the same time, we should not forget that in order to build such services, the internal architecture must comply with the principles of building high-load systems.

I also want to be happy to announce that our OpenSource project CPlaseEngine has appeared on the CodePlex openly. It contains tools that allow you to develop services on the Windows Azure platform more efficiently. You can download it at https://cplaseengine.codeplex.com/ .

Read in the series:

Horizontal scaling relies heavily on data partitioning, and the Windows Azure platform is no exception. One of the platform's components is Azure Table Storage, a NoSQL store that can grow practically without limit. Many developers nevertheless ignore it in favor of familiar SQL, even though many tasks can be solved far more efficiently with Azure Table Storage than with Azure SQL.

Below are practical recommendations and usage scenarios for Azure Table Storage.

Azure Table Storage in general

The most common use case is storing lists, which fits the Azure Table Storage architecture well. Lists vary widely, but the most common ones are lists of records ordered by date.

The first thing to pay attention to is how values are retrieved from a table. There are several peculiarities here:

- Results are returned in batches of at most 1000 entities;

- Queries that do not specify the partition key (PartitionKey) are very slow;

- Range queries on the key with a “less than” or “less than or equal to” condition behave in a non-obvious way.

Always keep in mind that results come back in parts: Table Storage may return any number of records together with a continuation token, although this most often happens when the result contains more than 1000 entities. The SDK can follow continuation tokens automatically, so you rarely need to handle them yourself. Just remember that each request is a separate transaction: if a query returns 2001 entities, there will be at least 3 calls to the storage.
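To make the transaction count concrete, here is a minimal simulation that does not use the Azure SDK. `FetchPage` stands in for a hypothetical query endpoint that returns at most 1000 entities plus a continuation token (here simply the index of the next entity; real tokens are opaque), and `ReadAll` follows the tokens the way the SDK does automatically while counting the calls. All names are illustrative.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory stand-in for a table query: not the Azure SDK.
public static class PagingSketch
{
    public const int PageSize = 1000;

    // One simulated storage call: returns up to PageSize entities and the token
    // for the next page (null when there is nothing left).
    public static (List<int> Page, int? Next) FetchPage(IReadOnlyList<int> source, int start)
    {
        var page = source.Skip(start).Take(PageSize).ToList();
        int next = start + page.Count;
        return (page, next < source.Count ? next : (int?)null);
    }

    // Follows continuation tokens until the result is exhausted.
    // Returns all entities and the number of calls that were needed.
    public static (List<int> All, int Requests) ReadAll(IReadOnlyList<int> source)
    {
        var all = new List<int>();
        int requests = 0;
        int? token = 0;
        while (token.HasValue)
        {
            var (page, next) = FetchPage(source, token.Value);
            requests++; // every call is a separate, billable transaction
            all.AddRange(page);
            token = next;
        }
        return (all, requests);
    }
}
```

For 2001 entities `ReadAll` makes three calls (1000 + 1000 + 1). The real service may also cut a page short before 1000 entities, which is why the count above is a lower bound.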

You also need to keep PartitionKey in mind. It is a very important key: it determines how data is split into partitions. If a query does not specify it, the storage has to scan every partition and then merge the results into one set. Different partitions may be located on different servers, so this does nothing for the performance of your queries, and it also increases the number of storage transactions.
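As a sketch of what specifying the PartitionKey looks like in a query, the hypothetical helper below builds an OData `$filter` string of the kind Table Storage accepts, pinning the query to one partition and a RowKey range. The class and method names are assumptions for illustration only.

```csharp
using System;

public static class FilterSketch
{
    // Quotes a string literal for an OData $filter expression: embedded single quotes are doubled.
    public static string Quote(string value) => "'" + value.Replace("'", "''") + "'";

    // Builds a filter for one partition and the RowKey range [fromRowKey, toRowKey).
    // Including PartitionKey lets the service answer from a single partition
    // instead of evaluating the RowKey condition in every partition.
    public static string PartitionRange(string partitionKey, string fromRowKey, string toRowKey) =>
        $"PartitionKey eq {Quote(partitionKey)} and RowKey ge {Quote(fromRowKey)} and RowKey lt {Quote(toRowKey)}";
}
```

Drop the `PartitionKey eq ...` clause and the same RowKey condition has to be checked across the whole table.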

Finally, the least obvious peculiarity is selecting records by a condition. We found experimentally that if you specify a “less than” or “less than or equal to” condition on RowKey, the results start from the smallest key and run up toward the boundary, in ascending order. So if 10,000 records in a table satisfy the condition and you only need 100 of them below a certain RowKey value, the storage will return the 100 records with the smallest RowKey values, not the 100 closest to the boundary.

For example, consider a table with several records whose RowKey is built from a date. We need to select 2 records whose RowKey is less than 20120801221700. We would expect the storage to return the records with keys “20120801221110” and “20120801201728”. Because of the sort order, however, it returns “20120801171004” and “20120801181514”.
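The effect can be reproduced locally. The sketch below only simulates the service: it sorts row keys as strings in ascending order, the order in which the storage returns them, applies the “less than” condition and takes the first N results. `OrderingSketch` and `TakeLessThan` are illustrative names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class OrderingSketch
{
    // Simulates "take N where RowKey < boundary": rows come back in ascending
    // string order, so the N smallest matching keys are returned,
    // not the N keys closest to the boundary.
    public static List<string> TakeLessThan(IEnumerable<string> rowKeys, string boundary, int count) =>
        rowKeys.OrderBy(k => k, StringComparer.Ordinal)
               .Where(k => string.CompareOrdinal(k, boundary) < 0)
               .Take(count)
               .ToList();
}
```

With the four keys from the example, `TakeLessThan(keys, "20120801221700", 2)` returns `20120801171004` and `20120801181514`, the two oldest records.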

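This can be worked around with an inverted key. For a date, the key is DateTime.MaxValue - CurrentDateTime, written with a fixed width so that string order matches numeric order; newer records then get smaller keys. The query uses the opposite condition (“greater than” instead of “less than”) and returns the expected records.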
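A minimal sketch of the inverted key, assuming it is built from `DateTime.Ticks` and zero-padded to 19 digits (the width of `DateTime.MaxValue.Ticks`); the padding scheme and all names are our assumptions. `LatestBefore` again simulates the service's ascending key order locally, now with the “greater than” condition.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

public static class InvertedKeySketch
{
    // Inverted RowKey: later moments produce smaller keys. Zero-padding to 19 digits
    // makes ordinal string order match numeric order.
    public static string InvertedKey(DateTime moment) =>
        (DateTime.MaxValue.Ticks - moment.Ticks).ToString("D19", CultureInfo.InvariantCulture);

    // Parses keys written as yyyyMMddHHmmss, as in the article's example.
    public static DateTime ParseStamp(string stamp) =>
        DateTime.ParseExact(stamp, "yyyyMMddHHmmss", CultureInfo.InvariantCulture);

    // "The N most recent records before boundary": with inverted keys this becomes
    // RowKey > InvertedKey(boundary), and ascending key order yields the newest first.
    public static List<DateTime> LatestBefore(IEnumerable<DateTime> moments, DateTime boundary, int count)
    {
        string from = InvertedKey(boundary);
        return moments.Select(m => (Key: InvertedKey(m), Moment: m))
                      .OrderBy(e => e.Key, StringComparer.Ordinal)
                      .Where(e => string.CompareOrdinal(e.Key, from) > 0)
                      .Take(count)
                      .Select(e => e.Moment)
                      .ToList();
    }
}
```

For the example dates and the boundary 2012-08-01 22:17:00, `LatestBefore(..., 2)` returns 22:11:10 and 20:17:28, the two records we actually wanted.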

Choosing partition keys in practice

When designing an application, follow Microsoft's recommendations for Table Storage (http://msdn.microsoft.com/en-us/library/windowsazure/hh508997.aspx). Their essence is simple: design your application so that there are neither too many nor too few partition keys, and so that no partition holds too many entries. Ideally, records are spread evenly across partitions. If you can predict the load on individual partitions, the more heavily loaded ones should hold fewer records. Given the Table Storage architecture, heavily loaded partitions are replicated automatically, and the smaller a partition is, the faster this replication happens.

During development we had to choose partition keys for the table that stores user identification and authentication data. Since we identify users primarily by e-mail, we needed a scheme for distributing them across partitions. A small experiment showed that a good, uniform distribution is obtained by hashing the e-mail with MD5 and taking some of the hash bits as the partition number.

This simple code extracts the required number of bits from the MD5 hash so that they can be used as the partition number:

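```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class PartitionHashing
{
    // Returns the first BitCount bits of the MD5 hash of Input as a string of '0'/'1'.
    // A BitCount outside 1..128 returns all 128 bits.
    public static string GetHashMD5Binary(string Input, int BitCount = 128)
    {
        if (Input == null) return null;
        if (BitCount < 1 || BitCount > 128) BitCount = 128;

        byte[] md5Bytes;
        using (MD5 md5 = MD5.Create())
        {
            // UTF-8 gives the same bytes on every machine, while Encoding.Default
            // depends on the system code page; for ASCII input both are identical.
            md5Bytes = md5.ComputeHash(Encoding.UTF8.GetBytes(Input));
        }

        var result = new StringBuilder(128);
        foreach (byte b in md5Bytes)
        {
            // Each byte becomes 8 binary digits, most significant bit first.
            result.Append(Convert.ToString(b, 2).PadLeft(8, '0'));
            if (result.Length >= BitCount) break; // enough bits collected
        }
        return result.ToString(0, BitCount);
    }
}
```

This method, like many others, is available as part of CPlaseEngine; a link is given at the end of the article.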
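`GetHashMD5Binary` returns the bits as a string. If an integer partition number is more convenient, the same leading bits can be read directly from the hash bytes. The sketch below is a hypothetical companion, not part of CPlaseEngine; it hashes UTF-8 bytes, so for ASCII input such as typical e-mail addresses its `PartitionKey` should equal `GetHashMD5Binary(input, bitCount)`.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class HashPartitionSketch
{
    // Maps a string (e.g. an e-mail) to one of 2^bitCount partitions
    // using the first bitCount bits of its MD5 hash.
    public static int PartitionNumber(string input, int bitCount)
    {
        if (input == null) throw new ArgumentNullException(nameof(input));
        if (bitCount < 1 || bitCount > 16) throw new ArgumentOutOfRangeException(nameof(bitCount));

        byte[] hash;
        using (MD5 md5 = MD5.Create())
            hash = md5.ComputeHash(Encoding.UTF8.GetBytes(input));

        // First two hash bytes as a big-endian 16-bit number; keep its top bitCount bits.
        int first16 = (hash[0] << 8) | hash[1];
        return first16 >> (16 - bitCount);
    }

    // Formats the partition number as a fixed-width binary string, e.g. "0110" for 4 bits.
    public static string PartitionKey(string input, int bitCount) =>
        Convert.ToString(PartitionNumber(input, bitCount), 2).PadLeft(bitCount, '0');
}
```

With 4 bits, addresses are spread over 16 partitions (`0000` to `1111`). Normalizing the address before hashing (for example, lower-casing it) is worth considering; otherwise differently cased spellings of one address land in different partitions.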

We later extended this practice to other parts of our system, and it proved very effective. In some cases, though, it is more advantageous to partition by user ID, or by part of the user ID. This lets you build queries that select the data belonging to a specific user, and such queries are efficient because they read from a single partition.

Choosing row keys in practice

As shown above, the row key (RowKey) is often derived from a date, most often the creation date. This is very common in our system, and it works very well for records that later have to be listed starting from a given date.

Naturally, the row key must be unique; more precisely, the pair “partition key” + “row key” must be unique. So when generating a key, it is safest to append a few random characters to it.
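A minimal sketch of such a row key, assuming the creation time is formatted as `yyyyMMddHHmmssfff` (fixed width, so keys sort chronologically) and the random part is taken from a new GUID; the format, the `_` separator and the names are illustrative. The suffix makes collisions within one partition unlikely rather than impossible. For newest-first lists, the date part can be replaced with an inverted key.

```csharp
using System;
using System.Globalization;

public static class RowKeySketch
{
    // RowKey = sortable creation time + "_" + a few random hex characters,
    // so two records created in the same millisecond in one partition are unlikely to collide.
    public static string NewRowKey(DateTime createdUtc, int randomChars = 6)
    {
        if (randomChars < 1 || randomChars > 32) throw new ArgumentOutOfRangeException(nameof(randomChars));
        string datePart = createdUtc.ToString("yyyyMMddHHmmssfff", CultureInfo.InvariantCulture);
        string randomPart = Guid.NewGuid().ToString("N").Substring(0, randomChars);
        return datePart + "_" + randomPart;
    }
}
```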

If you plan to select records as lists, the general recommendation is to derive the row key from the parameter by which the list is built. For example, if you build a list of values by date, use the date when generating the key.

Distribution and summary

Partitioning by key hash is not limited to Table Storage; it carries over very well to the file system. When we cache Table Storage entities on an instance, we also distribute the cache files into partitions by a hash of the file path.
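Applied to a local file cache, the idea might look like the sketch below: each cached entity's file goes into a subdirectory named after the first hex digits of the MD5 hash of its path or key, so files are spread evenly and no directory grows too large. The directory layout, the `.cache` extension and the names are our assumptions, not necessarily the scheme used in AtContent.com.

```csharp
using System;
using System.IO;
using System.Security.Cryptography;
using System.Text;

public static class CachePathSketch
{
    // Spreads cache files over 16^prefixLength subdirectories named after
    // the first hex digits of the MD5 hash of the entity's path or key.
    public static string CacheFilePath(string cacheRoot, string entityKey, int prefixLength = 2)
    {
        if (entityKey == null) throw new ArgumentNullException(nameof(entityKey));
        if (prefixLength < 1 || prefixLength > 32) throw new ArgumentOutOfRangeException(nameof(prefixLength));

        byte[] hash;
        using (MD5 md5 = MD5.Create())
            hash = md5.ComputeHash(Encoding.UTF8.GetBytes(entityKey));

        string hex = BitConverter.ToString(hash).Replace("-", "").ToLowerInvariant();
        string bucket = hex.Substring(0, prefixLength);
        // The file name is the full hash, so keys with characters invalid in paths are safe.
        return Path.Combine(cacheRoot, bucket, hex + ".cache");
    }
}
```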

Horizontal scaling is closely tied to data partitioning: the better you partition the data, the more efficiently the system works. Windows Azure provides a very powerful infrastructure for building reliable, scalable, fault-tolerant services. At the same time, we should not forget that to build such services, the internal architecture must follow the principles of high-load system design.

I am also happy to announce that our open-source project CPlaseEngine is now publicly available on CodePlex. It contains tools for developing services on the Windows Azure platform more efficiently. You can download it from https://cplaseengine.codeplex.com/

Also in this series:

- “AtContent.com. Internal structure and architecture”

- “The mechanism of messaging between roles and instances”

- “Caching data on an instance and managing caching”

- “Effective cloud queue management (Azure Queue)”

- “LINQ extensions for Azure Table Storage, implementing Or and Contains operations”

Source: https://habr.com/ru/post/143838/

All Articles