Neural networks for dummies. The beginning

It so happened that at university the topic of neural networks passed my major by, despite my great interest in it. Several attempts at self-education ended with my ignorant forehead hitting the indestructible walls of the citadel of science: incomprehensible terms and confusing explanations in the dry language of university textbooks.

In this article (a series of articles?) I will try to cover neural networks from the point of view of an uninitiated person: in simple language, with simple examples, laying everything out step by step, rather than "a neuron array forms a perceptron that works according to a well-known, proven scheme".



Goals

What are neural networks for?

A neural network is a learning system. It acts not only according to a given algorithm and formulas, but also on the basis of past experience. Something like a child who assembles a puzzle again and again, making fewer mistakes each time.

And, as fashionable authors like to write, a neural network consists of neurons.

Let's stop here and figure out what that means.

Let's agree that a neuron is just an imaginary black box with a bunch of inputs and one output.

Both the incoming and the outgoing information can be analog (most often it will be).

How the output signal is formed from the heap of inputs is defined by the neuron's internal algorithm.
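To make the black box concrete, here is a minimal sketch in the article's language (it compiles with Free Pascal and should work in Delphi): the neuron takes any number of analog inputs and returns one analog output, and the function inside decides how. The class name TBlackBoxNeuron and the averaging rule are hypothetical illustrations only, not the algorithm used later in this article.

```pascal
program BlackBoxNeuron;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

type
  // Hypothetical illustration: many inputs in, one output out.
  TBlackBoxNeuron = class
    function Compute(const Inputs: array of Double): Double;
  end;

// The "internal algorithm": here simply the average of the inputs.
// Any other rule could be plugged in without changing the interface.
function TBlackBoxNeuron.Compute(const Inputs: array of Double): Double;
var
  i: Integer;
  Sum: Double;
begin
  Sum := 0;
  for i := Low(Inputs) to High(Inputs) do
    Sum := Sum + Inputs[i];
  if Length(Inputs) > 0 then
    Result := Sum / Length(Inputs)
  else
    Result := 0;
end;

var
  N: TBlackBoxNeuron;
begin
  N := TBlackBoxNeuron.Create;
  try
    WriteLn(N.Compute([0.2, 0.5, 0.8]):0:2); // analog in, analog out: 0.50
  finally
    N.Free;
  end;
end.
```

Swapping the body of Compute for a different rule changes the neuron's behaviour while the "many inputs, one output" shape stays the same.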

As an example, we will write a small program that recognizes simple images, say, letters of the Russian alphabet in raster images.

We agree that in its initial state our system will have an "empty" memory, i.e. a kind of newborn brain, ready for battle.

To make it work correctly, we will need to spend time training it.

Dodging the tomatoes flying my way, I will say that we will write in Delphi (it was what I had at hand when writing this article). If needed, I will help translate the example into other languages.

I also ask you to go easy on the quality of the code: the program was written in an hour, just to get to grips with the topic, and such code is hardly suitable for serious tasks.

So, given the task, how many possible answers can there be? Right: as many as the letters we want to be able to identify. The alphabet has only 33 of them, so let's settle on that.

Next, let's define the input data. To keep things simple, we will feed a 30x30 bitmap to the input:

So in the end we need to create 33 neurons, each with 30x30 = 900 inputs.

Create a class for our neuron:

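```pascal
type
  Neuron = class
    name: string;                           // the letter this neuron stands for
    input: array[0..29, 0..29] of integer;  // the 30x30 input image
    output: integer;                        // where the neuron reports its decision
    memory: array[0..29, 0..29] of integer; // experience from previous images, 0..255 per pixel
    weight: integer;                        // similarity score; the recognition code uses it, though the original class omitted it
  end;
```

Create an array of neurons, one per letter: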

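```pascal
// neuro_web is assumed to be declared as: var neuro_web: array[0..32] of Neuron;
for i:=0 to 32 do
begin
  neuro_web[i]:=Neuron.Create;
  neuro_web[i].output:=0;              // no decision yet
  neuro_web[i].name:=chr(Ord('А')+i);  // Cyrillic 'А' onward; Ё lies outside the А..Я range, so i = 32 gives lowercase 'а'
end;
```

Now the question is: where do we store the network's "memory" while the program is not running?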

To avoid messing with INI files or, God forbid, databases, I decided to store it in the same kind of 30x30 bitmap images.

Here, for example, is the memory of the neuron "K" after running the program on different fonts:

As you can see, the most saturated areas correspond to the pixels that occur most often.

We will load “memory” into each neuron when it is created:

```pascal
p:=TBitmap.Create;
p.LoadFromFile(ExtractFilePath(Application.ExeName)+'\res\'+neuro_web[i].name+'.bmp');
// (copying the pixel values into neuro_web[i].memory is not shown)
```

At the start, before any training, the memory of each neuron is a white 30x30 spot.
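A bitmap works as storage because each memory cell (0..255) can be written as a gray pixel, RGB(n, n, n), and read back from any single channel. Below is a sketch of that round trip without the VCL, assuming the Windows TColor layout $00BBGGRR (red in the lowest byte); the function names GrayToColor and ColorToGray are hypothetical.

```pascal
program GrayPixels;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

// A memory cell stored as a gray pixel: the same value in R, G and B,
// which is what RGB(n, n, n) produces for a $00BBGGRR color.
function GrayToColor(n: Byte): Cardinal;
begin
  Result := n or (Cardinal(n) shl 8) or (Cardinal(n) shl 16);
end;

// Reading it back: for a gray pixel every channel holds n, so take red.
function ColorToGray(c: Cardinal): Byte;
begin
  Result := c and $FF;
end;

var
  n, Mismatches: Integer;
begin
  Mismatches := 0;
  // every possible memory value must survive the round trip unchanged
  for n := 0 to 255 do
    if ColorToGray(GrayToColor(n)) <> n then
      Inc(Mismatches);
  WriteLn('mismatches: ', Mismatches); // mismatches: 0
  WriteLn(GrayToColor(128));           // 8421504, i.e. $808080
end.
```

Because nothing is lost in the round trip, the memory can be edited, viewed and backed up as ordinary image files.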

The neuron recognizes an image like this:

- Take the 1st pixel of the input

- Compare it with the 1st pixel in memory (which holds a value of 0..255)

- Compare the difference with a certain threshold

- If the difference is below the threshold, we consider the letter at this point similar to the one in memory and add 1 to the neuron's weight.

And so on for all the pixels.
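The steps above fit into one function. This sketch follows the comparison used in the article's recognition loop: a pixel counts when |m − n| < 120 and the input pixel is not white (m < 250). The names TGrid, Similarity and Fill are chosen for this illustration only.

```pascal
program SimilarityDemo;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

const
  Size = 30;       // images are 30x30
  Threshold = 120; // max difference for a pixel to count as "similar"
  White = 250;     // input pixels at least this bright are background

type
  TGrid = array[0..Size - 1, 0..Size - 1] of Integer; // brightness 0..255

// Weight = number of non-white input pixels close to the stored memory.
function Similarity(const Memory, Image: TGrid): Integer;
var
  x, y: Integer;
begin
  Result := 0;
  for x := 0 to Size - 1 do
    for y := 0 to Size - 1 do
      if (Abs(Image[x, y] - Memory[x, y]) < Threshold) and (Image[x, y] < White) then
        Inc(Result);
end;

// Fill a grid with one brightness value.
procedure Fill(var G: TGrid; Value: Integer);
var
  x, y: Integer;
begin
  for x := 0 to Size - 1 do
    for y := 0 to Size - 1 do
      G[x, y] := Value;
end;

var
  Memory, Image: TGrid;
begin
  Fill(Memory, 255);   // untrained neuron: a white spot
  Fill(Image, 255);
  Image[10, 10] := 0;  // one black pixel of a "letter"
  WriteLn(Similarity(Memory, Image)); // 0: white ignored, black differs by 255
  Memory[10, 10] := 40;               // memory has seen a dark pixel here
  WriteLn(Similarity(Memory, Image)); // 1
end.
```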

The weight of a neuron is a number (up to 900 in theory, one point per pixel) that reflects how similar the processed image is to what is stored in memory.

At the end of recognition we have a set of neurons, each of which believes it is right to some percent. These percentages are the neurons' weights. The greater the weight, the more likely it is that this particular neuron is right.
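Since each matching pixel adds one point, the percentage is just the weight divided by the maximum of 900 (30x30). A minimal sketch with a hypothetical helper name, WeightToPercent:

```pascal
program WeightPercent;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

const
  MaxWeight = 30 * 30; // one point per pixel, so at most 900

// Share of pixels that matched the neuron's memory, in percent.
function WeightToPercent(Weight: Integer): Double;
begin
  Result := 100.0 * Weight / MaxWeight;
end;

begin
  WriteLn(WeightToPercent(450):0:1); // 50.0
  WriteLn(WeightToPercent(117):0:1); // 13.0
end.
```

Each neuron is scored independently against its own memory, so these percentages are not probabilities and do not add up to 100 across the 33 neurons; only their relative size matters.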

Now we feed the program an arbitrary image and run it through each neuron:

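```pascal
// executed for each neuron i; neuro_web[i].weight should be reset to 0 before this loop
for x:=0 to 29 do
  for y:=0 to 29 do
  begin
    n:=neuro_web[i].memory[x,y];  // stored value, 0..255
    m:=neuro_web[i].input[x,y];   // input value, 0..255
    if abs(m-n)<120 then          // the pixel is similar to memory
      if m<250 then               // white background pixels are not counted
        neuro_web[i].weight:=neuro_web[i].weight+1;
    // pull the memory toward the input (non-white pixels only)
    if m<>0 then
    begin
      if m<250 then n:=round((n+(n+m)/2)/2);
      neuro_web[i].memory[x,y]:=n;
    end
    else if n<>0 then
      if m<250 then n:=round((n+(n+m)/2)/2);
    neuro_web[i].memory[x,y]:=n;
  end;
```

As soon as the loop for the last neuron finishes, we pick the one with the greatest weight: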

```pascal
if neuro_web[i].weight>max then
begin
  max:=neuro_web[i].weight;
  max_n:=i;
end;
```

This max_n is the program's answer: the letter it thinks we have shown it.
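The selection step as a standalone function, assuming the weights have already been computed; BestNeuron is a hypothetical name. Like the article's code it uses a strict >, so the first of several equal weights wins. It starts from neuron 0 rather than from a running maximum of 0, so it always returns a valid index, even when every weight is 0.

```pascal
program ArgMaxDemo;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

// Index of the largest weight; the first one wins a tie (strict >).
// Assumes at least one weight is passed.
function BestNeuron(const Weights: array of Integer): Integer;
var
  i, Best: Integer;
begin
  Result := 0;
  Best := Weights[0];
  for i := 1 to High(Weights) do
    if Weights[i] > Best then
    begin
      Best := Weights[i];
      Result := i;
    end;
end;

begin
  WriteLn(BestNeuron([120, 415, 390, 415])); // 1: first of the two 415s
  WriteLn(BestNeuron([0, 0, 0]));            // 0: still a valid index
end.
```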

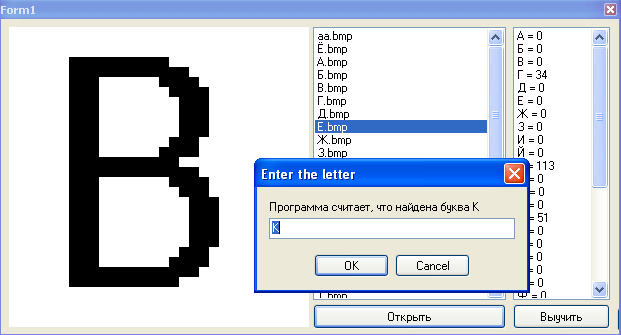

At first the answer will not always be right, so we need a learning algorithm.

```pascal
s:=InputBox('Enter the letter', 'I think it is '+neuro_web[max_n].name, neuro_web[max_n].name);
for i:=0 to 32 do
begin
  if neuro_web[i].name=s then  // the neuron the teacher named
  begin
    // write its memory back to the bitmap as gray pixels
    for x:=0 to 29 do
    begin
      for y:=0 to 29 do
      begin
        p.Canvas.Pixels[x,y]:=RGB(neuro_web[i].memory[x,y], neuro_web[i].memory[x,y], neuro_web[i].memory[x,y]);
      end;
    end;
    p.SaveToFile(ExtractFilePath(Application.ExeName)+'\res\'+neuro_web[i].name+'.bmp');
  end;
end;
```

The memory update itself is done by this line:

```pascal
n:=round((n+(n+m)/2)/2);
```

That is, if this point is missing from the neuron's memory, but the teacher says it belongs to this letter, we remember it, though not completely, only partially. With further training, the effect of this lesson grows.
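Expanding the rule from the recognition loop, round((n + (n + m)/2)/2), shows how fast a lesson sinks in: (n + (n + m)/2)/2 = (3n + m)/4, so each lesson moves the stored value a quarter of the way toward the input pixel. A sketch for a single pixel, starting from white memory (255) and a black input (0); Update is a hypothetical name, and the article applies the rule only to non-white input pixels (m < 250).

```pascal
program UpdateRule;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

// One lesson for one pixel: n is the stored value, m the input value.
// (n + (n + m) / 2) / 2 = (3n + m) / 4: a quarter of the way toward m.
function Update(n, m: Integer): Integer;
begin
  Result := Round((n + (n + m) / 2) / 2);
end;

var
  n, Lesson: Integer;
begin
  n := 255; // untrained memory: white
  for Lesson := 1 to 5 do
  begin
    n := Update(n, 0); // the teacher shows a black pixel here
    Write(n, ' ');
  end;
  WriteLn; // 191 143 107 80 60
end.
```

The gap to the input shrinks geometrically, which is why the most frequently seen pixels end up the darkest in the stored bitmaps.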

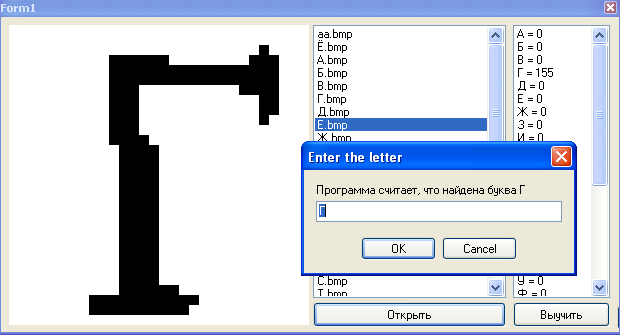

Here are a few iterations for the letter G:

With that, our program is ready.

Training

Let's start training.

We open images of letters and patiently point out the program's mistakes:

After a while, the program begins to reliably identify even letters it has not seen before:
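A compressed version of this training routine as a console program: two hypothetical neurons ('|' and '-'), one synthetic 30x30 image each, and the same comparison and update rule as the article's code. One simplification is an assumption of this sketch: only the neuron named by the teacher is updated, whereas the article updates memory inside the recognition loop and saves only the named neuron to disk. It also shows why training takes a while: a black pixel only counts as similar once the stored value drops below 120, which takes three lessons from white (255, 191, 143, 107).

```pascal
program TrainDemo;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

const
  Size = 30;
  Threshold = 120;
  White = 250;

type
  TGrid = array[0..Size - 1, 0..Size - 1] of Integer;

var
  Memory: array[0..1] of TGrid;  // two hypothetical neurons: '|' and '-'
  Images: array[0..1] of TGrid;  // one training image per letter

procedure Fill(var G: TGrid; Value: Integer);
var x, y: Integer;
begin
  for x := 0 to Size - 1 do
    for y := 0 to Size - 1 do
      G[x, y] := Value;
end;

function Similarity(const Mem, Image: TGrid): Integer;
var x, y: Integer;
begin
  Result := 0;
  for x := 0 to Size - 1 do
    for y := 0 to Size - 1 do
      if (Abs(Image[x, y] - Mem[x, y]) < Threshold) and (Image[x, y] < White) then
        Inc(Result);
end;

// Index of the neuron with the larger weight (neuron 0 wins a tie).
function Recognize(const Image: TGrid): Integer;
begin
  if Similarity(Memory[1], Image) > Similarity(Memory[0], Image) then
    Result := 1
  else
    Result := 0;
end;

// The teacher's correction: pull the named neuron's memory toward the image.
procedure Teach(Neuron: Integer; const Image: TGrid);
var x, y, n: Integer;
begin
  for x := 0 to Size - 1 do
    for y := 0 to Size - 1 do
      if Image[x, y] < White then  // white pixels leave memory alone
      begin
        n := Memory[Neuron][x, y];
        Memory[Neuron][x, y] := Round((n + (n + Image[x, y]) / 2) / 2);
      end;
end;

var
  i, Lesson: Integer;
begin
  Fill(Memory[0], 255); Fill(Memory[1], 255);  // newborn brain: white spots
  Fill(Images[0], 255); Fill(Images[1], 255);
  for i := 0 to Size - 1 do
  begin
    Images[0][15, i] := 0;  // vertical bar
    Images[1][i, 15] := 0;  // horizontal bar
  end;

  for Lesson := 1 to 3 do
    for i := 0 to 1 do
      Teach(i, Images[i]);  // the teacher names the right letter each time

  WriteLn(Recognize(Images[0]), ' ', Recognize(Images[1])); // 0 1
end.
```

After three lessons each neuron scores 30 on its own bar and only 1 on the other (the single shared pixel in the middle), so both images are told apart.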

Conclusion

The program is one continuous flaw: our neural network is very stupid, it is not protected from user errors during training, and the recognition algorithms are dead simple.

But it does give a basic understanding of how neural networks work.

If this article interests the respected Habr readers, I will continue the series, gradually making the system more complex, introducing additional connections and weights, looking at some popular neural network architectures, and so on.

You can poke fun at our newborn intelligence by downloading the program along with its source code here.

With that, I take my leave. Thanks for reading.

UPD: What we have built so far is only a starting point for a neural network. It is not a real one yet, but in the next article we will try to turn it into a full-fledged neural network.

Thanks to Shultc for the comment.

Source: https://habr.com/ru/post/143129/
