Recognizing the image on a token with a camera

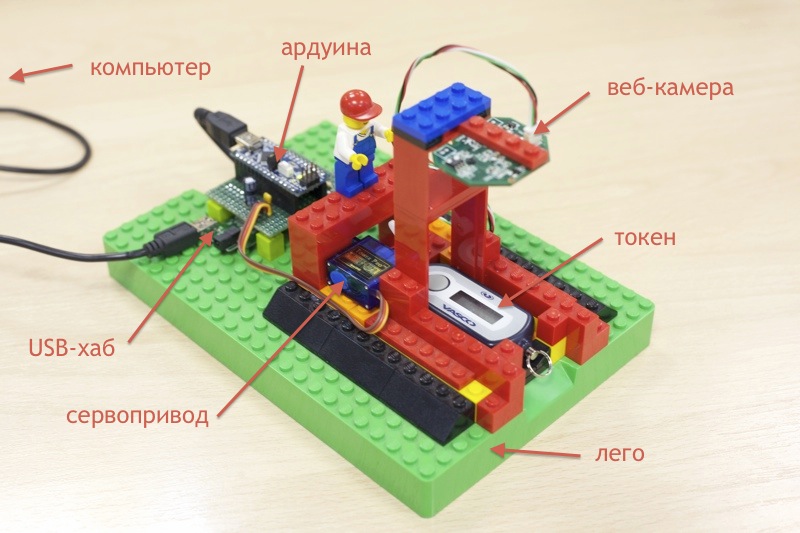

At an organization where I work in my spare time, the security requirements are very strict. Wherever possible, users authenticate with tokens. I was given one of these:

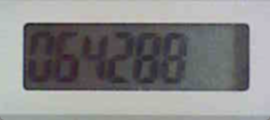

and told: press the button, read the digits, enter the password, and be happy. “Security is paramount, of course, but we shouldn’t forget about comfort,” I thought, and took stock of my electronic junk.

(Honestly, I have long wanted to take a break from programming and dig deeper into hardware, so laziness is not the only motive behind this whole venture.)

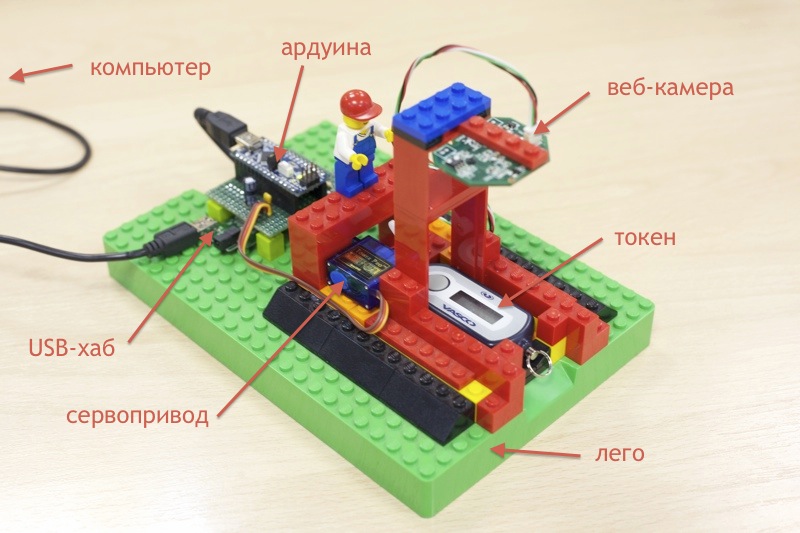

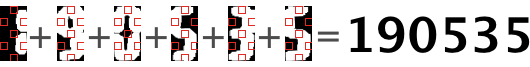

Analysis of technical specifications and selection of components

The first thing I came across was an old Logitech QuickCam 3000 webcam, which had ended up in the junk pile after I bought a laptop with a built-in webcam. The plan came together instantly: we film the token with the camera, recognize the digits on the computer, and... profit! Then I dug up a servo (you don’t want to press the token’s button by hand every time, right?), an old USB hub (my laptop has only two USB ports, and one of them is permanently taken by a USB-Ethernet adapter), and an Arduino board that had ended up in my possession nobody knows how. For the case, I decided to use a Lego construction set that I had bought for my one-year-old son (I think I now understand why my wife smirked when I bought it).


Unfortunately, I have no photos of the donor devices while they were still intact, so I can only show off the finished assembly.

Schematic diagram and microcontroller firmware

Everything is ridiculously simple (I won’t even draw a schematic): a webcam and an Arduino are plugged into a USB hub, and a servo is connected to the Arduino (driven by PWM). That’s all. The program flashed onto the Arduino is trivial: github.com/dreadatour/lazy/blob/master/servo.ino

The Arduino waits for the character 'G' on the serial (COM) port; when it arrives, it swings the servo forward and back. The delay (500 ms) and the servo angle were chosen experimentally.
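On the computer side, triggering a press comes down to writing that single character. Below is a minimal sketch. It assumes the port is opened with pyserial, for example `serial.Serial(port_name, 9600)`. The port name and the 9600 baud rate are placeholders that must match your setup and the firmware. The function itself only needs an object with `write()` and `flush()`, so an in-memory buffer can stand in for testing.

```python
import io
import time


def press_token_button(port, settle_s=0.5):
    """Ask the Arduino to press the token button once.

    `port` is any binary stream with write() and flush(), e.g. a pyserial
    serial.Serial object (assumption: the host code is not shown in the
    article). `settle_s` is a hypothetical pause that gives the servo time
    to press the button and swing back.
    """
    port.write(b"G")  # the firmware reacts to the single character 'G'
    port.flush()      # push the byte out instead of leaving it buffered
    time.sleep(settle_s)


# Usage with an in-memory stand-in for the serial port:
fake_port = io.BytesIO()
press_token_button(fake_port, settle_s=0)
print(fake_port.getvalue())  # b'G'
```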

Choosing a programming language and reviewing existing “computer vision” libraries

The only programming language I would trust with a task as difficult as “computer vision” is the blessed Python. Conveniently, bindings to the glorious OpenCV library are available for it almost out of the box. That settled it.

Token code recognition algorithm

For each step, I link to the piece of code that implements it; in my opinion, this is the best way to present the material. All the code is available on GitHub.

First, we grab an image from the camera.

We make the task easier: the camera is fixed relative to the base, and the token can move only slightly in its tray. So we determine the bounds of the token’s possible positions, crop the camera frame to them, and (purely for our own convenience) rotate the image by 90°.
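Because the camera is fixed, the crop is just a constant rectangle. Here is a minimal sketch on a grayscale image stored as a list of rows. The crop bounds you pass in are hypothetical, and the rotation is assumed to be clockwise, since the article does not say which direction. With OpenCV, the same would be array slicing plus `cv2.rotate(img, cv2.ROTATE_90_CLOCKWISE)`.

```python
def crop(image, top, bottom, left, right):
    """Keep rows top..bottom-1 and columns left..right-1 of a 2-D image."""
    return [row[left:right] for row in image[top:bottom]]


def rotate_90_clockwise(image):
    """Rotate a 2-D image (a list of rows) by 90 degrees clockwise."""
    # Reversing the row order and then transposing turns columns into rows.
    return [list(column) for column in zip(*image[::-1])]


# Usage on a tiny 3x4 "frame" with hypothetical bounds:
frame = [[0, 1, 2, 3],
         [4, 5, 6, 7],
         [8, 9, 10, 11]]
token = rotate_90_clockwise(crop(frame, 0, 2, 1, 3))
print(token)  # [[5, 1], [6, 2]]
```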

Next, we apply a few transformations: convert the image to grayscale and find edges with the Canny edge detector. Among them will be the borders of the token’s LCD screen.
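Here is a simplified, dependency-free sketch of this preprocessing on nested lists. It converts to grayscale with the BT.601 weights (0.299, 0.587, 0.114), the same ones OpenCV's `cvtColor` uses, and then applies a plain Sobel gradient threshold. The second step is a stand-in, not Canny. Canny (`cv2.Canny` in OpenCV) adds Gaussian smoothing, non-maximum suppression, and hysteresis thresholding on top of the gradient. The `threshold` parameter is a hypothetical tuning value.

```python
import math


def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) tuples into rows of 0-255 intensities."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb_image]


def gradient_edges(gray, threshold):
    """Mark pixels whose Sobel gradient magnitude exceeds `threshold`.

    Simplified stand-in for Canny: no smoothing, no non-maximum
    suppression, no hysteresis. Border pixels are left as non-edges.
    """
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal and vertical Sobel responses at (x, y).
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if math.hypot(gx, gy) > threshold:
                edges[y][x] = 255
    return edges
```

On an image that is dark on the left and bright on the right, only the columns next to the boundary are marked.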

We find the contours in the resulting image. Each contour is an array of line segments; we drop the segments shorter than a certain length.
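Filtering out short segments is simple once each contour is treated as a polyline. This sketch assumes each contour is a list of `(x, y)` points, so that consecutive points form segments. The `min_length` cutoff is a hypothetical value, since the article only says “a certain length”.

```python
import math


def contour_to_segments(points):
    """Split a contour (list of (x, y) points) into consecutive segments."""
    return list(zip(points, points[1:]))


def segment_length(segment):
    """Euclidean length of a segment given as ((x1, y1), (x2, y2))."""
    (x1, y1), (x2, y2) = segment
    return math.hypot(x2 - x1, y2 - y1)


def drop_short_segments(segments, min_length):
    """Keep only the segments that are at least `min_length` pixels long."""
    return [s for s in segments if segment_length(s) >= min_length]


contour = [(0, 0), (10, 0), (10, 1), (10, 21)]
long_segments = drop_short_segments(contour_to_segments(contour), 5)
print(long_segments)  # [((0, 0), (10, 0)), ((10, 1), (10, 21))]
```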

Using a naive algorithm, we pick the four lines that bound the LCD display.
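The article does not spell out its naive algorithm, so here is one possibility, offered purely as an assumption. Split the remaining segments into roughly horizontal and roughly vertical ones, then take the topmost, bottommost, leftmost, and rightmost of each. `max_slope` is a hypothetical tolerance for what still counts as axis-aligned.

```python
def pick_bounding_lines(segments, max_slope=0.3):
    """Naively pick the top, bottom, left and right borders of the LCD.

    Assumption: after cropping, the LCD edges are the outermost roughly
    horizontal and roughly vertical segments. Returns None if fewer than
    two segments of either orientation are found.
    """
    horizontal, vertical = [], []
    for seg in segments:
        (x1, y1), (x2, y2) = seg
        dx, dy = abs(x2 - x1), abs(y2 - y1)
        if dy <= max_slope * dx:
            horizontal.append(seg)
        elif dx <= max_slope * dy:
            vertical.append(seg)
        # Diagonal segments fall through and are ignored.
    if len(horizontal) < 2 or len(vertical) < 2:
        return None

    def mid_y(seg):
        return (seg[0][1] + seg[1][1]) / 2

    def mid_x(seg):
        return (seg[0][0] + seg[1][0]) / 2

    return {
        "top": min(horizontal, key=mid_y),
        "bottom": max(horizontal, key=mid_y),
        "left": min(vertical, key=mid_x),
        "right": max(vertical, key=mid_x),
    }
```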

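We find the intersection points of these lines and perform several checks:

- check that the two horizontal sides have approximately the same length, as do the two vertical sides, and that these lengths approximately match the size of the LCD display (which we determined experimentally)

- check that the diagonals are approximately equal (we need a rectangle, i.e. the LCD display)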
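The corner and rectangle checks could be sketched as follows. Each corner is the intersection of the infinite lines through two bounding segments, computed with the standard two-line determinant formula. The tolerance `tol` and the expected LCD size are hypothetical parameters, because the article's experimentally measured values are not given.

```python
import math


def intersect(line_a, line_b):
    """Intersection of the infinite lines through two segments, or None if parallel."""
    (x1, y1), (x2, y2) = line_a
    (x3, y3), (x4, y4) = line_b
    denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if denom == 0:
        return None
    a = x1 * y2 - y1 * x2
    b = x3 * y4 - y3 * x4
    return ((a * (x3 - x4) - (x1 - x2) * b) / denom,
            (a * (y3 - y4) - (y1 - y2) * b) / denom)


def looks_like_lcd(top, bottom, left, right, expected_w, expected_h, tol=0.1):
    """Return corners (tl, tr, br, bl) if they form the expected rectangle, else None."""
    corners = [intersect(top, left), intersect(top, right),
               intersect(bottom, right), intersect(bottom, left)]
    if any(c is None for c in corners):
        return None
    tl, tr, br, bl = corners

    def close(p, q):
        return abs(p - q) <= tol * max(p, q)

    d = math.dist
    top_len, bottom_len = d(tl, tr), d(bl, br)
    left_len, right_len = d(tl, bl), d(tr, br)
    # Opposite sides roughly equal, and roughly the size of the LCD.
    if not (close(top_len, bottom_len) and close(left_len, right_len)):
        return None
    if not (close(top_len, expected_w) and close(left_len, expected_h)):
        return None
    # Equal diagonals rule out parallelograms that are not rectangles.
    if not close(d(tl, br), d(tr, bl)):
        return None
    return tl, tr, br, bl
```

The diagonal check matters: a skewed parallelogram passes the side-length test but fails this one.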

Next, we find the angle by which the token is turned, rotate the image by that angle, and cut out the LCD area:

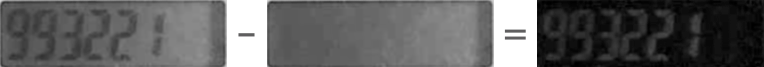

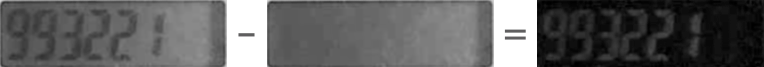
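Here is a sketch of the deskew-and-crop step on nested lists. It assumes the tilt is measured from the top edge found earlier and that rotation uses nearest-neighbor inverse mapping. The helper names are hypothetical. In OpenCV, one would typically build the matrix with `cv2.getRotationMatrix2D` and apply it with `cv2.warpAffine`.

```python
import math


def tilt_angle(top_edge):
    """Angle in degrees between the LCD's top edge and the image x axis.

    Image coordinates have y pointing down, so a positive angle means the
    token appears turned clockwise on screen.
    """
    (x1, y1), (x2, y2) = top_edge
    return math.degrees(math.atan2(y2 - y1, x2 - x1))


def rotate_point(point, angle_deg, center):
    """Rotate (x, y) by angle_deg about center, in y-down image coordinates."""
    a = math.radians(angle_deg)
    dx, dy = point[0] - center[0], point[1] - center[1]
    return (center[0] + dx * math.cos(a) - dy * math.sin(a),
            center[1] + dx * math.sin(a) + dy * math.cos(a))


def deskew(image, angle_deg, center, fill=0):
    """Rotate the image by -angle_deg so a line tilted by angle_deg becomes level.

    Nearest-neighbor inverse mapping: each output pixel is taken from the
    source pixel it came from; pixels mapping outside the image get `fill`.
    """
    h, w = len(image), len(image[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = rotate_point((x, y), angle_deg, center)
            sx, sy = round(sx), round(sy)
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = image[sy][sx]
    return out


def crop_to_corners(image, corners):
    """Cut out the axis-aligned box spanned by corners that are already level."""
    xs = [round(x) for x, _ in corners]
    ys = [round(y) for _, y in corners]
    return [row[min(xs):max(xs) + 1] for row in image[min(ys):max(ys) + 1]]
```

Putting it together: compute `angle = tilt_angle(top_edge)` and `level = deskew(image, angle, center)`. Then move each corner into the level image with `rotate_point(c, -angle, center)` before passing them to `crop_to_corners`.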

The hardest part is behind us. Now we need to increase the contrast of the resulting image. To do this, we store an image of the blank LCD screen (taken before the token’s button is pressed) and simply “subtract” it from the image with the digits (taken after the button is pressed):

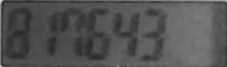

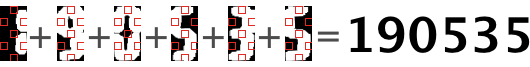
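The article puts “subtract” in quotes. One reasonable reading, assumed here, is the per-pixel absolute difference (`cv2.absdiff` in OpenCV). Pixels that look the same with and without digits, including glare and permanent smudges, cancel out. Only the segments that changed remain bright.

```python
def subtract_background(with_digits, blank):
    """Per-pixel absolute difference |with_digits - blank| of two grayscale images.

    Both images are same-sized lists of rows with 0-255 values, so the
    result also stays within 0-255 and needs no clamping.
    """
    return [[abs(d - b) for d, b in zip(digit_row, blank_row)]
            for digit_row, blank_row in zip(with_digits, blank)]


blank = [[200, 200], [190, 60]]   # empty LCD (60: a permanent dark smudge)
digits = [[200, 40], [190, 60]]   # same LCD with one segment turned on
print(subtract_background(digits, blank))  # [[0, 160], [0, 0]]
```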

Next, we turn this into a black-and-white image. Using another naive algorithm, we find the optimal threshold that splits all the pixels into “black” and “white”, convert the image to black and white, and cut out the individual characters:

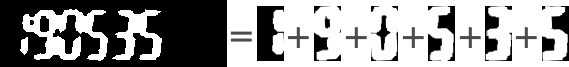

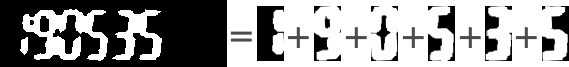
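For the threshold search, this sketch swaps in Otsu's method. Like a brute-force search, it tries every possible threshold, but it picks the one that maximizes the between-class variance. Whether this matches the author's own criterion is an assumption. Characters are then cut at fully blank columns, which assumes at least one empty column separates neighboring digits.

```python
def otsu_threshold(gray):
    """Return the threshold t that best separates pixels <= t from pixels > t."""
    hist = [0] * 256
    for row in gray:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    total_sum = sum(i * n for i, n in enumerate(hist))
    best_t, best_var = 0, -1.0
    count_lo = sum_lo = 0
    for t in range(256):
        count_lo += hist[t]
        sum_lo += t * hist[t]
        count_hi = total - count_lo
        if count_lo == 0 or count_hi == 0:
            continue  # one class is empty: not a real split
        mean_lo = sum_lo / count_lo
        mean_hi = (total_sum - sum_lo) / count_hi
        # Between-class variance, up to a constant factor.
        var = count_lo * count_hi * (mean_lo - mean_hi) ** 2
        if var > best_var:
            best_t, best_var = t, var
    return best_t


def binarize(gray, threshold):
    """Pixels above the threshold become white (255), the rest black (0)."""
    return [[255 if p > threshold else 0 for p in row] for row in gray]


def split_characters(binary):
    """Return (first_col, last_col) spans of ink separated by blank columns."""
    width = len(binary[0])
    inked = [any(row[x] for row in binary) for x in range(width)]
    spans, start = [], None
    for x, on in enumerate(inked):
        if on and start is None:
            start = x
        elif not on and start is not None:
            spans.append((start, x - 1))
            start = None
    if start is not None:
        spans.append((start, width - 1))
    return spans
```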

Then all that remains is to recognize the digits. There is no point in bothering with neural networks and the like, because we are dealing with a seven-segment display: there are seven “points” whose states uniquely determine each digit.
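Decoding a seven-segment digit amounts to sampling one point per segment and looking up the on/off pattern in a table. The sample positions, given as fractions of the character's width and height, are hypothetical. So is the rule that a very narrow glyph must be a “1”. Some displays also draw 6, 7, and 9 with an extra or missing segment, which would need extra table entries.

```python
# Segment order: a (top), b (top right), c (bottom right), d (bottom),
# e (bottom left), f (top left), g (middle).
SEGMENTS_TO_DIGIT = {
    (1, 1, 1, 1, 1, 1, 0): 0,
    (0, 1, 1, 0, 0, 0, 0): 1,
    (1, 1, 0, 1, 1, 0, 1): 2,
    (1, 1, 1, 1, 0, 0, 1): 3,
    (0, 1, 1, 0, 0, 1, 1): 4,
    (1, 0, 1, 1, 0, 1, 1): 5,
    (1, 0, 1, 1, 1, 1, 1): 6,
    (1, 1, 1, 0, 0, 0, 0): 7,
    (1, 1, 1, 1, 1, 1, 1): 8,
    (1, 1, 1, 1, 0, 1, 1): 9,
}

# Hypothetical (x, y) sample position for each segment, as fractions.
SAMPLE_POINTS = [(0.5, 0.1), (0.85, 0.3), (0.85, 0.7), (0.5, 0.9),
                 (0.15, 0.7), (0.15, 0.3), (0.5, 0.5)]


def read_digit(char_img):
    """Decode one binarized character image; None if the pattern is unknown."""
    h, w = len(char_img), len(char_img[0])
    if w * 3 < h:  # hypothetical rule: a very narrow glyph can only be a 1
        return 1
    pattern = tuple(1 if char_img[int(fy * (h - 1))][int(fx * (w - 1))] else 0
                    for fx, fy in SAMPLE_POINTS)
    return SEGMENTS_TO_DIGIT.get(pattern)
```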

Just in case, we recognize the digits across several frames: if we get the same result in three consecutive frames, we consider the recognition successful, show the result to the user with the growlnotify program, and copy the code to the clipboard.
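The three-frames-in-a-row rule is a small streak counter over per-frame results. In this sketch, `None` marks a frame where recognition failed and resets the streak; that convention is an assumption.

```python
def stable_reading(readings, required=3):
    """Return the first value seen `required` times in a row, else None.

    `readings` is any iterable of per-frame results (e.g. code strings);
    a None entry stands for a failed frame and breaks the streak.
    """
    last, streak = None, 0
    for value in readings:
        if value is None:
            last, streak = None, 0
            continue
        if value == last:
            streak += 1
        else:
            last, streak = value, 1
        if streak >= required:
            return value
    return None


frames = ["123456", "123458", "123456", "123456", "123456"]
print(stable_reading(frames))  # 123456
```

Once a stable code is found, the article shows it via growlnotify and copies it to the clipboard. On macOS, the copy could be done with `subprocess.run(["pbcopy"], input=code.encode(), check=True)`.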

Video of the device

Caution: sound!

Full source code


Source: https://habr.com/ru/post/143102/
