SysAdmin Anywhere: Using UDP Hole Punching to implement remote desktop

Introduction

System administrators are by nature lazy people. They do not like to do the same thing a hundred times. They want to automate everything so that it works with minimal intervention. And so do I.

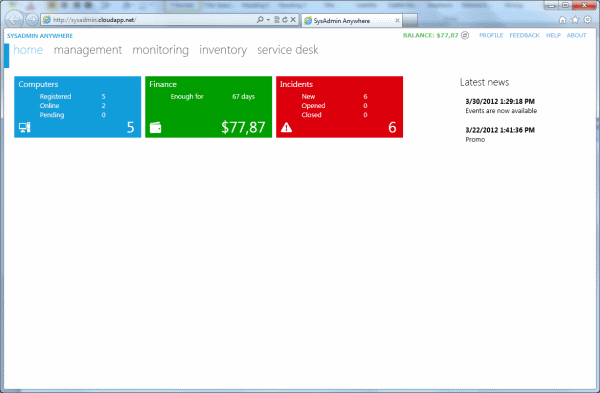

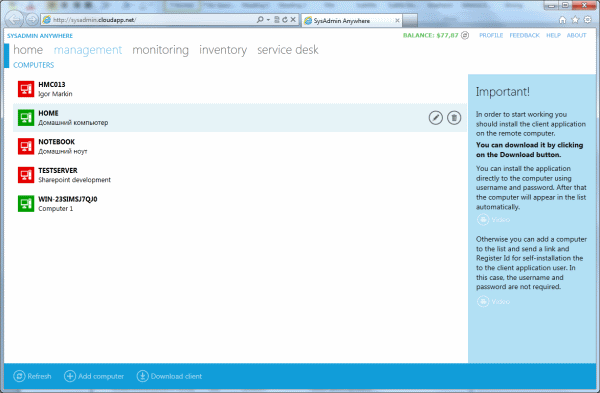

To make life easier for me and for people like me, a program was created that combines disparate administration tools into a single product with the simple name SysAdmin. It includes multi-domain network support, reports, inventory, and many other useful features that are otherwise scattered across different MMC snap-ins or available only in third-party products. Work became much more convenient. The program has already reached version 5.3 and switched to a Metro-style interface. Unfortunately, it cannot administer computers that are not joined to a domain.

However, I wanted more:

- Fully administer remote computers;

- Configure access without being physically present on the remote side and without a qualified employee there;

- Avoid opening ports on the router;

- Where I cannot be physically present, avoid opening access to the router from the Internet;

- Keep the technical solution simple when access is needed to more than one computer on a remote network:

  - no opening a separate port on the router for each computer;

  - no opening router access to a server and going from there to the computers (a server is not always needed, and introducing one only for remote access is not economically justified);

  - no opening a single port and reconfiguring the router every time another computer has to be reached;

- Avoid setting up a VPN.


From experience:

At work, I had to build a VPN network connecting regional offices located throughout the country. Powerful VPN equipment was installed in the central office, and simpler equipment from the same vendor was planned for the regional sites. I had to find out everything about each site's Internet connection, local network, installed equipment and so on, then prepare the VPN equipment and ship it so that the local staff only had to plug it in and everything would work. The catch was that I had to do all of this alone, business trips were not provided for, and the skill level of the users on site varied widely. Everything was implemented in the end, but it took a great deal of time spent on correspondence and phone calls.

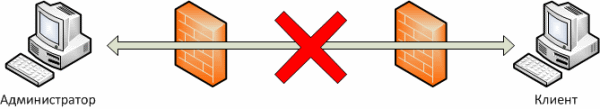

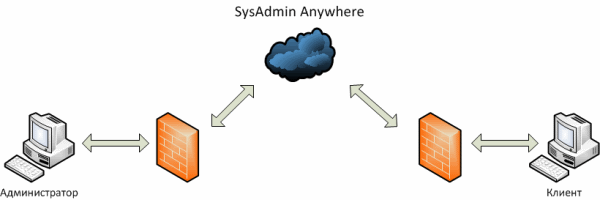

Because of the limitations listed above, an intermediary was needed. A client component is installed on the remote computer and creates a connection to the intermediary; firewalls do not block this connection because it is initiated by the remote machine itself. The administrator only needs to know the address of the intermediary and connect to it to gain access to remote computers. Such an intermediary gives us a number of advantages:

- No need to open ports on the router. Security increases, and the level of user qualification required decreases;

- The administrator accesses a control panel hosted in the cloud over a secure connection, using a browser that supports Microsoft Silverlight;

- Commands from the administrator are sent to the remote computer through the cloud service. The response travels back to the administrator the same way;

- The remote computer stays connected, so the user does not need to be present;

- Additional services (inventory, service desk, reports, license accounting, archiving, monitoring, etc.) store their data in the cloud, so it remains accessible even when the remote computer is not connected to the Internet;

- Remote Desktop (VNC);

- Access from a Windows Phone mobile device.

We did not have to look long for a platform for the intermediary. Microsoft Azure turned out to be well suited to the job. Moreover, it offered 30 days of access for experiments.

It so happened that by this time I had changed my line of business from system administration to programming, so I decided to create the service myself.

A colleague joined me, and together we began developing a prototype of the service. Our goal for the test period was to build a working model of the interaction between a remote client and the cloud service. Further development could then continue using the Azure SDK.

The hardest part was maintaining a permanent connection between a remote computer and the cloud service. After long and tedious experiments (deploying to the cloud takes a significant amount of time), we arrived at a working solution that gave us confidence the goal was achievable.

We were able to connect the remote computer to the cloud without configuring any network equipment. For us, this was a breakthrough.

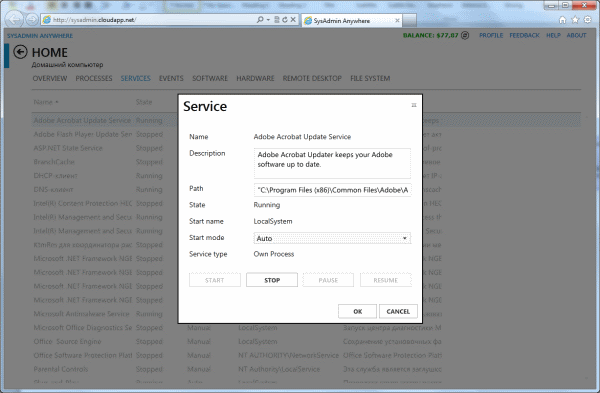

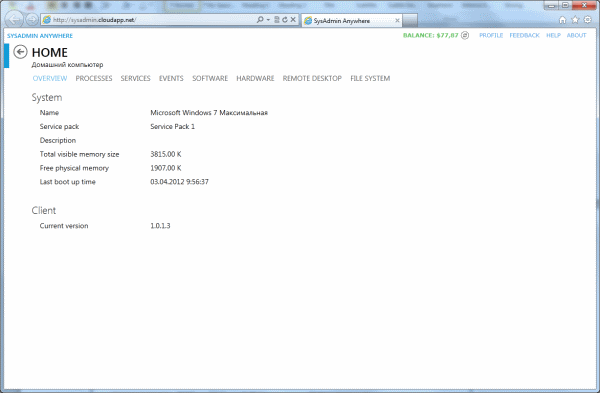

The next task was to pass a command from the administrator to the cloud, then on to the computer, and to get a response back. We decided that WMI would be a universal solution. Moreover, the cloud service should know nothing about WMI; its only job is to forward messages. This was designed so that the cloud service and the client software on remote computers would need updating as rarely as possible (the client is now updated to the latest version by clicking a button in the console).

Example:

The administrator sends a request for the list of installed services (Select * From Win32_Service) together with the set of fields whose values are needed. All of this is serialized to JSON, packaged, encrypted and transmitted to the cloud. In the cloud, the data is written to a queue and then picked up by the service instance to which the remote computer is connected.

The client receives the request and executes it. The result is serialized to JSON, packaged, encrypted and sent back to the administrator along the same path in reverse.

WMI use is not limited to queries; method calls are implemented as well. For example, any service can be stopped.
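To make the request format more concrete, here is a minimal sketch of such an envelope in C#. The `WmiRequest` record and the `WmiRequestCodec` class are hypothetical names chosen for illustration, not the service's actual contract, and `System.Text.Json` is assumed as the serializer; the packaging and encryption steps are left out.

```csharp
using System;
using System.Text.Json;

// Hypothetical envelope: the WQL text plus the fields the console wants back.
// The cloud service can forward it as opaque text without knowing anything about WMI.
public sealed record WmiRequest(string Query, string[] Fields);

public static class WmiRequestCodec
{
    // Administrator side: turn the request into JSON before packaging and encryption.
    public static string Serialize(WmiRequest request) =>
        JsonSerializer.Serialize(request);

    // Client side: restore the request after decryption and unpacking.
    public static WmiRequest Deserialize(string json) =>
        JsonSerializer.Deserialize<WmiRequest>(json)
        ?? throw new FormatException("The payload did not contain a WMI request.");
}
```

A call such as `WmiRequestCodec.Serialize(new WmiRequest("Select * From Win32_Service", new[] { "Name", "State" }))` would produce the text that travels through the queue; the client would then run the query and serialize the result the same way.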

It might seem that everything is simple, but a scalable solution cannot avoid running several instances of the service in the cloud. Working with them requires rethinking some development habits. You must always keep in mind that there is not one instance but several, and that they run in parallel. If something has to be done exclusively, the other instances must be notified. Take billing, for example: it would be extremely unpleasant if every instance went through the database and recalculated the client's balance, reducing it each time. This is only an example of what could go wrong; we are currently working on eliminating the problem.
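One common way to guard against such double charging is to make the operation idempotent: every instance may attempt the charge, but only the first attempt for a given client and period is recorded. The sketch below is an illustration of that idea and not the approach used in the service. `BillingLedger` is a hypothetical class, and the in-memory dictionary stands in for a shared store with a uniqueness constraint on the pair (client, period).

```csharp
using System;
using System.Collections.Concurrent;

public sealed class BillingLedger
{
    // Stand-in for a shared table with a unique key on (ClientId, Period).
    private readonly ConcurrentDictionary<(string ClientId, string Period), decimal> _charges = new();

    // Returns true only for the first caller that records the charge for this period;
    // every later attempt for the same key is rejected and changes nothing.
    public bool TryCharge(string clientId, string period, decimal amount) =>
        _charges.TryAdd((clientId, period), amount);

    // Sum of everything recorded for one client.
    public decimal TotalCharged(string clientId)
    {
        decimal total = 0m;
        foreach (var entry in _charges)
        {
            if (entry.Key.ClientId == clientId) total += entry.Value;
        }
        return total;
    }
}
```

If eight instances call `TryCharge("client-1", "2012-04", 10m)` in parallel, one of them gets `true` and the recorded total stays at 10.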

When creating the service, we assumed that in most cases access to the remote desktop is not necessary. Direct management of processes, services and so on is enough, and it is faster and easier to do through the web interface.

Nevertheless, remote desktop is very popular, and implementing it requires a direct connection between the computers. Relaying that much data through the cloud is not practical, since the screen update delay can reach several seconds.

Implementing UDP Hole Punching

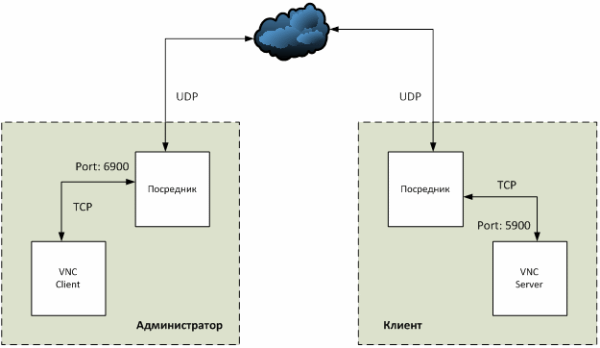

The most interesting task turned out to be implementing remote desktop access. To save time, we decided to take a ready-made VNC implementation. That brought its own problem: the VNC client and server could not be connected directly. The only solution was to create intermediaries whose job is to relay traffic. The VNC client and server thus "think" they are working locally, while in fact all traffic between them travels over the Internet.
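One direction of such a relay can be sketched as a loop that reads whatever the local VNC endpoint writes and hands each chunk to the network transport. This is only an illustration under assumptions: `RelaySketch`, the `sendToPeer` delegate and the 1024-byte chunk size are hypothetical, and the real intermediaries are not shown in the article.

```csharp
using System;
using System.IO;
using System.Threading;
using System.Threading.Tasks;

public static class RelaySketch
{
    // Reads from the local stream (for example, a loopback TCP connection to the VNC
    // client or server) and passes every chunk to 'sendToPeer', which stands in for
    // the transport to the intermediary on the other side.
    public static async Task PumpAsync(
        Stream local, Func<byte[], Task> sendToPeer, CancellationToken ct = default)
    {
        byte[] buffer = new byte[1024];
        int read;
        while ((read = await local.ReadAsync(buffer, 0, buffer.Length, ct)) > 0)
        {
            // Copy the chunk because the buffer is reused on the next iteration.
            byte[] chunk = new byte[read];
            Buffer.BlockCopy(buffer, 0, chunk, 0, read);
            await sendToPeer(chunk);
        }
    }
}
```

A second loop of the same shape running in the opposite direction on each side would complete the relay.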

NAT Traversal

The question remains: how do the intermediaries exchange data? The first option that comes to mind, pushing everything through the cloud, is expensive and inefficient. It is much better to connect the two sides directly. This is where we run into the standard NAT traversal problem.

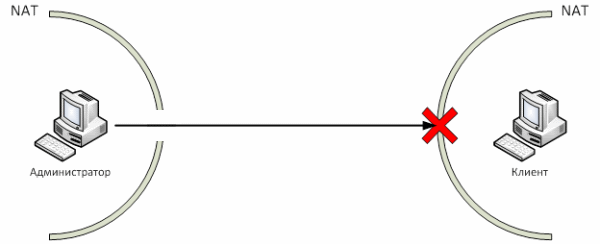

Network Address Translation (NAT) translates local addresses that are not reachable from the Internet into external ones, and it prevents clients from establishing a direct connection with each other. There are several standard ways around NAT: opening a port or setting up a VPN.

Unfortunately, neither suits us, because each complicates the installation of the necessary components (and we want even the least experienced users to be able to install the client on a workstation without an administrator's help). Therefore, we use the UDP hole punching mechanism.

UDP Hole Punching

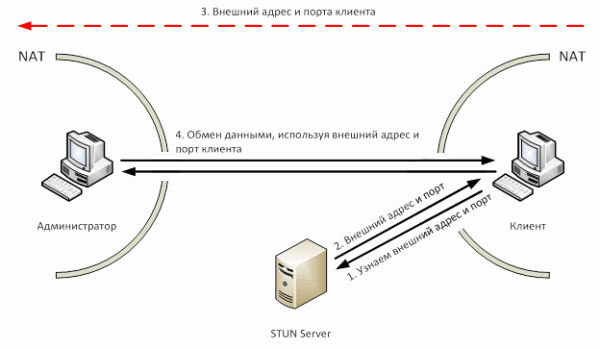

UDP hole punching is a method for directly connecting two computers that are behind NATs. To initiate a connection, a third party is required: a server that is visible to both computers. Public STUN servers are commonly used for this.

So, our task is to directly connect the client on the remote machine with the console. This takes a few steps:

- Find out the external IP address and port of the remote machine. For this we use STUN, a network protocol that lets a host behind NAT determine its external IP address and port.

- Send this information to our application.

- Establish a connection and use it to exchange data.
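The last step can be sketched as follows. Once each side knows the other's external endpoint, both repeatedly send small datagrams to it from the same socket that was used for the STUN query, and the path is considered open as soon as a datagram arrives from the peer. `HolePunch.TryPunch`, the payload, the number of attempts and the timeout are all hypothetical choices for this illustration; the sketch also assumes that both sides start at roughly the same time and that neither NAT assigns a different external port per destination.

```csharp
using System;
using System.Net;
using System.Net.Sockets;
using System.Text;

public static class HolePunch
{
    // Sends "punch" datagrams to the peer's public endpoint and waits for a datagram
    // coming back from that endpoint. Returns true once the peer has been heard.
    public static bool TryPunch(UdpClient socket, IPEndPoint peer,
                                int attempts = 10, int timeoutMs = 500)
    {
        byte[] punch = Encoding.ASCII.GetBytes("punch");
        socket.Client.ReceiveTimeout = timeoutMs;

        for (int i = 0; i < attempts; i++)
        {
            // The outgoing datagram creates (or refreshes) the mapping in our own NAT.
            socket.Send(punch, punch.Length, peer);
            try
            {
                IPEndPoint from = new IPEndPoint(IPAddress.Any, 0);
                socket.Receive(ref from);
                if (from.Equals(peer)) return true;
            }
            catch (SocketException ex) when (
                ex.SocketErrorCode == SocketError.TimedOut ||
                ex.SocketErrorCode == SocketError.ConnectionReset)
            {
                // Nothing from the peer yet; send another datagram and wait again.
            }
        }
        return false;
    }
}
```

After `TryPunch` returns `true` on both sides, the same `UdpClient` instances can be used to exchange application data.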

In theory, everything is simple. What does it look like in practice?

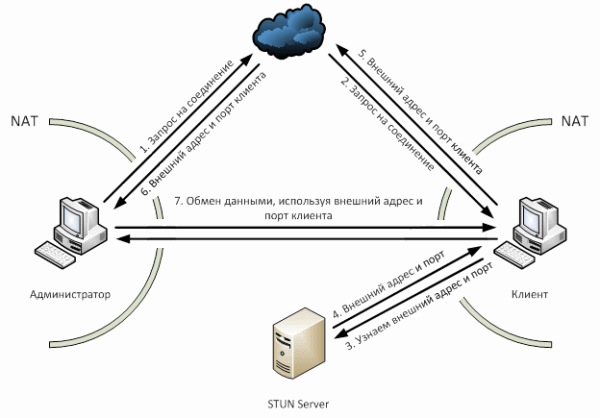

As the diagram above shows, it is a little more complicated. First, our application sends a connection request, which is passed through the cloud to the application on the client machine (steps 1 and 2). The client machine then requests its external address and port from the STUN server (steps 3 and 4). Many implementations of the STUN protocol can be found online.

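```csharp
string address = String.Empty;

// udpClient is declared elsewhere (not shown) so that the same socket can be reused later.
udpClient = new UdpClient();
udpClient.AllowNatTraversal(true);

// Ask a public STUN server for our external address and port.
ResultSTUN result = ClientSTUN.Query("stun.ekiga.net", 3478, udpClient.Client);
if (result.NetType == UDP_BLOCKED)
{
    /* UDP is blocked; error handling is omitted */
}
else
{
    address = result.PublicEndPoint.ToString();
}
```

Because our solution uses UDP, we can use the same socket to connect to the client (this is what distinguishes it from TCP).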

After these simple manipulations, address contains the external address and port of the client machine (or an error message). This valuable information is passed to the management console through the cloud (steps 5 and 6). Once the address and port are known, creating the connection is straightforward. Now we can transfer all the information we need.
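For readers curious about what a STUN query involves on the wire, here is a minimal sketch of the message format defined in RFC 5389. It is not the internals of the STUN library used above: `StunSketch` and its methods are hypothetical names, only IPv4 is handled, and older servers that follow RFC 3489 may answer with a plain MAPPED-ADDRESS attribute, which this sketch does not parse.

```csharp
using System;
using System.Net;
using System.Security.Cryptography;

public static class StunSketch
{
    // Builds a 20-byte Binding Request: type 0x0001, length 0,
    // magic cookie 0x2112A442 and a random 12-byte transaction ID.
    public static byte[] BuildBindingRequest()
    {
        byte[] msg = new byte[20];
        msg[1] = 0x01;                                   // message type 0x0001
        msg[4] = 0x21; msg[5] = 0x12; msg[6] = 0xA4; msg[7] = 0x42;
        byte[] id = new byte[12];
        RandomNumberGenerator.Fill(id);
        Buffer.BlockCopy(id, 0, msg, 8, 12);
        return msg;
    }

    // Walks the attributes of a response and decodes XOR-MAPPED-ADDRESS (0x0020).
    // Returns null when the attribute is missing or is not IPv4.
    public static IPEndPoint? ParseXorMappedAddress(byte[] response)
    {
        if (response.Length < 20) return null;
        int length = (response[2] << 8) | response[3];
        int end = Math.Min(response.Length, 20 + length);
        int pos = 20;
        while (pos + 4 <= end)
        {
            int type = (response[pos] << 8) | response[pos + 1];
            int len = (response[pos + 2] << 8) | response[pos + 3];
            if (type == 0x0020 && len >= 8 && pos + 12 <= end && response[pos + 5] == 0x01)
            {
                // Port and address are XOR-ed with the magic cookie.
                int port = ((response[pos + 6] << 8) | response[pos + 7]) ^ 0x2112;
                byte[] addr =
                {
                    (byte)(response[pos + 8] ^ 0x21), (byte)(response[pos + 9] ^ 0x12),
                    (byte)(response[pos + 10] ^ 0xA4), (byte)(response[pos + 11] ^ 0x42)
                };
                return new IPEndPoint(new IPAddress(addr), port);
            }
            pos += 4 + ((len + 3) & ~3);                 // values are padded to 4 bytes
        }
        return null;
    }
}
```

The request would be sent from the UDP socket that is later used for the direct connection, so that the endpoint reported by the server matches the NAT mapping of that socket.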

With UDP, the NAT traversal problem is solved simply and elegantly, but what if we want all the advantages of TCP: guaranteed delivery, data integrity, no duplication and so on? The first thought that comes to mind is to write our own protocol on top of UDP. Naturally, we were not the first to face this problem, and a short search turns up a ready-made solution, Lidgren.Network, which is what our application ultimately uses.

Source: https://habr.com/ru/post/142858/
