Open-Source Photorealism on the GPU: Cycles Render

As GPGPU technology has developed, many GPU renderers have appeared on the market, among them iRay, V-Ray RT, Octane and Arion. The open-source community has not been asleep either: I have come across at least two free GPU renderers, SmallLuxGPU and Cycles Render. I want to share my impressions of the latter.

Cycles Render is an unbiased renderer that can render on the GPU (CUDA, and OpenCL for ATI cards). It ships in the box with Blender, which runs on Windows, Linux and OS X.

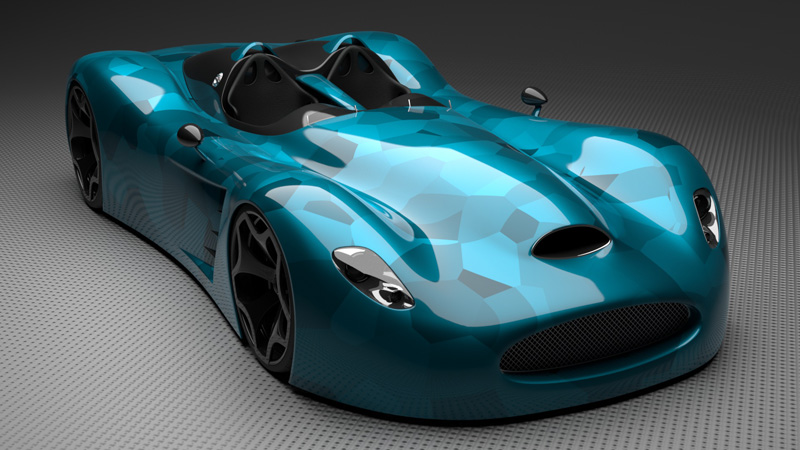

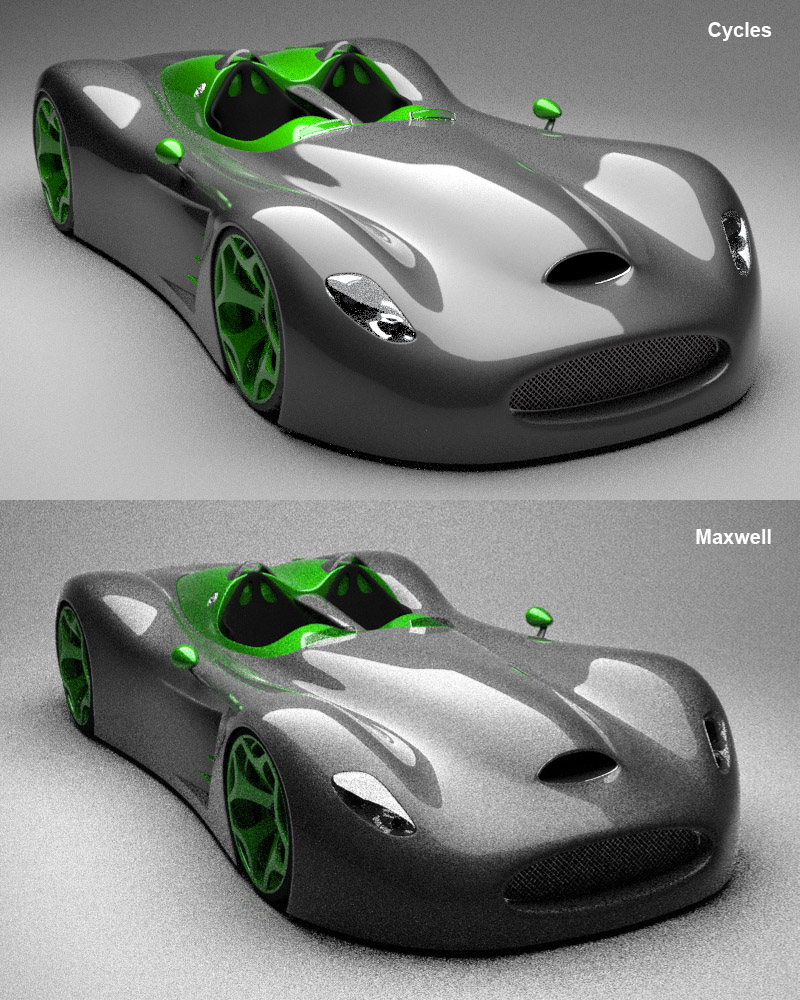

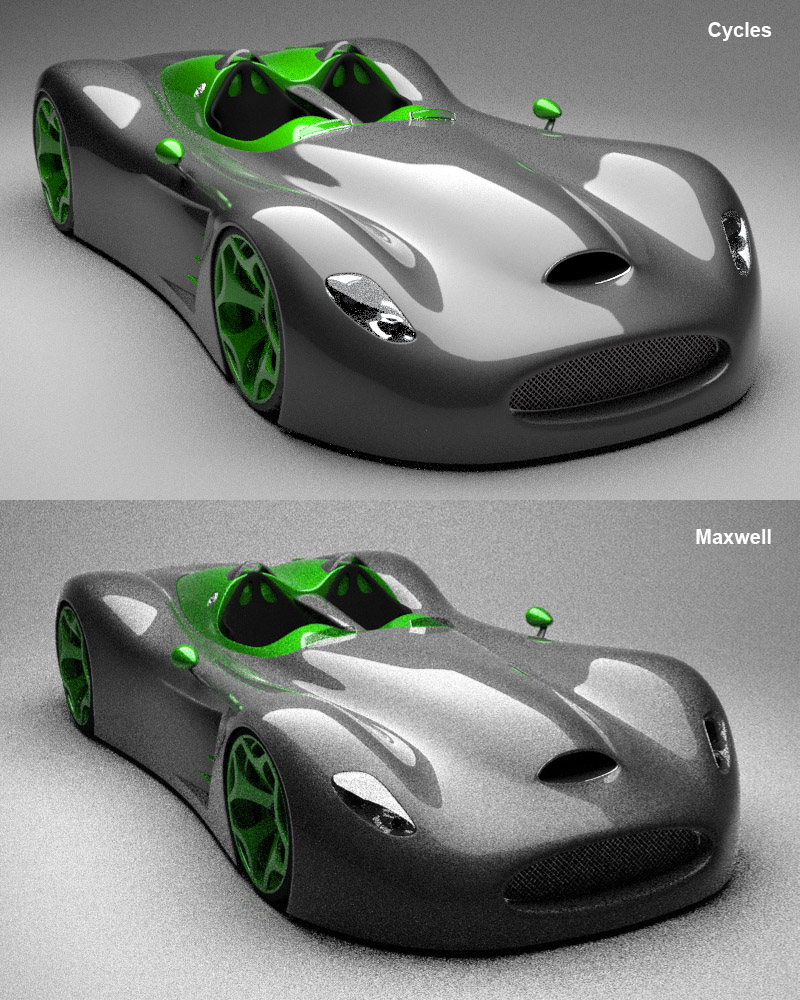

Cycles Render: a car with a procedural texture. The Full HD image took 2 minutes to render on a GTX 580.

Blender used to be of little interest to me, despite the advantages I knew about: openness, a lightweight installer, speed of work. For a conservative user it is extremely hard to move from 3ds Max to Blender: the controls are different and “everything is wrong!”. But being keen on unbiased renderers, especially GPU ones, I decided to try Cycles and learn Blender along the way (version 2.63 at the time of writing).

A short video about interactivity and how it all works:

Rendering with Cycles can run directly in the active viewport (this is not an innovation, just a convenience), or you can watch changes to the scene in real time through the camera.

CPU vs GPU

x86-64 processor cores have a very cumbersome instruction set that requires a large die area. Because of this it is hard to fit many cores on a CPU, but in single-threaded x86 applications the CPU shows its best side.

Rendering, however, is parallel to an extreme degree. What matters here is fast floating-point arithmetic, and working with large amounts of data requires good memory bandwidth. A GPU suits these purposes much better.

But the GPU, as a platform originally tailored to hardware rasterization (OpenGL, DirectX), is rather hard to adapt to GPGPU tasks. Many problems that are easy to solve on a CPU require considerable contortions on a GPU through the CUDA and OpenCL frameworks. Developers often give up on GPU programming because of the complexity of implementing algorithms and the weak optimization of the frameworks (OpenCL, for example).

Mathematical workloads (rendering, physics simulation) call for a new processor architecture with a small instruction set, a large number of cores and hardware support for fast floating-point additions and multiplications. The alternative is to wait until GPU hardware and software are better adapted to the needs of non-graphics computing.

But since no such architecture exists and nobody wants to wait until everything “becomes cool”, developers around the world are already mastering the GPU. And GPU rendering does increase rendering speed several times over.

There is a small benchmark where you can try your hardware.

My render times (Core i5 2500 vs GTX 580):

Windows 7 64-bit: CPU 5:39.64, CUDA 0:42.54, 8.07 times faster.

Ubuntu 12.04 64-bit: CPU 3:48.77, CUDA 0:39.03, 5.84 times faster.
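The speedup factors can be rechecked from the times listed above. Here is a minimal Python sketch, assuming the times are in Blender's minutes:seconds.hundredths format; the helper names `to_seconds` and `speedup` are made up for illustration.

```python
def to_seconds(stamp: str) -> float:
    """Convert a 'minutes:seconds.hundredths' render time to seconds."""
    minutes, seconds = stamp.split(":")
    return int(minutes) * 60 + float(seconds)


def speedup(cpu_time: str, gpu_time: str) -> float:
    """How many times faster the GPU render finished than the CPU render."""
    return to_seconds(cpu_time) / to_seconds(gpu_time)


print(round(speedup("5:39.64", "0:42.54"), 2))  # Windows 7
print(round(speedup("3:48.77", "0:39.03"), 2))  # Ubuntu 12.04
```

Recomputed this way, the ratios come out at roughly 8 and 5.9, close to the figures quoted above.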

It would be interesting to see rendering speeds on the latest top-end Radeons.

Interestingly, Unix systems outperform Windows in CPU rendering speed. So that you do not think my Windows install is simply in poor shape, I dug up some evidence: one (4th message) and two (in English). What the reason is, I do not know and do not want to guess.

UPD: now I know, thanks to Lockal for the comment.

The GPU's lead also depends on the hardware and on the complexity of the procedural textures. With complex procedural textures the GPU's margin shrinks slightly. Speaking of which.

Procedural textures

To create the material you want, you need to be able to build shaders with the node graph. I will try to explain how it works with an example:

Where (it seemed to me that going backwards would be clearer):

1. Output. Material Output is required to apply the function to the surface.

2. A shader that mixes the paint component (4) and the gloss (5) according to parameter (3).

3. The reflection coefficient of a glossy surface (it depends on the angle of incidence: light striking the surface perpendicularly is reflected less than light striking it at a grazing angle).

4. A shader that mixes shaders 6 and 7 in equal proportions (Fac = 0.5).

5. Specular reflection (the lacquered surface).

6, 7. The diffuse and glossy (roughness 0.35) components of the paint.

8. Color converter. Its Hue input receives the Fac output of the texture (9), from 0 to 1. At the output, the color is shifted relative to red.

9. A generator of randomly colored cells (r, g, b), where Fac is the intensity (from 0 to 1).
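The data flow through these nodes can be sketched in plain Python. This is only an illustration of how the graph composes, not how Cycles evaluates shaders: shader outputs are reduced to RGB triples, Schlick's approximation is assumed in place of the reflection coefficient node (3), the glossy paint layer is assumed to be tinted like the diffuse one, and all function names and lighting inputs are hypothetical.

```python
import colorsys


def mix(a, b, fac):
    """Mix stand-in: linear blend of two RGB triples (fac=0 gives a, fac=1 gives b)."""
    return tuple((1.0 - fac) * x + fac * y for x, y in zip(a, b))


def schlick_fresnel(cos_theta, ior=1.45):
    """Schlick's approximation, assumed here for node 3 (ior value is arbitrary)."""
    r0 = ((ior - 1.0) / (ior + 1.0)) ** 2
    return r0 + (1.0 - r0) * (1.0 - cos_theta) ** 5


def hue_from_fac(fac):
    """Node 8 stand-in: rotate pure red around the hue wheel by fac in [0, 1]."""
    return colorsys.hsv_to_rgb(fac % 1.0, 1.0, 1.0)


def car_paint(cell_fac, cos_theta, diffuse_light, glossy_light, coat_light):
    """Evaluate the graph back to front, following the numbering in the list."""
    base = hue_from_fac(cell_fac)                         # nodes 9 -> 8
    diffuse = tuple(c * diffuse_light for c in base)      # node 6
    glossy = tuple(c * glossy_light for c in base)        # node 7
    paint = mix(diffuse, glossy, 0.5)                     # node 4, Fac = 0.5
    coat = (coat_light, coat_light, coat_light)           # node 5, untinted lacquer
    return mix(paint, coat, schlick_fresnel(cos_theta))   # nodes 3 -> 2 -> 1


# Head-on view: mostly paint. Grazing view: almost pure lacquer reflection.
print(car_paint(0.3, 1.0, 0.8, 0.4, 1.0))
print(car_paint(0.3, 0.0, 0.8, 0.4, 1.0))
```

The point of the sketch is the ordering: the angle-dependent coefficient decides how much of the lacquer layer replaces the paint layer, which is why the car looks more mirror-like toward its silhouette.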

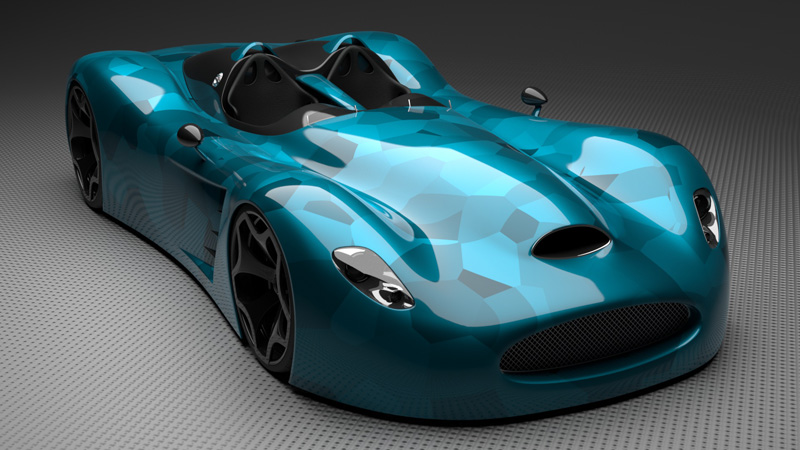

Having mastered the principle, you can play around a little:

You can combine any textures and surface types. A Full HD version is available.

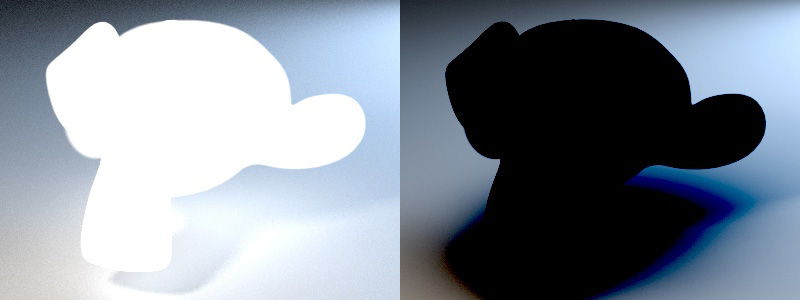

You can create light sources with negative luminosity.

Light, anti-light.

Not only surfaces can be procedural, but also the environment: sky, clouds and so on. Nodes can also be used to set up post-processing of the image.

Puzzling points

At first this point puzzled me, but then I understood what was going on. As I understand it, this is a trade-off between performance and convenience, and it applies to all unbiased GPU renderers (Arion Render and all unbiased CPU renderers do not have this quirk).

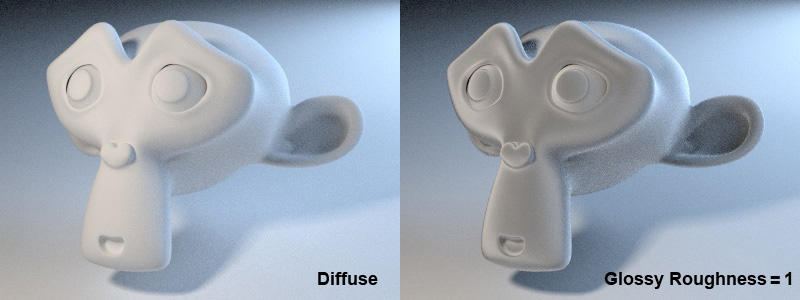

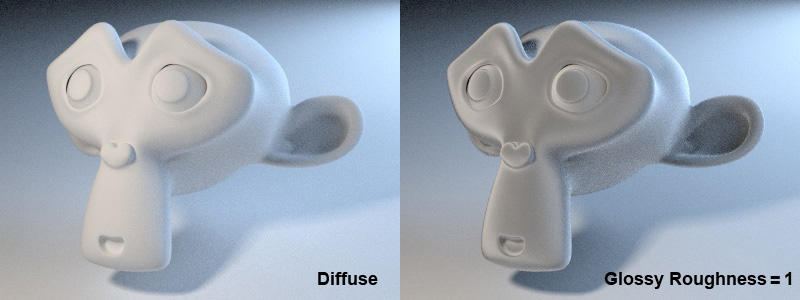

They have a glossy material for mirror and glossy reflections, and a diffuse one for scattered reflections.

The point is this. If there is no scattering, the random deviation at the point where the ray hits is 0 and the ray is reflected as in a mirror. If it is 1 (the maximum), the ray can be reflected in any direction within the reflection hemisphere. That is, if we take a mirror and give it maximum roughness, we get white paper. At least, that is what I got used to with Maxwell.
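The single-parameter model described here can be written down as a toy Python sketch. It is not the reflection model of Cycles or Maxwell; it simply blends the mirror direction with a uniformly random hemisphere direction, with the surface normal assumed to be +Z and all function names invented for the example.

```python
import math
import random


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def reflect(d, n):
    """Mirror the incoming direction d about the unit normal n."""
    k = 2.0 * sum(a * b for a, b in zip(d, n))
    return tuple(a - k * b for a, b in zip(d, n))


def random_hemisphere(rng):
    """Uniformly distributed unit direction in the hemisphere around +Z."""
    z = rng.random()
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)


def rough_reflect(incoming, roughness, rng):
    """roughness=0 gives a mirror, roughness=1 gives any hemisphere direction."""
    mirror = reflect(incoming, (0.0, 0.0, 1.0))
    scattered = random_hemisphere(rng)
    blended = tuple((1.0 - roughness) * m + roughness * s
                    for m, s in zip(mirror, scattered))
    return normalize(blended)


rng = random.Random(1)
incoming = normalize((1.0, 0.0, -1.0))     # ray arriving at 45 degrees
print(rough_reflect(incoming, 0.0, rng))   # exact mirror direction
print(rough_reflect(incoming, 1.0, rng))   # anywhere in the upper hemisphere
```

With intermediate values the reflected rays cluster around the mirror direction, which is the blurry-reflection look of a rough glossy surface.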

In Cycles, a rough Glossy surface comes out looking somewhat off and can hardly be called plausibly diffuse, whereas the Diffuse shader is exactly right for that.

The same goes for the Translucent shader. “Translucent” translates as a semi-transparent medium, but in the renderer it means diffuse refraction. In other words, Translucent is frosted glass (a Glass shader with matte roughness).

Judging by these pictures, Translucent looks fine.

Clearly, when the Glossy or Glass roughness is close to 1 (visually, above 0.7), it is better to use Diffuse and Translucent instead.

Detailed information on shader properties is here.

These issues are not critical for getting a realistic picture, but I would still like to see a more general and believable reflection model added for those who are used to one.

For example: set the surface roughness with a single parameter, as in Maxwell, Fry, Indigo and Lux, and control the features of the reflection distribution with additional sliders and checkboxes. And for the most hardcore users, control the distribution of reflections with Bezier curves, even at the expense of performance.

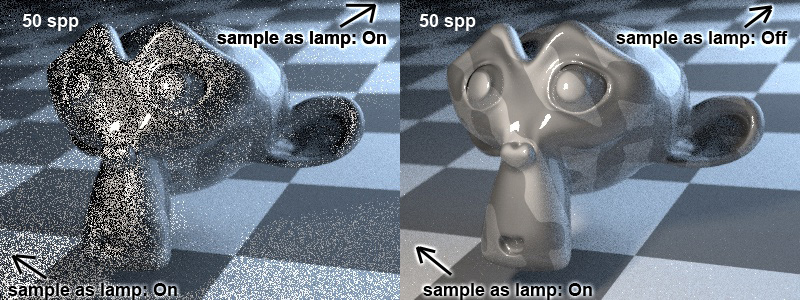

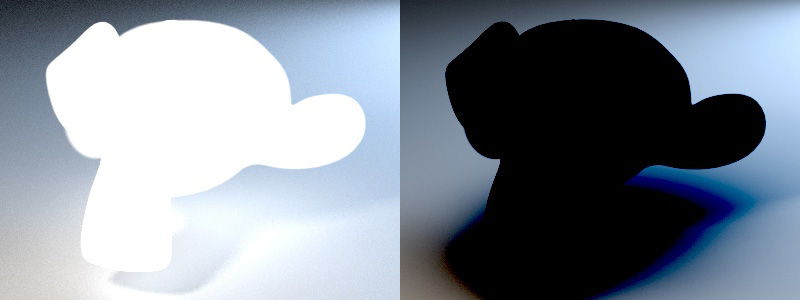

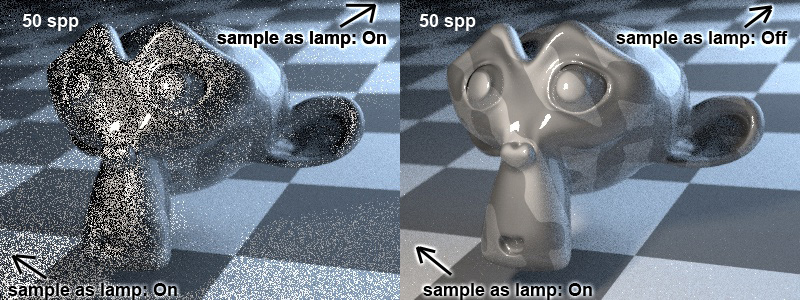

Cycles also still suffers from the following quirk. If the scene has several light sources (say, 2), the probability that a ray fired from the camera reaches the large light source is greater than for the small one, regardless of the lights' intensities. When soft and hard light are combined in a scene, the result can look like this (left), and it takes a long time for the noise to clear.

The picture on the left shows that it is the front light that is “noisy”, while the rear one is doing fine.
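The size dependence is easy to quantify for a simplified case. The sketch below assumes a disk-shaped light centred directly above the shaded point and facing it, and a bounce direction chosen uniformly over the hemisphere; the function name and the radii are illustrative, not taken from the scene in the pictures.

```python
import math


def hit_probability(radius: float, distance: float) -> float:
    """Chance that a uniformly random hemisphere ray hits a disk light.

    The disk subtends a solid angle of 2*pi*(1 - d / sqrt(d^2 + r^2)),
    and the whole hemisphere is 2*pi, so the ratio is 1 - d / sqrt(d^2 + r^2).
    """
    return 1.0 - distance / math.hypot(distance, radius)


large = hit_probability(radius=1.0, distance=2.0)    # soft light
small = hit_probability(radius=0.05, distance=2.0)   # hard light

print(f"large light: {large:.4f}, small light: {small:.6f}")
print(f"random rays find the large light about {large / small:.0f} times more often")
```

Intensity does not appear anywhere in the formula: a tiny but very bright lamp is found by only a handful of random rays, and each of those rare hits contributes a large value, which shows up as noise.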

The first thing that comes to mind is to combine two renders in post-processing.

However, to spare people the trouble, Cycles has an option called “Sample as lamp”, which is on by default. If you uncheck it, some of the rays fired from the camera bounce off objects in a random direction rather than toward the light source (pure path tracing). In this case the small light source gains, and the large one loses a little. I think this is a temporary solution, and sooner or later the program will be improved and will handle this problem itself.

In general, the hardest task in ray tracers is distributing the computational load correctly over the image: which light source deserves more attention and which less, which pixel needs many samples and which does not, which material layer should be sampled more and which barely affects the final image, in which direction it is better to reflect the ray, and so on. So far this is still a weak spot.

Oranges vs tomatoes

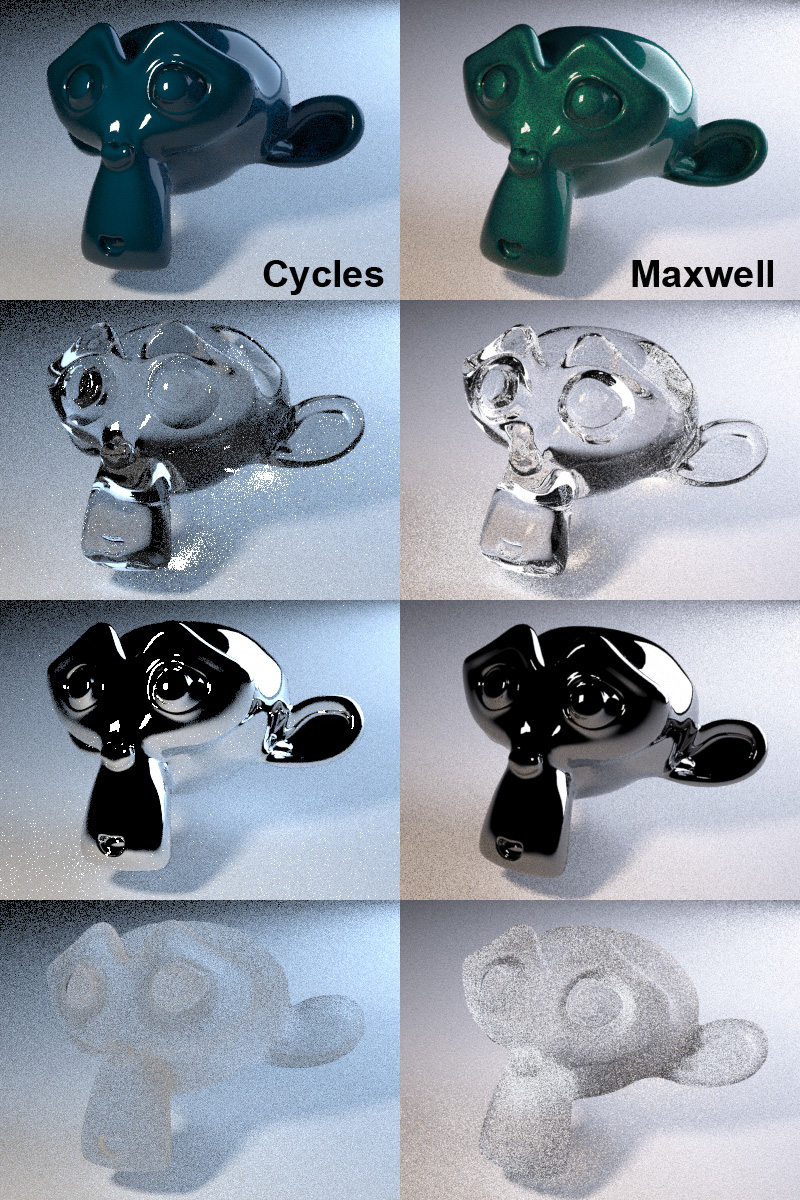

Some may object to comparing Cycles with Maxwell. But new open-source renderers need to grow and catch up with their older peers.

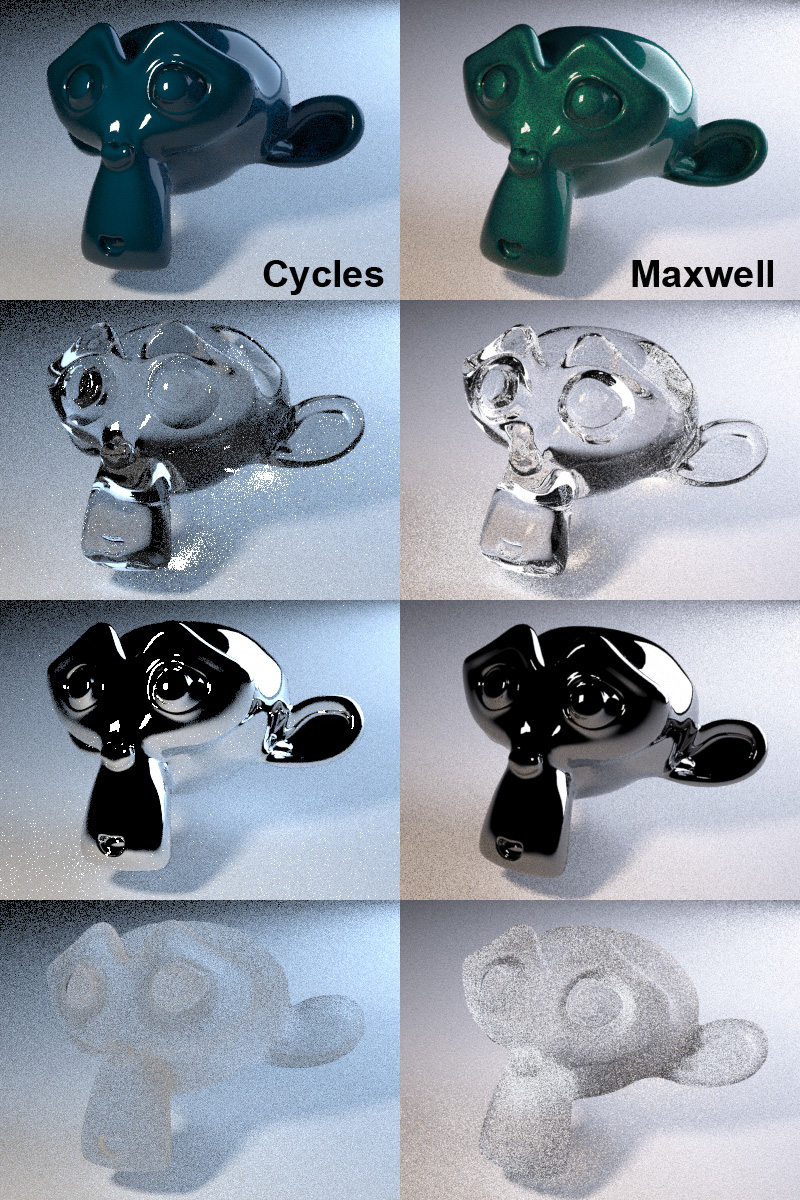

So, the resolution is 400x300 and the render time is 10 seconds:

Maxwell looks much livelier, in any case.

In Maxwell no surface parameters like Sample as Lamp were configured; its load distribution algorithm takes care of that.

The strong noise from caustics in Cycles (caustics can be turned off if desired) is due to the lack of Metropolis sampling (an algorithm for optimizing ray paths, which Maxwell Render has).

It should be noted that with environment lighting or a single large light source, the image in Cycles is noticeably cleaner than in Maxwell.

Rendered in 5 seconds.

And something a little more serious (Core i5, 1 minute).

BVH

Bounding Volume Hierarchy: a hierarchy of bounding volumes (thanks to don for the enlightenment on this point).

To be honest, different renderers give this “pre-render preparation” different names: compiling mesh in Octane, voxelization in Maxwell. Like many who work with Maxwell, I am used to calling it voxelization, but the above-mentioned comrade says it is not the same thing. Please forgive this inaccuracy.

This structure exists so that each ray does not have to be tested for intersection against every triangle in the scene. What if there are millions of them? Without it, all of them would have to be tested, and we would hardly see more than a couple of samples per second. And with each new triangle the task would get harder and harder.
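To make the idea concrete, here is a minimal BVH sketch in Python. It is an illustration only, not the Cycles implementation: primitives are axis-aligned boxes standing in for triangles, the tree is built with a simple median split along the longest axis, and all names are made up for the example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


@dataclass
class Box:
    lo: Vec
    hi: Vec


@dataclass
class Node:
    box: Box
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    prims: Optional[List[int]] = None  # set only on leaves


def merge(a: Box, b: Box) -> Box:
    """Smallest box enclosing both a and b."""
    return Box(tuple(map(min, a.lo, b.lo)), tuple(map(max, a.hi, b.hi)))


def ray_hits_box(origin: Vec, direction: Vec, box: Box) -> bool:
    """Slab test: intersect the ray with each pair of axis-aligned planes."""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box.lo, box.hi):
        if abs(d) < 1e-12:              # ray parallel to this slab
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def build(boxes: List[Box], indices: List[int], leaf_size: int = 2) -> Node:
    """Median split along the longest axis of the enclosing box."""
    bound = boxes[indices[0]]
    for i in indices[1:]:
        bound = merge(bound, boxes[i])
    if len(indices) <= leaf_size:
        return Node(bound, prims=list(indices))
    axis = max(range(3), key=lambda a: bound.hi[a] - bound.lo[a])
    ordered = sorted(indices, key=lambda i: boxes[i].lo[axis] + boxes[i].hi[axis])
    mid = len(ordered) // 2
    return Node(bound,
                build(boxes, ordered[:mid], leaf_size),
                build(boxes, ordered[mid:], leaf_size))


def traverse(node: Node, boxes: List[Box], origin: Vec, direction: Vec,
             stats: dict) -> List[int]:
    """Return indices of primitives hit, counting every box test performed."""
    stats["tests"] += 1
    if not ray_hits_box(origin, direction, node.box):
        return []                       # whole subtree skipped
    if node.prims is not None:
        hits = []
        for i in node.prims:
            stats["tests"] += 1
            if ray_hits_box(origin, direction, boxes[i]):
                hits.append(i)
        return hits
    return (traverse(node.left, boxes, origin, direction, stats)
            + traverse(node.right, boxes, origin, direction, stats))


# 1024 unit boxes in a row along X; the ray goes straight through box number 5.
boxes = [Box((2.0 * i, 0.0, 0.0), (2.0 * i + 1.0, 1.0, 1.0)) for i in range(1024)]
root = build(boxes, list(range(len(boxes))))
stats = {"tests": 0}
hits = traverse(root, boxes, (10.5, 0.5, -1.0), (0.0, 0.0, 1.0), stats)
print(hits, stats["tests"], "box tests instead of", len(boxes))
```

In this toy scene the ray needs roughly twenty box tests rather than 1024, because every miss on an inner node discards half of the remaining primitives at once. The price is the build step, which has to be redone whenever the geometry changes.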

The downside is that Cycles always builds the BVH on the CPU. Maybe voxelization on the GPU will appear someday, but for now it does not exist, which imposes limitations. For example, say you have 10 million triangles in a scene and 8 top-end video cards. They will render the picture in seconds, while voxelization can take more than a minute even on a fast Core i7. If you use only the Core i7, voxelization takes about a minute and rendering 20-30 minutes. In that case the voxelization time is not critical.
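To put rough numbers on this, here is a short sketch of how much of the total time goes into the CPU-only BVH build. The 60-second build and the 25-minute CPU render come from the example above (25 being the middle of the 20-30 range); the 10-second multi-GPU render is an assumed value, since the text only says “seconds”.

```python
def build_share(build_s: float, render_s: float) -> float:
    """Fraction of total wall-clock time spent building the BVH."""
    return build_s / (build_s + render_s)


cpu_only = build_share(60, 25 * 60)   # Core i7 both builds and renders
multi_gpu = build_share(60, 10)       # assumed: 8 GPUs render in about 10 s

print(f"CPU only:  {cpu_only:.0%} of the time is BVH build")
print(f"Multi-GPU: {multi_gpu:.0%} of the time is BVH build")
```

Under these assumptions the build is about 4% of the CPU-only job but about 86% of the multi-GPU one, so adding more video cards stops helping once the serial build dominates.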

Voxelization of the car rendered above (400k triangles) takes 14 seconds.

In interactive visualization (preview), voxelization is performed only before rendering starts and when object geometry changes (vertex positions, applying modifiers). It also happens when you press Ctrl+Z (even if nothing of the kind was changed; this is probably just not finished yet). Navigating, scaling, moving and rotating objects does not require rebuilding the BVH.

In a final render (started by pressing F12), voxelization is always performed. For animation, you can avoid constantly rebuilding the BVH of static objects by ticking the Cache BVH checkbox.

Let's hope this issue is soon resolved in favor of faster voxelization; maybe the task can be moved to the GPU.

OpenCL

OpenCL on my Nvidia card was a disappointment: it is half as fast as CUDA. Under Ubuntu, Blender with OpenCL simply crashes. Under Windows 7 it does render with OpenCL, but the render looks wrong: if a material consists of several layers, only one of them is shown, for example the glossy or the matte component. And the bugs in the viewport are beyond description.

On Radeons there seem to be no such bugs; perhaps the comments will confirm this.

Interface lag

While it is easy to surf the web during CPU rendering, with the GPU at full load the computer is comfortable only for reading, Habr for example. Even then, it is best to keep page scrolling to a minimum so as not to be annoyed by the lag.

Maybe there are ways to change the priority of tasks on the GPU, but I don't know of any.

If you are intrigued

You can try it right now: download Blender and run Cycles at home. To select the GPU, go to File -> User Preferences, open the System tab at the top, and at the bottom left choose the compute platform (CPU is the default).

Subjective opinion

Today, Cycles is already good enough for visualization.

I think it would be nice to use it for product rendering: on the basis of Cycles you could build your own Bunkspeed Shot, HyperShot, KeyShot or Autodesk Showcase, so that a person not initiated into the mysteries of 3D editors could load a model and admire it from all sides in a beautiful render.

The developers' enthusiasm is a joy to see, as is the activity of the open-source community in general.

I look forward to further development of the project.

Source: https://habr.com/ru/post/142213/