Unbiased rendering (rendering without assumptions)

In computer graphics, unbiased rendering (rendering without assumptions) is a rendering technique that introduces no systematic error or simplifying assumptions into the calculation: apart from random noise, the result converges to the physically correct image. The image is computed the way light behaves in nature, and the renderer has no quality settings for surfaces or light sources.

Image rendered using Maxwell Render.

Let's start with the bad

When unbiased renderers first appeared, they were poorly optimized and the hardware was much weaker; even quad-core CPUs were rare, at least in the CIS. Many designers abandoned such renderers because they had to wait too long for the noise to disappear from the image. Many professionals found it much easier to set up global illumination in V-Ray and do post-processing in Photoshop, all in 2-3 hours, than to press the “render” button in Maxwell and wait 8 hours or more for acceptable quality. Sometimes the noise in refractions refused to clear up for days.


And what is good about them?

Physically accurate effects:

- global illumination (including caustics);

- depth of field (DOF) and motion blur;

- subsurface scattering;

- some renderers even support dispersion;

- soft shadows, realistic reflections: in general, everything looks as it does in real life.

All these effects are visible from the very first seconds of rendering.

Rendering time depends only weakly on the number of triangles, so there is no need to economize on polygon count.

Moreover, hardware does not stand still. Processor performance keeps growing, and, more interestingly, the development of general-purpose computing on graphics cards (GPGPU) has produced renderers that use GPU shader cores for computation. The renderers themselves have also started producing better images at the same computational cost.

Let's see what the rendering process looks like:

Core i5 2500 3.3 GHz, Maxwell Render 2.6, 400k triangles, a model I built myself in 3ds Max.

At the heart of unbiased renderers is path tracing with various kinds of optimization.

(Some readers may have noticed that I have already published posts about path tracing on the GPU. This time I decided to cover the topic in more depth and breadth.)

***

ALGORITHM

Path tracing (PT) is based on Monte Carlo integration. The more samples we compute per pixel (pixel color = arithmetic mean of the colors of all samples at that point), the more accurate the result.
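The following minimal Python sketch makes the averaging concrete. `render_pixel` and `half_lit_pixel` are hypothetical names. The "scene" is also an assumption: a pixel where half of all paths reach a light of radiance 1.0, so the exact answer is 0.5. Each call to the sampler stands in for tracing one full path.

```python
import random

def render_pixel(sample_radiance, n_samples, rng):
    """Estimate a pixel's colour as the arithmetic mean of n_samples path samples."""
    total = 0.0
    for _ in range(n_samples):
        total += sample_radiance(rng)  # one Monte Carlo sample = one traced path
    return total / n_samples

def half_lit_pixel(rng):
    """Hypothetical pixel: half of its paths reach a light of radiance 1.0, the rest see darkness."""
    return 1.0 if rng.random() < 0.5 else 0.0

rng = random.Random(42)
for n in (1, 10, 100, 10_000):
    print(n, render_pixel(half_lit_pixel, n, rng))
```

With 1 sample the pixel is either 0 or 1 (pure noise). As the sample count grows, the average settles toward 0.5.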

A sample is a ray that travels from the camera through a chain of reflections and refractions to a light source. The light it carries back determines the color of the pixel at that point in the image.

We get a preview of the image (a noisy one) almost immediately after voxelization (more on that later).

The number of samples cannot be infinite, so a “perfect” picture is never reached: that would take infinite time. Rendering can be considered complete when the noise is no longer visible to the eye. This usually takes 1,000-10,000 samples per pixel, or 2-20 billion samples for a Full HD image, and even more in special cases. We should also not forget that slight noise makes an image look more realistic.
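The figures above follow from simple arithmetic, sketched below (the function names are our own). The second helper uses the standard Monte Carlo property that noise, measured as the standard error of the mean, shrinks as 1/sqrt(N).

```python
import math

def samples_per_image(width, height, spp):
    """Total path samples for an image at a given samples-per-pixel (spp) count."""
    return width * height * spp

def noise_ratio(spp_new, spp_old):
    """Monte Carlo noise falls as 1/sqrt(N); returns noise(new) / noise(old)."""
    return math.sqrt(spp_old / spp_new)

print(samples_per_image(1920, 1080, 1_000))   # 2073600000 (~2 billion)
print(samples_per_image(1920, 1080, 10_000))  # 20736000000 (~20 billion)
print(noise_ratio(10_000, 1_000))             # ~0.316
```

This is why the last traces of noise are so expensive: ten times more samples remove only about two thirds of the remaining noise.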

The more complex the paths the rays travel, the more slowly the noise clears.

Light sources themselves are the easiest to render: their rays go straight into the camera.

Objects lit directly by light sources are harder.

Harder still are objects lit by another object that is itself lit by a light source.

And so on. This is why interiors are harder to render than exteriors: in an interior, more of the light arrives along long, complex paths, so more computing resources are spent on calculating them.

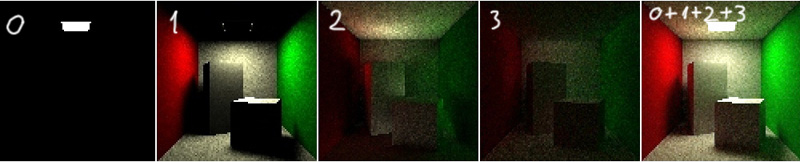

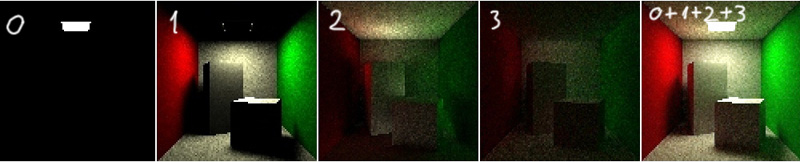

From left to right: direct light, first reflection, second reflection, third reflection, and the result.

In most renderers, the maximum depth of reflections and refractions is adjustable and defaults to 8.

In some renderers (e.g. Maxwell, Fry), the depth limit is much higher and depends on surface parameters. For example, there is no point in computing many bounces for a dark brown table, whereas refractions inside glass require a greater depth.
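We don't know exactly how Maxwell or Fry choose path length. One common way to make path length depend on surface properties without adding bias is Russian roulette, sketched below. The sketch uses a toy "furnace" scene (our assumption) where every surface emits `emission` and reflects a fraction `albedo`, so the exact answer is emission / (1 - albedo). A fixed depth of 8 underestimates bright, glass-like surfaces, while Russian roulette stays correct on average. All names are hypothetical, and `albedo` must be below 1.

```python
import random

def furnace_fixed_depth(emission, albedo, max_depth):
    """Radiance returned by a path tracer that simply stops after max_depth bounces
    in a toy 'furnace' where every surface emits `emission` and reflects `albedo`."""
    total, throughput = 0.0, 1.0
    for _ in range(max_depth + 1):
        total += throughput * emission
        throughput *= albedo
    return total

def furnace_russian_roulette(emission, albedo, rng):
    """One path sample with Russian roulette (requires albedo < 1): the path survives
    each bounce with a probability tied to reflectance, and survivors are re-weighted."""
    total, throughput = 0.0, 1.0
    while True:
        total += throughput * emission
        survive = min(1.0, albedo)        # dark surfaces end paths early, glass keeps them going
        if rng.random() >= survive:
            return total
        throughput *= albedo / survive    # re-weighting keeps the estimate unbiased

exact = lambda e, a: e / (1.0 - a)        # closed form: e * (1 + a + a^2 + ...)

rng = random.Random(1)
for albedo in (0.1, 0.95):                # "dark brown table" vs. glass-like surface
    rr = sum(furnace_russian_roulette(1.0, albedo, rng) for _ in range(20_000)) / 20_000
    print(albedo, exact(1.0, albedo), furnace_fixed_depth(1.0, albedo, 8), rr)
```

For albedo 0.1 the fixed depth loses almost nothing. For albedo 0.95 it returns about 7.4 instead of 20, which is exactly the case where a renderer needs longer paths.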

Soft shadows

The algorithm for soft shadows in PT is quite simple. A ray from the camera reaches a point on a surface and is then sent toward a random point on the light source (how “random” depends on the parameters of that surface).

If the ray reaches the light source unobstructed (green), we paint the pixel in the corresponding color.

If the ray hits an obstacle on the way (red), the corresponding pixel on the screen is not painted.

(Incidentally, this is similar to how shadows are rendered in ray tracing.)

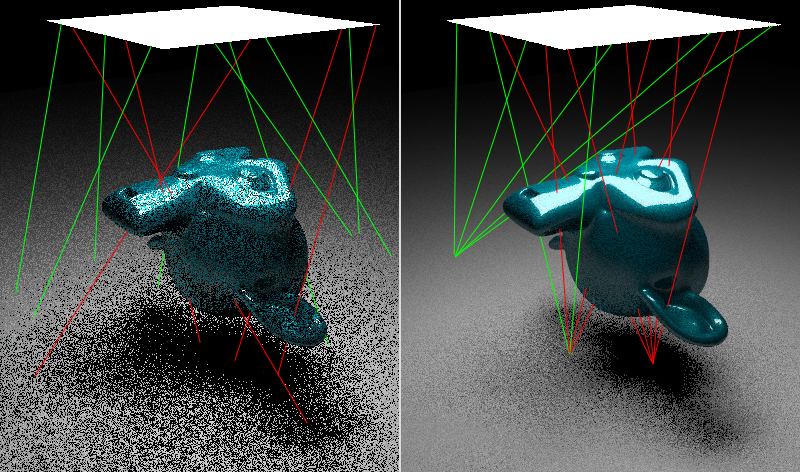

Number of samples per pixel: 1 on the left, 5 on the right.

For clarity, secondary reflections are disabled in this example.

Reflections and refractions

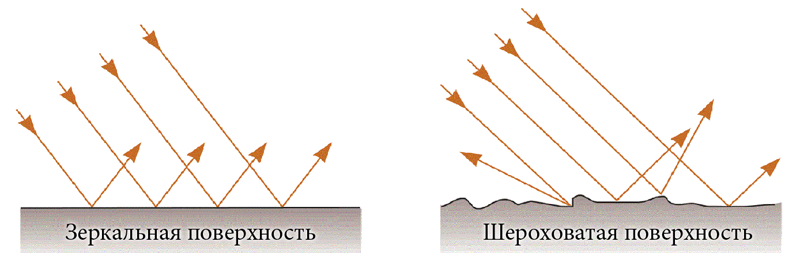

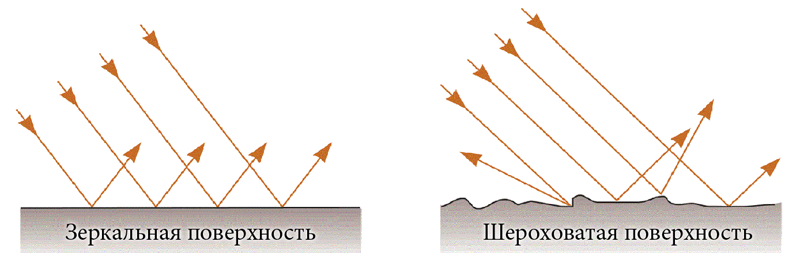

The reflection function depends primarily on the degree of roughness. Roughness is defined by how far the reflected ray may deviate from the ideal mirror direction.

If the deviation is 0, we get a mirror reflection. If it is 1 (100%), the incident ray can be reflected in any direction. Values between 0 and 1 give reflections of varying roughness.

The degree of surface roughness (from 0 to 1).

The reflection function can be more complex (for example, for an anisotropic surface).
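Below is a toy version of roughness-controlled reflection. It is not a physically based BRDF (production renderers use microfacet models). It simply blends the mirror direction with a uniformly random hemisphere direction according to the roughness value, which reproduces the behaviour described above: 0 gives a mirror, 1 gives any direction. All function names are our own.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)

def reflect(d, n):
    """Mirror reflection of incoming unit direction d about unit normal n."""
    k = 2.0 * dot(d, n)
    return tuple(a - k * b for a, b in zip(d, n))

def random_hemisphere(n, rng):
    """Uniformly random unit vector in the hemisphere around n (rejection sampling)."""
    while True:
        v = tuple(rng.uniform(-1.0, 1.0) for _ in range(3))
        if 1e-12 < dot(v, v) <= 1.0:
            break
    v = normalize(v)
    return v if dot(v, n) >= 0.0 else tuple(-c for c in v)

def rough_reflect(d, n, roughness, rng):
    """Toy glossy reflection: roughness 0 -> perfect mirror, 1 -> any direction."""
    mirror = reflect(d, n)
    if roughness <= 0.0:
        return mirror
    scatter = random_hemisphere(n, rng)
    mixed = tuple((1.0 - roughness) * m + roughness * s for m, s in zip(mirror, scatter))
    return normalize(mixed)

rng = random.Random(3)
incoming, normal = normalize((1.0, -1.0, 0.0)), (0.0, 1.0, 0.0)
for roughness in (0.0, 0.1, 0.5, 1.0):
    print(roughness, rough_reflect(incoming, normal, roughness, rng))
```

Because the mirror direction and the random direction both lie above the surface, every blend stays above it too. Low roughness keeps rays in a tight cone around the mirror direction.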

Rough refraction works much like rough reflection, except that the ray enters the object and changes direction according to the object's refractive index.
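The change of direction on entering a material follows Snell's law. Below is a minimal sketch of the standard vector form; the function name and sign conventions are our own. `eta` is the ratio of refractive indices n1/n2, and the function returns `None` when total internal reflection occurs. A rough variant would randomly perturb the resulting direction, just as for reflections.

```python
import math

def refract(d, n, eta):
    """Refract unit direction d through a surface with unit normal n (n faces against d).

    eta = n1 / n2, the ratio of refractive indices (e.g. 1/1.5 going from air into glass).
    Returns the refracted unit direction, or None on total internal reflection.
    """
    cos_i = -sum(a * b for a, b in zip(d, n))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)   # Snell's law: sin_t = eta * sin_i
    if sin2_t > 1.0:
        return None                              # total internal reflection
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * a + (eta * cos_i - cos_t) * b for a, b in zip(d, n))

s = math.sqrt(0.5)
t = refract((s, -s, 0.0), (0.0, 1.0, 0.0), 1 / 1.5)   # 45 degrees, air into glass
print(math.degrees(math.asin(t[0])))                  # angle from the normal inside the glass
```

A ray hitting glass (index 1.5) at 45° bends to roughly 28° from the normal. Going the other way, from glass into air at 60°, there is no refracted ray at all.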

Materials

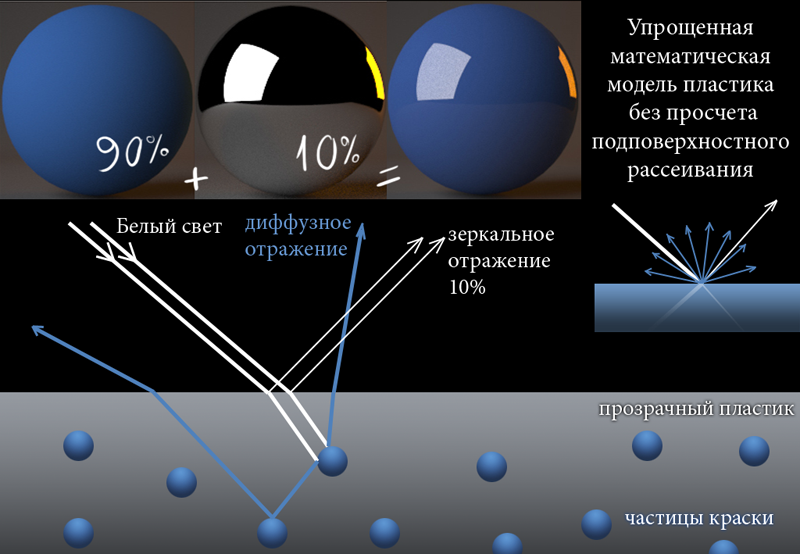

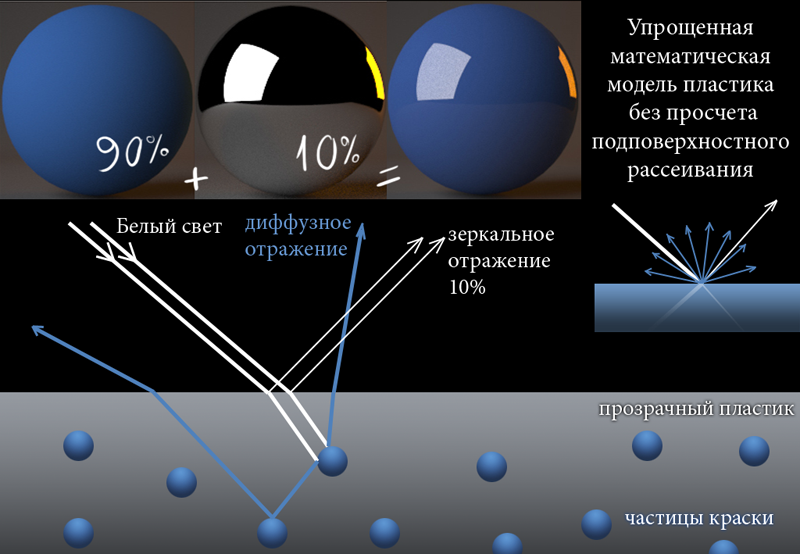

Materials usually consist of several layers.

Simplified model of a plastic surface.

Many unbiased renderers (Maxwell, Fry, Indigo, Lux) can compute true subsurface scattering: some rays pass beneath the surface and scatter there, which adds its own contribution to the resulting image.

Depth of field

This effect, familiar from photography, is achieved in PT with little or no computational overhead.
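DOF is cheap because only camera ray generation changes. Below is a sketch of the common thin-lens model; this camera model is our assumption, since the article does not specify one. Each ray starts at a random point on the lens aperture and is aimed at the point where the ordinary pinhole ray meets the plane of focus. Objects on that plane stay sharp, and everything else blurs once samples are averaged. The function and parameter names are hypothetical.

```python
import math
import random

def thin_lens_ray(pinhole_dir, aperture_radius, focus_distance, rng):
    """Turn a pinhole camera ray into a depth-of-field ray (thin-lens model).

    pinhole_dir: direction of the ordinary ray through the pixel, with the camera
    at the origin looking down +z. Returns (origin, unit direction).
    """
    # Point where the pinhole ray meets the plane of perfect focus.
    t = focus_distance / pinhole_dir[2]
    focus_point = tuple(t * c for c in pinhole_dir)
    # Uniform random point on the circular lens aperture.
    r = aperture_radius * math.sqrt(rng.random())
    phi = 2.0 * math.pi * rng.random()
    origin = (r * math.cos(phi), r * math.sin(phi), 0.0)
    # New ray from the lens point through the focus point.
    d = tuple(f - o for f, o in zip(focus_point, origin))
    length = math.sqrt(sum(c * c for c in d))
    return origin, tuple(c / length for c in d)

rng = random.Random(5)
for _ in range(3):
    print(thin_lens_ray((0.1, 0.05, 1.0), 0.2, 3.0, rng))
```

With `aperture_radius = 0` this reduces to an ordinary pinhole camera. The rest of the path tracer stays the same.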

***

OPTIMIZATION METHODS

Importance sampling

“Importance sampling” means the computer should not waste computing power on areas that are already “clean”. Surfaces lit by direct light usually clean up first, while areas lit by reflected or refracted light stay noisy for a very long time.

Areas highlighted in red have increased noise.

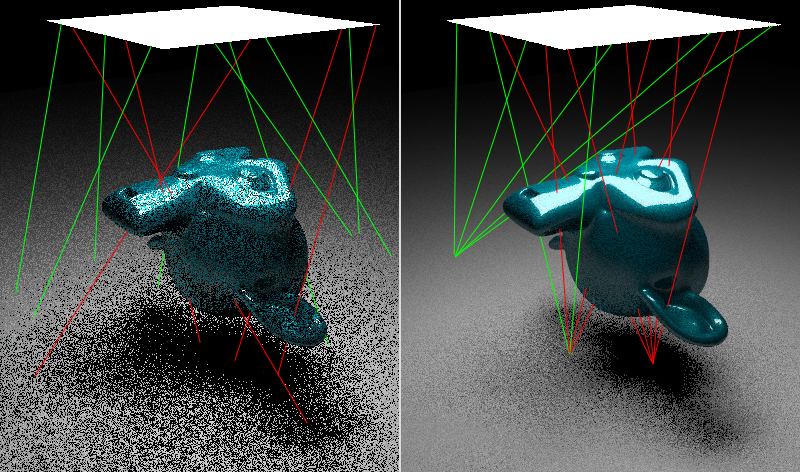
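The idea of spending effort where noise remains can be sketched as follows. In the literature this is usually called adaptive sampling; importance sampling in the strict sense chooses ray directions in proportion to their expected contribution. The per-pixel sample functions below are hypothetical stand-ins for path tracing. The loop tracks each pixel's running variance and gives each extra batch of samples to the pixel with the largest standard error.

```python
import math
import random

class PixelStats:
    """Running mean/variance of one pixel's samples (Welford's algorithm)."""
    def __init__(self):
        self.n, self.mean, self.m2 = 0, 0.0, 0.0

    def add(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std_error(self):
        if self.n < 2:
            return math.inf
        return math.sqrt(self.m2 / (self.n - 1) / self.n)

def adaptive_render(pixels, base_spp, extra_spp, batch, rng):
    """pixels: list of hypothetical callables rng -> radiance sample for that pixel."""
    stats = [PixelStats() for _ in pixels]
    for sample, st in zip(pixels, stats):       # every pixel gets a baseline
        for _ in range(base_spp):
            st.add(sample(rng))
    spent = 0
    while spent < extra_spp:
        # Spend the next batch on the pixel whose estimate is currently noisiest.
        i = max(range(len(stats)), key=lambda k: stats[k].std_error())
        for _ in range(batch):
            stats[i].add(pixels[i](rng))
        spent += batch
    return [st.mean for st in stats], [st.n for st in stats]

def clean(rng):
    return 0.5                      # e.g. a directly lit wall: no variance

def noisy(rng):
    return rng.uniform(0.0, 1.0)    # e.g. light arriving through glass

means, counts = adaptive_render([clean, noisy], 16, 1024, 32, random.Random(0))
print(means, counts)
```

The constant, directly lit pixel stays at its 16 baseline samples, while the entire extra budget goes to the noisy one.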

Bidirectional path tracing

Pure PT is rarely used. The probability that rays from the virtual camera reach a light source is very small and depends strongly on the size of the source: the smaller the light, the harder it is for a ray to “hit” it.

The BDPT algorithm traces rays from both the light source and the camera. This makes it possible to add even point light sources to the scene “painlessly”.

Used in most unbiased GPU renderers.

Metropolis Light Transport

The MLT algorithm, based on mutating rays, achieves less noise for the same number of samples. It keeps the “nodes” (reflection points) of rays that contributed strongly to the image and applies small deviations to their original directions; it may also insert additional nodes into the path. It then checks how strongly the change affects the ray's intensity and decides whether to mutate that ray further.

Used in all unbiased CPU renderers.

(There is another mutation-based method, Energy Redistribution Path Tracing, but it is not widely used and there is little information about it.)
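The accept-or-reject core of MLT is the Metropolis algorithm. The toy below is our own simplification: it replaces a full light path with a single number x in [0, 1] and replaces the path's contribution with a function f(x). Small Gaussian mutations perturb the current path, occasional "large steps" jump to a fresh random one, and each mutation is kept with probability min(1, f(new)/f(old)). As a result, bright paths are revisited and explored more often. Real MLT mutates path vertices in 3D and also needs a separate estimate of overall image brightness. The names and step sizes here are assumptions.

```python
import random

def metropolis_samples(f, n_steps, rng, small_sigma=0.05, large_step_prob=0.1):
    """Toy Metropolis sampler over a 1D 'path space' [0, 1].

    f(x) >= 0 plays the role of a path's contribution to the image. Both proposal
    types (small perturbation, large step) are symmetric, so accepting with
    probability min(1, f(y) / f(x)) makes x visit states in proportion to f.
    """
    x = rng.random()
    while f(x) <= 0.0:                            # start from a path that carries light
        x = rng.random()
    samples = []
    for _ in range(n_steps):
        if rng.random() < large_step_prob:
            y = rng.random()                      # large step: fresh random path
        else:
            y = x + rng.gauss(0.0, small_sigma)   # small step: perturb the current path
        fy = f(y) if 0.0 <= y <= 1.0 else 0.0
        if rng.random() < fy / f(x):              # brighter mutations are always kept
            x = y
        samples.append(x)
    return samples

rng = random.Random(1)
samples = metropolis_samples(lambda x: x, 100_000, rng)
print(sum(1 for v in samples if v > 0.5) / len(samples))
```

With f(x) = x, about 75% of the visited states lie above 0.5, matching that half's share of the total "light".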

The video (MLT vs BDPT) shows more clearly how this works.

Here we can see how effective each optimization method is.

Voxelisation

Voxelization is done before rendering so that the program does not have to test every triangle for intersection with each ray. The algorithm discards regions of space whose triangles cannot lie on the ray's path.

Objects must be re-voxelized every time the geometry changes or new objects are added. This is not necessary when the camera moves or when materials or the environment change.

In addition, a voxel rendering algorithm can be incorporated into PT.
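Instead of testing every triangle, the ray only needs to test the triangles in the cells it passes through. Below is a minimal 2D sketch of a uniform grid, one simple acceleration structure. We don't know which structure Maxwell's voxelization actually builds, and many renderers use hierarchies such as BVHs instead. Objects are stand-in bounding boxes, cells are unit squares, and the traversal is the Amanatides-Woo grid walk reduced to 2D. All names are hypothetical.

```python
import math

def build_grid(boxes):
    """Insert each bounding box (xmin, ymin, xmax, ymax) into every unit cell it overlaps."""
    grid = {}
    for idx, (x0, y0, x1, y1) in enumerate(boxes):
        for cx in range(math.floor(x0), math.floor(x1) + 1):
            for cy in range(math.floor(y0), math.floor(y1) + 1):
                grid.setdefault((cx, cy), []).append(idx)
    return grid

def traverse(origin, direction, n):
    """Yield, in order, the unit cells of an n x n grid that the ray passes through.
    Assumes the ray starts inside the grid and direction is not (0, 0)."""
    x, y = origin
    dx, dy = direction
    cx, cy = math.floor(x), math.floor(y)
    step_x = 1 if dx > 0 else -1
    step_y = 1 if dy > 0 else -1
    next_x = cx + 1 if step_x > 0 else cx          # next vertical cell boundary
    next_y = cy + 1 if step_y > 0 else cy          # next horizontal cell boundary
    # Ray parameter t where each boundary is crossed, and t needed to cross a whole cell.
    t_max_x = (next_x - x) / dx if dx != 0 else math.inf
    t_max_y = (next_y - y) / dy if dy != 0 else math.inf
    t_delta_x = abs(1.0 / dx) if dx != 0 else math.inf
    t_delta_y = abs(1.0 / dy) if dy != 0 else math.inf
    while 0 <= cx < n and 0 <= cy < n:
        yield cx, cy
        if t_max_x < t_max_y:       # the vertical boundary comes first: step in x
            cx += step_x
            t_max_x += t_delta_x
        else:                       # otherwise step in y
            cy += step_y
            t_max_y += t_delta_y

def candidate_objects(grid, origin, direction, n):
    """Objects the ray actually has to test, in the order it meets their cells."""
    seen, order = set(), []
    for cell in traverse(origin, direction, n):
        for idx in grid.get(cell, []):
            if idx not in seen:
                seen.add(idx)
                order.append(idx)
    return order

boxes = [(1.2, 1.2, 1.8, 1.8), (5.2, 6.2, 5.8, 6.8)]   # hypothetical triangle bounds
grid = build_grid(boxes)
print(candidate_objects(grid, (0.5, 1.5), (1.0, 0.0), 8))  # [0]: object 1 is never tested
```

The grid only needs rebuilding when geometry changes. Moving the camera just changes which rays walk it, which matches the rule above.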

***

GPU

Because modern graphics processors handle floating-point computation much better than CPUs, path tracing is gradually shifting onto their shoulders.

Real-time rendering

Enthusiasts have already started creating games that use PT. You can try such a game yourself (it uses OpenCL) if you have a powerful enough graphics card. You can also run it on the CPU, but it will be much slower.

The author of the following video (I recommend visiting his website) says that PT engines will soon be used in games. The video was rendered in real time on two GTX 580s.

How to use a GPU

CUDA is a hardware and software architecture that lets you compile C++ code and run computations on Nvidia graphics cards.

ATI FireStream is the equivalent for ATI cards.

OpenCL is a framework for computing on any OpenCL-compatible device (most modern CPUs and GPUs).

DirectCompute is a Microsoft framework supported by graphics cards with DirectX 10 and 11 support.

GLSL is a shader programming language that works on all graphics accelerators.

The following video compares the speed of physics computations on various programming platforms. Physics, like graphics, needs good floating-point performance, so I think the video is relevant to this article.

(GLSL shows speed on par with CUDA, so why is there no PT on GLSL?)

***

AND A BIT MORE

The best-known unbiased renderers:

Maxwell Render is the most popular unbiased renderer.

Indigo has a GPU counterpart, IndigoRT.

FryRender, from RandomControl, has a GPU counterpart: Arion Render.

LuxRender is an open-source renderer with GPU acceleration support. Its website also offers a fully GPU-based renderer, SmallLuxGPU.

Octane Render is a fully GPU-based renderer.

iRay ships with the latest 3ds Max and uses both CUDA and the CPU.

Cycles Render (built into Blender, supports both CUDA and OpenCL).

Popular misconception

There is a popular misconception that V-Ray RT is an unbiased renderer. It is not: it is ray tracing with adaptive anti-aliasing. Admittedly, its sampling and shadow rendering resemble the path tracing algorithm, but global illumination and caustics still depend on separate settings that must be configured for each specific case.

The same goes for Bunkspeed HyperShot and Luxion KeyShot (earlier versions, before the switch to iRay).

Let's sum up:

V-Ray old-timers will say that they can create all this beauty in V-Ray, and they will be right. After all, the renderer is not what matters most. What matters is an artist with a good head, skilled hands, and creativity in the soul!

But unbiased renderers let us stop worrying about the technical settings of the renderer and focus on the creative process.

Source: https://habr.com/ru/post/142003/
