An invitation to get acquainted with IBM's Smart Cloud

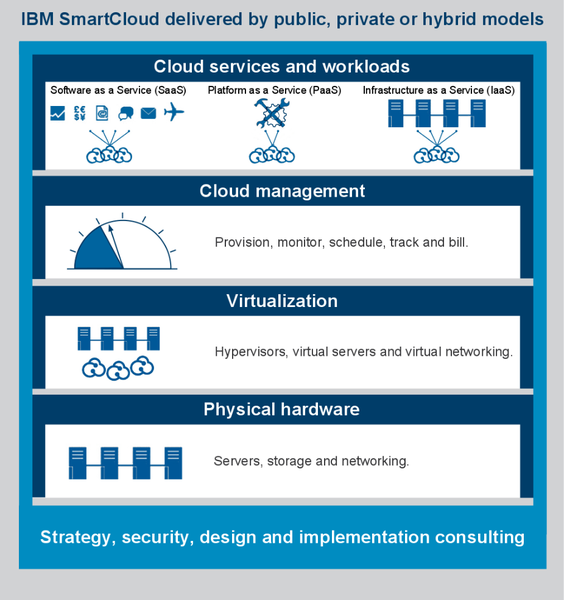

Cloud services in one form or another (SaaS, PaaS, IaaS, etc.) have finally stopped being seen as a novelty by most of the web audience. This is one of many reasons why not only big business, but also medium-sized companies and individual entrepreneurs have begun to take a close look at the cloud technologies offered today around the world by many providers, on different terms and for different audiences.

But perhaps one thing remains unchanged: organizations' requirements for the quality of the resources and services provided in the cloud. For companies looking to move their workloads to the cloud, security remains perhaps the most pressing issue, because their data will no longer be stored "in the basement" but in another company's data center, possibly in another country or even on another continent. Not everyone is ready to take that step right now. Time goes on, however, and fortunately there are technologies that help dispel the doubters' dark thoughts and show why, for medium and large businesses, it is more profitable today to be in the cloud than anywhere else. The larger the company or organization, and the more data it has to process, the more relevant this statement becomes.

To start, a little history. IBM's computing clouds emerged as a natural symbiosis of two of the company's strongest and fastest-developing areas: mainframe production and virtualization technology. IBM began its first virtualization experiments back in the 1960s, when it started developing the CP-40 and CP-67 virtual machine control programs: a hypervisor used for testing and software development that allowed memory to be shared between virtual images and gave each user a certain amount of virtual disk space. By dividing the mainframe into virtual machines, IBM for the first time achieved a noticeable gain in process performance and application speed, and with it more efficient use of expensive equipment. IBM has been selling technology for creating virtual machines on mainframes since 1972, and today the Smart Cloud Foundation is one of the largest virtualization initiatives of its kind.

In February 1990, IBM began producing servers based on POWER processors. This processor line was designed specifically to run so-called "mission-critical" virtualization. POWER servers included the PowerVM hypervisor, with active memory sharing between users and applications. Migration was introduced later, in the sixth generation of POWER, announced in May 2007. The next logical step was to standardize and automate all the technologies included in the Smart Cloud Foundation, making them faster and less resource-hungry. This combined work on virtualization, standardization and automation has made IBM's clouds the most popular in the corporate environment (according to the company, as of April 2011, 80% of Fortune 500 corporations use IBM Smart Cloud).

In fact, the rapid development of Smart Cloud can be traced back to 2007: that was when IBM clearly defined the program's main goal and mission, namely building high-quality clouds for corporate clients and providing all the services available in the cloud ecosystem. In October 2007, IBM and Google jointly launched a cloud computing training program at universities: in addition to providing free cloud infrastructure for various purposes and tasks, the two giants put together a curriculum for technical students.

But back to the clouds themselves. Companies usually addressed the concern I raised at the very beginning, namely how critical it is to store data in the cloud, by managing several server and application images running at different sites. This provides some protection, but it also greatly increases the complexity and cost of managing cloud infrastructure. From the standpoint of the cloud as a service-delivery model, this approach is counterproductive, because cloud technologies are designed from the outset to be more efficient than traditional hosting models.

Some time ago, IBM announced two new services for all Smart Cloud customers: data archiving, and recovery of virtual and physical servers. The idea behind the recovery service is simple and clear: continuous replication of the application and all associated data to a protected environment, which makes it possible to resume operations as quickly as possible after an unforeseen failure of the entire infrastructure.

IBM Smart Cloud Virtualized Server Recovery guarantees the reliability and efficiency of the cloud, since the service performs backups continuously.

IBM Smart Cloud Archive is a different kind of service, designed to meet strict standards and privacy requirements for stored data, with a wide range of functions from search and indexing to data retrieval and eDiscovery (electronic discovery).

Both services are now part of the standard IBM Smart Cloud offering and are included in Managed Backup, a part of the cloud used by hundreds of companies. Starting July 19 of this year, the two new services will be available to all Smart Cloud users.

Of course, this is not the full list of what Smart Cloud can do. We could talk about it endlessly, so we invite readers of the IBM blog on Habr to choose the option that suits them best:

1. Learn all about IBM Smart Cloud on your own in a dedicated section of the official website.

2. Try Smart Cloud hands-on with a special simulator designed to introduce you to the system.

3. Watch the recording of IBM's Russian-language webinar on "IBM Smart Cloud".

Source: https://habr.com/ru/post/141753/
