Instance Caching and Cache Management

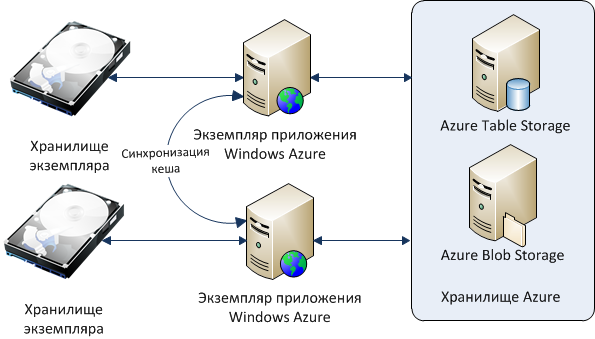

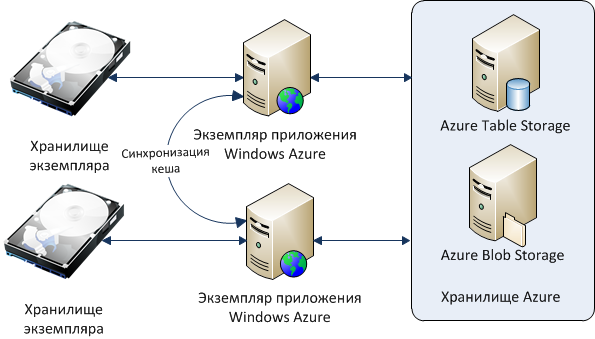

This is the third article in the series "The internal structure and architecture of the AtContent.com service." In it, you will learn how, and why, to reduce the number of requests to the Azure Table Storage and Azure Blob Storage data stores when using the Windows Azure platform. On our service, authors post their publications, which are then embedded in various sites. So content, once published, is displayed on a large number of sites, and it is delivered to every one of them through our service. To reduce the number of requests to Azure storage, we therefore cache publications in the instance's local storage, which carries no additional charge.

The article also covers how to manage caching when the application runs more than one instance, and how to keep the cache state synchronized between instances.

To begin with, all application data in Windows Azure should be stored outside the application or service instance, because when a role is restarted, all data in the instance's local storage is lost. For us this happens on average every 1-2 days, since the service is at a very active stage of development and we deploy very often. The best solution is therefore to keep this data in Azure storage, such as Azure Table Storage or Azure Blob Storage. However, every operation against this storage is billed. Although the fee is small, we still want to reduce the cost of accessing Azure storage.

With the introduction of caching, we were able to significantly reduce the cost of Azure storage operations. When a publication is viewed, it is read from Azure storage only the first time; all subsequent views are served from the cache. Since authors rarely edit their publications, at this stage a new deployment is the main trigger for refreshing the cache. Even assuming a deployment once a day, a publication viewed, for example, 100 times a day is read from Azure storage once instead of 100 times, a 99% saving. And that is far from our most popular publication.
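The 99% figure is simple arithmetic: one storage read per cache reset, divided by the number of views. The hypothetical helper below makes that estimate explicit. It is not code from AtContent.com; it assumes that every deployment empties the cache, and it adds an instance count that the estimate above leaves out, since every instance fills its own local cache and therefore reads the publication from storage separately.

```csharp
using System;

// Hypothetical helper (not from the article): estimates the share of Azure storage
// reads avoided by a read-through instance cache.
// Assumptions: every deployment empties every instance's cache, each instance then
// reads the publication from storage once, and all other views are cache hits.
public static class CacheSavings
{
    public static double StorageReadReduction(int viewsPerDay, int deploymentsPerDay, int instanceCount)
    {
        if (viewsPerDay < 1) throw new ArgumentOutOfRangeException(nameof(viewsPerDay));
        if (deploymentsPerDay < 1) throw new ArgumentOutOfRangeException(nameof(deploymentsPerDay));
        if (instanceCount < 1) throw new ArgumentOutOfRangeException(nameof(instanceCount));

        // Reads that still reach storage: one per instance per cache reset,
        // but never more than the number of views.
        int storageReads = Math.Min(viewsPerDay, deploymentsPerDay * instanceCount);
        return 1.0 - (double)storageReads / viewsPerDay;
    }
}
```

For example, `StorageReadReduction(100, 1, 1)` gives 0.99, matching the figure above, while the same publication served by two instances costs up to two reads a day, i.e. 0.98.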

For caching, we use the instance's local storage. To work with it, you need to import the corresponding namespaces:

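```csharp
// Windows Azure service runtime: RoleEnvironment, LocalResource
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.ServiceRuntime;
// File system access: Path, File, Directory
using System.IO;
```

After that, you need to define a local storage resource. This can be done in the role settings.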

By default, the storage size is set to 1000 MB; the minimum is 1 MB, and the maximum depends on the role size. For a Small instance, the maximum is 165 GB. You can read more about this on MSDN ( http://msdn.microsoft.com/ru-ru/library/ee758708.aspx ).

Once the storage is configured, you can work with it as with an ordinary file system, with only a few peculiarities. To get a file's location, combine the root path of the storage with the file's path relative to that root:

```csharp
// Local storage resource declared in the role settings
var storage = RoleEnvironment.GetLocalResource(LocalResourceName);
// Absolute file path: storage root + path relative to that root
var finalPath = Path.Combine(storage.RootPath, RelativePath);
```

To store .NET objects in the file system, they have to be serialized. We use the Json.NET library for this ( http://json.codeplex.com/ ).
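Putting these pieces together, a read-through file cache might look like the sketch below. It is not the AtContent.com implementation: the class `LocalFileCache` and its methods are hypothetical, the root directory is assumed to come from `RoleEnvironment.GetLocalResource(...).RootPath`, and System.Text.Json is swapped in for Json.NET so the example compiles without NuGet packages (with Json.NET the equivalent calls are `JsonConvert.SerializeObject` and `JsonConvert.DeserializeObject<T>`).

```csharp
using System;
using System.IO;
using System.Text.Json;

// Hypothetical read-through cache over a local storage directory.
// Assumption: rootPath is RoleEnvironment.GetLocalResource(name).RootPath on Azure;
// any writable directory works for local testing.
public sealed class LocalFileCache
{
    private readonly string _rootPath;

    public LocalFileCache(string rootPath)
    {
        _rootPath = rootPath;
        Directory.CreateDirectory(_rootPath); // no-op if it already exists
    }

    // Maps a key such as a publication id to <root>/<key>.json.
    // Keys are assumed to be file-name-safe ids (e.g. GUIDs).
    private string PathFor(string key)
    {
        if (string.IsNullOrEmpty(key) || key.IndexOfAny(Path.GetInvalidFileNameChars()) >= 0)
            throw new ArgumentException("Key is not a valid file name.", nameof(key));
        return Path.Combine(_rootPath, key + ".json");
    }

    public bool TryGet<T>(string key, out T value)
    {
        string path = PathFor(key);
        if (File.Exists(path))
        {
            value = JsonSerializer.Deserialize<T>(File.ReadAllText(path))!;
            return true;
        }
        value = default!;
        return false;
    }

    public void Set<T>(string key, T value) =>
        File.WriteAllText(PathFor(key), JsonSerializer.Serialize(value));

    // Only a cache miss reaches Azure storage (via loadFromStorage).
    public T GetOrLoad<T>(string key, Func<T> loadFromStorage)
    {
        if (TryGet(key, out T cached))
            return cached;
        T value = loadFromStorage();
        Set(key, value);
        return value;
    }

    // Drops a stale entry; File.Delete does nothing if the file is missing.
    public void Remove(string key) => File.Delete(PathFor(key));
}
```

On a cache miss, `GetOrLoad` calls the supplied delegate, which would read from Azure Table Storage or Blob Storage, and writes the result to disk; later calls on the same instance do not touch Azure storage. The sketch does not guard against two requests writing the same file at once; a production version would typically write to a temporary file and then move it into place.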

Furthermore, if your application runs more than one instance, the problem of cache consistency arises. Even if you update the cache on the instance that changes the data in Azure storage, the other instances will know nothing about it. To deal with this, we use the mechanism for exchanging messages between instances and roles that we developed and described in the previous article ( http://habrahabr.ru/post/140461/ ).

Here, it is used to notify instances that part of their cache has just become stale and must be deleted.

As mentioned earlier, AtContent.com caches publications. An instance caches a publication at the moment it reads it from Azure storage, so each instance's cache fills up as publications are requested. When an author updates a publication, a message is sent to all instances of the role telling them to clear the cache for that publication; once the message is processed, the caches are up to date again.
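The invalidation flow can be modeled in a few lines. The sketch below is only an in-process simulation: `InvalidationBus`, `InstanceCache`, `CacheInvalidation` and `PublicationService` are hypothetical names, the bus delivers messages synchronously instead of using the messaging mechanism from the previous article, and each instance's cache is kept in memory rather than in local storage to keep the example short.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical message telling every instance to drop one cached publication.
public sealed record CacheInvalidation(string PublicationId);

// Stand-in for the inter-instance messaging mechanism (synchronous, in-process).
public sealed class InvalidationBus
{
    private readonly List<Action<CacheInvalidation>> _subscribers = new();

    public void Subscribe(Action<CacheInvalidation> handler) => _subscribers.Add(handler);

    // Delivers the message to every instance of the role, including the sender.
    public void Broadcast(CacheInvalidation message)
    {
        foreach (var handler in _subscribers)
            handler(message);
    }
}

// One instance's publication cache (in memory here for brevity).
public sealed class InstanceCache
{
    private readonly ConcurrentDictionary<string, string> _entries = new();

    public InstanceCache(InvalidationBus bus) =>
        bus.Subscribe(msg => _entries.TryRemove(msg.PublicationId, out _));

    // Cached at the moment the publication is first read from storage.
    public string Get(string publicationId, Func<string, string> readFromStorage) =>
        _entries.GetOrAdd(publicationId, readFromStorage);

    public bool Contains(string publicationId) => _entries.ContainsKey(publicationId);
}

public static class PublicationService
{
    // Author's update: write the new version to storage first, then notify all instances.
    public static void Update(IDictionary<string, string> storage, InvalidationBus bus,
                              string publicationId, string newContent)
    {
        storage[publicationId] = newContent;
        bus.Broadcast(new CacheInvalidation(publicationId));
    }
}
```

The order in `Update` matters: the new version is written to storage before the message goes out. If the message were sent first, an instance could serve a request in between, re-read the old version and cache it again.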

This mechanism lets us cut Azure storage costs while making full use of the instance's own resources.

Besides the local disk, a cache in Windows Azure can also be kept in RAM or in Windows Azure Cache. These options make sense when you need faster access to data, provided the volume of that data is small: an instance has much less RAM than disk space, and Windows Azure Cache does not offer large volumes either. The maximum you can get is 4 GB, and it costs $325 per month ( http://www.windowsazure.com/ru-ru/home/tour/caching/ ).

So if you simply need to reduce the number of requests to Azure storage for data that rarely changes but is read very often, the mechanism described in this article may be useful to you. It will also be available as part of the open-source CPlase library, which will be made publicly available soon.

Read in the series:

- "AtContent.com. Internal structure and architecture",

- "The mechanism of messaging between roles and instances",

- "Effective management of processing cloud queues (Azure Queue)",

- "LINQ extensions for Azure Table Storage, implementing Or and Contains operations",

- "Practical tips for splitting data into parts, generating PartitionKey and RowKey for Azure Table Storage".

Source: https://habr.com/ru/post/140955/
