NetApp Data ONTAP 8 - Storage Hypervisor

For the company, this year will be “the year of Cluster-mode”, even though Data ONTAP, the internal OS that runs on all NetApp storage systems, has supported this mode of operation since version 8.0, released in 2008. Its ability to build a “multi-node storage cluster” is only now starting to be put to real use.

But first, a little history.

A few years ago, people began to talk about storage systems evolving toward “cloud” systems, although that buzzword had not yet entered the IT lexicon. Back then the talk was of GRID systems that would replace “classic” network storage.

It is not surprising that NetApp, a company that has traditionally paid a lot of attention to promising areas in the industry, was one of the first to focus on “cloud” cluster systems. Here is what came of it.

Back in 2003, NetApp bought the start-up Spinnaker, which worked on cluster file systems and Global Namespace tools, and several years later brought to market a special OS called Data ONTAP Generation X (GX, or v10). It ran on some NetApp systems and allowed multi-node storage clusters to be built. Unfortunately, it had many limitations: in particular, it worked only as an NFS server and lacked many important and familiar features of the “classic” Data ONTAP 7.x. It was expensive, limited, and required considerable specialized knowledge to deploy and use, so it did not gain much popularity. As a result, its use was confined to the market for high-performance file servers for HPC (High-Performance Computing) and to storage for scientific and audio/video data. The total number of customers worldwide who used Data ONTAP GX did not exceed a couple of hundred.


NetApp's next step was an attempt to merge the two operating systems: the “classic” Data ONTAP 7, with its rich functionality (which I have described in detail in this blog) and an installed base of tens of thousands of systems, and the new, “clustered”, “cloud” one. However, the merge announced in 2008 turned out to be largely nominal. A single distribution, called Data ONTAP 8.x, simply made it possible to install either of two operating systems: one in 7-mode, that is, the “classic” Data ONTAP, and the other in the mode called “Cluster-mode”, which was a development of Data ONTAP 10. Unfortunately, as I said, these were just two operating systems installed from the same distribution, and nothing more. Cluster-mode did not gain the familiar features of classic Data ONTAP, and the two remained incompatible: they could not migrate data between each other or interoperate, and they differed completely in their data storage structures and in how they worked with them.

As a consequence, the existing couple of hundred GX customers were upgraded to 8.x Cluster-mode, while the majority of NetApp users continued to use 7-mode. Apart from the functional limitations, significant barriers were the price of the solution and the complexity of the infrastructure, which required expensive components such as an internal Cluster Network based on 10 Gigabit Ethernet.

However, the work continued, and gradually all those familiar 7-mode features appeared in 8.x Cluster-mode: replication, block deduplication and, most importantly, support for the block protocols FC, iSCSI and FCoE. Let me remind you that, prior to version 8.1, Cluster-mode was purely “file storage” operating over the NFS and CIFS protocols, which, alas, did not suit everyone.

While NetApp was “digesting” Spinnaker's legacy, competitors in this area began to appear on the market. Isilon's product began to sell (and the company itself was eventually sold to EMC), and the growing interest in “cloud” IT systems naturally became the engine of development, because multi-node storage clusters are natural cloud storage that fits the cloud paradigm as well as anything could.

And now, starting with Data ONTAP 8.1, Cluster-mode can work with block SAN protocols and supports the asynchronous replication and snapshots familiar from classic Data ONTAP, as well as deduplication and compression. Finally, prices were reduced, and Cluster-mode has become somewhat more accessible to users.

What does Cluster-mode give us that we have not had so far, and how does it work at all?

The easiest way to answer this question is to think of Data ONTAP in Cluster-mode as a kind of “storage hypervisor”. I assume you are already familiar with how a server virtualization hypervisor works, for example VMware ESX, MS Hyper-V or Citrix Xen. Data ONTAP in Cluster-mode works very similarly, except that instead of virtual machines with applications, this hypervisor runs user-created “virtual storage systems” with data, called Vservers. Like virtual machines under a server hypervisor, they can migrate from one physical controller in the cluster to another without interrupting operation or access to them, depending on availability or performance requirements or any other needs you specify. Migration is also possible between different platforms, just as in a server hypervisor cluster we can include host servers on different physical platforms (with a number of restrictions, of course) and move our applications from one physical host to another transparently to them.

Thus, Data ONTAP Cluster-mode is the “hypervisor” for the storage system.

At the risk of bloating the article with technical details that are of interest only to existing NetApp users, I will go into some more detail.

First of all, when dealing with Cluster-mode, you should understand the difference between the concepts of Global Filesystem and Global Namespace. Data ONTAP 8.1 Cluster-mode is a Global Namespace, but NOT a Global Filesystem.

A Global Namespace lets you see the cluster and its space, made up of its component nodes, as a single “entity”, one coherent space, regardless of where and how your data is stored. In terms of storage, however, each node controller still works with the data stored in its own aggregates and volumes. A single file cannot reside on more than one aggregate or node controller. Nor can it migrate in a way that leaves it split, partly on one node controller and partly on another.

I think this is very important to understand, which is why I am dwelling on it.

Of course, devices that implement a Global Filesystem have fewer restrictions in this respect (for example, EMC Isilon with its OneFS is exactly a Global Filesystem). However, in our world, as you remember, nothing is free, and a Global Filesystem brings a number of very unpleasant side effects, primarily for performance. Isilon is quite good for a certain range of tasks, but above all on certain types of workload (mainly sequential access). How important it is in your particular case to be able to store huge files that are larger than the total capacity of the disks attached to one controller and are spread across several cluster nodes, and whether you are ready to pay for that ability with worse random access to them, is for you to decide. Both options are on the market today.

NetApp demonstrated the performance advantage quite convincingly in recent SPECsfs2008 testing over the NFS protocol, where a 24-node FAS6240 system running Data ONTAP 8.1 outperformed a 140-node EMC Isilon S200 system.

It should also be noted that NetApp deliberately tested the worst case: only 1/24 of all operations went to the controller that owns the data, while 23 out of every 24 went through “non-native” controllers and were forwarded over the cluster network. None of NetApp's existing optimization tools, such as Load Sharing Mirrors (read-only copies) on non-native cluster nodes, which would certainly improve performance in real life, were used.
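
The 1/24 figure follows directly from the node count. As a minimal sketch, assuming client requests are spread evenly over all nodes and each piece of data has exactly one owning node (the function name is hypothetical, not part of any NetApp tool):

```python
from fractions import Fraction


def remote_fraction(nodes: int) -> Fraction:
    """Share of requests that arrive at a node that does not own the data,
    assuming requests are spread evenly across all nodes."""
    if nodes < 1:
        raise ValueError("a cluster needs at least one node")
    return Fraction(nodes - 1, nodes)


remote = remote_fraction(24)
local = 1 - remote
print(f"local: {local}, remote: {remote}")  # local: 1/24, remote: 23/24
```

Under the same assumption, the share of forwarded requests grows with the cluster: the more nodes, the closer this uniform spread comes to the worst case.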

Let me remind you that SPECsfs2008 is a classic and authoritative test that imitates average, typical file access over the NFS (and CIFS) protocols, generated as a mix of operations of the corresponding protocol. Naturally, it includes many metadata operations and mostly random access.

So: Data ONTAP 8.1 Cluster-mode is a Global Storage Namespace, but NOT a Global Storage Filesystem. Although you see the cluster as a single entity, an individual file stored on it cannot exceed the capacity of one controller's aggregate. However, you can access the data in that file through any of the cluster's controllers. That is the difference between a Global Filesystem and a Global Namespace.
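
The distinction can be illustrated with a toy model. This is only a sketch under simplifying assumptions, not how Data ONTAP is implemented: the class names, the first-fit placement and the capacities in GB are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Aggregate:
    """Storage owned by exactly one node controller (capacity in GB)."""
    owner_node: str
    capacity_gb: int
    files: dict = field(default_factory=dict)  # path -> size in GB

    def free_gb(self) -> int:
        return self.capacity_gb - sum(self.files.values())


class GlobalNamespace:
    """One logical tree over many aggregates; each file lives in exactly one."""

    def __init__(self, aggregates):
        self.aggregates = list(aggregates)
        self.location = {}  # path -> Aggregate that holds the whole file

    def write(self, path: str, size_gb: int) -> str:
        # A file is never striped: it must fit entirely into one aggregate.
        for aggr in self.aggregates:
            if aggr.free_gb() >= size_gb:
                aggr.files[path] = size_gb
                self.location[path] = aggr
                return aggr.owner_node
        raise ValueError(f"{path}: no single aggregate has {size_gb} GB free")

    def read(self, path: str, via_node: str) -> str:
        # Any node can accept the request; a non-owner forwards it
        # over the cluster network to the owning node.
        owner = self.location[path].owner_node
        return "local" if via_node == owner else f"forwarded to {owner}"
```

With two 100 GB aggregates holding an 80 GB and a 60 GB file, a further 50 GB write fails in this model even though 60 GB is free in total, because no single aggregate can hold it. A Global Filesystem would instead spread the file across nodes.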

The second point I would like to dwell on in more detail is how the cluster is built physically.

Although the formal “unit of measurement” of cluster size is one node, meaning one physical controller, nodes are always joined in HA pairs. For this reason, the number of nodes in a NetApp Data ONTAP 8.x cluster is always even. This gives each node reliability and high availability (HA) by the same method that was used for controllers in 7.x.

Therefore, you cannot make a 5- or 15-node cluster, but you can make a 4-, 6- or 16-node cluster.

At the moment, a cluster operating as a NAS server (NFS or CIFS) can be built from up to 24 controller nodes, that is, from 12 HA pairs of controllers.

Data ONTAP 8.1 also introduced support for block protocols (iSCSI, FC, FCoE). However, when block protocols are used (alone or in combination with NAS), the maximum supported cluster size is currently four nodes, or two HA pairs. I expect this figure to grow once everything has been debugged and proven reliable, since 8.1 is the first version with this functionality, but for now keep it in mind. The limit is primarily due to the fact that file protocols such as NFS or CIFS are, in effect, fully managed and controlled on the storage side, which makes it easier for the storage system to provide all the necessary procedures and synchronization between cluster nodes.
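
The sizing rules above can be collected into a small check. This is a hedged sketch: the limits are the ones quoted in this article for Data ONTAP 8.1, while the function and constant names are hypothetical and do not correspond to any NetApp tool.

```python
MAX_NODES_NAS_ONLY = 24  # NFS/CIFS only, per the article (12 HA pairs)
MAX_NODES_WITH_SAN = 4   # any use of iSCSI/FC/FCoE, per the article (2 HA pairs)

BLOCK_PROTOCOLS = {"iscsi", "fc", "fcoe"}
FILE_PROTOCOLS = {"nfs", "cifs"}


def check_cluster(nodes: int, protocols: set) -> int:
    """Return the number of HA pairs, or raise ValueError if the layout
    violates the limits described for Data ONTAP 8.1 Cluster-mode."""
    protocols = {p.lower() for p in protocols}
    unknown = protocols - BLOCK_PROTOCOLS - FILE_PROTOCOLS
    if unknown:
        raise ValueError(f"unknown protocols: {sorted(unknown)}")
    if nodes < 2 or nodes % 2:
        raise ValueError("nodes come in HA pairs, so the count must be even")
    # Any block protocol, alone or mixed with NAS, triggers the lower limit.
    uses_san = bool(protocols & BLOCK_PROTOCOLS)
    limit = MAX_NODES_WITH_SAN if uses_san else MAX_NODES_NAS_ONLY
    if nodes > limit:
        raise ValueError(f"{nodes} nodes exceeds the limit of {limit}")
    return nodes // 2
```

For example, `check_cluster(24, {"nfs"})` returns 12 HA pairs, while `check_cluster(6, {"nfs", "iscsi"})` raises an error because mixing in a block protocol lowers the limit to four nodes.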

The third point I would like to highlight is that NetApp currently has quite strict hardware requirements for cluster communications. To build the Cluster network, that is, the cluster's own internal inter-controller network, only two models of 10 Gigabit switches are currently supported: Cisco Nexus 5010 (20 ports, clusters of up to 12/18 nodes) and Cisco Nexus 5020 (40 ports, clusters of more than 18 nodes). NetApp sells them under its own part numbers as part of the overall quote for such a system, and a configuration file specially prepared by NetApp is installed on them. Moreover, these switches may be used only for the internal cluster network; they cannot be shared with other tasks, for example to connect the client network, even if there are free ports left.

However, there is good news. As a time-limited promotion, when you order Cluster-mode storage from NetApp, NetApp and Cisco now supply the infrastructure equipment needed to build the cluster for a symbolic price of $1 per device: Cisco Nexus for the Cluster network and Cisco Catalyst 2960 for the Cluster management network, plus the necessary SFPs and cables. At the same time, under the promotion the price of a two-node Data ONTAP 8.1 Cluster-mode system is set equal to the price of a similar 7-mode configuration, and the infrastructure part comes to $5 for five items (two Nexus 5010, two Catalyst 2960, a set of cables), plus service charges.

Before your eyes light up and your hands start to tremble at the thought of “buying a Nexus 5010 for one dollar”, let me state explicitly that this offer is valid only with the purchase of a Cluster-mode system, and under the terms of purchase the equipment cannot be used for anything else.

A two-node system purchased under the promotion can be expanded to 12 nodes (6 HA pairs) by purchasing only SFPs and cables.

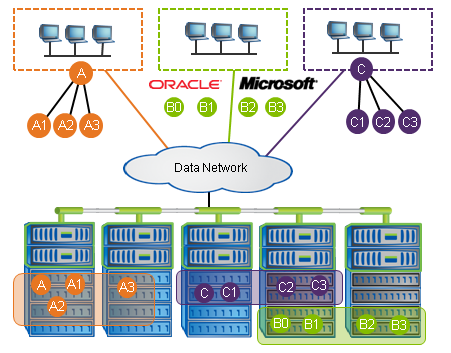

The structure of a Cluster-mode cluster is as follows (the figure shows a two-node system as an example):

Any switches, Ethernet or FC, can be used for the client network, depending on the user's needs.

Only Cisco Nexus 5010 (for clusters of up to 12/18 nodes) or 5020 (for a greater number of nodes) can be used as Cluster network switches.

For the Cluster Management Switch, NetApp recommends the Cisco Catalyst 2960 but does not currently require you to buy this particular model. If necessary, a different model that the customer already has can be used; this is arranged through a PVR, a special request to NetApp for verification and configuration instructions. NB: SMARTnet for such a switch is a must!

The Cluster Network is a 10Gb Ethernet network dedicated solely to this task. The only exception is the FAS2040, which can be used in Cluster-mode over Gigabit Ethernet, but using it together with other controllers is not recommended. Please note that even for the 2040 and its Gigabit Ethernet, no switches other than the Nexus 5010/5020 are supported!

Cluster nodes may be of different models. You can combine in a single cluster any controllers that are declared compatible with Cluster-mode. The only exception is the FAS2040, whose use with other controllers is possible but not recommended, for the reason given above: it has no 10G ports.

You can also combine systems with disks of different types in one cluster. For example, you can build a system with SAS, SATA and SSD disks within a single cluster and organize data migration between different controller nodes and disks of different types.

Thus, with Data ONTAP Cluster-mode, which, let me remind you, can run on any NetApp controller sold today, you create “virtual storage systems” in much the same way as you create “virtual servers” under a virtualization hypervisor. They are independent of the host hardware and can migrate “live” between physical controllers as your requirements or applications dictate. You can increase cluster performance on the fly by scaling the cluster with new “host” controllers, and do much else that is already familiar to users of VMware vSphere, MS Hyper-V or Citrix XenServer. Only for a storage system.

Judging by how actively NetApp is now publishing on its website Best Practices for using Cluster-mode with databases (including Oracle and MS SQL Server), application systems such as SAP and MS SharePoint, and other “business heavyweights”, the demand for such solutions is now very high.

I think that this year we will see the implementation of systems using Data ONTAP Cluster-mode in Russia.

Source: https://habr.com/ru/post/140907/
