Building a Russian-speaking "analog" of Siri in 7 days

After the release of the iPhone 4S with Siri "on board", owners of other Apple gadgets felt a little deprived. Apple did not include Siri even in its new iPad. Developers around the world have tried to port Siri to other devices or to write similar assistants. Only the Russian-language App Store stayed silent. All the developers are probably very busy, I thought, and decided to correct this unfortunate omission...

DISCLAIMER:

1. The word "analog" is in quotes for a reason. My application is not a real analog of Siri at all, but an amateur hack. I understand perfectly well that creating something truly comparable to Siri takes huge resources and a lot of money.

2. Yes, I know that Apple explains the lack of support on other iPhones by some special noise-canceling chip built into the 4S. I don't really believe it. More likely, their servers can barely handle the load from the 4S alone, and if every Apple gadget were connected to Siri, the servers would simply collapse.

3. The application was created just for fun and did not pursue any practical purpose. Besides, I had my main job to do as well.

Why in 7 days?

I decided from the start not to spend much time on this project, for several reasons. First, I had read many articles saying that Apple does not let Siri-like programs into the App Store and, moreover, tries to remove existing ones, such as Evi. So there is a high probability that my program will not get through either. That is exactly what happened with a rutracker.org client I wrote: I submitted it for review four times and fixed everything the censors pointed out, but the app never made it into the App Store (I then gave up on it and posted a cut-down version at w3bsit3-dns.com so the work would not be lost). Second, I naturally don't have the resources to write a full-fledged program.

Day 1. Design

First I thought through the application's logic. Naturally, all text-to-speech and speech-to-text conversion should be done on a server, and the application itself is just an interface. That way the solution works even on the weakest devices and is cross-platform as well: to port it to Android or Windows Phone, you only need to write an interface for those platforms.

Thus, the application logic is as follows:

A) we record the user's speech and send it to the server for recognition;

B) we get the recognized string back from the server and do some light preprocessing: answer the most frequent questions, cut out profanity and curses, and intercept the keywords for a Yandex search and for the weather forecast. Other commands, such as sending an SMS or checking mail, I decided not to build in yet for fear of failing the review;

C) we send the filtered string to our own server for analysis and get back a line with the answer;

D) we send the answer to the server for conversion to speech, get a link to an mp3 stream, and play the answer.

Yes, this turns out slow, but for now the only alternative is to combine all of this on a server of our own. That is a completely different order of costs: a powerful dedicated server, most likely more than one; buying and licensing speech recognition and text-to-speech engines; and so on. So let's settle on this logic for now.
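The four steps above can be sketched as a single function. This is only an illustration in Python, not the app's actual code: the function name `handle_utterance` and the four callables are hypothetical, and the network calls are replaced with stand-ins so the sketch runs offline.

```python
from typing import Callable


def handle_utterance(
    audio: bytes,
    recognize: Callable[[bytes], str],
    preprocess: Callable[[str], str],
    find_answer: Callable[[str], str],
    synthesize: Callable[[str], str],
) -> str:
    """Run one request through steps A-D and return the mp3 link to play."""
    text = recognize(audio)         # A) speech -> text on the recognition server
    filtered = preprocess(text)     # B) light processing on the phone
    answer = find_answer(filtered)  # C) our own server picks an answer
    return synthesize(answer)       # D) text -> speech, returns a link to the mp3


# Stand-ins for the real servers (all hypothetical).
mp3_url = handle_utterance(
    b"fake-wav-bytes",
    recognize=lambda audio: "  Hello there ",
    preprocess=lambda text: text.strip().lower(),
    find_answer=lambda text: "Hi!" if text == "hello there" else "I don't know.",
    synthesize=lambda answer: "http://example.invalid/tts?text=" + answer,
)
print(mp3_url)
```

Each step is a separate round trip, which is where the slowness mentioned above comes from: three network calls happen before any sound is played.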

Day 2. Searching for engines

I'm looking for engines. This turned out to be no small problem. First, most of them are paid, and not cheap: from $50 per 1000 words. Second, very few recognize Russian speech. Third, the quality of those that do recognize Russian is just awful.

I settled on the ispeech.org engine. First, it does both conversions, speech-to-text and text-to-speech. Second, it has an SDK for the iPhone, and when you use this SDK you get a free key and recognition is free. Naturally, the freebie comes at a price: it is dreadful at recognizing Russian cities. So finding out the weather forecast for a city with a hard-to-pronounce name is unrealistic. With Moscow, no problem.

I study its API and settle on the JSON format. I send the server a key, the language to recognize, service fields such as the audio file format, and the speech itself: a .wav file encoded in base64. The answer also comes back as JSON: an error if something went wrong, or a line of text and the recognition accuracy on success.
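A rough sketch of what building such a request and reading the reply might look like. The field names (`apikey`, `language`, `format`, `audio`, `text`, `confidence`, `error`) are assumptions made for illustration and are not taken from the engine's documentation.

```python
import base64
import json
from typing import Tuple


def build_recognition_request(api_key: str, language: str, wav_bytes: bytes) -> str:
    """Build the JSON body for a recognition request (hypothetical field names)."""
    payload = {
        "apikey": api_key,
        "language": language,
        "format": "wav",
        # Binary audio cannot go into JSON directly, so it is base64-encoded.
        "audio": base64.b64encode(wav_bytes).decode("ascii"),
    }
    return json.dumps(payload)


def parse_recognition_response(body: str) -> Tuple[str, float]:
    """Return (text, confidence), or raise if the server reported an error."""
    reply = json.loads(body)
    if "error" in reply:
        raise RuntimeError(reply["error"])
    return reply["text"], float(reply["confidence"])


request_body = build_recognition_request("demo-key", "ru-RU", b"RIFF")
text, confidence = parse_recognition_response(
    '{"text": "weather in Moscow", "confidence": 0.87}'
)
print(request_body)
print(text, confidence)
```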

The reverse conversion works the same way. I send the server the line to pronounce, the language, and service fields, and get back an mp3 stream, which I play.

Day 3. Starting to write the application. Design

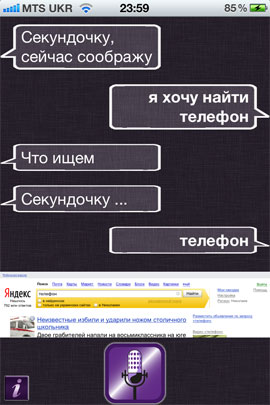

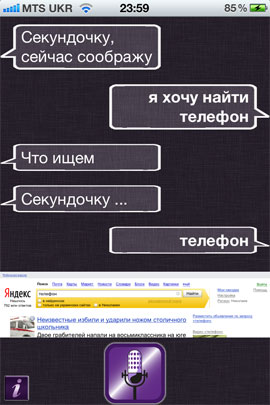

I try to make something similar to Siri without copying it exactly, otherwise the censors will reject it.

Here is what came out.

Well, I'm no designer at all. The whole day is spent.

Day 4. Writing the application logic

Nothing complicated, ordinary HTTP POST requests. I wire in the API. First test. Hooray!!! It works, but not very fast. Over Wi-Fi it is fine, although slower than the real Siri. Over 3G it lags. Over GPRS it is pure torture, and you may never get the answer. I figured out the reason quickly: the WAV file sent to the server is compressed with the ULAW (µ-law) codec, but the sampling rate is 44 kHz. The file comes out huge; for voice it should be squeezed down to 8 kHz. That doesn't work out yet. I make a note that the problem exists, set it aside, and move on. I filter profanity and curses.
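To get a feel for the numbers, here is a back-of-the-envelope sketch. It assumes mono audio, one byte per sample (as µ-law produces), and reads "44 kHz" as the standard 44,100 Hz rate; the helper names are hypothetical. The last function shows one naive way to lower the sampling rate of linear PCM samples by linear interpolation. It is not what the app does, and a real resampler should low-pass filter first to avoid aliasing.

```python
def clip_bytes(seconds, sample_rate, bytes_per_sample=1):
    """Raw size of a mono clip; mu-law stores one byte per sample."""
    return int(seconds * sample_rate * bytes_per_sample)


def base64_bytes(raw_bytes):
    """Size after base64 encoding: every 3 bytes become 4 characters."""
    return 4 * ((raw_bytes + 2) // 3)


def resample_linear(samples, src_rate, dst_rate):
    """Naive resampling of linear PCM samples by linear interpolation."""
    if not samples:
        return []
    n_out = int(len(samples) * dst_rate / src_rate)
    out = []
    for i in range(n_out):
        pos = i * src_rate / dst_rate          # position in the source signal
        left = int(pos)
        right = min(left + 1, len(samples) - 1)
        frac = pos - left
        out.append(samples[left] * (1 - frac) + samples[right] * frac)
    return out


for rate in (44_100, 8_000):
    raw = clip_bytes(5, rate)
    print(rate, "Hz:", raw, "bytes raw,", base64_bytes(raw), "bytes as base64")
```

For a five-second phrase the 8 kHz version is about 5.5 times smaller, and the base64 step adds roughly a third on top of either size.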

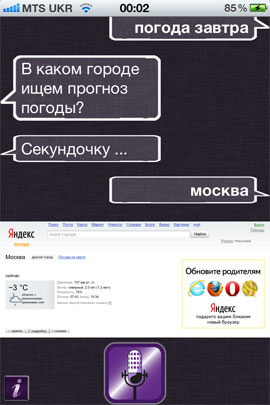

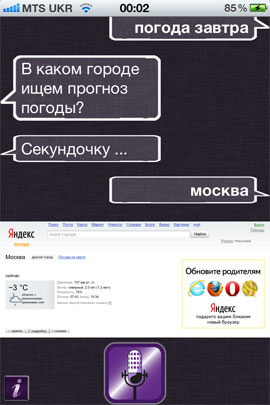

Day 5. Integrating Yandex search and weather. Submitting to the App Store

I pick out keywords like “search”, “look for”, “find”, “weather”, and so on. To be safe, the app has to ask again what exactly we are looking for and which city the forecast is for. It seems to work. It turns out that cities are understood poorly. So much work down the drain, but I decided not to throw the feature out: maybe the engine will eventually learn to understand cities better. I test again and again and again. I'm pleased with the result. I submit the application to the App Store; let it wait for review while I write my server.
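A minimal sketch of this kind of keyword interception, together with the profanity filter from the previous day. The word lists are placeholders in English (the app itself works with Russian phrases), and `detect_command` is a hypothetical name.

```python
import re

SEARCH_WORDS = {"search", "find"}
WEATHER_WORDS = {"weather", "forecast"}
FILLER_WORDS = {"in", "for", "the", "me"}
BLOCKED_WORDS = {"darn"}  # placeholder for the real profanity list


def detect_command(text):
    """Return (kind, argument); kind is 'blocked', 'weather', 'search' or 'chat'."""
    words = re.findall(r"\w+", text.lower())
    if any(word in BLOCKED_WORDS for word in words):
        return ("blocked", "")
    for index, word in enumerate(words):
        if word in WEATHER_WORDS:
            kind = "weather"
        elif word in SEARCH_WORDS:
            kind = "search"
        else:
            continue
        # Everything after the keyword, minus leading filler, is the argument.
        rest = words[index + 1:]
        while rest and rest[0] in FILLER_WORDS:
            rest = rest[1:]
        return (kind, " ".join(rest))
    return ("chat", text)


print(detect_command("What is the weather in Moscow"))
print(detect_command("find"))
print(detect_command("Hello"))
```

An empty argument, as in the second call, is the case where the app would have to ask a follow-up question: what to search for, or which city.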

Day 6. Linguistics and speech analysis. Writing the server

I study the literature on artificial intelligence and speech analysis. I am quietly stunned. I master the basics. For now I decide not to bother with artificial intelligence, but simply to break the sentence into phrases, do a simple analysis, pick out keywords, and look them up in the database.

I sketch out which direction to go. The idea: I compile a knowledge base, and at search time I compare the keywords picked out of the sentence with the base and return the record that matches the question most closely.
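One simple way to express "the record that matches most closely" is to count shared keywords. The sketch below does that in memory; the stop-word list, the sample knowledge base, and the function names are all made up for illustration, and the real server does the lookup in a database instead.

```python
import re

STOP_WORDS = {"a", "the", "is", "are", "you", "what", "do", "to", "of"}


def keywords(sentence):
    """Lowercased words of the sentence, without common stop words."""
    return {w for w in re.findall(r"\w+", sentence.lower()) if w not in STOP_WORDS}


def best_answer(question, knowledge_base, default="I don't know yet."):
    """Return the answer whose stored question shares the most keywords."""
    asked = keywords(question)
    best_score, best = 0, default
    for stored_question, answer in knowledge_base:
        score = len(asked & keywords(stored_question))
        if score > best_score:
            best_score, best = score, answer
    return best


knowledge_base = [
    ("what is your name", "My name is a secret."),
    ("how old are you", "I was written in seven days."),
]
print(best_answer("Tell me your name", knowledge_base))
print(best_answer("weather", knowledge_base))
```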

I find dictionaries for chatbots in open sources. Of course, their quality is not good enough and they will need refining, but they will do for a start.

I write a simple PHP program on my server that finds answers. So that outsiders cannot access the server and bring it down, the phone sends a token that is hard-coded in the application. For now I decided not to bother much with authorization.
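The token check itself can be very small. The server in this article is written in PHP; the sketch below uses Python only for illustration, and the token value and the `token` parameter name are hypothetical.

```python
import hmac

EXPECTED_TOKEN = "demo-token-change-me"  # hypothetical; the same value ships in the app


def is_authorized(request_params):
    """Accept the request only if it carries the shared token."""
    token = str(request_params.get("token", ""))
    # compare_digest takes the same time whether or not the values match.
    return hmac.compare_digest(token.encode("utf-8"), EXPECTED_TOKEN.encode("utf-8"))


print(is_authorized({"token": "demo-token-change-me", "q": "hello"}))
print(is_authorized({"q": "hello"}))
```

A token embedded in the application can be extracted from the binary, so this only keeps out casual visitors, which matches the decision above not to invest in authorization yet.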

I also decided not to send the phone's GPS coordinates to the server, although I like the idea itself. Knowing the phone's coordinates, you can use the API of any weather service to return a forecast. You can also use them to find the nearest bars, cafes, and shops. But again, this needs a resource with a decent API, one where you send a request with coordinates and get a clear answer. I wrote the idea down and put it off until later, in case I write a new version of the application.

All the questions asked and the answers to them are recorded in the database and, by the way, so is the phone’s UDID [IMEI]. Yes, yes, “Big Brother” is watching you (joke). In fact, this is needed to develop the program. Knowing which questions are asked, I can quickly fill in the knowledge base and catch the program's glitches. The UDID is needed for further development: I plan for the program to remember previous questions, so I use the UDID to identify the phone. Knowing the previous questions, you can make the application's behavior even smarter. I wonder, does Siri take previous questions into account when building a dialogue?

Answers are looked up in the knowledge base with the full-text search MATCH ... AGAINST. Ordinary SQL queries, nothing special.
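For readers who have not used it, a query of this kind might look roughly as follows. The table name `knowledge_base` and the columns `question` and `answer` are assumptions, the column needs a FULLTEXT index, and the helper only builds the parameterized query without connecting to a database.

```python
def build_answer_query(keywords):
    """Return (sql, params) for a MySQL full-text lookup of the best answer."""
    sql = (
        "SELECT answer, MATCH(question) AGAINST (%s) AS score "
        "FROM knowledge_base "
        "WHERE MATCH(question) AGAINST (%s) "
        "ORDER BY score DESC LIMIT 1"
    )
    search = " ".join(keywords)
    # The search string is passed as a parameter, never pasted into the SQL.
    return sql, (search, search)


sql, params = build_answer_query(["weather", "moscow"])
print(sql)
print(params)
```

Passing the recognized text as a parameter matters here, because it comes straight from the user's speech and should never be concatenated into the query.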

Day 7. Today

I tested how the knowledge base search works and was pleased. I sat down to write an article for Habr, and my 12-year-old son volunteered to teach the knowledge base.

He found on the Internet which questions people most often ask Siri, and I laughed for a long time. As I write this article, he is putting his understanding of the world "into the head" of the machine: that "VKontakte" is better than "Odnoklassniki", and so on. Of course, I will then check everything he has entered into the database.

Summary

What came out of it.

In seven days it is quite realistic to write a very simple virtual conversation partner that can keep up a conversation and answer some questions. Of course, it is as far from Siri as the Moon, but as a bit of entertainment it is quite suitable. If the censors let the application through, it will land in the "Entertainment" category.

It can easily be ported to Android and Windows Phone.

The disadvantages of the program.

1. Sending speech to the server takes a long time because of the WAV format.

I plan to reduce the sampling rate to 8 kHz, but I don’t know how yet.

2. The engine's speech recognition is not very good, especially for Russian cities.

Maybe I will use the Google engine; it understands speech better. But for it the speech has to be re-encoded to FLAC, which I don’t yet know how to do either. I need to look for a suitable library. And, of course, the question of whether such an approach is clean from a licensing standpoint remains open.

3. Runs slower than Siri.

This can only be solved by buying a speech recognition engine and installing it on your own dedicated server. I'm not sure I will go that way; it is very expensive.

4. It cannot do much of what Siri can.

Well, this problem is solved by releasing updates and growing the knowledge base. It is just a matter of time and the funds allocated for it.

If I missed anything, I am ready to answer in the comments.

UPD: At the request of Habr readers, and to leave nothing unsaid, I added a video.

www.youtube.com/watch?v=UzFGgH741Cw

UPD2: Added another video.

www.youtube.com/watch?v=LVlllVSyln8

UPD3: The pre-release version is here

www.youtube.com/watch?v=JlkJva-TGfY

Source: https://habr.com/ru/post/140206/