Balancing static files with nginx

Imagine an application or website under a fairly heavy load.

Many developers of apps for VK or Odnoklassniki have faced this situation: the app reaches the top of the new-apps chart, and a huge load suddenly falls on it.

Suppose an image is generated while a client is accessing the server. We have many servers. How do we serve that image to the client if there is no shared file system and files are not synchronized between the servers?

What do you do when a large number of people hit the server every second? The answer is simple: nginx.


In this post I will cover balancing both static and dynamic content.

One caveat up front: balancing requests to MySQL is out of scope here.

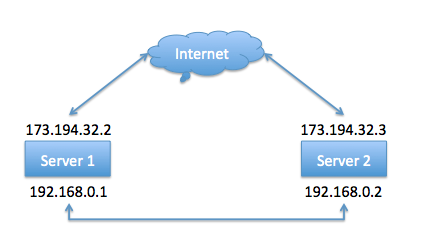

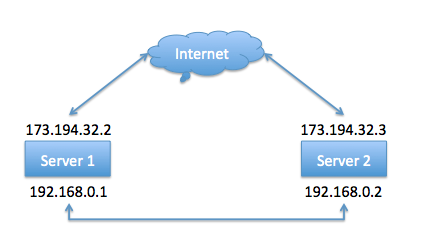

Roughly speaking, we have several servers with the same set of PHP scripts deployed and php-fpm installed and configured. Both servers answer client requests, and the DNS records for your domain point to both addresses, 173.194.32.2 and 173.194.32.3.

So let's go.

Server settings

Server 1:

External IP Address: 173.194.32.2

Internal IP Address: 192.168.0.1

Server 2:

External IP Address: 173.194.32.3

Internal IP Address: 192.168.0.2

The scheme of our network will look like this:

Nginx configuration for Server 1:

```nginx
upstream nextserver {
    server 192.168.0.2;
}

upstream backend {
    server 127.0.0.1;
}

server {
    listen *:80;
    server_name 173.194.32.2;

    location / {
        root /var/www/default;
        # Static files are served without writing an access log
        access_log off;
        try_files $uri $uri/ @nextserver;
    }

    location @nextserver {
        proxy_pass http://nextserver;
        proxy_connect_timeout 70;
        proxy_send_timeout 90;
        proxy_read_timeout 90;
    }

    location ~* \.(php5|php|phtml)$ {
        proxy_pass http://backend;
    }
}
```

Let's look at this config in more detail.

First, we define the servers among which clients will be distributed.

upstream nextserver

nextserver is the list of servers that serve static files:

```nginx
upstream nextserver {
    server 192.168.0.2;
}
```

upstream backend

backend is the list of servers that process dynamic content (for example, PHP):

```nginx
upstream backend {
    server 127.0.0.1;
}
```

By the way, if requests from a given client must always reach the same server, add the ip_hash directive to the upstream section.
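For illustration, an upstream with ip_hash might look like the sketch below. The two addresses are hypothetical dedicated PHP servers. The article's own backend contains only 127.0.0.1, and with a single server ip_hash would make no difference. Without ip_hash, nginx distributes requests across the listed servers in round-robin order.

```nginx
# Hypothetical pool of two dedicated PHP servers; the addresses are made up.
upstream backend {
    # Requests from the same client IP always go to the same server.
    ip_hash;
    server 192.168.0.10;
    server 192.168.0.11;
}
```

For IPv4 clients, nginx hashes only the first three octets of the address. As a result, all clients from the same /24 network land on the same server.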

location /

A standard directive: root sets the server's document root.

```nginx
root /var/www/default;
```

Next, turn off access_log. Under heavy load, writing the access log first puts additional load on the disks, and second, quickly fills the disk up.

```nginx
access_log off;
```

The most interesting part happens in this line :) nginx checks whether the requested file exists, then whether a directory with that name exists. If it finds one, nginx serves it to the client. If neither the file nor the directory exists, the request is internally redirected to the named location @nextserver.

```nginx
try_files $uri $uri/ @nextserver;
```

location @nextserver

It's simple. When a request ends up in this location, the proxy_pass directive forwards it to the upstream nextserver, which in this case is 192.168.0.2. The upstream section can contain more than one server, so requests can be spread across several servers if you wish.

With two servers, nginx alternates between them by default (round robin): the first request goes to the first server, the second request goes to the second, and so on.

This location also sets proxy timeouts, in seconds. proxy_connect_timeout limits how long nginx waits to establish a connection with the upstream server. proxy_send_timeout limits the pause between two successive writes to it, and proxy_read_timeout limits the pause between two successive reads from it.

```nginx
proxy_pass http://nextserver;
proxy_connect_timeout 70;
proxy_send_timeout 90;
proxy_read_timeout 90;
```

location ~* \.(php5|php|phtml)$

Requests for files with the php5, php, or phtml extension are passed to the handler, in this case the upstream backend:

```nginx
proxy_pass http://backend;
```

All that remains is to add one more server section, this time listening on 127.0.0.1.

This address will accept those connections and hand them to php-fpm for processing.

This server section is written just like the one above, with one exception: replace the location ~* \.(php5|php|phtml)$ section with the following:

```nginx
location ~* \.(php5|php|phtml)$ {
    # Hand PHP scripts to php-fpm over its unix socket
    fastcgi_pass unix:/tmp/php-fpm.sock;
    fastcgi_index index.php;
    fastcgi_param SCRIPT_FILENAME /var/www/default$fastcgi_script_name;
    include fastcgi_params;
}
```

This section assumes that the php-fpm daemon is installed, running, and listening on the unix socket /tmp/php-fpm.sock.
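The complete loopback server section is not shown above. A minimal sketch might look like this. It assumes the section should accept the PHP requests that upstream backend sends to 127.0.0.1 (port 80, since no port is given there) and pass them to php-fpm.

The text describes a copy of the main server section with only the PHP location changed. This sketch keeps just that location, because PHP requests are the only kind the main server forwards to 127.0.0.1.

```nginx
# Sketch of the loopback server that receives PHP requests from
# "upstream backend" (server 127.0.0.1, i.e. port 80 by default).
server {
    # A specific address takes precedence over the "listen *:80" of the
    # main server block for connections arriving on 127.0.0.1.
    listen 127.0.0.1:80;
    server_name 127.0.0.1;

    location ~* \.(php5|php|phtml)$ {
        # Same php-fpm socket and script path as in the location above
        fastcgi_pass unix:/tmp/php-fpm.sock;
        fastcgi_index index.php;
        fastcgi_param SCRIPT_FILENAME /var/www/default$fastcgi_script_name;
        include fastcgi_params;
    }
}
```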

The second server is configured the same way as the first. In the upstream nextserver section, change the address to 192.168.0.1 (that is, tell this server to send requests for missing files to Server 1).

We also change the server_name directive.

The configuration of Server 2 should look like this:

```nginx
upstream nextserver {
    server 192.168.0.1;
}

upstream backend {
    server 127.0.0.1;
}

server {
    listen *:80;
    server_name 173.194.32.3;

    location / {
        root /var/www/default;
        # Static files are served without writing an access log
        access_log off;
        try_files $uri $uri/ @nextserver;
    }

    location @nextserver {
        proxy_pass http://nextserver;
        proxy_connect_timeout 70;
        proxy_send_timeout 90;
        proxy_read_timeout 90;
    }

    location ~* \.(php5|php|phtml)$ {
        proxy_pass http://backend;
    }
}
```

Thus, if a client requests an image that does not exist on one server, nginx will try to fetch it from the other server.

The only problem is this: if the image exists on neither server, the request bounces back and forth between the two servers until a timeout is reached. As a result, a 503 error is returned.

The nginx configuration in this article is simplified and can be improved, including fixing the loop that occurs when the requested file is missing. But that is a separate topic.
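One possible way to break the loop is sketched here under assumptions.

1. The forwarding server tags the proxied request with a custom header. The name X-Static-Hop is made up for this example.
2. A server that receives an already tagged request for a missing file answers 404 instead of forwarding it again.

The fragment would replace location @nextserver on both servers. Treat it as a starting point rather than a drop-in fix.

```nginx
# Hypothetical loop guard; X-Static-Hop is an arbitrary header name.
location @nextserver {
    # The request was already forwarded by the other server and the file
    # is missing here too: answer 404 instead of sending it back.
    if ($http_x_static_hop) {
        return 404;
    }

    # Tag the request so the receiving server knows it is a second hop.
    proxy_set_header X-Static-Hop 1;

    proxy_pass http://nextserver;
    proxy_connect_timeout 70;
    proxy_send_timeout 90;
    proxy_read_timeout 90;
}
```

With this guard, a file missing on both servers costs one extra hop and ends in a 404 rather than a timeout. A client could send the header itself, but that only disables the fallback for its own requests.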

Source: https://habr.com/ru/post/140068/
