2D -> 3D in Augmented Reality

In this article I will explain how to build a 3D space from the location of an object found in the scene, for augmented reality applications. To do this, you need two matrices, the projection matrix (GL_PROJECTION) and the model matrix (GL_MODELVIEW), for use in OpenGL, for example. We will obtain them with the OpenCV library.

Recently I had to solve this problem, but I couldn't find a resource that explained how to do it step by step (maybe I was searching poorly), and the problem has plenty of pitfalls. In any case, an article on Habr describing this task won't hurt.

Introduction

I am an iOS developer, and in my spare time I am developing my own augmented reality engine. As its foundation I took OpenCV, an open-source computer vision library.


In general, the most interesting offering for mobile devices (iOS / Android) in this area comes from Qualcomm.

They recently released their own AR SDK called Vuforia. As its Licensing section proudly declares, the SDK is free both for development and for publishing apps in the stores (App Store, Android Market). At the same time, they state that you must notify end users that the SDK may collect some anonymous information and send it to Qualcomm's servers. You can find this section by choosing Getting Started SDK -> Step 3: Compiling & Running ... -> Publish Your Application in the right-hand menu. On top of that, call me paranoid, but I am 90% sure that once their SDK reaches a certain level of popularity, they will say: "That's it, the freebie is over, pay up."

Therefore, I don't consider developing my own engine a waste of time.

Now, to the point!

Theory

We assume that by now you have integrated OpenCV into your project and have already written a method that recognizes an object in the frame coming from the camera, so you already know where the object is in each frame.

The theory behind this can be found in many sources; I have listed the main links below. A good starting point is the OpenCV documentation page, although it will leave you with plenty of questions.

In a nutshell, to build a 3D space from the found 2D homography, we need to know 2 matrices:

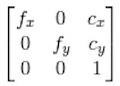

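- The intrinsic matrix, or camera matrix: it consists of camera parameters, namely the focal lengths along the two axes (fx, fy) and the coordinates of the principal point (cx, cy), the point where the optical axis meets the image.

The structure of this matrix:

$$A = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}$$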

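- The extrinsic matrix, or model matrix, describes the rotation and translation of the model. It uniquely defines the position of the object in space. Structure:

$$[R\,|\,t] = \begin{bmatrix} r_{11} & r_{12} & r_{13} & t_1 \\ r_{21} & r_{22} & r_{23} & t_2 \\ r_{31} & r_{32} & r_{33} & t_3 \end{bmatrix}$$

where the 3x3 block of elements r is responsible for rotation and the elements t for translation. (In a general linear transform this block can also carry scaling, which for a pure scale sits on the diagonal; the pose that OpenCV returns is a pure rotation.)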
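To make the roles of the two matrices concrete, here is a minimal hand-written sketch of the projection s·m = A·[R|t]·M for a single point, with A and [R|t] stored as row-major arrays. The function name `project_point` is hypothetical; this is an illustration of the pinhole model, not code from the engine.

```c
/* Pinhole projection s*m = A*[R|t]*M for one 3D point M.
 * A:  3x3 intrinsic matrix, row-major.
 * Rt: 3x4 extrinsic matrix [R|t], row-major.
 * Writes the resulting pixel coordinates to *u, *v. */
void project_point(const double A[9], const double Rt[12],
                   const double M[3], double *u, double *v)
{
    /* Camera coordinates: Xc = R*M + t */
    double Xc[3];
    for (int r = 0; r < 3; r++) {
        Xc[r] = Rt[r * 4 + 0] * M[0] + Rt[r * 4 + 1] * M[1]
              + Rt[r * 4 + 2] * M[2] + Rt[r * 4 + 3];
    }
    /* Apply the intrinsics, then divide by the scale s (the depth) */
    double x = A[0] * Xc[0] + A[1] * Xc[1] + A[2] * Xc[2];
    double y = A[3] * Xc[0] + A[4] * Xc[1] + A[5] * Xc[2];
    double s = A[6] * Xc[0] + A[7] * Xc[1] + A[8] * Xc[2];
    *u = x / s;
    *v = y / s;
}
```

For example, with fx = fy ≈ 624.86, the principal point at (320, 240), an identity rotation and t = (0, 0, 5), the point (1, 0, 0) lands about 125 pixels to the right of the image centre.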

Note: in general, the structure of this matrix may vary depending on the model (the coordinate transformation equations). For example, OpenGL stores the matrix in a slightly different layout, but the key elements are always present in it.
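Much of that layout difference is storage order: glLoadMatrixf takes 16 floats in column-major order, so the element in row i, column j sits at index 4j + i. A minimal sketch that packs a row-major 3x4 [R|t] into that layout (the helper name is hypothetical, and it performs no axis conversion between OpenCV and OpenGL):

```c
/* Pack a row-major 3x4 [R|t] into a 4x4 column-major array, the layout
 * expected by glLoadMatrixf. Element (row, col) goes to index col*4 + row. */
void rt_to_gl_column_major(const float Rt[12], float gl[16])
{
    for (int col = 0; col < 4; col++) {
        for (int row = 0; row < 3; row++) {
            gl[col * 4 + row] = Rt[row * 4 + col];
        }
        /* Bottom row of a rigid transform is (0, 0, 0, 1) */
        gl[col * 4 + 3] = (col == 3) ? 1.0f : 0.0f;
    }
}
```

The translation ends up in elements 12, 13 and 14, which is where the model-view code later in the article puts it.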

Practice

In practice, for OpenGL to render a 3D model on top of our object, we need to give it:

- the projection matrix, GL_PROJECTION;

- the model matrix, GL_MODELVIEW.

Note: On iOS you can use two versions of OpenGL ES, 1.1 and 2.0. The main difference is that the second version has shaders. In both cases we need to specify the 2 matrices; in the first case they are set with code like this:

```objectivec
glMatrixMode(GL_PROJECTION);
glLoadMatrixf(projectionMatrix);
glMatrixMode(GL_MODELVIEW);
glLoadMatrixf(modelViewMatrix);
```

In the second case, you pass them to the shaders.
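For the OpenGL ES 2.0 path, one common option (an assumption, not something the article describes) is to multiply the two matrices on the CPU and upload the product once with glUniformMatrix4fv. Both matrices are column-major, so the product must be computed in that layout. A minimal sketch with a hypothetical helper name:

```c
/* out = a * b for 4x4 matrices stored column-major (OpenGL layout):
 * element (row, col) lives at index col*4 + row.
 * out must not point to the same memory as a or b. */
void mat4_mul(const float a[16], const float b[16], float out[16])
{
    for (int col = 0; col < 4; col++) {
        for (int row = 0; row < 4; row++) {
            float sum = 0.0f;
            for (int k = 0; k < 4; k++) {
                sum += a[k * 4 + row] * b[col * 4 + k];
            }
            out[col * 4 + row] = sum;
        }
    }
}
```

Calling `mat4_mul(projectionMatrix, modelViewMatrix, mvp)` gives P·MV, which the vertex shader can apply as `gl_Position = mvp * position` (`mvp` and `position` being hypothetical shader variable names).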

Next, let's denote the size of the frame you get from the camera as cameraSize = (width, height). In my case, cameraSize = (640, 480).

Let's look at how to build each matrix.

Projection matrix

This matrix is built from the camera matrix, which, as shown above, consists of certain camera parameters. In theory, these parameters could be calculated from the camera's technical specifications, but in practice nobody does that.

The process of finding the camera parameters is called calibration. OpenCV provides all the functions needed for it, and there is even an example that lets you calibrate your webcam live. But we need to calibrate the camera of the device itself (iPhone / iPad / iPod), and for that I followed the approach described here.

We will calibrate the camera "offline": we take pictures of the calibration pattern (a chessboard) with the device's camera, transfer the photos to a computer, and compute the parameters from them. A few points:

- Print the checkerboard pattern on A4 paper. The sheet must be clean, and it is very desirable that the print quality is good.

- Keep the chessboard sheet flat in the pictures, with no bent edges or folds. To do this, you can glue it to a piece of cardboard or simply put heavy objects on the edges, making sure they don't cover the pattern.

- Images from the camera need to be resized to cameraSize. This step is not obvious: for example, on the iPhone 4 I use a 640x480 camera frame for recognition, but when you take a photo with the regular Camera app and copy it to a computer, you get larger photos (2592x1936). I downscaled them to 640x480 and used those in the program.

- Take at least 12-16 snapshots of the pattern from different angles. I used 16 shots; the parameters computed from 12 and from 16 shots differed, not by much, but noticeably. In general, the more pictures, the more accurate the parameters, and this accuracy later determines whether 3D objects appear shifted relative to the object.

- Besides the camera matrix, this step also produces the distortion coefficients. In a nutshell, they describe how the camera lens distorts the image. More details can be found in the OpenCV documentation and on Wikipedia. Most mobile device cameras are of sufficient quality that these coefficients are relatively small, so they can be ignored.
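To get a feel for what the five distortion coefficients do, here is a sketch of the lens model OpenCV uses for them, in its coefficient order (k1, k2, p1, p2, k3): three radial terms and two tangential ones, applied to normalized camera coordinates x = X/Z, y = Y/Z. The helper name is hypothetical; OpenCV applies this model internally, and the sketch only lets you estimate how far a point moves.

```c
/* Apply the 5-coefficient lens model (k1, k2, p1, p2, k3) to a point in
 * normalized camera coordinates. The distorted pixel is then
 * u = fx * xd + cx, v = fy * yd + cy. */
void distort_normalized(const double d[5], double x, double y,
                        double *xd, double *yd)
{
    double k1 = d[0], k2 = d[1], p1 = d[2], p2 = d[3], k3 = d[4];
    double r2 = x * x + y * y;
    /* Radial part: 1 + k1*r^2 + k2*r^4 + k3*r^6 */
    double radial = 1.0 + k1 * r2 + k2 * r2 * r2 + k3 * r2 * r2 * r2;
    /* Tangential part uses p1 and p2 */
    *xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x);
    *yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y;
}
```

With the iPhone 4 coefficients, a point at normalized radius 0.1 moves by well under 1% of its distance from the centre, which fits the observation that these terms are small.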

You can download a draft Xcode program for calibration here. It requires a compiled OpenCV; alternatively, you can download a precompiled framework from here.

Put the photos in the folder with the compiled binary and rename them sequentially.

If everything works, the program will output 2 files:

- Intrinsics.xml: the 3x3 camera matrix, written row by row;

- Distortion.xml: the computed distortion coefficients.

If the pattern is not found in some pictures, try replacing them with others taken in better lighting, perhaps at a less acute angle to the pattern. OpenCV should easily find all the inner corners of the pattern.

With the numbers from these files, we can build a projection matrix for OpenGL.

```objectivec
// Camera matrix (row-major) measured for the iPhone 4, with (cx, cy) replaced by
// the centre of the 640x480 frame. Literal values are used because a file-scope
// C array needs constant initializers.
float cameraMatrix[9] = {6.24860291e+02, 0., 320.0f /* cameraSize.width * 0.5 */,
                         0., 6.24860291e+02, 240.0f /* cameraSize.height * 0.5 */,
                         0., 0., 1.};

// projectionMatrix is a GLfloat[16] instance variable, stored column-major
- (void)buildProjectionMatrix
{
    // Camera parameters
    double f_x = cameraMatrix[0]; // Focal length along the x axis
    double f_y = cameraMatrix[4]; // Focal length along the y axis (usually close to f_x)
    double c_x = cameraMatrix[2]; // Principal point x
    double c_y = cameraMatrix[5]; // Principal point y
    double screen_width = cameraSize.width;   // In pixels
    double screen_height = cameraSize.height; // In pixels
    double near = 0.1;  // Near clipping distance
    double far = 1000;  // Far clipping distance

    projectionMatrix[0] = 2.0 * f_x / screen_width;
    projectionMatrix[1] = 0.0;
    projectionMatrix[2] = 0.0;
    projectionMatrix[3] = 0.0;

    projectionMatrix[4] = 0.0;
    projectionMatrix[5] = 2.0 * f_y / screen_height;
    projectionMatrix[6] = 0.0;
    projectionMatrix[7] = 0.0;

    projectionMatrix[8] = 2.0 * c_x / screen_width - 1.0;
    projectionMatrix[9] = 2.0 * c_y / screen_height - 1.0;
    projectionMatrix[10] = -(far + near) / (far - near);
    projectionMatrix[11] = -1.0;

    projectionMatrix[12] = 0.0;
    projectionMatrix[13] = 0.0;
    projectionMatrix[14] = -2.0 * far * near / (far - near);
    projectionMatrix[15] = 0.0;
}
```

A few notes:

- We replace the coefficients (cx, cy) of the camera matrix with the center of our frame. Then the 3D model is not offset relative to the object in the frame. The author of this post came to the same conclusion (see UPDATE: at the end of the article).

- I took the formulas for the projection matrix from here. Essentially, this sets up a perspective projection that takes into account our camera parameters and the frame size.

- The camera matrix shown above is the one I obtained for the iPhone 4. For other devices it will differ, though, I think, not by much.
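One way to sanity-check the projection matrix is to push a point through it and compare the result with the pinhole formula u = fx·x/d + cx, where d = -z is the depth (the OpenGL camera looks down -z). Below is a plain-C sketch of the same formulas as buildProjectionMatrix, plus a helper that goes from eye space through clip space and NDC to window pixels. Both function names are hypothetical; the pixel origin here is OpenGL's bottom-left corner, whereas image coordinates usually start at the top-left.

```c
/* Same formulas as buildProjectionMatrix, written as a plain C function.
 * P is column-major, as glLoadMatrixf expects. */
void build_projection(double fx, double fy, double cx, double cy,
                      double w, double h, double near_z, double far_z,
                      float P[16])
{
    for (int i = 0; i < 16; i++) P[i] = 0.0f;
    P[0]  = (float)(2.0 * fx / w);
    P[5]  = (float)(2.0 * fy / h);
    P[8]  = (float)(2.0 * cx / w - 1.0);
    P[9]  = (float)(2.0 * cy / h - 1.0);
    P[10] = (float)(-(far_z + near_z) / (far_z - near_z));
    P[11] = -1.0f;
    P[14] = (float)(-2.0 * far_z * near_z / (far_z - near_z));
}

/* Eye-space point -> clip space -> NDC -> window pixels
 * (viewport of w x h pixels, origin at the bottom-left corner). */
void eye_to_pixel(const float P[16], double x, double y, double z,
                  double w, double h, double *px, double *py)
{
    double xc = P[0] * x + P[4] * y + P[8] * z + P[12];
    double yc = P[1] * x + P[5] * y + P[9] * z + P[13];
    double wc = P[3] * x + P[7] * y + P[11] * z + P[15];
    *px = (xc / wc + 1.0) * 0.5 * w;
    *py = (yc / wc + 1.0) * 0.5 * h;
}
```

With cx = w/2 and cy = h/2, elements 8 and 9 become zero and the pixel coordinates match the pinhole model exactly (up to float rounding).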

Model matrix

When building this matrix, this StackOverflow question helped me.

Fortunately, the necessary functions are already implemented in OpenCV.

So, the code:

```objectivec
// Same camera matrix as above, plus the distortion coefficients from Distortion.xml
float cameraMatrix[9] = {6.24860291e+02, 0., 320.0f /* cameraSize.width * 0.5 */,
                         0., 6.24860291e+02, 240.0f /* cameraSize.height * 0.5 */,
                         0., 0., 1.};
float distCoeff[5] = {1.61426172e-01, -5.95113218e-01, 7.10574386e-04, -1.91498715e-02, 1.66041708e+00};

// modelViewMatrix is a GLfloat[16] instance variable (column-major);
// startTimer / printTimerWithPrefix are the author's own timing helpers.
- (void)buildModelViewMatrixUseOld:(BOOL)useOld
{
    clock_t timer;
    startTimer(&timer);

    CvMat cvCameraMatrix = cvMat(3, 3, CV_32FC1, (void*)cameraMatrix);
    CvMat cvDistortionMatrix = cvMat(1, 5, CV_32FC1, (void*)distCoeff);
    CvMat* objectPoints = cvCreateMat(4, 3, CV_32FC1);
    CvMat* imagePoints = cvCreateMat(4, 2, CV_32FC1);

    // Defining object points and image points
    int minDimension = MIN(detector->modelWidth, detector->modelHeight) * 0.5f;
    for (int i = 0; i < 4; i++) {
        float objectX = (detector->x_corner[i] - detector->modelWidth / 2.0f) / minDimension;
        float objectY = (detector->y_corner[i] - detector->modelHeight / 2.0f) / minDimension;
        cvmSet(objectPoints, i, 0, objectX);
        cvmSet(objectPoints, i, 1, objectY);
        cvmSet(objectPoints, i, 2, 0.0f);

        cvmSet(imagePoints, i, 0, detector->detected_x_corner[i]);
        cvmSet(imagePoints, i, 1, detector->detected_y_corner[i]);
    }

    CvMat* rvec = cvCreateMat(1, 3, CV_32FC1);
    CvMat* tvec = cvCreateMat(1, 3, CV_32FC1);
    CvMat* rotMat = cvCreateMat(3, 3, CV_32FC1);

    cvFindExtrinsicCameraParams2(objectPoints, imagePoints, &cvCameraMatrix,
                                 &cvDistortionMatrix, rvec, tvec);

    // Convert it: flip the 2nd and 3rd rotation-vector components, then get the matrix
    CV_MAT_ELEM(*rvec, float, 0, 1) *= -1.0;
    CV_MAT_ELEM(*rvec, float, 0, 2) *= -1.0;
    cvRodrigues2(rvec, rotMat);

    // Column-major (OpenGL) layout: R transposed, translation in the last four elements
    GLfloat RTMat[16] = {cvmGet(rotMat, 0, 0), cvmGet(rotMat, 1, 0), cvmGet(rotMat, 2, 0), 0.0f,
                         cvmGet(rotMat, 0, 1), cvmGet(rotMat, 1, 1), cvmGet(rotMat, 2, 1), 0.0f,
                         cvmGet(rotMat, 0, 2), cvmGet(rotMat, 1, 2), cvmGet(rotMat, 2, 2), 0.0f,
                         cvmGet(tvec, 0, 0), -cvmGet(tvec, 0, 1), -cvmGet(tvec, 0, 2), 1.0f};

    // Store the result in the matrix passed to glLoadMatrixf
    for (int i = 0; i < 16; i++) {
        modelViewMatrix[i] = RTMat[i];
    }

    cvReleaseMat(&objectPoints);
    cvReleaseMat(&imagePoints);
    cvReleaseMat(&rvec);
    cvReleaseMat(&tvec);
    cvReleaseMat(&rotMat);

    printTimerWithPrefix((char*)"ModelView matrix computation", timer);
}
```

First we need to define 4 pairs of object points and their corresponding positions in the frame.

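The points in the frame are the vertices of the quadrilateral bounding the object in the frame. To obtain them from the homography H, simply apply it to the corner points of the pattern and divide by the third coordinate:

$$\begin{bmatrix} x' \\ y' \\ w' \end{bmatrix} = H \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}, \qquad (u, v) = \left(\frac{x'}{w'}, \frac{y'}{w'}\right),$$

where (x, y) is a corner of the pattern and (u, v) the corresponding point in the frame.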
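A sketch of that step in plain C: each corner of a reference pattern of pw x ph pixels is multiplied by the row-major 3x3 homography and then divided by the third coordinate. The corner order (top-left, top-right, bottom-right, bottom-left) and the function name are assumptions; whatever order you use must match the order of the object points.

```c
/* Map the four corners of a pw x ph pattern through the homography H
 * (row-major 3x3) into frame coordinates, with the perspective divide. */
void project_corners(const double H[9], double pw, double ph,
                     double out_x[4], double out_y[4])
{
    /* Corner order: top-left, top-right, bottom-right, bottom-left */
    const double px[4] = {0.0, pw, pw, 0.0};
    const double py[4] = {0.0, 0.0, ph, ph};
    for (int i = 0; i < 4; i++) {
        double x = H[0] * px[i] + H[1] * py[i] + H[2];
        double y = H[3] * px[i] + H[4] * py[i] + H[5];
        double w = H[6] * px[i] + H[7] * py[i] + H[8];
        out_x[i] = x / w;
        out_y[i] = y / w;
    }
}
```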

As for the points of the object itself, there are a couple of things to note:

- Object points are defined in 3D, while points in the frame are 2D. Accordingly, if we give the object points a non-zero z, the origin along z will be shifted away from the object's plane in the frame. It is easier to understand from two pictures:

[Figures: z = 1.0 and z = 0.0]

- We also define the object points so that we can keep working in this 3D space later. For example, I want the origin to be right at the center of the pattern and the unit of length to be half of its smaller side (minDimension in the code). This way we don't depend on the pattern's size in pixels, and the 3D space is scaled by its smaller side.
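The normalization from the loop in buildModelViewMatrixUseOld, pulled out into a standalone C function (the name is hypothetical). One small difference: min_dim is kept as a float here, whereas the listing stores minDimension in an int, which truncates when the smaller side is odd.

```c
/* Object points: origin at the pattern centre, unit length = half of the
 * smaller side, all points in the z = 0 plane of the pattern. */
void make_object_points(const float corner_x[4], const float corner_y[4],
                        float model_w, float model_h, float obj[4][3])
{
    float min_dim = (model_w < model_h ? model_w : model_h) * 0.5f;
    for (int i = 0; i < 4; i++) {
        obj[i][0] = (corner_x[i] - model_w / 2.0f) / min_dim;
        obj[i][1] = (corner_y[i] - model_h / 2.0f) / min_dim;
        obj[i][2] = 0.0f;
    }
}
```

For a 640x480 pattern, the corners map to x = ±1.333 and y = ±1, so the shorter side spans exactly two units.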

We pass the constructed matrices to the cvFindExtrinsicCameraParams2 function, which returns a rotation vector and a translation vector. From the rotation vector we need the rotation matrix; we get it with cvRodrigues2, after first modifying the rotation vector slightly by multiplying its second and third elements by -1 (the second and third components of the translation vector are negated as well when the matrix is filled in). After that, all that is left is to store the data in the model matrix for OpenGL. Note that the matrix must be transposed, because OpenGL stores matrices in column-major order.

That's it: we release the temporary objects, and the model matrix is ready.

Summary

With procedures for building both matrices, we can safely create a GLView and draw models in it. Note that computing the model matrix takes no more than 10 milliseconds on the iPhone 4, so it will not noticeably lower your recognition FPS.

Thanks for your attention.

Learn more:

1. http://old.uvr.gist.ac.kr/wlee/web/techReports/ar/Camera%20Models.html

2. http://www.hitl.washington.edu/artoolkit/mail-archive/message-thread-00653-Re--Questions-concering-.html

3. http://sightations.wordpress.com/2010/08/03/simulating-calibrated-cameras-in-opengl/

4. http://www.songho.ca/opengl/gl_projectionmatrix.html

Source: https://habr.com/ru/post/139429/
