MS Windows Server 2003, failover cluster

Introduction

We got hold of a real dinosaur: an HP ProLiant DL380 G4 Packaged Cluster with MSA500 G2. This is a turnkey solution from Hewlett Packard, two servers with external storage, which means building a cluster. A cluster it is, then; no sooner said than done. We had no previous experience building such systems, so I will try to describe the process in as much detail as possible, including all the mistakes we made.

A bit of theory

So why do we need a cluster? There are roughly two options: a fault-tolerant system or a load-balancing system. In my case it is a fault-tolerant system: initially one server is running; if a software or hardware failure occurs, the second server takes over, and the service installed on these servers resumes fairly quickly. I will not go into the details of the service all this is built for; as an example, let it be a program that pings something and creates a report about it.

To build a cluster, several fundamental conditions must be met. The cluster nodes need compatible hardware (no problem here, since our servers are identical), compatible software, and shared disk space. The cluster nodes must also be in the same domain. Since the system will be standalone in our technological network, we will have to create a domain as well. Ideally, the domain controller should be a separate server, and since the system must be fault tolerant, there should be two domain controllers. Lacking such servers, we will make the cluster nodes themselves the domain controllers. Active Directory services cannot be clustered, but so be it: the cluster is one thing, and the domain controllers are another.

Disk array

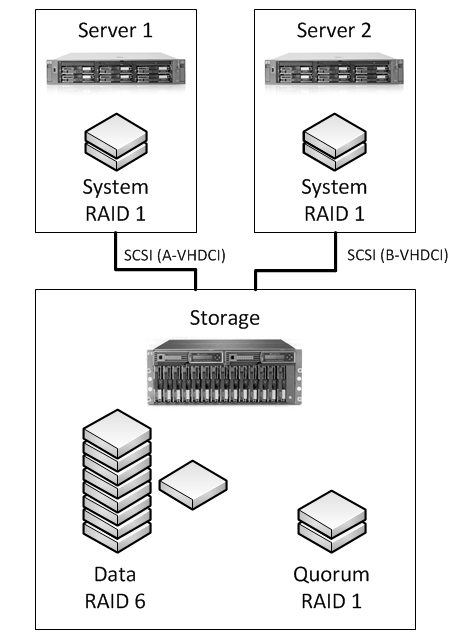

Let's start with preparing the hard drives. The HP StorageWorks 500 G2 Modular Smart Array disk array came with nine 146 GB SCSI disks and two 34 GB ones. It is connected to the servers via SCSI and managed with the RAID controller utility built into the servers themselves. For the shared disk space we will use the 146 GB disks, combined into a RAID array. Of the possible options we chose RAID 6: compared with RAID 5 it performs somewhat worse, which is not critical for us, but it is more reliable. The cluster also needs a service partition for cluster monitoring, the quorum. It needs at least 50 MB of disk space, so for the quorum we will use a mirror (RAID 1) of the two remaining 34 GB disks; that is far more than needed, but we have no smaller disks. Each server also has two internal SCSI disks, likewise in RAID 1, which will hold the server operating systems.


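So we have three RAID 1 arrays and one RAID 6 array. The RAID 6 array uses only eight of the nine 146 GB disks, with the ninth kept as a spare, so in theory the array keeps working even if three disks fail, provided the spare has finished rebuilding before the third failure.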
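
The usable capacity of this layout can be checked with a little arithmetic. The sketch below is only an illustration: the function name is made up, and it ignores controller overhead and the difference between decimal and binary gigabytes.

```python
def usable_capacity_gb(level: int, disks: int, disk_gb: int) -> int:
    """Approximate usable capacity of one RAID array (hypothetical helper)."""
    if level == 1:
        if disks != 2:
            raise ValueError("this sketch models RAID 1 as a two-disk mirror")
        return disk_gb                      # one disk's worth, the other is a copy
    if level == 5:
        if disks < 3:
            raise ValueError("RAID 5 needs at least three disks")
        return (disks - 1) * disk_gb        # one disk's worth of parity
    if level == 6:
        if disks < 4:
            raise ValueError("RAID 6 needs at least four disks")
        return (disks - 2) * disk_gb        # two disks' worth of parity
    raise ValueError(f"RAID level {level} is not modelled here")


# Eight 146 GB disks in RAID 6 (the ninth disk is the spare and adds no capacity).
print(usable_capacity_gb(6, 8, 146))   # 876
# Two 34 GB disks mirrored for the quorum.
print(usable_capacity_gb(1, 2, 34))    # 34
```

For comparison, the same eight disks in RAID 5 would give 1022 GB, so the second parity disk costs 146 GB of capacity.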

OS and Active Directory

We initially installed Windows Server 2008, but it later turned out that the HP ProLiant DL380 G4 Packaged Cluster uses parallel SCSI, and support for parallel SCSI as a shared storage type was removed from failover clustering in Windows Server 2008. We had to use Windows Server 2003, so Hyper-V is out of the question. Note also that you need Windows Server 2003 Enterprise or Datacenter Edition, since the Standard and Web Editions do not support clustering.

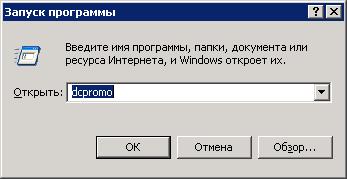

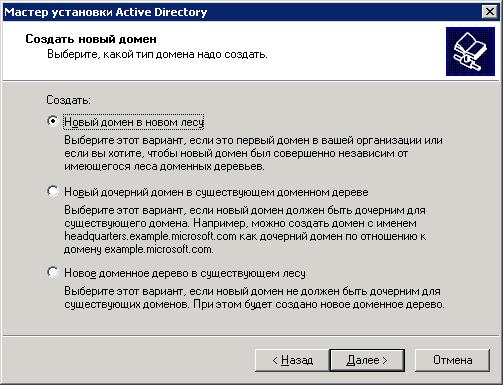

Using the Active Directory installation wizard, create a new domain:

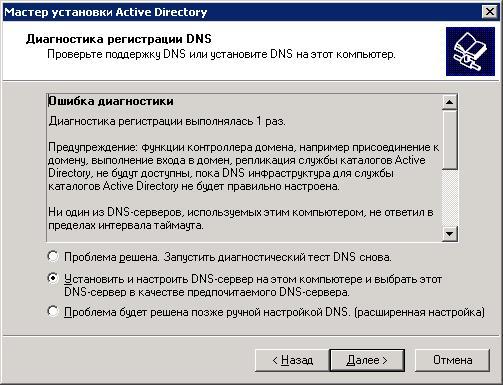

I won't show every step of the wizard; I'll only point out a few things. The AD installation wizard warns about a missing DNS server. The DNS server role could of course be added before creating Active Directory, but you can also do it right here:

Once Active Directory is created, add an account with domain administrator privileges for our future cluster, since the cluster is configured to run under a domain account.

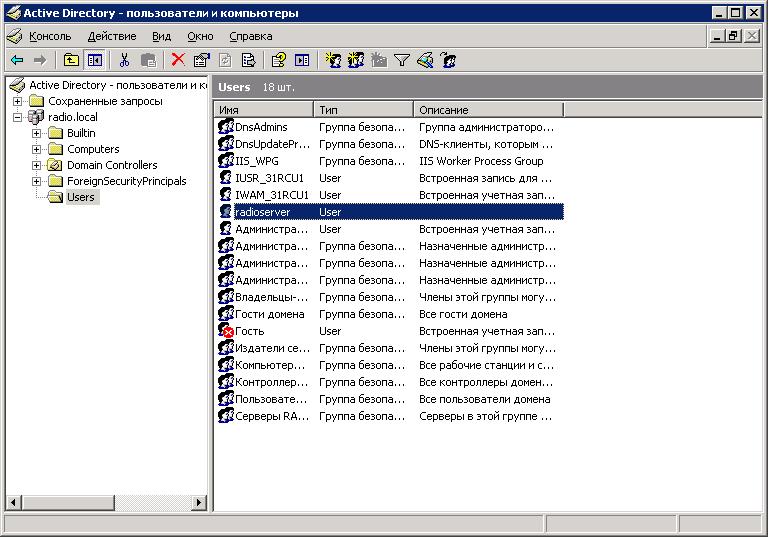

The account will be called Radioserver in the domain Radio.local:

Then, we connect the second server as an additional domain controller using the same Active Directory Installation Wizard (dcpromo):

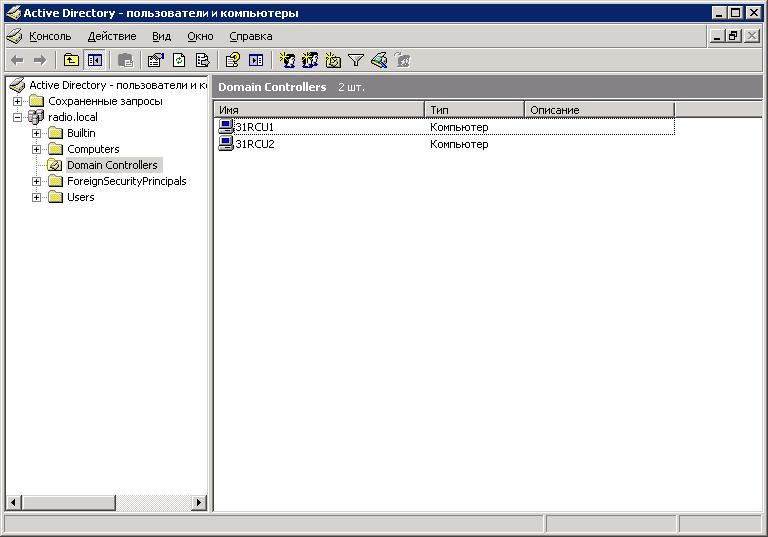

The additional controller has been added; both controllers are visible in the Active Directory management window:

Now you can start creating a cluster.

Cluster

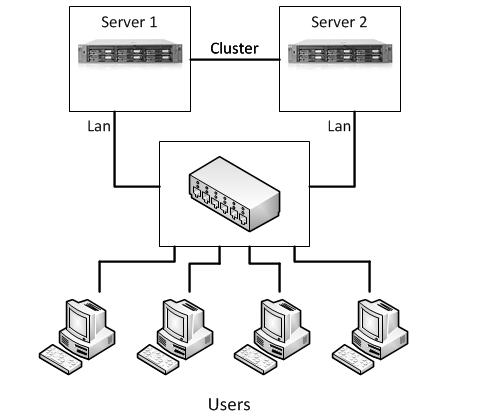

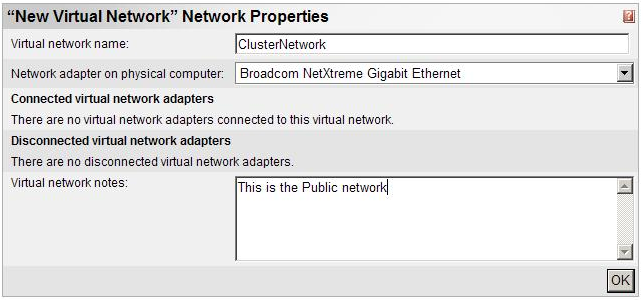

To create a cluster, we need to finish preparing the servers. We already have the shared disk space and the quorum; a fault-tolerant server also needs a fault-tolerant network. Each server has two network adapters: one pair will be used for internal communication between the cluster nodes, the other for communication with the outside world. Let's call them “Cluster” and “Lan”, respectively.
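
As far as I know, a server cluster expects the private and public networks of a node to sit on different IP subnets. Here is a minimal sketch of that sanity check; the function name and all addresses are hypothetical and are not the ones used in our setup.

```python
import ipaddress
from itertools import combinations


def on_distinct_subnets(adapters: dict) -> bool:
    """Return True if no two adapters of a node share or overlap a subnet.

    `adapters` maps an adapter name to an address in CIDR form.
    """
    networks = [ipaddress.ip_interface(addr).network for addr in adapters.values()]
    return all(not a.overlaps(b) for a, b in combinations(networks, 2))


# Hypothetical addressing for one node: heartbeat link and public link.
node1 = {"Cluster": "10.0.0.1/24", "Lan": "192.168.1.11/24"}
print(on_distinct_subnets(node1))

# A misconfiguration: both adapters in the same subnet.
broken = {"Cluster": "192.168.1.1/24", "Lan": "192.168.1.11/24"}
print(on_distinct_subnets(broken))
```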

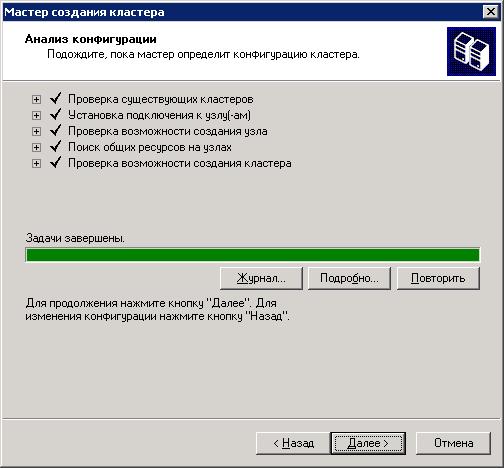

We start Cluster Administrator and choose the action "Create new cluster". The cluster creation wizard starts; there you specify the domain and assign a unique cluster name, because to the other devices on the network our cluster will look like an independent server. Then we specify the computer that will be the first node of the new cluster. Next comes the configuration analysis:

If the configuration does not meet the clustering requirements, it is best to resolve all the warnings; each problem is described in detail in the log. If all checks pass, enter the cluster IP address, and the wizard proposes the configuration of the future cluster. In the same window you can change the automatically selected quorum partition; by default the wizard selects the smallest shared partition.
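
The default choice described above can be modelled in a few lines. This is a simplified illustration and not the wizard's actual logic: it assumes the wizard picks the smallest shared disk that meets the 50 MB minimum mentioned earlier, and the drive letter "Q" for the quorum mirror is made up.

```python
MIN_QUORUM_MB = 50  # minimum quorum size mentioned in the disk array section


def pick_quorum(shared_disks: dict) -> str:
    """Pick the smallest shared disk that is large enough for the quorum.

    `shared_disks` maps a drive letter to its size in megabytes.
    """
    candidates = {name: size for name, size in shared_disks.items()
                  if size >= MIN_QUORUM_MB}
    if not candidates:
        raise ValueError("no shared disk is large enough for the quorum")
    return min(candidates, key=candidates.get)


# D is the RAID 6 data array, Q (hypothetical letter) is the 34 GB mirror.
shared = {"D": 876 * 1024, "Q": 34 * 1024}
print(pick_quorum(shared))  # Q
```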

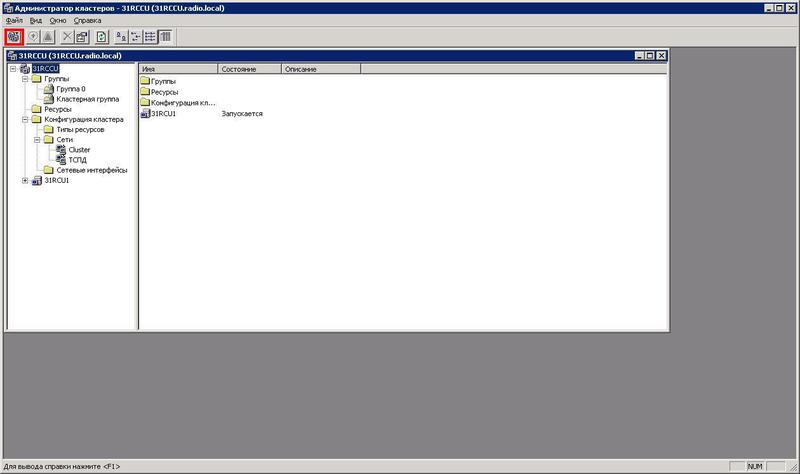

After the cluster has been created, we see a cluster consisting of one node with two cluster groups and two networks:

Cluster groups contain resources: Cluster name, IP address and quorum.

Now we add the second node to the cluster in the same way as the first. The cluster is finally ready. To test it, you can manually move the cluster groups from one node to the other, or provoke a failover artificially, for example by disconnecting the public network adapter, “Lan”.

So the cluster is ready, but what next? Our application, unfortunately, does not support clustering, which means it has to run in a virtual machine.

Microsoft Virtual Server 2005 R2

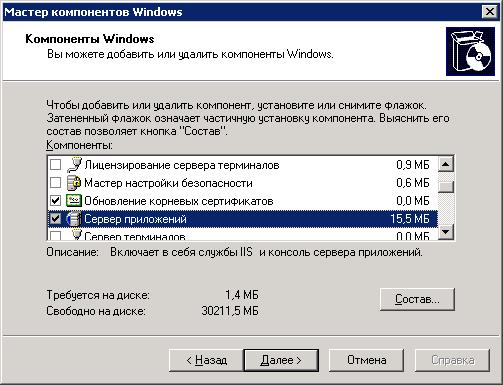

The virtualization platform we chose is Microsoft Virtual Server 2005 R2 SP1 Enterprise Edition. Install it on both nodes, but first add the Windows component “Application Server”:

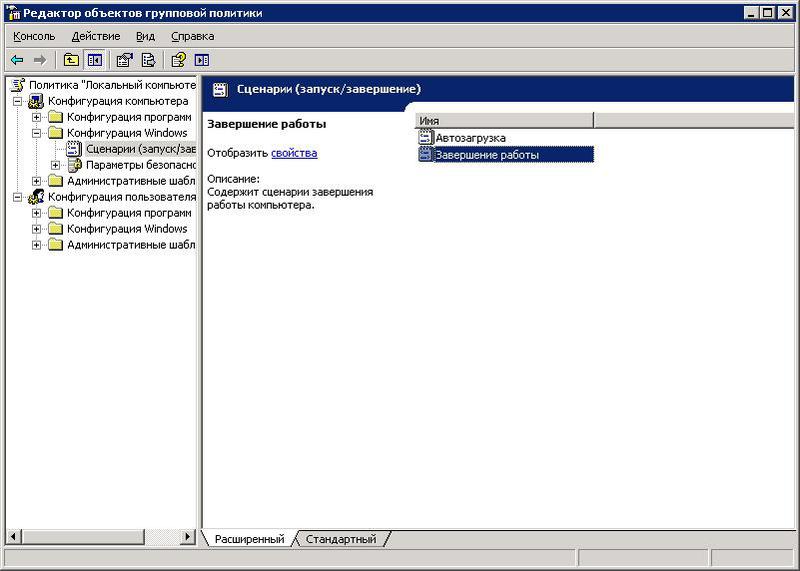

After installation, we create a script that stops the cluster service when node 1 shuts down, so that the virtual server moves to node 2: stopping the cluster service triggers the transfer of all resources to the other node. The script consists of a single line, “net stop clussvc”; save it as "C:\Stop_cluss_script.cmd". Then open the Group Policy editor (gpedit.msc), select "Shut down" and add our script:

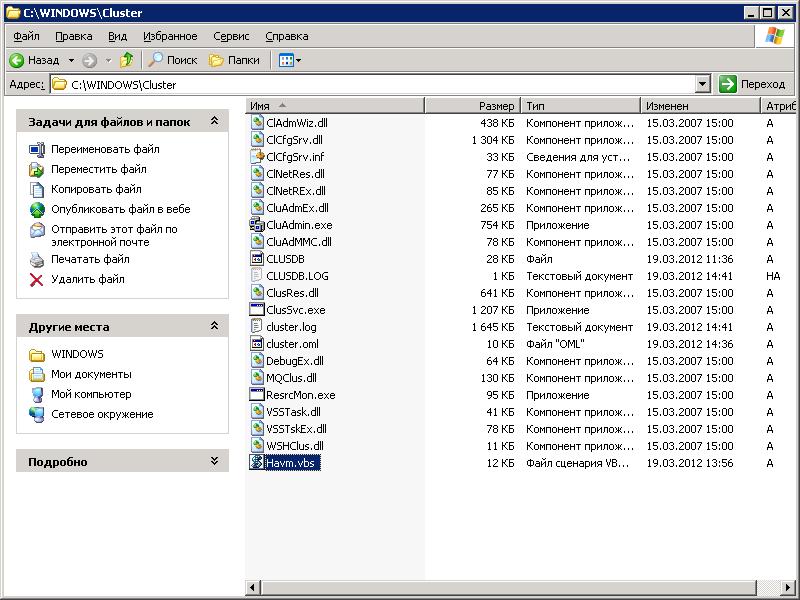

This script must be added on both nodes. Then, on both nodes, we place the script "Havm.vbs" in the folder "%systemroot%\Cluster"; it will start the virtual machine when the node changes.

For the virtual machine to run on both nodes, it must be placed on the shared disk, D (data). To do this, add the shared disk as a cluster resource. In Cluster Administrator we choose "New group" and specify the preferred owners, which are our two nodes. The group is called "SmartPTT". In the new group we create the resource “DiskStorage”, add the owners in the same way, and select the physical disk from the drop-down list.

Using the Virtual Server Administration Website, we create a virtual network. As its network adapter, select the adapter that corresponds to the cluster's public network, “Lan”.

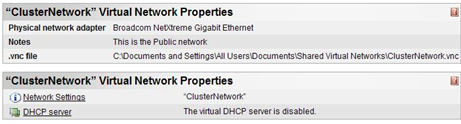

Now we need to move the network configuration file to the shared disk. To do this, point to “Virtual Networks” in the navigation pane, click “Configure”, then click “View All”. Hover over the newly created network and select "Edit Configuration":

This shows where the configuration file is saved by default. Now, in the Virtual Server Administration Website, click “Back”, hover over our network and select “Remove”. The purpose of this step is not to undo the creation of the virtual network but to clear Virtual Server's record of it, so that the .vnc file can be moved to the cluster storage. If you skip this step, the virtual network will not work correctly.

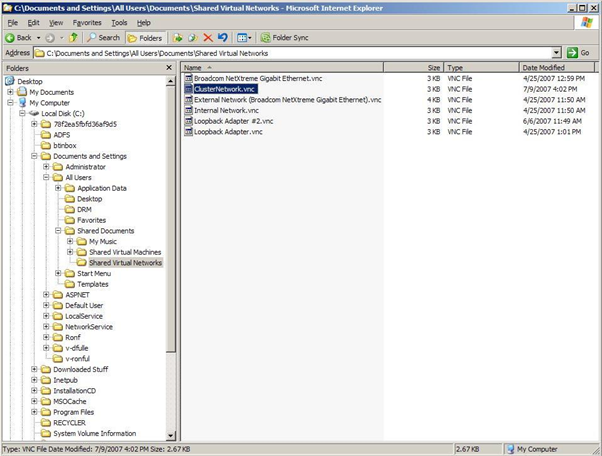

On the shared storage disk we create the folder SmartPTT, which will hold the virtual machine files, and move ClusterNetwork.vnc there.

Next, in the Virtual Server Administration Website, point to “Virtual Networks”, click “Add”, specify the new location of our .vnc file, and click “Add” again. Then, when creating the virtual machine, do not enter just a name in the "Virtual machine name" field; enter the full path where the virtual machine configuration will be stored, pointing at our folder on the shared storage, “D:\SmartPTT\... vnc”.

The Master Status page shows a thumbnail of the created machine. If you are prompted to enable the VMRC server or install an ActiveX control, do so. Click OK in any subsequent security dialogs. Open the configuration of the machine we created and select the CD/DVD section, where we specify the source of the installation files. Then we click Turn On for our machine and start the standard OS installation. After that, on node 2, you must manually register the configuration files of our virtual machine through the Virtual Server Administration Website.

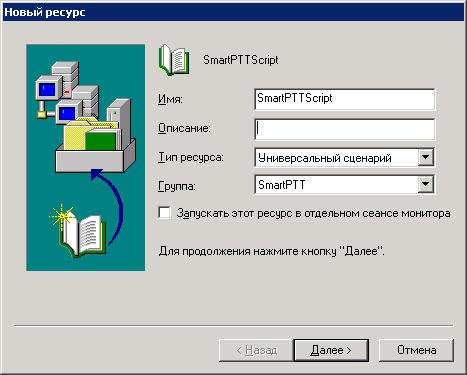

Next, go to Cluster Administrator and create a new resource in the group we created earlier. The resource type is "Generic Script":

Specify the path to our script, %systemroot%\Cluster\Havm.vbs, and add a resource dependency on “DiskStorage”: if the shared disk is unavailable, the virtual machine has no configuration files to start from. This completes the cluster setup; what remains is to install the operating system and the service all this was built for on the virtual machine.
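
The effect of the dependency is that the disk is brought online before the script resource. The sketch below models that ordering with a topological sort. It is an illustration of the idea only, not how the cluster service is implemented, and the resource name "VM Script" is hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def online_order(dependencies: dict) -> list:
    """Return an order in which every resource comes after what it depends on.

    `dependencies` maps a resource name to the set of resources it depends on.
    """
    return list(TopologicalSorter(dependencies).static_order())


# The SmartPTT group: the script resource depends on the shared disk.
smartptt_group = {
    "DiskStorage": set(),
    "VM Script": {"DiskStorage"},   # hypothetical name for the Havm.vbs resource
}
print(online_order(smartptt_group))
```

Taking the group offline would walk the same list in reverse, so the virtual machine is stopped before the disk it lives on disappears.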

Conclusion

Let me remind you that our service is, for the sake of example, a program that pings something and creates a report about it. To view the report outside the virtual machine, it must be saved to a disk accessible both to the virtual machine and to the other hosts on the network. As a workaround, we mapped a network drive in the OS running in the virtual machine: our Data storage. The network drive was connected through the cluster IP address, and this caused a problem. When the cluster resource “cluster IP address” moves, the other hosts on the network, including the virtual machine, do not notice that the address now sits on a different adapter and keep trying to reach the inactive adapter of the failed node. As a result, the cluster IP address is unreachable for 5-10 minutes, and all that time our service cannot save data to the network drive.
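
One way to put a number on that gap is to probe the cluster IP address at a fixed interval during a test failover and then compute the unreachable windows from the log. Below is a minimal sketch of the computation step only, assuming the probe results have already been collected as (timestamp, reachable) pairs; the function name and the sample data are hypothetical and are not measurements from our cluster.

```python
from datetime import datetime, timedelta


def outage_windows(samples: list) -> list:
    """Return (start, end) pairs for every stretch of failed probes.

    `samples` is a time-ordered list of (timestamp, reachable) tuples.
    A window starts at the first failed probe and ends at the next
    successful one (or at the last sample if the address never recovers).
    """
    windows = []
    down_since = None
    for timestamp, reachable in samples:
        if not reachable and down_since is None:
            down_since = timestamp
        elif reachable and down_since is not None:
            windows.append((down_since, timestamp))
            down_since = None
    if down_since is not None:
        windows.append((down_since, samples[-1][0]))
    return windows


# Made-up log: one probe per minute, failures from minute 2 through minute 8.
start = datetime(2012, 1, 1, 12, 0)
log = [(start + timedelta(minutes=m), not 2 <= m <= 8) for m in range(12)]
for began, ended in outage_windows(log):
    print(began, ended, ended - began)
```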

Files

Stop_cluss_script.cmd

"Havm.vbs"

Source: https://habr.com/ru/post/139410/
