Selectel outage

On March 3, at about 10:50 pm Moscow time, my virtual machine in the new cloud stopped responding.

I managed to look at the load graph: it showed zero CPU activity for the last 15 minutes.

I made 2 reboot attempts and 2 forced shutdowns, without success.

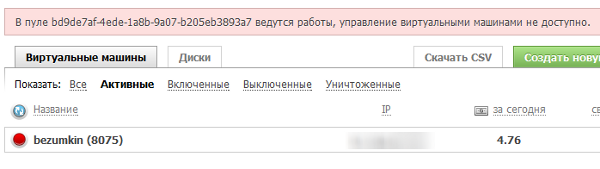

Cloud management is now disabled altogether.


The system engineer responded to the ticket:

Hello. Indeed, several servers in our cloud have failed. Our specialists are working on the problem.

Please accept our apologies.

I am creating this topic to notify the community and to take some load off technical support.

Unfortunately, the outage turned out to be much more serious.

Chronology

22:50 The problems started. Zero activity on the server, management does not work.

01:25 Machines began to come back up.

02:05 Looks like it went down again: the machine stopped responding in the same way. I rejoiced too early.

04:00 amarao :

We are at fault. The data was preserved without damage, but the connection between the storage system and the hosts did break, and twice at that.

It has now been repaired, and the affected machines have been started.

05:10 Dlussky discovered that “nothing works”; judging by the graphs, the machine went down at around 4 o'clock.

05:25 Dlussky reports that the cloud control panel has been turned off; support quotes a recovery time of 0.5-3 hours.

07:08 amarao :

We are still fighting the problem and thinking over solutions. Any relatively high disk activity leads to IO paralysis.

10:00 No change. Actually, there is one: my karma is draining. If it drops to 8, I will move this topic to drafts.

11:12 dyadyavasya :

Support just replied in the ticket:

"According to preliminary estimates of specialists, the work will be completed within two hours."

14:00 No significant changes in the situation. But karma is back to normal, thanks to the kind people!

14:15 The message in the control panel:

We are starting the machines.

Mine is up and running, but I will hold off on rejoicing for now.

15:00 My server sort of works: the resource consumption graph looks normal and it responds to ping, but neither the sites nor ssh access work.

15:04 amarao :

The last traces of the outage have been eliminated.

Sorry, people.

There will be compensation; tomorrow I will fight over the amount. For now we have made sure the situation does not recur. We will work on a fundamental fix with the highest priority.

Sorry again.

The official explanation of the situation from amarao

So what happened.

In short: the raid10 arrays on both servers that make up the storage cluster stopped one after the other (about 10 minutes apart). On top of these arrays we run flashcache (a caching layer) and drbd operating under protocol C (synchronous write mode).

The system was configured to work as follows: if one of the storage nodes fails, the second continues to work. Which storage node is alive and which is not is determined by multipathd on the virtualization hosts.

The basic tests we ran before launch included: a crash of one of the nodes, power loss, pulling out (killing) more disks than raid10 could survive, death of the ssd raid (also raid10), a random admin typing 'reboot', and unplugging the network cable and plugging it back in (protection from split brain). All these tests passed, and (I) was confident that the system was robust enough for our needs.

That night the routine raid check started. It is an innocent process that verifies the integrity of the raids in the background. On the storage nodes of the first pool this process is still running slowly (about 2-5 MB/s). The kernel module that performs the check makes sure that latency does not increase noticeably, so in normal operation clients should not notice it.

However, a problem arose (our current hypothesis is a bug in the kernel module): aggressive IO (about 25 IOPS on each disk of the array) running at the same time as the check caused IO on the array to stop. Completely. So much so that dd if=/dev/md10 of=/dev/null bs=512 count=1 simply “hung”. At the same time, reads directly from all the underlying disks succeeded at normal speed. As a result, the iscsi target (and everything after it in the chain) received neither a success nor an error for its IO.

The situation was further complicated by the fact that the usual multipath "checker" tests the first 512 bytes of the disk for readability. Guess whether those 512 bytes were cached in flashcache or not... Another confusing thing was that some requests (those that hit the ssd cache) were served normally, and for a while write requests also went through without problems. At some point the ssd cache filled up, and flushing it ran into the same problem.

Because drbd was in synchronous mode, the stall (not an error, precisely a stall) stalled both peers. In theory, the problem could be solved simply by disconnecting the “sick” peer (which is what we did at first). This helped for a while, after which the problem recurred on the second peer (which again points to the kernel module rather than the hardware). It was at this point that most customers noticed the problem. Since writes were completely paralyzed, we could not bring the first peer back into service, although it had come back up by then (and was continuing its disk check as if nothing had happened).

The first “global reboot” followed. Our machine start-up rate is roughly 2-3 s per machine (each machine takes longer than that to start, but the start operations run in parallel). Somewhere around 300 machines in, the synchronization speed began to plummet (in fact it was already 0, and the “falling average” was just an artifact). Almost simultaneously, the problem recurred on the second peer (which by then had also been rebooted).

We decided to try updating the kernel; this did not fix the situation. We began drawing up plans for a "manual recovery". At this point my assistant suggested letting the check run to completion. We did (350 minutes). In the meantime I even managed to take a short nap (it was already past 7 am). To be clear, this is not a “rebuild” after a disk failure but the “monthly” health check of all the disks in an array. The check ran on all the raid10 arrays that make up the storage (on both storage nodes at once) at about 200-250 MB/s.
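The weakness of a checker that always reads the same first 512 bytes can be illustrated with a small probe that reads one sector at a random offset and treats "no answer within a timeout" as its own outcome, separate from success and error. This is only a sketch: `probe_read`, its parameters, and the three status strings are hypothetical names, not part of multipathd or any tool mentioned above. It also reads through the page cache, so a real checker would additionally need direct IO, and a worker thread stuck in a hung read cannot be cancelled, only abandoned.

```python
import os
import random
import threading


def probe_read(path, size=512, timeout=5.0):
    """Read `size` bytes at a random aligned offset of `path`.

    Returns "ok", "error", or "hung" (no result within `timeout` seconds).
    Hypothetical helper for illustration; not an existing checker.
    """
    result = {}

    def worker():
        try:
            fd = os.open(path, os.O_RDONLY)
            try:
                total = os.lseek(fd, 0, os.SEEK_END)  # file or device size
                if total < size:
                    result["status"] = "error"
                    return
                # Pick a random sector instead of always sector 0,
                # so a cached first sector cannot mask a stalled device.
                offset = random.randrange(total // size) * size
                data = os.pread(fd, size, offset)
                result["status"] = "ok" if len(data) == size else "error"
            finally:
                os.close(fd)
        except OSError:
            result["status"] = "error"

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    thread.join(timeout)
    # If the worker has not reported anything, the read is still blocked.
    return result.get("status", "hung")
```

Calling `probe_read("/dev/md10")` on a device in the state described above would be expected to return "hung" rather than "error", which is exactly the case the stock checker did not see.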

By one o'clock in the afternoon the process was over. Manually bringing up the cluster plumbing took another 10 minutes, and rebooting the pool another 5 (since all the machines in the second pool had been affected, there was no point in manually fixing and working out multipathd's opinion about who was alive and who was not). The launch began. By 2 pm the launch was over, and we started manually handling all the fsck prompts that were asking for a key press on the console.
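The figures quoted above can be cross-checked with simple arithmetic. The sketch below assumes the wait started at about 07:00 (the text only says it was already around 7 am), that the quoted speed is in megabytes per second, and that 1 TB = 1,000,000 MB; the function names are made up for this illustration.

```python
def finish_time(start_hour, start_minute, duration_minutes):
    """Clock time after `duration_minutes`, formatted as HH:MM (same day)."""
    total = start_hour * 60 + start_minute + duration_minutes
    hours, minutes = divmod(total, 60)
    return f"{hours:02d}:{minutes:02d}"


def scanned_terabytes(duration_minutes, mb_per_second):
    """Data read at a constant rate, in decimal terabytes."""
    return duration_minutes * 60 * mb_per_second / 1_000_000


# Assumed start of the wait: 07:00; duration from the text: 350 minutes.
print(finish_time(7, 0, 350))        # 12:50, consistent with "by one o'clock"

# Check speed from the text: about 200-250 MB/s.
print(scanned_terabytes(350, 200))   # 4.2
print(scanned_terabytes(350, 250))   # 5.25
```

So the 350 minutes correspond to roughly 4-5 TB read at that rate, assuming the rate stayed constant for the whole run.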

Conclusions and actions taken.

1) As a stopgap "for the coming days", starting raid resync is prohibited.

2) In the near future, handling of the scenario “does not work, but does not report an error” will be added for the raid (and for the other block devices in the stack: flashcache, lvm, drbd, etc.)

3) An equivalent test stand (with smaller disks and without SSD) will be built in parallel, where we will try to reproduce the situation and look for a configuration in which it does not occur. Our current assumption is that raid0 over raid1 should not behave this way.

4) Send a bug report (if item 3 succeeds).
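One possible way to notice the “does not work, but does not report an error” case from item 2 is to compare two snapshots of the Linux `/proc/diskstats` counters: a device that has requests in flight in both snapshots but has completed none in between is a stall candidate. This is a sketch under assumptions, not the mechanism Selectel implemented: the function names are hypothetical, and whether the in-flight counter is meaningful for every layer of the stack (md, drbd, flashcache) would need to be verified on the actual kernel.

```python
def parse_diskstats(text):
    """Parse /proc/diskstats content into {device: (completed_ios, in_flight)}.

    Columns used (after splitting on whitespace): [2] device name,
    [3] reads completed, [7] writes completed, [11] IOs currently in progress.
    """
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 14:
            continue  # skip blank or truncated lines
        completed = int(parts[3]) + int(parts[7])
        in_flight = int(parts[11])
        stats[parts[2]] = (completed, in_flight)
    return stats


def stalled_devices(before, after):
    """Devices with IO in flight in both snapshots and no completions between."""
    stalled = []
    for name, (done_after, inflight_after) in after.items():
        if name not in before:
            continue
        done_before, inflight_before = before[name]
        if inflight_before > 0 and inflight_after > 0 and done_after == done_before:
            stalled.append(name)
    return sorted(stalled)
```

In use, one would read `/proc/diskstats` twice some seconds apart, pass both texts through `parse_diskstats`, and alert on whatever `stalled_devices` returns; the sampling interval is a tuning choice, since a busy but healthy device should complete at least one request in any reasonable window.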

Apologies:

We are at fault. We understand that this is unacceptable, and we will make every effort to correct the situation.

Compensation:

As compensation, it was decided to refund 100% of the money spent on the affected virtual machines.

Source: https://habr.com/ru/post/139368/
