Improving an FFmpeg-based video player

In the previous article, we reviewed the main components of FFmpeg and used them to build a minimal player that plays video at decoding speed, without any synchronization.

In this article, we will add audio playback and deal with audio/video synchronization.

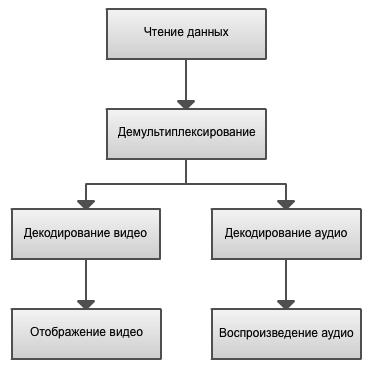

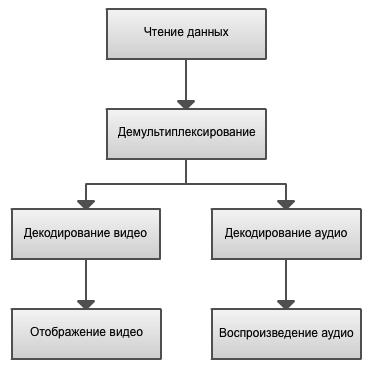

Introduction

Most multimedia applications and frameworks are built around a graph. The nodes of the graph are objects that each perform a specific task. For example, consider this graph of a video player.

Processing starts with the Read data block, which in our case reads a file. More generally, data may arrive over a network or from a hardware source.

Demultiplexing splits the incoming stream into several outgoing streams (for example, audio and video). Demultiplexing works at the container level, so at this stage it does not matter which codec a particular stream is encoded with. Examples of containers: AVI, MPEG-TS, MP4, FLV.

After demultiplexing, the resulting streams are decoded in the Video decoding and Audio decoding blocks. The decoders output data in standard formats: YUV or RGB frames for video and PCM data for audio. Decoding is usually done in separate threads. Display video shows the video on the screen, and Play audio plays the resulting audio stream.

Implementation

Our player follows a similar design. One thread reads the file and demultiplexes it into streams; two more threads decode video and audio. SDL then displays the pictures on the screen and plays the audio. The main application thread processes SDL events.

The code for this example turned out to be quite long, so I will not reproduce it here in full and will show only the key parts. The complete code is available at the link at the end of the article.


First, we gather all the main variables into one common context:

```c
typedef struct MainContext {
    AVFormatContext *format_context;
    // Streams
    Stream video_stream;
    Stream audio_stream;
    // Queues
    PacketQueue videoq;
    PacketQueue audioq;
    /* ... */
} MainContext;
```

This context is passed to all threads. video_stream and audio_stream hold information about the video and audio streams, respectively. videoq and audioq are the queues into which the demultiplexing thread puts the packets it reads. With these changes, the demuxing code (demux_thread) becomes quite simple:

```c
AVPacket packet;
// video_stream_index / audio_stream_index: indices of the selected streams
while (av_read_frame(main_context->format_context, &packet) >= 0) {
    if (packet.stream_index == video_stream_index) {
        // Video packet
        packet_queue_put(&main_context->videoq, &packet);
    } else if (packet.stream_index == audio_stream_index) {
        // Audio packet
        packet_queue_put(&main_context->audioq, &packet);
    } else {
        // Packet from a stream we do not play
        av_free_packet(&packet);
    }
}
```

Here we read the next packet from the file and, depending on the stream it belongs to, put it into the corresponding decoding queue. That is all the demultiplexing thread does.
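The article does not list PacketQueue itself. Below is a minimal sketch of such a thread-safe FIFO, assuming POSIX threads. To keep it buildable without FFmpeg, it stores a hypothetical `Item` (an int) instead of an AVPacket, and the names `ItemQueue`, `queue_put` and `queue_get` are illustrative, not the author's code. The `block` flag mirrors the `packet_queue_get(..., 1)` call used later in the audio thread.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

/* Hypothetical stand-in for AVPacket so the sketch builds without FFmpeg. */
typedef struct Item {
    int value;
} Item;

typedef struct QueueNode {
    Item item;
    struct QueueNode *next;
} QueueNode;

typedef struct ItemQueue {
    QueueNode *first, *last;
    int count;
    pthread_mutex_t mutex;
    pthread_cond_t cond;
} ItemQueue;

void queue_init(ItemQueue *q)
{
    q->first = q->last = NULL;
    q->count = 0;
    pthread_mutex_init(&q->mutex, NULL);
    pthread_cond_init(&q->cond, NULL);
}

/* Append an item and wake one consumer waiting in queue_get(). Returns 0 or -1. */
int queue_put(ItemQueue *q, const Item *item)
{
    QueueNode *node = malloc(sizeof *node);
    if (node == NULL)
        return -1;
    node->item = *item;
    node->next = NULL;

    pthread_mutex_lock(&q->mutex);
    if (q->last == NULL)
        q->first = node;
    else
        q->last->next = node;
    q->last = node;
    q->count++;
    pthread_cond_signal(&q->cond);
    pthread_mutex_unlock(&q->mutex);
    return 0;
}

/* Remove the oldest item. With block != 0, wait until one is available.
   Returns 1 if an item was taken, 0 if the queue was empty (non-blocking). */
int queue_get(ItemQueue *q, Item *item, int block)
{
    int ret = 0;
    pthread_mutex_lock(&q->mutex);
    while (q->first == NULL && block)
        pthread_cond_wait(&q->cond, &q->mutex);
    QueueNode *node = q->first;
    if (node != NULL) {
        q->first = node->next;
        if (q->first == NULL)
            q->last = NULL;
        q->count--;
        *item = node->item;
        free(node);
        ret = 1;
    }
    pthread_mutex_unlock(&q->mutex);
    return ret;
}
```

The condition variable lets a decoding thread sleep while its queue is empty instead of spinning; the demuxer's `queue_put` wakes it up.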

Now let's look at the more complex video and audio decoding threads.

Video playback

The process of decoding and displaying video was discussed in the previous article. The core code is unchanged, but it has been split into two parts: video decoding, performed in a separate thread (video_decode_thread), and display in a window (video_refresh_timer), performed in the main thread on a timer. This split makes synchronization easier to implement; we will deal with it in the second part of the article.

The picture is updated on a timer rather than in a separate thread because SDL requires video operations to be performed on the main application thread. For the same reason, an overlay cannot be created from an arbitrary thread. This limitation can be worked around, for example with SDL events and a condition variable, but we will not do that here. Instead, we use a single overlay, created before decoding starts.

Audio playback

Computer sound is a continuous stream of samples. Each sample is a value of the waveform. Sound is recorded at a specific sampling rate and must be played back at the same rate. The sampling rate is the number of samples per second; for example, audio CDs use 44,100 samples per second. Audio can also have several channels: for stereo, samples come in pairs, one per channel. When reading data from a file, we do not know in advance how many samples we will get, but FFmpeg never returns partial samples. This also means that it never splits a stereo pair.
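To make these numbers concrete, the sketch below computes the PCM data rate and shows where a sample lives in a packed (interleaved) buffer, where the values for one moment in time are stored side by side (left, right, left, right, ... for stereo). The helper names are ours, not part of FFmpeg or SDL. For CD audio the data rate is 44100 × 2 channels × 2 bytes = 176,400 bytes per second.

```c
#include <assert.h>
#include <stdint.h>

/* Bytes of PCM data per second of audio. */
int audio_bytes_per_second(int sample_rate, int channels, int bytes_per_sample)
{
    return sample_rate * channels * bytes_per_sample;
}

/* In an interleaved buffer, the sample for channel `ch` of sample frame `frame`
   is stored at index frame * channels + ch. */
int16_t interleaved_sample(const int16_t *buf, int channels, int frame, int ch)
{
    return buf[frame * channels + ch];
}
```

One complete pair (one value per channel) is a single sample frame, which is the unit FFmpeg never splits.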

The first step is to configure SDL for audio output. Add the SDL_INIT_AUDIO flag to the initialization call, then fill in an SDL_AudioSpec structure and pass it to SDL_OpenAudio:

```c
SDL_AudioSpec wanted_spec, spec;
// Set audio settings from codec info
wanted_spec.freq = codec_context->sample_rate;
wanted_spec.format = AUDIO_S16SYS;
wanted_spec.channels = codec_context->channels;
wanted_spec.silence = 0;
wanted_spec.samples = SDL_AUDIO_BUFFER_SIZE;
wanted_spec.callback = audio_callback;
wanted_spec.userdata = main_context;
// spec receives the parameters SDL actually obtained
if (SDL_OpenAudio(&wanted_spec, &spec) < 0) {
    fprintf(stderr, "SDL: %s\n", SDL_GetError());
    return -1;
}
// Start playback
SDL_PauseAudio(0);
```

SDL outputs audio through a callback function.

The structure has the following fields:

- freq: the sample rate.

- format: the format of the sample data. In “AUDIO_S16SYS”, “S” means signed, “16” means 16-bit samples, and “SYS” means the system byte order. When this article was written, FFmpeg's audio decoders commonly returned data in this format; newer FFmpeg versions often produce other formats (for example planar float, AV_SAMPLE_FMT_FLTP), which then have to be converted, e.g. with libswresample, before being handed to SDL.

- channels: the number of audio channels.

- silence: the sample value that represents silence. For signed data it is 0. (SDL actually calculates this field itself in SDL_OpenAudio.)

- samples: the size of the SDL audio buffer, measured in sample frames, not bytes. Typical values range from 512 to 8192; we use 1024.

- callback: the function SDL calls to fill the audio buffer with data.

- userdata: user data passed to the callback. We pass our main context here.

Calling SDL_PauseAudio(0) starts audio playback. If there is no data in the buffer, “silence” is played.

Audio decoding

As you may remember, during demultiplexing we put the packets we read into a separate audioq queue. The job of the audio_decode_thread function is to take a packet from that queue, decode it, and put the result into another buffer, which is then read by the callback we passed to SDL_OpenAudio.

We use a ring buffer for this. Prototypes of its main functions:

```c
int ring_buffer_write(RingBuffer* rb, void* buffer, int len, int block);
int ring_buffer_read(RingBuffer* rb, void* buffer, int len, int block);
```

The purpose of the arguments should be clear from their names. The block argument determines whether the function blocks when there is not enough free space in the buffer (for writing) or no data to read (for reading).
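The ring buffer implementation is not shown in the article. Below is a minimal single-threaded sketch of the idea: a fixed-size byte array whose read and write positions wrap around. The `RingBuffer` layout and the `rb_*` names are hypothetical. The article's functions also take a `block` flag; in a multithreaded player that would typically be implemented by waiting on a condition variable, which is omitted here. Both functions return the number of bytes actually transferred.

```c
#include <assert.h>
#include <string.h>

#define RB_CAPACITY 8 /* tiny capacity so the wrap-around is easy to follow */

typedef struct RingBuffer {
    unsigned char data[RB_CAPACITY];
    int read_pos; /* index of the oldest stored byte */
    int size;     /* number of bytes currently stored */
} RingBuffer;

void rb_init(RingBuffer *rb)
{
    memset(rb, 0, sizeof *rb);
}

/* Copy up to len bytes into the buffer; returns how many fit. */
int rb_write(RingBuffer *rb, const void *src, int len)
{
    const unsigned char *p = src;
    int n = RB_CAPACITY - rb->size;
    if (n > len)
        n = len;
    for (int i = 0; i < n; i++) {
        /* the write position follows the last stored byte, modulo capacity */
        int pos = (rb->read_pos + rb->size + i) % RB_CAPACITY;
        rb->data[pos] = p[i];
    }
    rb->size += n;
    return n;
}

/* Copy up to len bytes out of the buffer; returns how many were available. */
int rb_read(RingBuffer *rb, void *dst, int len)
{
    unsigned char *p = dst;
    int n = rb->size < len ? rb->size : len;
    for (int i = 0; i < n; i++)
        p[i] = rb->data[(rb->read_pos + i) % RB_CAPACITY];
    rb->read_pos = (rb->read_pos + n) % RB_CAPACITY;
    rb->size -= n;
    return n;
}
```

A ring buffer suits this job because the decoder and the SDL callback produce and consume data in differently sized chunks, and no memory has to be moved or reallocated.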

The complete decoding function looks like this:

```c
static int audio_decode_thread(void *arg)
{
    assert(arg != NULL);
    MainContext* main_context = (MainContext*)arg;
    Stream* audio_stream = &main_context->audio_stream;
    AVFrame frame;

    while (1) {
        // Get packet from queue (blocks until one is available)
        AVPacket pkt;
        packet_queue_get(&main_context->audioq, &pkt, 1);

        // The audio packet can contain several frames: decode until it is consumed.
        // Work on a copy so that the original pkt can still be freed.
        AVPacket tmp = pkt;
        while (tmp.size > 0) {
            avcodec_get_frame_defaults(&frame);
            int got_frame = 0;
            int len = avcodec_decode_audio4(audio_stream->codec_context, &frame,
                                            &got_frame, &tmp);
            if (len < 0) {
                // Skip the rest of a damaged packet
                fprintf(stderr, "Failed to decode audio frame\n");
                break;
            }
            tmp.data += len;
            tmp.size -= len;

            if (got_frame) {
                // Get decoded buffer size in bytes
                int data_size = av_samples_get_buffer_size(NULL,
                    audio_stream->codec_context->channels, frame.nb_samples,
                    audio_stream->codec_context->sample_fmt, 1);
                // For packed formats such as S16, all channels are in data[0]
                ring_buffer_write(&main_context->audio_buf, frame.data[0], data_size, 1);
            }
        }
        av_free_packet(&pkt);
    }
    return 0;
}
```

Audio packets are decoded with the avcodec_decode_audio4 function. It returns the number of bytes of the packet it consumed; since one packet may contain several frames, we keep calling it on the remaining data until the whole packet is used up. If decoding fails, we skip the rest of that packet. Whenever a complete frame has been decoded (the got_frame flag), we compute its size in bytes with av_samples_get_buffer_size and write it to our ring buffer.
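One caveat about `frame.data[0]`: it holds the whole decoded frame only for packed (interleaved) sample formats such as AV_SAMPLE_FMT_S16. For planar formats (for example AV_SAMPLE_FMT_FLTP), each channel lives in its own plane (data[0], data[1], ...), and the samples must be interleaved and converted to 16 bits before SDL can play them as AUDIO_S16SYS. In practice libswresample (swr_convert) does this; the hand-written sketch below, with a hypothetical `planar_float_to_s16` helper, only illustrates what that conversion involves, assuming float samples in the range [-1.0, 1.0].

```c
#include <assert.h>
#include <stdint.h>

/* Convert planar float audio (one array per channel, values in [-1, 1])
   into interleaved signed 16-bit samples, clipping out-of-range values. */
void planar_float_to_s16(const float *const *planes, int channels,
                         int nb_samples, int16_t *out)
{
    for (int i = 0; i < nb_samples; i++) {
        for (int ch = 0; ch < channels; ch++) {
            float v = planes[ch][i];
            if (v > 1.0f)
                v = 1.0f;
            else if (v < -1.0f)
                v = -1.0f;
            /* scale to the 16-bit range; the cast truncates toward zero */
            out[i * channels + ch] = (int16_t)(v * 32767.0f);
        }
    }
}
```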

Audio playback

Only one step remains: playing the decoded samples. This is done in the audio_callback function:

```c
static void audio_callback(void* userdata, uint8_t* stream, int len)
{
    assert(userdata != NULL);
    MainContext* main_context = (MainContext*)userdata;
    // Copy len bytes of decoded audio into the buffer provided by SDL
    ring_buffer_read(&main_context->audio_buf, stream, len, 1);
}
```

Everything is simple here: we take len bytes from the ring buffer and copy them into the buffer SDL provides.
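As written, audio_callback reads with block = 1, so if the decoder falls behind (or the file ends), SDL's audio thread may wait inside the callback instead of playing silence. A common alternative is to copy whatever decoded data is available and pad the rest of SDL's buffer with the silence value. The sketch below shows only that fill step on plain arrays; `fill_audio_buffer` is a hypothetical helper, and getting the `available` byte count would require a non-blocking read from the ring buffer, which is an assumption about its API.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Copy as much decoded audio as is available into SDL's buffer and pad the
   remainder with silence. Returns the number of real audio bytes copied. */
int fill_audio_buffer(uint8_t *stream, int len, const uint8_t *decoded,
                      int available, uint8_t silence)
{
    int n = available < len ? available : len;
    memcpy(stream, decoded, (size_t)n);
    memset(stream + n, silence, (size_t)(len - n));
    return n;
}
```

In the player, `silence` would be the silence field of the SDL_AudioSpec obtained from SDL_OpenAudio (0 for signed 16-bit audio).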

Unlike video, audio plays at the correct speed right away. This is because the sampling rate was specified explicitly when configuring audio output, and SDL calls the callback exactly as often as needed to play the data at that rate.
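The arithmetic behind this: SDL requests `samples` sample frames per call, so each callback receives len = samples × channels × bytes per sample and covers samples / freq seconds of sound. With 1024 samples and, for example, a 44.1 kHz stereo 16-bit stream, that is 4096 bytes per call, about 23.2 ms of audio, or roughly 43 calls per second. The helper names below are ours.

```c
#include <assert.h>

/* Bytes SDL requests per callback: sample frames * channels * bytes per sample. */
int callback_bytes(int samples, int channels, int bytes_per_sample)
{
    return samples * channels * bytes_per_sample;
}

/* Seconds of audio delivered by one callback. */
double callback_duration(int samples, int sample_rate)
{
    return (double)samples / sample_rate;
}
```

The same relation explains the trade-off in choosing `samples`: a larger buffer means fewer callbacks but more latency between decoding and hearing the sound.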

Synchronization

The video and audio streams in a file carry information about when and at what speed they should be played. For audio, this is the sampling rate we met in the previous section; for video, it is the number of frames per second (FPS). However, synchronization cannot rely on these values alone: the computer is not an ideal device, and many video files have inaccurate values for these parameters. Instead, each packet in a stream carries two timestamps: a decoding timestamp (DTS) and a presentation timestamp (PTS). Two values are needed because frames in the file may be stored out of display order. This happens when the video contains B-frames (bi-predictive pictures, frames that depend on both the previous and the next frames). A video may also contain repeated frames.
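A small illustration of why DTS and PTS differ. Suppose four frames are displayed in the order I B B P. Both B-frames reference the following P-frame, so the encoder must store P before them: the decode (file) order is I P B B. The timestamps below are made-up values in frame units, with DTS shifted by one so that DTS never exceeds PTS. Conceptually, the decoder's reordering amounts to emitting frames sorted by PTS, which the sketch does with qsort.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct Frame {
    char type; /* 'I', 'P' or 'B' */
    long dts;  /* decoding timestamp */
    long pts;  /* presentation timestamp */
} Frame;

static int by_pts(const void *a, const void *b)
{
    long pa = ((const Frame *)a)->pts, pb = ((const Frame *)b)->pts;
    return (pa > pb) - (pa < pb);
}

/* Frames as stored in the file (decode order): I P B B.
   DTS increases monotonically; PTS gives the display position. */
static Frame stream_order[] = {
    {'I', -1, 0},
    {'P', 0, 3},
    {'B', 1, 1},
    {'B', 2, 2},
};

/* Put frames into presentation order by sorting on PTS. */
void to_display_order(Frame *frames, size_t n)
{
    qsort(frames, n, sizeof frames[0], by_pts);
}
```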

There are three synchronization options:

- synchronizing video to audio;

- synchronizing audio to video;

- synchronizing both video and audio to an external clock.

Let's consider the simplest of these options: synchronizing video to audio. After displaying the current frame, we calculate when the next frame should be displayed, based on its PTS. SDL timers are used to update the picture.

In our main context, add the following fields:

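```c
typedef struct MainContext {
    /* ... */
    double video_clock;
    double audio_clock;
    double frame_timer;
    double frame_last_pts;
    double frame_last_delay;
    /* ... */
} MainContext;
```

- video_clock: the video clock, i.e. the expected presentation time (in seconds) of the next decoded video frame;

- audio_clock: the audio clock, i.e. the presentation time (in seconds) of the most recently decoded audio;

- frame_timer: the system time (in seconds) at which the current frame should be displayed;

- frame_last_pts: the PTS of the last displayed frame;

- frame_last_delay: the delay used for the last displayed frame.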

During initialization, let's assign an initial value for frame_timer :

```c
// av_gettime() returns the current time in microseconds
main_context->frame_timer = (double)av_gettime() / 1000000.0;
```

In the video decoding thread, we compute the display time of the next frame:

```c
// Prefer the DTS-based value, fall back to PTS, otherwise use 0 ("unknown")
int64_t ts = frame.pkt_dts;
if (ts == AV_NOPTS_VALUE) {
    ts = frame.pkt_pts;
}
// Convert from stream time base units to seconds
double pts = (ts == AV_NOPTS_VALUE) ? 0 : ts * av_q2d(main_context->video_stream.time_base);
pts = synchronize_video(main_context, &frame, pts);
```

Note that the value of pts comes from one of three sources:

- frame.pkt_dts: FFmpeg reorders frames during decoding, so the DTS of the packet that completed a frame matches the PTS of the frame being output. When this value is available, we use it.

- frame.pkt_pts: if there is no DTS value, we try the PTS.

- 0: if both values are missing, pts is set to 0, and synchronize_video then uses the last saved video clock value instead.
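The av_q2d conversion multiplies a timestamp by the stream's time base, a rational number num/den of seconds per tick. The sketch below mirrors the fallback chain above with plain C types so that it builds without FFmpeg; `Rational`, `NO_TS` and `pick_pts_seconds` are hypothetical stand-ins for AVRational, AV_NOPTS_VALUE and the snippet. With the 1/90000 time base used by MPEG-TS, a timestamp of 180000 corresponds to 2 seconds.

```c
#include <assert.h>
#include <stdint.h>

/* Stand-ins for AVRational and AV_NOPTS_VALUE. */
typedef struct Rational {
    int num, den;
} Rational;
#define NO_TS INT64_MIN

/* Prefer the DTS-derived value, fall back to PTS, else 0 (meaning "unknown",
   which synchronize_video replaces with the video clock). */
double pick_pts_seconds(int64_t pkt_dts, int64_t pkt_pts, Rational time_base)
{
    int64_t ts = pkt_dts;
    if (ts == NO_TS)
        ts = pkt_pts;
    if (ts == NO_TS)
        return 0.0;
    return (double)ts * time_base.num / time_base.den;
}
```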

The code of synchronize_video:

```c
double synchronize_video(MainContext* main_context, AVFrame *src_frame, double pts)
{
    assert(main_context != NULL);
    assert(src_frame != NULL);
    AVCodecContext* video_codec_context = main_context->video_stream.codec_context;
    if (pts != 0) {
        /* if we have pts, set video clock to it */
        main_context->video_clock = pts;
    } else {
        /* if we aren't given a pts, set it to the clock */
        pts = main_context->video_clock;
    }
    /* update the video clock */
    double frame_delay = av_q2d(video_codec_context->time_base);
    /* if we are repeating a frame, adjust clock accordingly */
    frame_delay += src_frame->repeat_pict * (frame_delay * 0.5);
    main_context->video_clock += frame_delay;
    return pts;
}
```

Here we update the video clock and take possible repeated frames into account.
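As a worked formula, assuming (as synchronize_video does) that av_q2d of the codec time base equals one frame duration $d$, each decoded frame advances the video clock by

$$
\text{video\_clock} \leftarrow \text{video\_clock} + d\left(1 + \frac{r}{2}\right), \qquad d = \texttt{av\_q2d}(\text{time\_base}),\quad r = \texttt{repeat\_pict}
$$

For a 25 fps stream with $d = 1/25 = 0.04$ s, a frame with $r = 1$ advances the clock by $0.04 \times 1.5 = 0.06$ s.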

In the audio decoding thread, we save the audio clock so that the video can be synchronized to it later:

```c
// Inside audio_decode_thread, once data_size is known for a decoded frame
if (pkt.pts != AV_NOPTS_VALUE) {
    main_context->audio_clock = av_q2d(main_context->audio_stream.time_base) * pkt.pts;
} else {
    /* if no pts, then compute it from the amount of decoded data */
    AVCodecContext* audio_codec_context = main_context->audio_stream.codec_context;
    main_context->audio_clock += (double)data_size /
        (audio_codec_context->channels * audio_codec_context->sample_rate *
         av_get_bytes_per_sample(audio_codec_context->sample_fmt));
}
```

In the video display function, we compute the delay before the next frame is displayed:

```c
double delay = compute_delay(main_context);
// Convert seconds to milliseconds, rounding to the nearest value
schedule_refresh(main_context, (int)(delay * 1000 + 0.5));
```

And the “heart” of our synchronization is the compute_delay function:

```c
static double compute_delay(MainContext* main_context)
{
    double delay = main_context->pict.pts - main_context->frame_last_pts;
    if (delay <= 0.0 || delay >= 1.0) {
        // Delay incorrect - use previous one
        delay = main_context->frame_last_delay;
    }
    // Save for next time
    main_context->frame_last_pts = main_context->pict.pts;
    main_context->frame_last_delay = delay;

    // Update delay to sync to audio
    double ref_clock = get_audio_clock(main_context);
    double diff = main_context->pict.pts - ref_clock;
    double sync_threshold = FFMAX(AV_SYNC_THRESHOLD, delay);
    if (fabs(diff) < AV_NOSYNC_THRESHOLD) {
        if (diff <= -sync_threshold) {
            delay = 0;
        } else if (diff >= sync_threshold) {
            delay = 2 * delay;
        }
    }
    main_context->frame_timer += delay;
    double actual_delay = main_context->frame_timer - (av_gettime() / 1000000.0);
    if (actual_delay < 0.010) {
        /* Really it should skip the picture instead */
        actual_delay = 0.010;
    }
    return actual_delay;
}
```

First, we compute the delay between the previous and the current frame and save the current values. Then we account for possible drift relative to the audio: if the video lags behind, the next frame is shown immediately; if it runs ahead, the delay is doubled. Finally, we compute how long to wait until the next frame is due.
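compute_delay calls get_audio_clock, which the article does not show. Decoded audio first sits in the ring buffer (and in SDL's own buffer) before it is heard, so a reasonable get_audio_clock subtracts the duration of that still-buffered data from audio_clock. The self-contained sketch below shows that correction together with the threshold rule from compute_delay. The function names are hypothetical, and the threshold values (10 ms and 10 s) are assumptions chosen for illustration, since the article does not define AV_SYNC_THRESHOLD and AV_NOSYNC_THRESHOLD.

```c
#include <assert.h>

#define SYNC_THRESHOLD 0.01   /* assumed: minimum drift worth correcting, seconds */
#define NOSYNC_THRESHOLD 10.0 /* assumed: larger drift is treated as a glitch */

/* Audio position actually being heard: the decode-time clock minus the
   duration of audio still waiting in buffers. */
double audio_clock_now(double decoded_clock, int buffered_bytes,
                       int sample_rate, int channels, int bytes_per_sample)
{
    int bytes_per_second = sample_rate * channels * bytes_per_sample;
    return decoded_clock - (double)buffered_bytes / bytes_per_second;
}

/* Same rule as in compute_delay; diff = video pts - audio clock. */
double adjust_delay(double delay, double diff)
{
    double sync_threshold = delay > SYNC_THRESHOLD ? delay : SYNC_THRESHOLD;
    if (diff < NOSYNC_THRESHOLD && diff > -NOSYNC_THRESHOLD) {
        if (diff <= -sync_threshold)
            delay = 0;         /* video is behind: show the next frame at once */
        else if (diff >= sync_threshold)
            delay = 2 * delay; /* video is ahead: wait twice as long */
    }
    return delay;
}
```

For example, with 17,640 bytes of 44.1 kHz stereo 16-bit audio still buffered (0.1 s), a decode-time clock of 10.0 s corresponds to 9.9 s of audio actually heard.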

That's all! Launch the player and enjoy watching!

Conclusion

In this part, we finished our simple player: we improved the program structure and added audio playback and synchronization.

Topics not covered here include seeking, fast and slow playback, and the other synchronization options.

Encoding and multiplexing are a completely separate topic; I may try to cover them in the next article.

Player source code.

Thank you all for your attention!

Source: https://habr.com/ru/post/138426/
