Face recognition by the human brain: 19 facts that computer vision researchers should be aware of

An important goal of researchers in the field of computer vision is the creation of an automated system that can equal or exceed the ability of the human brain to recognize faces. The results of psychophysical studies of the facial recognition process provide computer vision specialists with valuable facts that will help improve artificial intelligence systems.

As usual, I offer an abridged translation; the full text is available in the original.

INTRODUCTION

Despite significant efforts to develop face recognition algorithms, no system has yet been built that works without artificial constraints and copes with all possible variations in image parameters, such as sensor noise, distance to the subject, and lighting level. The only system that does this job well is human vision. It is therefore useful to study the strategies this biological system uses and try to apply them when developing artificial algorithms. The 19 research results presented here do not claim to be a complete theory of face recognition, but they give important clues to developers of computer vision systems. They are collected from publications by many research groups, and the original article contains references to those publications.

RECOGNITION AS A FUNCTION OF SPATIAL RESOLUTION

Result 1: People are able to recognize familiar faces in very low resolution images.

Progress in high-resolution video sensors encourages computer vision systems to rely on ever finer details for face recognition. Iris recognition is one example of this approach. Obviously, such algorithms do not work without high-resolution images, a problem that is especially acute when faces must be recognized at a considerable distance. Let us turn to human vision. How does face recognition accuracy depend on image resolution? It turns out that people can still recognize familiar faces in images blurred down to 16x16 blocks. Recognition accuracy stays above 50% when images are blurred to an equivalent size of 7x10 pixels (see Fig. 1), and it comes close to the maximum possible value at a resolution of 19x27 pixels.
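To get a feel for how little information a 7x10 image carries, the degradation can be imitated by block averaging. The sketch below is only an illustration: the `block_average` helper and the synthetic brightness ramp are assumptions made for this example, not the procedure used in the original studies.

```python
def block_average(image, out_w, out_h):
    """Downsample a grayscale image (a list of rows) by averaging rectangular blocks."""
    in_h, in_w = len(image), len(image[0])
    result = []
    for oy in range(out_h):
        # Row range of the source block that maps to output row oy.
        y0 = oy * in_h // out_h
        y1 = max((oy + 1) * in_h // out_h, y0 + 1)
        row = []
        for ox in range(out_w):
            # Column range of the source block that maps to output column ox.
            x0 = ox * in_w // out_w
            x1 = max((ox + 1) * in_w // out_w, x0 + 1)
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) / len(block))
        result.append(row)
    return result


# Synthetic 70x100 "photo": a horizontal brightness ramp standing in for a real face image.
image = [[x for x in range(70)] for _ in range(100)]

# Reduce it to the 7x10 size at which familiar faces are still recognized more than half the time.
small = block_average(image, out_w=7, out_h=10)
print(len(small[0]), "x", len(small))
```

With a real photograph, enlarging `small` back to the original size gives an image similar in spirit to those in Fig. 1.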

Fig. 1 People are able to recognize more than half of the familiar faces at the resolution shown in this figure. Pictured: 1 - Michael Jordan, 2 - Woody Allen, 3 - Goldie Hawn, 4 - Bill Clinton, 5 - Tom Hanks, 6 - Saddam Hussein, 7 - Elvis Presley, 8 - Jay Leno, 9 - Dustin Hoffman, 10 - Prince Charles, 11 - Cher, 12 - Richard Nixon.

Result 2: The ability to tolerate image degradation increases with familiarity.

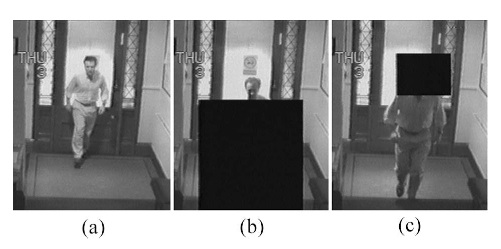

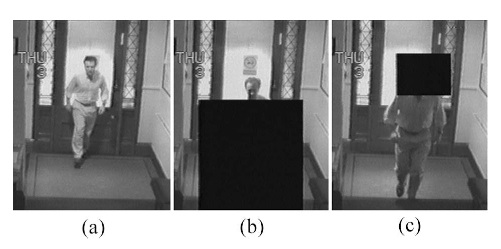

The ability to compensate for degraded image resolution depends strongly on how familiar the subject is. Studies demonstrated a low recognition rate for strangers shown in two different photos of the same person and, by contrast, a high recognition rate for workplace colleagues seen in footage from low-quality surveillance cameras. At the same time, body shape and gait turned out to be much less informative than the face, despite the face's extremely low resolution. This is shown by the fact that when the body is masked but the face is left visible, recognition accuracy drops only slightly, whereas when the face is masked and the body is left visible, accuracy falls significantly (see Fig. 2).

Fig. 2 Frames from the video used in the study: (a) the original image, (b) the subject’s body is masked, (c) the face is masked.

Result 3: High-frequency information by itself does not guarantee high quality recognition.

The traditional approach to recognition relies heavily on contour (edge) extraction algorithms. Contours are considered invariant to lighting conditions. In the context of biological face recognition, contour (line) drawings usually appear to be sufficient: pencil sketches and cartoons are often easy to recognize. Does this mean that high-frequency spatial information is crucial, or at least sufficient, for face recognition? Research results refute this. Specifically, for line drawings it has been shown that images containing only contours are poorly recognized (47% correct recognition for line drawings versus 90% for the original photos); see Fig. 3.

Fig. 3 Images that contain only contours are difficult to recognize.

NATURE OF PROCESSING: PIECEMEAL OR HOLISTIC?

Result 4: Facial features are processed holistically.

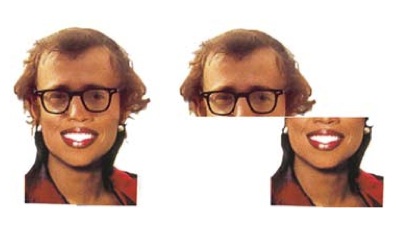

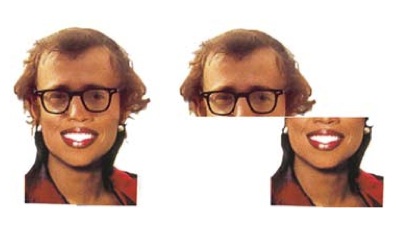

Can facial features (eyes, nose, mouth, eyebrows, etc.) be processed separately from the whole face? A person can often be identified from a very small part of the face, for example from the eyes or eyebrows alone. But if the upper half of one person's face is combined with the lower half of another's, it becomes very difficult to tell whom the parts belong to (see Fig. 4). The holistic context appears to affect how individual features are processed. This research showed that isolated facial features may be sufficient for recognition, but in the context of a whole face, the geometric relationship between a given feature and the rest of the face dominates recognition.

Fig. 4 The upper part of the face belongs to Woody Allen, and the lower part to Oprah Winfrey. Once the halves are combined, it is very difficult to guess who the individual parts belong to.

Result 5: Eyebrows are one of the most important features for recognition.

Experiments usually show that the most important facial features for recognition are, in descending order, the eyes, the mouth, and the nose. However, recent experiments in which eyebrows were digitally erased have shown that face recognition specialists clearly underestimate eyebrows. In particular, the recognition rate for faces with erased eyebrows was significantly lower than for the original portraits. How can this be explained? First, eyebrows are very important for conveying emotions. Perhaps the biological face perception system is biased from the start toward giving these features extra weight. In addition, eyebrows are a very stable feature that is robust to loss of image resolution. They sit on a protruding part of the skull and are therefore less susceptible to distortion by shadows.

Fig. 5 Sample images for testing the importance of eyebrows for facial recognition.

Result 6: The important configural relationships are independent across the width and height dimensions.

Many face recognition systems use precise measurements of attributes such as the distance between the eyes, the width of the mouth, and the length of the nose. In the biological system, however, these absolute dimensions do not seem to matter much. This is shown by studies of recognition rates on distorted images. For example, face images can be strongly compressed in width (Fig. 6) without loss of recognition quality. Obviously, such distortions completely defeat algorithms based on absolute sizes or on ratios between a size measured along the x axis and a size measured along the y axis. Ratios of dimensions measured along the same axis, however, remain unchanged under such distortions. Perhaps the biological system encodes such ratios in order to recognize faces successfully when the head turns.

Fig. 6 Even strong compression in width (here to 25% of the original width) does not interfere with the recognition of celebrity faces.
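The arithmetic behind this observation can be checked on a toy example. The landmark coordinates below are invented for illustration, and `compress_width` and `measurements` are hypothetical helpers; the sketch only shows which quantities survive a 25% width compression.

```python
# Hypothetical landmark coordinates (x, y) in pixels; the values are made up.
landmarks = {
    "left_eye": (30.0, 40.0),
    "right_eye": (70.0, 40.0),
    "mouth_left": (38.0, 80.0),
    "mouth_right": (62.0, 80.0),
}


def compress_width(points, factor):
    """Scale every x coordinate by `factor`, leaving y untouched."""
    return {name: (x * factor, y) for name, (x, y) in points.items()}


def measurements(points):
    eye_dist = points["right_eye"][0] - points["left_eye"][0]
    mouth_width = points["mouth_right"][0] - points["mouth_left"][0]
    eye_to_mouth = points["mouth_left"][1] - points["left_eye"][1]
    return {
        "eye_dist": eye_dist,                         # absolute size along x
        "mouth_over_eye": mouth_width / eye_dist,     # ratio within the x axis
        "eye_over_height": eye_dist / eye_to_mouth,   # ratio across the x and y axes
    }


original = measurements(landmarks)
squeezed = measurements(compress_width(landmarks, 0.25))

for key in original:
    print(f"{key}: original={original[key]:.3f} squeezed={squeezed[key]:.3f}")
```

Only `mouth_over_eye`, the ratio taken within a single axis, comes out the same before and after the compression; the absolute distance and the cross-axis ratio both shrink by a factor of four.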

NATURE OF THE CUES USED: PIGMENTATION, SHAPE AND MOTION

Result 7: Face shape is encoded in a slightly caricatured form.

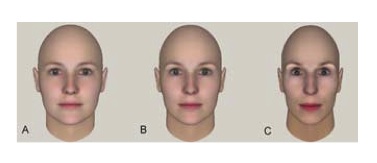

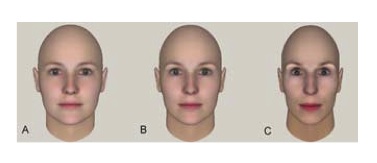

Intuitively, it seems that to recognize faces successfully the human visual system must encode faces exactly as they look. Errors in stored face representations should obviously weaken the match between new images and old ones. However, experiments have shown that some departures from the truth actually help face recognition. Namely, caricatured face images are recognized as well as, or better than, undistorted faces.

Caricatures can exaggerate individual deviations in shape alone, or deviations in both shape and pigmentation (Fig. 7). In both cases, subjects showed a small but consistent advantage in recognition, and not only for faces but also for other objects.

These results can be interpreted as follows. There is a space of normal images (a "face space"). Since caricatures exaggerate a face's individual features, the individual deviations of a face from the norm appear to play an increased role in recognition. This suggests an interesting strategy for algorithm developers.

Fig. 7 Example of a caricature. (A) An average female face computed over a population. (B) The true image of a particular person. (C) A face artificially distorted in shape and pigmentation to exaggerate the differences between the specific person and the average face. Such distorted images showed a higher recognition rate than true images.
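One way to read this "face space" interpretation is as simple vector arithmetic: a caricature scales a face's deviation from the average face. The sketch below assumes faces are already encoded as short feature vectors; the vectors, the `caricature` function, and the exaggeration factor `k` are illustrative assumptions, not the method used in the cited experiments.

```python
def caricature(face, mean_face, k):
    """Scale the deviation of `face` from `mean_face` by factor k.

    k = 1 returns the face unchanged; k > 1 exaggerates its distinctive traits.
    """
    return [m + k * (f - m) for f, m in zip(face, mean_face)]


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


# Made-up feature vectors (for example, normalized eye spacing, nose length, mouth width).
mean_face = [0.50, 0.30, 0.20]
face = [0.55, 0.27, 0.22]

exaggerated = caricature(face, mean_face, k=1.5)

print("distance of the true face from the mean:", distance(face, mean_face))
print("distance of the caricature from the mean:", distance(exaggerated, mean_face))
```

The caricature lies on the same line through the average face as the original, only farther out, which is what makes it easier to tell apart from other faces in this simplified picture.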

Result 8: Prolonged viewing of a face can cause high-level aftereffects, which suggests prototype-based encoding.

Aftereffects (optical illusions) that arise after prolonged viewing of an “adapting” stimulus (image) have generated many hypotheses about the neural processing of simple visual attributes such as motion, orientation, and color. Recent studies have shown that adaptation can produce powerful aftereffects for much more complex stimuli, such as face images.

The existence of an aftereffect after looking at a face image for a long time points to face coding based on normalization and contrast. The aftereffect can show up simply as perceiving a face as distorted in the direction opposite to the stimulus, or it can produce a more complex “anti-face” effect for a specific person without any obvious distortion (Fig. 8). (Translator's note: strike me down if anyone understood anything from this translation!) This suggests that there are several dimensions along which neuron populations can be tuned. Moreover, it may mean that these complex aftereffects result from adaptation in the higher areas of the visual cortex.

Fig. 8 Faces and their associated "anti-faces" in a schematic face space. Prolonged viewing of the face marked with a green circle causes the central face to be mistakenly identified as the individual marked with a red circle, which lies on the same axis as the original stimulus (green).

Result 9: Pigmentation properties are no less important than shape properties.

Faces can differ in shape and in their light reflectance properties, which we will call pigmentation. Research has aimed to find out which matters more for face recognition: shape or pigmentation. Sets of faces were created that differed from each other only in shape or only in pigmentation, using, for example, laser scans of faces, artificial face models, or morphs of face photographs. It turned out that the recognition rate did not depend on which property was varied, which means that both classes of cues (the geometric properties of shape, or the combination of color, reflectance, etc.) are equally important for face recognition. It follows that taking pigmentation into account in artificial face recognition systems should improve recognition quality.

Fig. 9 The faces in the bottom row are laser scans that differ in both shape and pigmentation. The faces in the middle row differ only in pigmentation, not in shape. The faces in the top row differ in shape, but not in pigmentation.

Result 10: Color cues play an important role when shape cues are degraded.

The luminance structure of face images is of course very important for recognition, and luminance alone (i.e. a monochrome image) is sufficient for adequate face recognition. However, studies have shown that the view that color information is unimportant for recognition contradicts the observed facts. When shape cues are unreliable (for example, at reduced resolution), the brain uses color information to recognize faces successfully. In such cases the recognition rate is significantly higher than for monochrome images. One hypothesis about how color is used assigns it a diagnostic role: for example, the color of a person's skin or hair can point us to the right answer. The second possibility is that color improves low-level image processing, such as segmenting an image into regions.

Fig. 10 Examples of how color can facilitate low-level image processing tasks. (A) The color distribution (right-hand images) makes it possible to determine region boundaries, and therefore shape properties, more accurately than the luminance distribution (monochrome images in the center). (B, C) Note how much more clearly the shape of the hairline is defined by the color distribution than by the monochrome image.
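The segmentation argument can be illustrated with two regions that have nearly the same brightness but clearly different colors. In the sketch below the two RGB values are invented for the example, and brightness is approximated with the commonly used Rec. 601 luma weights; it is a toy comparison, not a segmentation algorithm.

```python
def luma(rgb):
    """Approximate brightness of an RGB color using the Rec. 601 luma weights."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def color_distance(a, b):
    """Euclidean distance between two colors in RGB space."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


# Two made-up region colors: a reddish "skin" tone and a greenish "background",
# chosen so that their brightness is almost identical.
skin = (200.0, 120.0, 100.0)
background = (100.0, 171.0, 100.0)

print("brightness difference:", abs(luma(skin) - luma(background)))
print("color difference:", color_distance(skin, background))
```

In a monochrome image the boundary between these two regions would almost vanish, while in color it remains easy to find.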

Result 11: Contrast inversion (a negative) of the image significantly reduces the face recognition rate, possibly because it distorts pigmentation cues.

Anyone who has worked with photography knows how hard it is to recognize even very familiar faces on negative film. Although all shape information remains unchanged, the strong and unnatural distortion of pigmentation makes recognition difficult, which clearly indicates that the human brain actively uses pigmentation cues to recognize faces.
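The claim that a negative keeps the shape information while changing the pigmentation can be checked on a tiny example. The 2x4 "image" and the crude `horizontal_edges` measure below are assumptions chosen for brevity; they stand in for a real photograph and a real edge detector.

```python
def negative(image, max_value=255):
    """Invert the contrast of a grayscale image given as a list of rows."""
    return [[max_value - p for p in row] for row in image]


def horizontal_edges(image):
    """Absolute difference between horizontally adjacent pixels (a crude edge map)."""
    return [[abs(row[x + 1] - row[x]) for x in range(len(row) - 1)] for row in image]


# Tiny made-up grayscale patch with a bright region on the left and a dark one on the right.
image = [
    [200, 200, 60, 60],
    [200, 180, 60, 40],
]
inverted = negative(image)

# Edge strength (where the contours are) is identical in both images...
print(horizontal_edges(image) == horizontal_edges(inverted))
# ...while the brightness of each region is flipped.
print(image[0][0], inverted[0][0])
```

A recognizer that relied on contours alone would therefore treat the negative and the original as the same input, unlike human observers.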

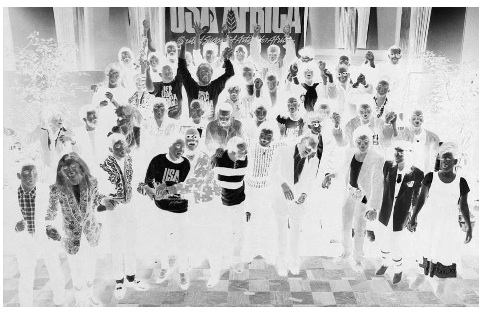

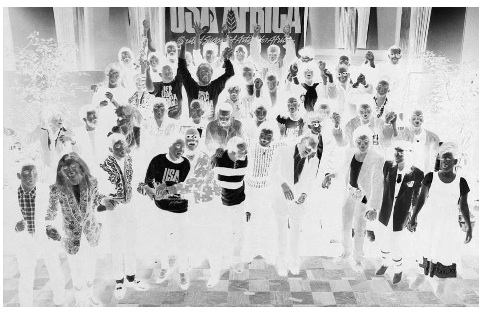

Fig. 11 This negative shows several well-known singers; try to recognize them (the photo was taken during the recording of the song We Are the World).

Result 12: Changes in lighting affect generalization.

Some computational recognition models require a face to be seen under a variety of lighting conditions in order to build a reliable representation (memory) of it. People, however, can generalize their representation of a face to radically different lighting conditions. In the experiment, subjects were shown a face model obtained by laser scanning and lit from one side. They were then shown a model lit from a completely different side and asked whether it was the same person. The recognition rate was significantly above chance, although lower than when the faces were lit from the same side.

Fig. 12 The same face, illuminated on the left and on the right.

Result 13: Generalization across viewpoints is achieved through temporal associations.

Recognizing familiar faces from different viewpoints is a very hard computational task, yet the human brain solves it with ease. Even though images of the same person from different viewpoints differ far more than images of different people taken from the same viewpoint, people are able to link the images of the same person correctly.

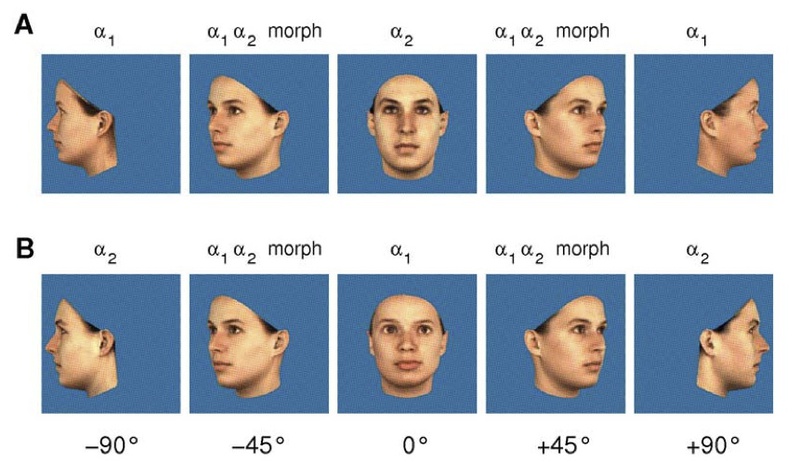

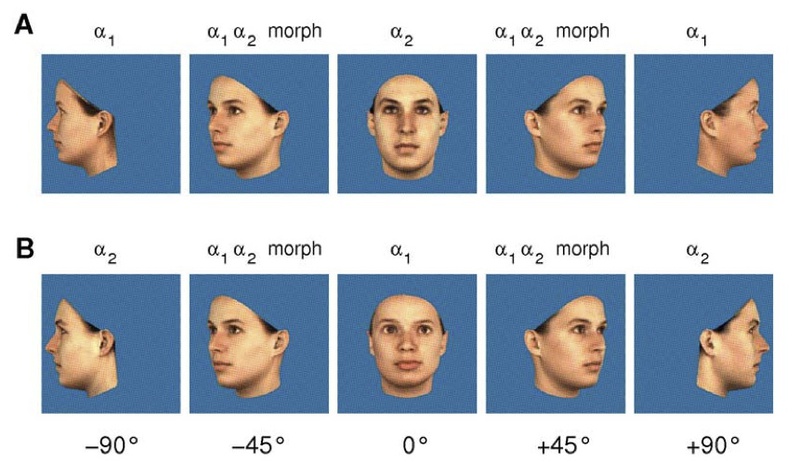

The hypothesis is that temporal associations are the “glue” that binds images of a person from different viewpoints into a single whole.

In the experiments, subjects were shown videos in which a face rotated and was simultaneously morphed from one face into another. Such stimuli significantly impaired the subjects' ability to identify individuals correctly. This suggests that viewing image sequences creates temporal associations.

Fig. 13 Rotation with simultaneous morphing from face a1 to face a2 and back to a1.

Result 14: Movement of faces improves recognition.

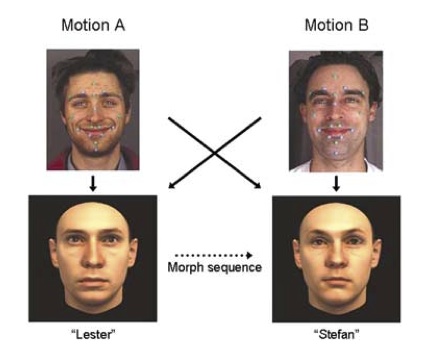

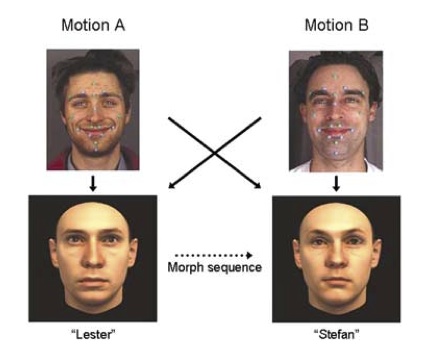

Face motion improves recognition under certain conditions. Rigid motion, such as rotating the camera around a stationary head, improves the recognition of familiar faces but gives no advantage when memorizing new ones. Non-rigid motion, however, such as changes of emotional expression or the facial movements of speech, plays a big role. This means that the dynamic properties of faces, expressed in non-rigid motion, help the brain determine facial structure more accurately and improve recognition quality.

Fig. 14 The motion of emotional expressions and speech was transferred between faces by morphing, as shown by the arrows. Subjects made errors in identifying the original faces when, for example, the lip movements of “Stefan” were superimposed on “Lester”.

DEVELOPMENT OF THE VISUAL SYSTEM

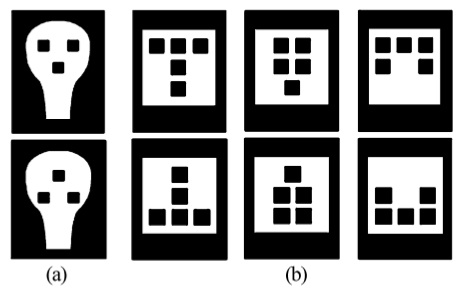

Result 15: The visual system starts out with a rudimentary preference for face-like patterns.

Does the human visual system have specific innate preferences? The answer to this question should help computer vision researchers choose between two alternatives: 1) hard-coding specific face templates into the face recognition system; or 2) generating implicit templates through a learning process, whether those templates are specific to faces or apply to any objects.

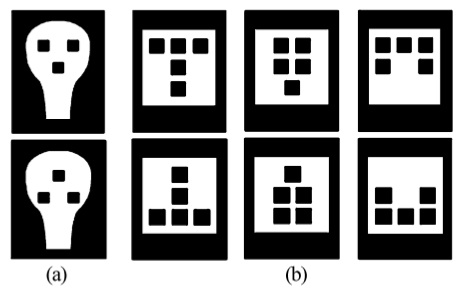

Newborns selectively attend to face-like patterns within the first hours after birth. The pattern may be as simple as three dots inside an oval, standing for the eyes and the mouth (Fig. 15a). An inverted pattern that cannot represent a face (an inverted triad of dots in the face oval) does not attract newborns' attention. Later studies showed that newborns prefer “top-heavy” patterns to bottom-heavy ones (Fig. 15b). It is therefore unclear whether this is a general property of the visual system or one specific to face recognition.

The simplest three-dot pattern can be used as an initial stage in face detection and recognition systems.

Fig. 15 (A) Newborns fix their gaze on the upper pattern more often than on the lower one. (B) Newborns prefer patterns with more elements in the upper part.
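As a rough sketch of what such an initial stage might look like, the function below checks whether three dark blobs form an upright "two eyes above one mouth" triad. The function name, the `tolerance` parameter, and the blob coordinates are all hypothetical; a real detector would first have to find the blobs in the image.

```python
def looks_like_face_triad(blobs, tolerance=0.25):
    """Return True if three blob centers form an upright "two eyes, one mouth" triad.

    blobs: list of three (x, y) centers in image coordinates (y grows downward).
    tolerance: allowed misalignment, as a fraction of the distance between the eyes.
    """
    if len(blobs) != 3:
        return False
    by_height = sorted(blobs, key=lambda p: p[1])
    top_two, bottom = by_height[:2], by_height[2]
    left, right = sorted(top_two, key=lambda p: p[0])
    eye_dist = right[0] - left[0]
    if eye_dist <= 0:
        return False
    # The two upper blobs should be roughly level with each other.
    eyes_level = abs(left[1] - right[1]) <= tolerance * eye_dist
    # The third blob should sit clearly below both of them...
    mouth_below = bottom[1] - max(left[1], right[1]) > tolerance * eye_dist
    # ...and roughly midway between them horizontally.
    mouth_centered = abs(bottom[0] - (left[0] + right[0]) / 2) <= tolerance * eye_dist
    return eyes_level and mouth_below and mouth_centered


upright = [(30, 40), (70, 40), (50, 80)]    # two dots above, one below
inverted = [(30, 80), (70, 80), (50, 40)]   # the same triad turned upside down

print(looks_like_face_triad(upright))
print(looks_like_face_triad(inverted))
```

Candidates that pass such a cheap test could then be handed to a more expensive recognition stage.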

Result 16: The visual system progresses from a piecemeal strategy to a holistic strategy during the first years of life.

Normal adults are remarkably poor at recognizing faces in upside-down images, while having no difficulty recognizing other inverted objects, such as houses. Studies have shown that this property develops over several years. Six-year-old children show no drop in recognition rate for inverted faces; in eight-year-olds the ability is already somewhat reduced; ten-year-olds already behave like adults in this respect. In the experiments, researchers manipulated the distances between individual elements of face images and swapped in individual elements (for example, eyes) from different people. The results showed that the face recognition strategy develops during the first years of life, from a piecemeal strategy based on individual features to a holistic one that uses configural information.

Fig. 16 Six-year-old children recognize upright and inverted faces equally well. With age, recognition of upright faces improves significantly, but recognition of inverted faces does not. Horizontal axis: age; vertical axis: percentage of correct recognition. Left: data for faces; right: data for houses.

NEURAL BASES

Result 17: The human visual system appears to devote specialized areas of the cortex to face recognition.

Studies have shown that there is an area of the cerebral cortex that responds strongly and selectively to images of human and animal faces and only weakly to images of arbitrary objects and even to schematic drawings of faces (Fig. 17). This may suggest to designers of computer vision systems the range of generalization and selectivity mechanisms found in a biological system that objectively performs well.

Fig. 17 The upper left corner shows the location of the FFA (fusiform face area) in the right hemisphere of the brain. The rest of the figure shows examples of visual stimuli and the FFA responses to them. Photos of a human face and of a cat evoked a strong response, while a schematic face and an arbitrary object evoked a weak response.

Result 18: The response latency of the inferotemporal cortex to a face image is about 120 ms, which probably means the processing is largely feedforward.

Reaction-time studies include a significant delay from the motor component (for example, the subject must press a button on seeing a face). When neural markers of recognition are used instead, even a task as hard as detecting the presence of an animal in a natural scene takes 50 ms. Some cells in the inferotemporal (IT) cortex are face-selective. The response latency of these cells lies in the range of 80-160 ms. From a computational point of view, this may mean that image processing up to the IT cortex is performed in a single feedforward pass, without feedback or iteration. Processing noisy images may take longer.

Fig. 18 An example of the response of a monkey IT cell to various face stimuli. The cell responds consistently to primate face images at varying degrees of degradation, as well as to a human face. The weak response to an image of a hand means that the cell does not respond to other body parts and is specific to faces.

Result 19: Face identification and facial expression recognition are likely performed by different systems.

Can information about facial expression be extracted independently of a person's identity, or are the two interrelated? Behavioral studies, electrophysiological studies in animals, and neuroimaging show that these two tasks separate at the very beginning of the face processing pathway, and that separate brain areas are responsible for identification and for emotions.

As usual, I offer an abridged translation, the full text is available in the original .

')

INTRODUCTION

Despite significant efforts to develop facial recognition algorithms, a system has not yet been created that can work without artificial limitations, taking into account all possible variations of image parameters, such as sensor noise, distance to an object, and light level. The only system that does its job well is human vision. Therefore, it is useful to study the strategies that this biological system uses and try to use them in the development of artificial algorithms. 19 important research results are proposed that do not claim to be the complete theory of facial recognition, but give important clues to the developers of computer vision systems. These 19 results are collected from various publications of many scientific groups, and the original article contains links to these publications.

RECOGNITION AS A FUNCTION OF SPATIAL RESOLUTION

Result 1: People are able to recognize familiar faces in very low resolution images.

Progress in the development of high-resolution video sensors provokes the use of an increasing number of small parts for face recognition in computer vision systems. An example of this approach is iris recognition. Obviously, such algorithms do not work in the absence of high-definition images. This problem is especially relevant when face recognition is required at a considerable distance. Turn to human vision. How does the accuracy of facial recognition on the image resolution? It turns out that people maintain the accuracy of recognizing familiar faces on images smoothed to the size of 16x16 blocks. Recognition accuracy of over 50% is preserved when smoothed to an equivalent size of 7x10 pixels (see Fig. 1), and becomes almost equal to the maximum possible value at a resolution of 19x27 pixels.

Fig. 1 People are able to recognize more than half of familiar faces with the resolution shown in this figure. Here are depicted: 1 - Michael Jordan, 2 - Woody Allen, 3 - Goldie Hawn, 4 - Bill Clinton, 5 - Tom Hanks, 6 - Saddam Hussein, 7 - Elvis Presley, 8 - Jay Leno, 9 - Dustin Hofman, 10 - Prince Charles, 11 - Cher, 12 - Richard Nixon.

Result 2: The ability to ignore the degradation of images increases with the degree of familiarity.

The ability to compensate for the degradation of the resolution of images strongly depends on the degree of familiarity with the subject. Demonstrated a low recognition rate of strangers in two different photos of the same subject, and on the other hand, a high percentage of recognition of images of colleagues at work when observing images from low-quality surveillance cameras. At the same time, the figure and gait turned out to be much less informative than the image of faces, despite their extremely low resolution. This is proved by the fact that when a figure is shielded, but the face is left, the accuracy of recognition drops slightly, but with the opposite effect the accuracy decreases significantly (see Fig. 2).

Fig. 2 Images from the video used in the study. (a) the original image, (b) the subject’s body is closed, (c) the face is closed.

Result 3: High-frequency information by itself does not guarantee high quality recognition.

The traditional approach to recognition is largely based on the use of contour extraction algorithms. Contour is considered to be an invariant under various lighting conditions. In the context of biological facial recognition, contour (vector) images are usually sufficient for face recognition. Pencil sketches and cartoons are often easily recognizable. Does this mean that high-frequency spatial images are crucial, or at least sufficient for face recognition? Research results refute this. Specifically for “vector” drawings, it is shown that images that contain only contours are poorly recognizable (correct recognition in 47% of vector drawings versus 90% of the original photos) - see fig. 3

Fig. 3 Images that contain only contours are difficult to recognize.

NATURE TREATMENT: FRAGMENTARY OR COMPLETELY?

Result 4: Facial features are treated as one.

Can facial features (eyes, nose, mouth, eyebrows, etc.) be processed separately from the whole image? Individuals can often be identified by a very small part, for example, only by the eyes or eyebrows. But if the upper half of one person is combined with the lower half of another person, it is very difficult to know to whom these parts belonged (see Fig. 4). The holistic context seems to affect the way individual features are processed. This study showed that separately taken facial features may be sufficient for recognition, but in the context of the whole face, the geometric relationship between the facial feature taken and the rest of it prevails in recognition.

Fig. 4 The upper part of the face belongs to Woody Allen, and the lower - to Oprah Winfrey. When combining it is very difficult to guess who owns the same parts of the face.

Result 5: Eyebrows are one of the most important features for recognition.

Most often, the results of experiments show that the most important facial features for recognition are, in descending order, the eyes, the mouth and the nose. However, recent experiments with digital eyebrow wiping have shown that eyebrows are clearly underestimated by face recognition specialists. In particular, the percentage of recognized people with erased eyebrows was significantly lower than the percentage of recognition of the original portraits. How can this be explained? First, eyebrows are very important for the transmission of emotions. Perhaps the biological system of perception of individuals is initially shifted to give increased importance to these facial features. In addition, eyebrows are very stable element resistant to degradation of image resolution. Eyebrows are located on the protruding part of the skull, and therefore less susceptible to distortion from the shadows.

Fig. 5 Sample images for testing the importance of eyebrows for facial recognition.

Result 6: Significant configuration ratios do not depend on the width / height dimensions.

Many face recognition systems use accurate measurements of attributes, such as the distance between the eyes, the width of the mouth, and the length of the nose. However, in the biological system, it seems that these dimensions are not very important. This is proved by the results of studies of the percentage of face recognition in distorted images. For example, face images can be strongly distorted in width (Fig. 6) without loss of recognition quality. Obviously, the distortions completely knock down algorithms based on measuring the absolute sizes and ratios of sizes along the x and y axes. With such distortions, the ratio of the dimensions along the axis remains unchanged. Perhaps the biological system encodes such ratios to successfully recognize faces when turning the neck.

Fig. 6 Even strong distortions in width (here width was 25% of the original) do not interfere with the recognition of celebrity faces.

NATURE OF KEYS USED: PIGMENTATION, FORM AND MOVEMENT

Result 7: Face shapes are encoded in a slightly caricature form.

Intuitively, it seems that in order to successfully recognize faces, a person’s visual system must encode what they see exactly what they look like. Errors in saved face images obviously weaken the potential coincidence of new images with old ones. However, experiments have shown that some distortions of truth play a positive role in the recognition of faces. Namely, caricature images of faces ensure the quality of recognition equal or superior to the level of recognition of undistorted faces.

Caricatures can exaggerate individual shape deviations or combine shape deviations and pigmentation (Fig. 7). In both cases, the subjects demonstrated a small but stable superiority of the level of recognition, not only of face recognition, but also of other objects.

These results can be interpreted in this way. There is a space of normal images ("space of persons"). Since cartoons distort individual facial features, individual deviations of the face from the normal play an increased role in recognizing. This gives an interesting strategy to the developers of the algorithms.

Fig. 7 Example of a cartoon image. (A) Female population averaged over the population. (B) The true image of a particular person. (C) A person artificially distorted in form and pigmentation exaggerates the differences between a specific person and an average person. Such distorted images showed a higher recognition rate than true images.

Result 8: Prolonging the face can cause high-level effects, which means it can be prototyped.

After-effects (optical illusions), which occur after a prolonged peering into an “adapting” stimulus (image), have generated many hypotheses about the neural processing of simple visual attributes, such as movement, orientation, and color. Recent studies have shown that adaptation can cause powerful after-effects on much more complex stimuli, such as facial images.

The existence of an after-effect after looking at the face image for a long time indicates the coding of faces based on rationing and contrasting. The effect of the aftereffect can be expressed simply in the perception of a person distorted in the opposite direction with respect to the stimulus, or it can produce a complex effect of the “anti-face” of a specific person without obvious distortions (Fig. 8). <NOTE THE TRANSLATOR break the thunder of me, if someone understood something from this translation!> This suggests that there are several dimensions along which neuron populations can be tuned. Moreover, this may mean that these complex aftereffects are the result of the adaptation of the high parts of the visual cortex.

Fig. 8 Persons and from associated "anti-faces" in the schematic space of individuals. Prolonged gazing at a face marked with a green circle causes the central face to be mistakenly identified as the face of an individual marked with a red circle on the axis on which the original stimulus (green) is located.

Result 9: Pigmentation properties are no less important than shape properties.

Faces can vary in shape and in their light-reflection properties, which we will call pigmentation. Research has aimed to find out which matters more for face recognition: shape or pigmentation. Sets of faces were created that differed from each other only in shape or only in pigmentation, for example, laser scans of faces, artificial face models, or morphs of face photographs. It turned out that the recognition rate did not depend on the type of modification, which means that both classes of cues (shape properties, or a combination of color, reflectance, etc.) are equally important for face recognition. It follows that taking pigmentation properties into account in artificial face recognition systems should improve recognition quality.

Fig. 9 Faces in the bottom row are laser scans of faces that differ in both shape and pigmentation. Faces in the middle row differ only in pigmentation, not in shape. Faces in the top row differ in shape, but not in pigmentation.

Result 10: Color cues play an important role when shape cues are degraded.

The luminance structure of face images is, of course, very important for recognition. Luminance alone (i.e. monochrome images) is sufficient for adequate face recognition. However, studies have shown that the view that color information is unimportant for recognition contradicts the observed facts. When shape cues are degraded (for example, at reduced resolution), the brain uses color information to recognize faces successfully. In such cases, the recognition rate is significantly higher than for monochrome images. One hypothesis about how color is used is that it plays a diagnostic role: for example, the color of a person's skin or hair can point to the right answer. A second possibility is that color improves low-level image processing, such as segmentation of image regions.

Fig. 10 Examples of how color can facilitate low-level image processing tasks. (A) The color distribution (right-hand images) makes it possible to determine region boundaries, and therefore shape properties, more accurately than the luminance distribution (monochrome images in the center). (B, C) Note how the shape of the hairline is defined more clearly by the color distribution than by the monochrome image.
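
The segmentation argument can be illustrated with a synthetic example. The sketch below builds two regions that have the same luminance but different colors, so the boundary between them is invisible in the monochrome image and obvious in the color channels. The Rec. 601 luma weights are standard; the helper names, the simple color-difference channels (B - Y, R - Y), and the two region colors are assumptions chosen for illustration.

```python
import numpy as np

LUMA = np.array([0.299, 0.587, 0.114])  # Rec. 601 luma weights for R, G, B

def luminance(rgb):
    """Monochrome version of an RGB image with values in [0, 1]."""
    return rgb @ LUMA

def chroma(rgb):
    """Unscaled color-difference channels (B - Y, R - Y)."""
    y = luminance(rgb)
    return np.stack([rgb[..., 2] - y, rgb[..., 0] - y], axis=-1)

def max_horizontal_step(channel):
    """Largest jump between horizontally adjacent pixels."""
    return float(np.abs(np.diff(channel, axis=1)).max())

# Two hypothetical regions with identical luminance (0.5) but different color.
region_a = np.array([0.5, 0.5, 0.5])                      # neutral gray
region_b = np.array([1.0, (0.5 - 0.299) / 0.587, 0.0])    # saturated orange

image = np.empty((4, 8, 3))
image[:, :4] = region_a
image[:, 4:] = region_b

luma_edge = max_horizontal_step(luminance(image))   # essentially zero
chroma_edge = max_horizontal_step(chroma(image))    # clearly non-zero
```

Real face images are of course not this clean, but the same comparison of luminance and color edges could be run on photographs.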

Result 11: Contrast negation (a photographic negative) of the image significantly reduces the face recognition rate, possibly because it distorts pigmentation properties.

Everyone who has worked with film photography knows how difficult it is to recognize even very familiar faces on a negative. This indicates that, although all shape information remains unchanged, the strong and unnatural distortion of pigmentation properties makes recognition difficult; the human brain therefore actively uses pigmentation properties to recognize faces.

Fig. 11 This negative shows several well-known singers; try to recognize them (photographed during the recording of the song We Are the World).
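
A small numerical check shows why a negative is an interesting stimulus. In the sketch below, gradient magnitude is used as a crude stand-in for contour (shape) information, which is an assumption of this example rather than a claim from the original article; the random array merely stands in for a grayscale face image.

```python
import numpy as np

def negative(img):
    """Photographic negative of a grayscale image with values in [0, 1]."""
    return 1.0 - img

def edge_strength(img):
    """Gradient magnitude at every pixel."""
    gy, gx = np.gradient(img)
    return np.hypot(gx, gy)

rng = np.random.default_rng(0)
face = rng.random((16, 16))   # placeholder for a real grayscale face image
neg = negative(face)

# Edge strength is identical in the positive and the negative...
edges_preserved = np.allclose(edge_strength(face), edge_strength(neg))
# ...while the brightness pattern is perfectly anti-correlated.
brightness_corr = np.corrcoef(face.ravel(), neg.ravel())[0, 1]
```

An edge-based recognizer would therefore see the positive and the negative as nearly the same image, while any cue that depends on which regions are light and which are dark is reversed.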

Result 12: Changes in lighting affect generalization.

Some computational recognition models require the face to be seen under a variety of lighting conditions in order to form a reliable representation (memorization). People, however, are able to generalize face representations across radically different lighting conditions. In the experiment, subjects were shown a laser-scanned face model illuminated from one side. Then they were shown a model illuminated from a completely different side and asked whether both showed the same person. The recognition rate was significantly above chance, although lower than when the faces were lit from the same side.

Fig. 12 The same face, illuminated on the left and on the right.

Result 13: Generalization across viewpoints is achieved through temporal associations.

Recognizing familiar faces from different angles is a very difficult computational task, yet the human brain solves it with ease. Although images of the same person taken from different angles differ much more than images of different people taken from the same angle, people are able to link the images of the same person correctly.

It is hypothesized that temporal associations are the “glue” that binds images of people from different angles into one whole.

In the experiments, subjects were shown videos in which a face rotated while simultaneously morphing from one face into another. Such stimuli significantly impaired the subjects' ability to identify individuals correctly, presumably because views seen in close succession were linked to a single identity. This suggests that viewing sequences of images creates temporal associations.

Fig. 13 Rotation and simultaneous morphing from face a1 to face a2 and back to a1.

Result 14: Movement of faces improves recognition.

Face movement improves recognition under certain conditions. Rigid movement, such as rotating the camera around a fixed head, improves the recognition of familiar faces but gives no advantage when memorizing new ones. Non-rigid movement, such as changes of facial expression with emotion or during speech, plays a big role. This means that the dynamic properties of faces, revealed in non-rigid movements, help the brain to identify the structure of faces more accurately and improve recognition quality.

Fig. 14 Facial movements from emotional expressions and speech were morphed between faces, as shown by the arrows. Subjects made mistakes in identifying the original faces, for example, when the lip movements of “Stefan” were superimposed on “Lester”.

DEVELOPMENT OF THE VISUAL SYSTEM

Result 15: The visual system starts out with a rudimentary preference for face-like patterns.

Does the human visual system have specific initial preferences? The answer to this question should help computer vision researchers choose between two alternatives: 1) to program specific face-pattern structures into the face recognition system; or 2) to let implicit patterns emerge through learning, regardless of whether the patterns are specific to faces or apply to any objects.

Newborns selectively attend to patterns that look like faces within the first hours after birth. The pattern may be as simple as three dots in an oval, symbolizing the eyes and the mouth (Fig. 15a). An inverted pattern that cannot represent a face (an inverted triad of dots in the face oval) does not attract the attention of newborns. Later studies have shown that newborns prefer “top-heavy” images to bottom-heavy ones (Fig. 15b). It is therefore unclear whether this is a general property of the visual cortex or something specific to face recognition.

The simplest three-dot pattern can be used as an initial stage in face detection and recognition systems.

Fig. 15 (A) Newborns fix their gaze on the upper pattern more often than on the lower one. (B) Newborns prefer patterns with more elements at the top.
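
As a rough illustration of how a three-dot pattern could serve as a first detection stage, the sketch below slides a tiny template over an image and scores each position by normalized cross-correlation. The 5x5 template layout, the function names, and the synthetic scene are all assumptions made for this example; a practical detector would need multiple scales and far more robust features.

```python
import numpy as np

def three_dot_template():
    """5x5 pattern: two 'eyes' in the upper half, one 'mouth' below."""
    t = np.zeros((5, 5))
    t[1, 1] = t[1, 3] = 1.0   # eyes
    t[3, 2] = 1.0             # mouth
    return t

def ncc(patch, template):
    """Normalized cross-correlation of two equally sized arrays."""
    p = patch - patch.mean()
    t = template - template.mean()
    denom = np.linalg.norm(p) * np.linalg.norm(t)
    return float((p * t).sum() / denom) if denom > 0 else 0.0

def find_best_match(image, template):
    """Exhaustively scan the image; return (best score, top-left position)."""
    th, tw = template.shape
    best_score, best_pos = -np.inf, None
    for r in range(image.shape[0] - th + 1):
        for c in range(image.shape[1] - tw + 1):
            score = ncc(image[r:r + th, c:c + tw], template)
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_score, best_pos

template = three_dot_template()
upright_score = ncc(template, template)              # perfect match
inverted_score = ncc(np.flipud(template), template)  # upside-down triad

scene = np.zeros((12, 12))
scene[4:9, 6:11] = template                          # hide one "face" in the scene
best_score, best_pos = find_best_match(scene, template)
```

The upright triad matches the template perfectly, the inverted triad scores much lower, and the scan recovers the position where the pattern was placed.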

Result 16: The visual system progresses from a piecemeal strategy to a holistic strategy during the first years of life.

Normal adults are remarkably poor at recognizing upside-down faces, while having no difficulty recognizing other inverted objects, such as houses. Studies have shown that this property develops over several years. Six-year-old children show no drop in recognition rate for inverted face images; in eight-year-olds the ability to recognize inverted faces is already somewhat reduced; ten-year-olds already behave like adults in this regard. In the experiments, researchers manipulated the distances between individual elements of face images and substituted individual elements (for example, eyes) taken from different people. The results showed that the face recognition strategy develops during the first years of life: from a piecemeal strategy based on individual features to a holistic system that uses configural information.

Fig. 16 Six-year-old children recognize upright and inverted faces equally well. With age, the recognition of upright faces improves significantly, but the recognition of inverted faces does not. Horizontal axis: age; vertical axis: percentage of correct recognition. Left: data for face recognition; right: data for recognition of houses.

NEURAL BASES

Result 17: The human visual system appears to devote separate areas of the cortex to face recognition.

Studies have shown that there is an area of the cerebral cortex that responds strongly and selectively to images of human and animal faces, and only weakly to images of arbitrary objects and even to schematic drawings of faces (Fig. 17). This may give designers of computer vision systems a sense of the generalization and selectivity mechanisms found in biological systems that demonstrably work well.

Fig. 17 The upper left corner shows the location of the FFA (fusiform face area) in the right hemisphere of the brain. Also shown are examples of visual stimuli and the FFA responses to them. Photos of a human face and of a cat caused a strong response, while a schematic drawing of a face and an arbitrary object caused a weak response.

Result 18: The response latency of the inferotemporal cortex to face images is about 120 ms, which probably means that processing is largely feedforward.

Reaction-time studies include a significant delay from the motor component (for example, the subject must press a button upon seeing a face). When neural markers of recognition are used instead, even a task as difficult as detecting the presence of an animal in a natural scene takes 50 ms. Some cells in the inferotemporal (IT) cortex are selective for faces. The response latency of these cells is in the range of 80-160 ms. This may mean that, from a computational point of view, image processing up to the IT cortex is performed in a single feedforward pass, without feedback or iteration. Processing noisy images may take longer.

Fig. 18 An example of the response of a monkey IT cell to various face stimuli. The cell responds consistently to primate face images at varying degrees of degradation, as well as to a human face. The weak response to the image of a hand means that the cell does not respond to other parts of the body but is selective for faces.

Result 19: Face identification and facial expression recognition are likely to be performed by different systems.

Can information about facial expression be extracted independently of identifying the person, or are the two interrelated? Behavioral studies, electrophysiological studies in animals, and neuroimaging show that these two tasks are separated at the very beginning of the face-processing pathway, and that separate areas of the brain are responsible for identification and for emotions.

Source: https://habr.com/ru/post/136483/
