Taking down 30 servers in a second from a laptop?

After releasing a new version of slowhttptest with support for slow reading (Slow Read DoS attack), I helped several users test their services. One of those tests turned into an instructive story that I want to share.

I received an email asking me to look at the results of a slowhttptest run. According to the report, the program brought the service down within seconds, which seemed hard to believe: by design, the service can serve thousands of clients from all over the world, while slowhttptest is limited to thousands of connections.

To give you an idea of the service: the organization has about 60,000 registered users with the client application installed. The application periodically connects to the central server, receives instructions and sends back results (no, it's not a botnet!).

At any given time about 2,000 client applications are running, and several hundred of them are connected to the server simultaneously.

At first I was skeptical that it could be taken down from a single computer, but, since anything is possible, I asked about the architecture.

The system turned out to follow a well-known textbook design: its components were monitored, patches were installed on time, and there was nothing unusual that would let anyone overwhelm it.

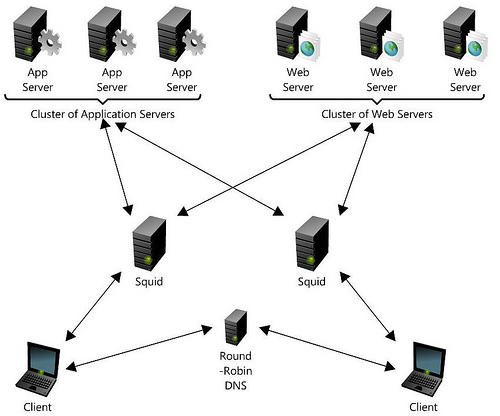

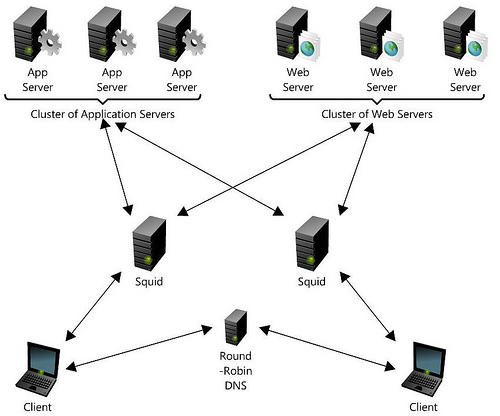

The system architecture is as follows:

- round-robin DNS acting as a load balancer, answering queries with the IP address of one or the other Squid reverse proxy

- two tuned Squid servers running on powerful dedicated machines

- web server cluster

- application server cluster
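Round-robin DNS spreads clients across the two Squids only because each answer lists the addresses in a different order. The toy model below is a sketch of that rotation, not the organization's actual DNS setup; the class name and the 192.0.2.x addresses (a documentation range) are hypothetical.

```python
from collections import deque
from typing import List


class RoundRobinZone:
    """Toy model of a DNS server that rotates the A records it hands out."""

    def __init__(self, addresses: List[str]) -> None:
        self._records = deque(addresses)

    def resolve(self) -> List[str]:
        # Answer with the current order, then rotate so the next query
        # sees a different address first.
        answer = list(self._records)
        self._records.rotate(-1)
        return answer


# Hypothetical addresses for the two Squid reverse proxies.
zone = RoundRobinZone(["192.0.2.10", "192.0.2.20"])
first_choices = [zone.resolve()[0] for _ in range(4)]
print(first_choices)
# ['192.0.2.10', '192.0.2.20', '192.0.2.10', '192.0.2.20']
```

Many clients simply connect to the first address in the answer, so successive clients tend to alternate between the proxies. A client that ignores DNS and uses one fixed address sends all of its load to a single Squid.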


I ran dig, a DNS lookup utility, got the list of IP addresses for the domain name, and added one of them to /etc/hosts to concentrate on a single Squid.
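The same two steps (list the addresses, pin one of them) can be sketched in Python. This is an illustration, not what the author ran: `resolve_ipv4` and `hosts_entry` are hypothetical helpers, and unlike dig, which queries DNS directly, `socket.getaddrinfo` goes through the system resolver.

```python
import socket
from typing import List


def resolve_ipv4(hostname: str) -> List[str]:
    """Return the distinct IPv4 addresses the system resolver gives for hostname."""
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    addresses: List[str] = []
    for _family, _type, _proto, _canonname, sockaddr in infos:
        ip = sockaddr[0]
        if ip not in addresses:  # keep resolver order, drop duplicates
            addresses.append(ip)
    return addresses


def hosts_entry(ip: str, hostname: str) -> str:
    """Format one /etc/hosts line that pins hostname to a single address."""
    return f"{ip}\t{hostname}"
```

For a hypothetical service name, `hosts_entry(resolve_ipv4("www.example.com")[0], "www.example.com")` produces the line to append to /etc/hosts. On a typical Linux setup the system resolver consults /etc/hosts before DNS, so calling `resolve_ipv4` again afterwards should return only the pinned address. This is a quick way to confirm that the override is in effect.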

I tuned slowhttptest aggressively, gave it the URL of a photo of one of the bosses, and hit start! 1000 connections, each requesting the picture 10 times and reading the server's responses.
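The exact command line isn't given in the story. As a hedged sketch, the run described above roughly corresponds to slowhttptest's Slow Read mode (`-X`), a connection count (`-c`), the number of times the request is repeated per connection (`-k`) and the target URL (`-u`). These flags are from slowhttptest's own help as I recall it, so check `slowhttptest -h` for your version. The URL and the helper name are hypothetical, and the read-window and read-rate options that "aggressive tuning" would adjust are left at their defaults here.

```python
import shlex
from typing import List


def slow_read_command(url: str, connections: int = 1000, repeats_per_connection: int = 10) -> List[str]:
    """Build a slowhttptest argument list for a Slow Read test (sketch)."""
    return [
        "slowhttptest",
        "-X",                               # Slow Read mode
        "-c", str(connections),             # number of connections to open
        "-k", str(repeats_per_connection),  # repeat the request this many times per connection
        "-u", url,                          # resource to fetch
    ]


# Hypothetical URL standing in for the photo served by the pinned Squid.
cmd = slow_read_command("http://www.example.com/boss.jpg")
print(shlex.join(cmd))
# slowhttptest -X -c 1000 -k 10 -u http://www.example.com/boss.jpg
```

Building the argument list rather than a single string keeps the URL from being re-split by a shell. Passing `cmd` to `subprocess.run(cmd, check=True)` would launch the test, so only do that against a service you are authorized to test.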

And it really was down! I rechecked from a browser through a proxy to make sure it wasn't just my IP being banned, and that's when this guy turned up, demanding that I call off the attack.

In short, it turned out to be a simple configuration error: the operating system (Red Hat Enterprise Linux, by the way) limits the number of open file descriptors per process to 1024, and Squid simply ran into that limit.
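To see why 1000 slow connections are enough, it helps to count descriptors. The sketch below reads the current per-process limit with Python's Unix-only `resource` module and makes a rough capacity estimate. The assumption that each proxied request holds two descriptors (the client socket plus an upstream socket on a cache miss) is mine, as is the `reserved` allowance for logs, cache files and listening sockets. The story doesn't say how Squid's descriptors were actually split.

```python
import resource
from typing import Tuple


def open_file_limits() -> Tuple[int, int]:
    """Return the (soft, hard) per-process limit on open file descriptors."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


def max_proxied_clients(fd_limit: int, fds_per_client: int = 2, reserved: int = 0) -> int:
    """Rough ceiling on simultaneous clients a single-process proxy can hold.

    Assumes each proxied request holds one client socket plus one upstream
    socket, and that `reserved` descriptors go to logs, cache files, etc.
    """
    return max(fd_limit - reserved, 0) // fds_per_client


soft, hard = open_file_limits()
print(f"this process: soft={soft}, hard={hard}")
print(max_proxied_clients(1024))  # 512
```

Under these assumptions, a 1024-descriptor limit leaves room for only about 500 clients that hold their connections open, so 1000 slow readers exhaust it. Even at one descriptor per client, 1000 connections plus Squid's own files come close. The usual fix is to raise the limit for the Squid process (for example with `ulimit -n` in its startup environment or, in Squid builds that support it, the `max_filedescriptors` directive). Which knob applies depends on the Squid version and how the service is started.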

The moral of the story, besides good triumphing over evil, is that a system is only as secure as its weakest link. Nasty surprises can lurk anywhere, so you cannot rely on the architecture being "correct". It is also not enough to install patches on time; the system should be assessed from the outside as well. In particular, if a system handles 10,000 connections per minute, that does not mean it will withstand 1,000 connections over 6 seconds if, for example, each connection lives for 6 seconds.
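The last point can be made concrete with Little's law, a general queueing result brought in here for illustration. It states that the average number of simultaneously open connections $L$ equals the arrival rate $\lambda$ times the connection lifetime $W$. The 6-second lifetime is the example above; the 0.5-second lifetime is a hypothetical figure for ordinary, fast clients:

$$
L = \lambda W, \qquad \lambda = \frac{10\,000}{60\ \text{s}} \approx 166.7\ \text{s}^{-1}
$$

$$
W = 6\ \text{s} \;\Rightarrow\; L \approx 1\,000, \qquad W = 0.5\ \text{s} \;\Rightarrow\; L \approx 83
$$

The same per-minute count can therefore mean roughly 83 or roughly 1,000 connections open at once, depending only on how long each one lives. It is the concurrent count that runs into a per-process descriptor limit.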

What to do if 20,000 clients connect to you at the same time is a topic for another discussion.


Source: https://habr.com/ru/post/136127/
