Opening the cloud to new customers

News in one line:

Cloud launched

We have reopened virtual machine creation and are ready to accept new users into our cloud, in a new pool. Same prices, more features.

Key changes:

- New cluster storage

- Updated virtual machine templates on LVM, which simplifies disk resizing

- Snapshots

- Improved control panel performance

That is the end of the announcement proper; what follows is the backstory.

What have we been doing these 3 months?


Or, more precisely, what went wrong?

Python is a great language, known as an excellent tool for rapid development. Writing a working prototype or a proof of concept in it: yes. Writing an application that spends more time in library code than in its own computations: yes.

Writing multithreaded applications in it that handle tens of thousands of transactions per second with reasonable CPU usage is also possible... up to a point. After that, architectural problems set in, and fighting them starts to take more time than writing new code.

That is exactly the situation we ended up in. Bottlenecks surfaced in several components that had been “written and forgotten” (that is, no further development was planned for them in the near future). For a while we “had fun” optimizing the code and discussed moving parts of it into C libraries with Python bindings... until we realized that problems were piling up faster than we could solve them. And that despite nearly doubling the number of developers this autumn.

New users experimenting and playing with the functionality added extra load (we have nothing against that, just not when the system is already on the verge). When some API requests started taking 10 s instead of the target 0.1-0.2 s (some of you may have noticed how slowly the control panel rendered at the time, with completely unpredictable delays), we decided to stop the creation of new machines.

First, this reduced the load from new customers. Second, once their machines are created, users rarely visit the control panel, so the number of control panel requests dropped significantly.

Once the load had stabilized, after some extremely bloody battles, the functional camp defeated the procedural camp. In other words, we turned toward functional programming languages.

Namely, the Haskell programming language.

Yes, yes, that scary, scary language, the one whose articles run out of code somewhere around page 5-10, after which the higher mathematics begins.

Personally, I was a staunch opponent of adopting this language. However, its supporters' arguments convinced me: strict static typing, type inference, pattern matching (with exhaustiveness checking)...

Add to this the practical properties: it is a compiled language (read: to run a program you only need the executable, no separate runtime environment); it has a long history (20+ years) with its teething troubles long behind it; and it has plenty of libraries for every occasion (which, by the way, is what settled the “Haskell or OCaml” question). Finally, I was struck that in a simple IO and math test a Haskell program ran neck and neck with a C program, and “neck and neck” with gcc optimizations enabled.

Is programming in Haskell fast? No. In my experience, writing a program takes about three times longer than in Python. The real difference comes afterward, though: where we used to roll out 3 to 10 bugfixes on average for a Python program, the Haskell programs we currently run need practically none. Most changes come from changes in the requirements, not from reacting to yet another “object has no attribute foobar” in the log.

We used to write a lot of code in Python; now we write a little code in Haskell.

Have we rewritten everything in Haskell? Alas, no. The process goes on as needs arise, and most of our code is still in Python, but as the architecture changes we plan to rewrite the rest in Haskell.

And as a final chord: our API server is still written in Python, and it was this “fast” Python that caused the two-week delay in the launch, because we had to repeatedly and painfully recheck every behavior of the new functions that Python, as a dynamically typed language, could not check for us.
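A minimal, hypothetical Python illustration of the class of bug meant here (the `Machine` class and `handle_action` function are invented for the example): the module loads and the common path works, so a call to a method that does not exist goes unnoticed until a request actually reaches that branch. A static checker such as mypy would flag `machine.reboot()` thanks to the `Machine` annotation, which is the kind of check a compiler for a statically typed language performs on every build.

```python
class Machine:
    """Hypothetical stand-in for a VM object in an API server."""

    def start(self) -> str:
        return "started"


def handle_action(action: str, machine: Machine) -> str:
    if action == "start":
        return machine.start()
    if action == "reboot":
        # Bug: Machine has no 'reboot' method. Python only discovers this
        # when this branch runs, raising AttributeError at request time.
        return machine.reboot()
    raise ValueError(f"unknown action: {action}")


print(handle_action("start", Machine()))  # started
try:
    handle_action("reboot", Machine())
except AttributeError as err:
    print(err)
```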

...

However, while programmers were sharpening their style, the system administration department did not sit still.

Cluster storage

One of our shortcomings was the use of non-clustered storage. The data was safe, but there was a chance of running into uptime problems. Not a big chance, but it existed.

The combination of drbd, flashcache and multipath has, I hope, solved this problem. Data is stored on RAID 10, and every node of the cluster holds a complete copy of all data. If a node dies, clients either notice nothing or, in the worst case, see a one-time 5-10 second stall in disk operations, after which multipath finds an alternative path and requests continue to be processed normally.
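The failover behavior described above can be pictured with a toy Python model. This is not how dm-multipath is implemented (it runs in the kernel and relies on I/O errors and path checks to detect dead paths); it only shows the idea: I/O goes down the active path, and when the node behind it is gone, requests switch to another path to a node holding a full copy of the data. The `StoragePath` and `ToyMultipath` names are hypothetical, and the model ignores failure-detection time, which is presumably where the 5-10 second stall comes from.

```python
from __future__ import annotations


class StoragePath:
    """One route to the storage, e.g. via a particular cluster node (hypothetical)."""

    def __init__(self, node: str, alive: bool = True):
        self.node = node
        self.alive = alive


class ToyMultipath:
    """Send each request down the active path; on failure, fail over."""

    def __init__(self, paths: list[StoragePath]):
        self.paths = paths
        self.active = 0  # index of the path currently in use

    def submit(self, request: str) -> str:
        # Try each path at most once, starting from the active one.
        for _ in range(len(self.paths)):
            path = self.paths[self.active]
            if path.alive:
                return f"{request} served by {path.node}"
            # Dead path: switch to the next one and keep using it afterwards.
            self.active = (self.active + 1) % len(self.paths)
        raise OSError("no healthy path to storage")


node_a, node_b = StoragePath("node-a"), StoragePath("node-b")
mp = ToyMultipath([node_a, node_b])
print(mp.submit("read block 42"))  # read block 42 served by node-a
node_a.alive = False               # node A dies
print(mp.submit("write block 7"))  # write block 7 served by node-b
```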

Templates

The key, fundamental change was the move to the 3.1-xen kernel (except for CentOS, which does things its own way). Along with it, reluctantly, we allowed users to boot kernels from inside the machine rather than “ours”. Why reluctantly? Because if a client installs the “wrong kernel”, the machine will hang or misbehave. Poor, poor technical support department. Still, the convenience of having the kernel installed inside the virtual machine won out.

The second important change was the move to LVM for system disks. This should greatly simplify partition management.

The OS templates were updated: OpenSUSE 12.1, CentOS 5.7 and Debian Wheezy are now available. A ClearOS template has been added (for those who want a web interface for their VPN server). More useful templates are in development.

Snapshots

The hardest task of all, and one we solved very successfully. I will write a separate article about snapshots and how they work; for now, here is a summary of their features:

* Snapshots are taken on the fly, without stopping the machine

* Snapshots can form either a chronological list or a "tree"

* Snapshots store only changed data (minimum size 8 MB)

* Snapshots are billed as disk space (as a separate line item) at the regular disk space price

* Disk snapshots can be attached read-only to existing machines without interrupting use of the “regular” disk
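Two of the rules above, “only changed data is stored” with an 8 MB minimum and billing at the regular disk price, combine into simple arithmetic. A Python sketch, assuming the billed size is simply the changed data but never less than 8 MB; `price_per_gb` is a hypothetical parameter standing in for the current disk tariff, and the tariff's time unit is left out.

```python
MIN_SNAPSHOT_MB = 8  # minimum snapshot size stated in the article


def snapshot_size_mb(changed_mb: float) -> float:
    """A snapshot stores only changed data, but never less than the minimum."""
    return max(changed_mb, MIN_SNAPSHOT_MB)


def snapshot_cost(changed_mb: float, price_per_gb: float) -> float:
    """Assumed billing: snapshot size charged at the regular per-GB disk price."""
    return snapshot_size_mb(changed_mb) / 1024 * price_per_gb


print(snapshot_size_mb(1))       # 8
print(snapshot_size_mb(300))     # 300
print(snapshot_cost(2048, 1.5))  # 3.0
```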

To make this work well and conveniently, we had to substantially rework the standard snapshots in Xen Cloud Platform, in particular adding support for tree-like relationships between snapshots and the ability to roll back freely between any snapshots.
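The difference between a chronological list and a tree comes down to what happens after a rollback. A minimal Python sketch of the bookkeeping, assuming each snapshot records its parent and the disk always derives from a “current” point (the `SnapshotTree` class and its methods are hypothetical; the copy-on-write disk chains that Xen Cloud Platform actually manages are not modeled): without rollbacks the snapshots form a simple chain, and taking a snapshot after rolling back to an older one starts a new branch.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)  # identity equality: nodes reference each other
class Snapshot:
    name: str
    parent: Optional[Snapshot]
    children: list[Snapshot] = field(default_factory=list, repr=False)


class SnapshotTree:
    def __init__(self) -> None:
        # "base" stands for the disk's initial state, not a real snapshot.
        self.root = Snapshot("base", None)
        self.by_name = {"base": self.root}
        self.current = self.root  # point the running disk derives from

    def take(self, name: str) -> Snapshot:
        snap = Snapshot(name, self.current)
        self.current.children.append(snap)
        self.by_name[name] = snap
        self.current = snap
        return snap

    def rollback(self, name: str) -> None:
        # Any snapshot can be a rollback target, not just the latest one.
        self.current = self.by_name[name]

    def lineage(self, name: str) -> list[str]:
        node = self.by_name[name]
        path = []
        while node is not None:
            path.append(node.name)
            node = node.parent
        return path[::-1]


tree = SnapshotTree()
tree.take("s1")
tree.take("s2")      # chain so far: base -> s1 -> s2
tree.rollback("s1")
tree.take("s3")      # new branch: s1 now has two children
print([c.name for c in tree.by_name["s1"].children])  # ['s2', 's3']
print(tree.lineage("s3"))                              # ['base', 's1', 's3']
```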

Control Panel

We “rolled out” the new control panel design shortly before suspending the service (bad timing, yes, but now everyone gets to see it). We also rewrote the statistics and significantly reduced the database load for some types of queries. Along the way, we quietly added export of consumption data to CSV.

A lot of time went into ergonomics. For example, fields that are often copied, such as passwords, IP addresses and similar data, are built so that a double click selects that value and only that value, without spilling over into neighboring text. All controls in the regular menus are static (that is, they do not jump around or change meaning depending on context). This does not apply to “dangerous operations”, where our main goal was not maximum comfort but making you stop and think “do I really want this?”. By the way, if some action clearly feels like “too many steps” to you, write to me (or open a ticket). We do not promise a fix, but I will at least think about how to implement it, and if we come up with something, we will do it.

The fate of the old pool

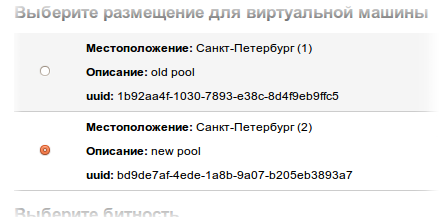

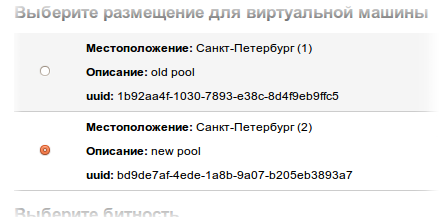

Because of the architectural changes, we could not implement the new features in the old pool; they are available in the new pool, where new virtual machines will be created.

For reference: a pool is a group of servers with a single administrative center (the pool master), a single configuration and shared storage. In the context of our cloud, it is the top-level unit of cloud segmentation. Pools do not interact with each other, although they are all controlled by a single API server.

We will continue to maintain the old pool for a long time (I won’t promise “forever”, but for a very long time, definitely). All new machines will be created in the new pool: that is where snapshots and “native kernels” work, and that is where the new templates are.
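The pool hierarchy described above (one API server on top, independent pools below, each with a master, a shared configuration and shared storage) can be sketched as a small Python data model. All names here, including the feature labels and the `accepts_new_vms` flag, are hypothetical; the sketch only encodes the rule stated in the text that new machines go to the new pool while the old one keeps serving existing machines.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Pool:
    name: str
    master: str         # the pool's single administrative center
    servers: list[str]  # hosts sharing configuration and storage
    features: set[str] = field(default_factory=set)
    accepts_new_vms: bool = False


def pool_for_new_vm(pools: list[Pool]) -> Pool:
    """The single API server places every new VM in a pool open for creation."""
    for pool in pools:
        if pool.accepts_new_vms:
            return pool
    raise LookupError("no pool accepts new machines")


old = Pool("old", "host-01", ["host-01", "host-02"])
new = Pool("new", "host-10", ["host-10", "host-11"],
           {"snapshots", "native-kernels", "new-templates"}, accepts_new_vms=True)
print(pool_for_new_vm([old, new]).name)  # new
```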

Source: https://habr.com/ru/post/135858/
