All the Trendiest Tech

After reading online about new, simple, fast and scalable technologies, I wanted to try them all. What if they turned out to be better than the familiar PostgreSQL + Django + JSON-RPC stack?

Since there was no idea, but there was a free domain uglyrater.org - I had to do a rating.

The essence is simple: there is a list of users who can be placed + and -. New users are added to the rating at the page of VKontakte.

Caution! The article contains many subjective assessments based on personal experience!

')

The architecture had to be intentionally complicated, otherwise everything interesting would not have been possible to try. Plus, the operation of obtaining user data on vk api is still quite long, and the idea of parallelizing it is not so bad. For this you need MQ, the choice fell on RabbitMQ . You may say AMQP “sucks”, ØMQ “our everything”, but with zeromq I have already gained enough, and in this context I’m not interested in it.

RabbitMQ was used through pika . It took a minimum of code to initialize and send / receive messages, it can be viewed in the worker and its tests . After writing, a small comparison with the already used ZerocIce and ØMQ to meet this or a similar task appeared:

And it so happened that for the current task RabbitMQ and ØMQ fit equally well, and ZerocIce is like a cannon on a sparrow.

Now the received and processed data must be stored somewhere. Now nosql is fashionable, so mongodb was chosen. For this task, there is no difference regarding postgresql with orm, everything is quick and convenient.

It is the turn of the web server. Now the fashion for asynchrony and push from the server to the client has gone , so tornado and tornadio2 were chosen , especially since they recently wrote about it on a habr. Then the problem immediately got out; it was impossible to get cookies from tornadio2. Therefore, I had to shake a crutch out of sending an id with a “secret” from a client through socketio, which made the implementation a little more difficult. After all this, a small comparison with django + json-rpc was obtained:

It's all ambiguous, but because of the push option with tornado still better.

For the client side, nothing special was thought out, so they simply used the coffee script , haml and sass , which saved a lot of code.

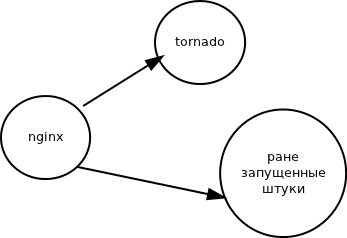

Without thinking for a long time, I decided to deploy everything on the server through nginx using the standard scheme:

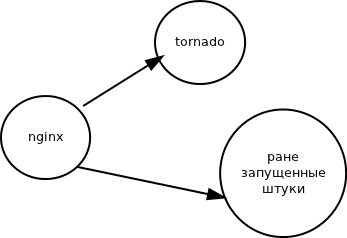

But it was not there. It turned out that nginx does not support web sockets and there were 3 solutions to the problem:

This, of course, everything is cool, convenient and fast, but there are still a lot of jambs. The main joint with the deployment, but the final solution is not so bad.

github with code.

In running form.

Project idea

I had no idea for a project, but I did have a free domain, uglyrater.org, so a rating site it had to be.

The essence is simple: there is a list of users, and each one can be given a + or a -. New users are added to the rating by the URL of their VKontakte page.

Caution! This article contains many subjective opinions based on personal experience!
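The article only says that new users are added by the URL of their VKontakte page. As a hypothetical illustration of that step, the sketch below extracts the screen name (or the `id123`-style numeric id) from such a URL using only the standard library. The accepted host names and the `vk_screen_name` helper are assumptions, not the author's code. The result is the kind of identifier the worker could then pass to the VK API, for example to its `users.get` method.

```python
from urllib.parse import urlparse

# Assumed set of VKontakte hosts; adjust as needed.
VK_HOSTS = {"vk.com", "www.vk.com", "m.vk.com", "vkontakte.ru", "www.vkontakte.ru"}


def vk_screen_name(page_url: str) -> str:
    """Extract the screen name or numeric id from a VKontakte profile URL (hypothetical helper)."""
    url = page_url.strip()
    parsed = urlparse(url)
    # Accept URLs typed without a scheme, e.g. "vk.com/durov".
    if not parsed.netloc:
        parsed = urlparse("https://" + url)
    if parsed.netloc.lower() not in VK_HOSTS:
        raise ValueError(f"not a VKontakte URL: {page_url!r}")
    # The first path segment is the profile: "durov", "id1", ...
    name = parsed.path.strip("/").split("/")[0]
    if not name:
        raise ValueError(f"no profile in URL: {page_url!r}")
    return name
```

For example, `vk_screen_name("vk.com/id1")` returns `"id1"`.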


Implementation

I deliberately overcomplicated the architecture, because otherwise I would not have been able to try out all the interesting pieces. Besides, fetching user data through the VK API takes quite a long time, so parallelizing it is not a bad idea. That calls for a message queue, and the choice fell on RabbitMQ. You may say that AMQP “sucks” and ØMQ is “our everything”, but I have already had my fill of ZeroMQ and was not interested in it here.

I used RabbitMQ through pika. Initializing it and sending and receiving messages took very little code. You can see it in the worker and its tests. Afterwards I put together a small comparison with ZeroC Ice and ØMQ, which I had already used for this or similar tasks:
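The parallelization idea can be illustrated with a producer and a pool of workers that drain a shared queue. In the article the queue is RabbitMQ and the workers are separate processes. The sketch below is only a single-process stand-in built on the standard library's `queue.Queue` and threads. `fetch_user` is a hypothetical placeholder for the slow VK API request.

```python
import queue
import threading


def fetch_user(user_id: str) -> dict:
    # Hypothetical placeholder for the slow VK API request.
    return {"id": user_id, "name": f"user {user_id}"}


def fetch_all(user_ids, workers: int = 4) -> dict:
    """Fetch users in parallel: one producer fills a queue, several workers drain it."""
    tasks: queue.Queue = queue.Queue()
    results = {}
    lock = threading.Lock()

    def worker() -> None:
        while True:
            user_id = tasks.get()
            if user_id is None:  # sentinel: this worker has no more work
                break
            data = fetch_user(user_id)
            with lock:
                results[user_id] = data

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for user_id in user_ids:
        tasks.put(user_id)
    for _ in threads:
        tasks.put(None)  # one sentinel per worker, queued after all real tasks
    for t in threads:
        t.join()
    return results
```

With a real broker, the producer (the web server) and the workers no longer share memory, and the queue also survives worker restarts. That is the main reason to use RabbitMQ or ØMQ rather than an in-process queue.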

| | RabbitMQ | ØMQ | ZeroC Ice |
|---|---|---|---|
| Pros | small amount of code; easy to work with | speed; passes native objects; no separate daemon needed | remote functions and objects; no sockets to deal with directly; signals/slots |
| Cons | requires running a daemon | requires writing a router; a lot of code | requires writing Slice definitions; requires running a daemon (IceBox); complexity |

As it turned out, RabbitMQ and ØMQ fit this task equally well, while ZeroC Ice would be like using a sledgehammer to crack a nut.

Next, the received and processed data has to be stored somewhere. NoSQL is in fashion, so I chose MongoDB. For this task it makes no difference compared with PostgreSQL plus an ORM: everything is quick and convenient.

Now it is the web server's turn. Asynchrony and server-to-client push are in fashion, so I chose Tornado and TornadIO2, especially since they had recently been written about on Habr. A problem surfaced right away: cookies could not be read from TornadIO2. So I had to cobble together a workaround in which the client sends its id together with a “secret” over Socket.IO, and this made the implementation somewhat more complicated. After all this, I ended up with a small comparison against Django + JSON-RPC:
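The worker itself is only linked from the article, so the sketch below is a hypothetical message format rather than the author's code. A task is a small JSON body, and the consumer callback has the `(channel, method, properties, body)` shape that pika 1.x passes to `on_message_callback`. The `"action"`/`"user_id"` schema is an assumption.

```python
import json


def encode_task(user_id: str) -> bytes:
    """Serialize a 'fetch this VK user' task as a message body (hypothetical schema)."""
    return json.dumps({"action": "fetch_user", "user_id": user_id}).encode("utf-8")


def on_message(channel, method, properties, body: bytes) -> str:
    """Consumer callback with the (channel, method, properties, body) signature pika 1.x uses."""
    task = json.loads(body.decode("utf-8"))
    if task.get("action") != "fetch_user":
        raise ValueError(f"unknown action: {task.get('action')!r}")
    user_id = task["user_id"]
    # A real worker would fetch the user here and then acknowledge the message,
    # e.g. channel.basic_ack(delivery_tag=method.delivery_tag).
    return user_id  # returned only to make the sketch testable; pika ignores it
```

With pika 1.x, the producer would publish with something like `channel.basic_publish(exchange="", routing_key="vk_users", body=encode_task("durov"))`. The worker would subscribe with `channel.basic_consume(queue="vk_users", on_message_callback=on_message)`. The queue name `vk_users` is made up for this example.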
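The article does not show its MongoDB schema. As a hypothetical sketch, each rated user could be one document, and a + or - vote becomes an atomic `$inc` on a `rating` field. The field names `_id` and `rating` are assumptions. The code only builds the filter and update documents, so it runs without a database.

```python
def vote_update(user_id: str, delta: int) -> tuple:
    """Return (filter, update) documents for a +1 / -1 vote (hypothetical schema)."""
    if delta not in (1, -1):
        raise ValueError("a vote must be +1 or -1")
    # MongoDB applies "$inc" atomically to a single document, so concurrent votes are not lost.
    return {"_id": user_id}, {"$inc": {"rating": delta}}


# Sort specification for the rating page: highest rating first.
RATING_ORDER = [("rating", -1)]
```

With a current PyMongo, these documents would be used as `users.update_one(*vote_update("durov", 1))` and `users.find().sort(RATING_ORDER)`, where `users` is an assumed collection.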

| | Tornado + TornadIO2 | Django + JSON-RPC |
|---|---|---|
| Pros | push from the server; lightweight; fast | simplicity; standardized protocol |
| Cons | complexity; awkward cookie handling | heavyweight; periodic requests to simulate push |
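The difference between push and the periodic requests in the Django column can be illustrated with a small broadcaster. In this sketch, each connected client is represented by an `asyncio.Queue`. A connection handler (in the article, a TornadIO2 connection) would `await` its queue and forward events as soon as they arrive, instead of the client asking repeatedly. The `Broadcaster` class and the event format are assumptions, written with plain `asyncio` rather than Tornado.

```python
import asyncio


class Broadcaster:
    """Fan out rating changes to every connected client (push instead of polling)."""

    def __init__(self) -> None:
        self._clients: set = set()

    def connect(self) -> asyncio.Queue:
        q: asyncio.Queue = asyncio.Queue()
        self._clients.add(q)
        return q

    def disconnect(self, q: asyncio.Queue) -> None:
        self._clients.discard(q)

    def publish(self, event: dict) -> None:
        # Deliver the event to every client's queue the moment it happens.
        for q in self._clients:
            q.put_nowait(event)


async def demo():
    hub = Broadcaster()
    a, b = hub.connect(), hub.connect()
    hub.publish({"user_id": "durov", "rating": 5})
    return await a.get(), await b.get()
```

Running `asyncio.run(demo())` returns the same event for both clients. With polling, each client would instead see the change only on its next request.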

It is all debatable, but thanks to push, Tornado still comes out ahead.

For the client side I did not invent anything special and simply used CoffeeScript, Haml and Sass, which saved a lot of code.
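The article does not say how the “secret” used in the cookie workaround is produced. One common approach, shown here only as an assumption, is an HMAC of the user id under a server-side key. The normal HTTP handler embeds the id and this secret in the page. The client sends both over Socket.IO, and the server recomputes and compares them. `SERVER_KEY` and both helper names are hypothetical.

```python
import hashlib
import hmac

SERVER_KEY = b"change-me"  # hypothetical server-side key, never sent to the client


def make_secret(user_id: str) -> str:
    """Secret rendered into the page for this user by the ordinary HTTP handler."""
    return hmac.new(SERVER_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def verify(user_id: str, secret: str) -> bool:
    """Check the id/secret pair the client sends over Socket.IO."""
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(make_secret(user_id), secret)
```

Because the secret depends on a key only the server knows, a client cannot forge a valid pair for another user's id.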

Deployment

Without thinking for long, I decided to deploy everything on the server behind nginx, using the standard scheme:

But that was not to be. It turned out that nginx did not support WebSockets at the time, and there were three ways around the problem:

- Install an unstable nginx build.

  There is a PPA for Ubuntu, and I installed nginx from it, but HTTP authentication broke and I could not fix it. Since the server also hosts a Mercurial repository behind that authentication, this option was quickly ruled out.

- Build nginx with the TCP proxy module.

  I found a simple guide and managed to build everything, and all the old projects started. But either I made a mistake somewhere or something else was wrong, because WebSockets did not work properly. Option ruled out.

- Use HAProxy.

  This gives a setup with some overhead, which is easier to show with a picture:

With it, everything started and worked well.
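The HAProxy setup boils down to a routing rule. WebSocket and Socket.IO traffic goes straight to Tornado, and everything else, including the sites behind HTTP authentication, still goes through nginx. In HAProxy this is typically expressed with ACLs on the `Upgrade` header (`hdr(Upgrade)`) or the path prefix (`path_beg`). The Python sketch below only illustrates that decision. The backend names and the `/socket.io/` prefix are assumptions, not the author's configuration.

```python
def choose_backend(path: str, headers: dict) -> str:
    """Decide which backend a request goes to (sketch of an HAProxy-style rule)."""
    # HTTP header names are case-insensitive, so normalise them first.
    h = {k.lower(): v for k, v in headers.items()}
    is_upgrade = h.get("upgrade", "").lower() == "websocket"
    connection_tokens = [t.strip().lower() for t in h.get("connection", "").split(",")]
    if (is_upgrade and "upgrade" in connection_tokens) or path.startswith("/socket.io/"):
        return "tornado"  # long-lived WebSocket / Socket.IO connections
    return "nginx"  # regular HTTP, including the authenticated Mercurial repository
```

Putting this decision in front of nginx means nginx never has to proxy WebSockets itself, at the cost of an extra hop for ordinary requests.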

Summary

All of this is, of course, cool, convenient and fast, but there are still plenty of rough edges. The main one is deployment, though the final solution turned out not so bad.

The code is on GitHub.

The running site.

Source: https://habr.com/ru/post/135650/
