Creating a 1k / 4k intro for Linux, part 1

“On the Russian scene, we surprise each other just by doing anything at all.” © manwe

(from SCRIMERS' status on demoscene.ru/forum)

Five minutes of meta: in this text, kittens, you will read about how to spend your time in a way that is completely inefficient in terms of the ratio of the size of the resulting product to the time and effort spent.

Suppose we want to make something like, say, an intro of up to 4 KB, but we are broke and have no money for Windows or for a video card whose shaders support branching. Or we just don't want to take the standard kit of apack / crinkler / sonant / 4klang / God-knows-what-else, make yet another "look, everyone! I can raymarch distance fields too!" and get lost among tens or hundreds of identical ones. Or we simply love showing off for no reason, in the hope that girls will finally pay attention to us.

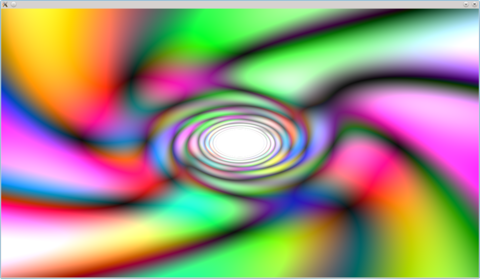

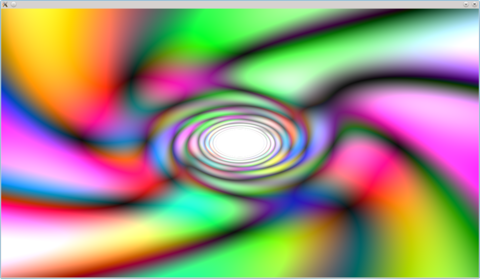


Anyway, it doesn't matter. Suppose we simply have some Linux box with a weak video card and our whole youth ahead of us. Let's use all of this to create an executable of no more than, say, 1024 bytes that, when launched, somehow manages to create and show the user something (like the screenshots above).


What can we use for this? First of all, we have X11 and OpenGL. Here's how they can be initialized with the least bloodshed:

- Directly via Xlib + GLX
  - +: guaranteed to be present on the system;
  - -: 11 calls just to bring up a GL context.
- GLUT
  - +: 4-5 functions for a GL context;
  - -: not available everywhere.
- SDL
  - +: 2 calls for a context; the de facto standard, available almost everywhere;
  - -: it may be missing somewhere, or compatibility with some future version could potentially break.
- Forget about libraries and do everything by hand
  - +: you can throw out dynamic linking entirely and, rumor has it (viznut?), initialize a GL context in about 300 bytes;
  - -: you can break everything for yourself while figuring it out.

The last option is undoubtedly the truest one, but it's still too complicated for me. So we'll go with the penultimate one, SDL.

Let's unsheathe gcc and get a feel for what we're dealing with. We'll start with the skeleton of our intro: initialize the OpenGL context, set the viewport and run a simple event loop that quits the application when any key is pressed:

```c
#include <SDL.h>
#include <GL/gl.h>

#define W 1280
#define H 720
#define FULLSCREEN 0 // or SDL_FULLSCREEN

int main(int argc, char** argv)
{
    SDL_Init(SDL_INIT_VIDEO);
    SDL_SetVideoMode(W, H, 32, SDL_OPENGL | FULLSCREEN);
    glViewport(0, 0, W, H);
    SDL_Event e;
    for (;;) {
        SDL_PollEvent(&e);
        if (e.type == SDL_KEYDOWN)
            break;
        // drawing will go here
        SDL_GL_SwapBuffers();
    }
    SDL_Quit();
    return 0;
}
```

An attentive reader may notice the following here:

- optimism that outshines the sun: a complete lack of error checks;
- no check for e.type == SDL_QUIT (the user closing the window), which will slightly annoy those who like to close applications by clicking the cross rather than pressing an arbitrary key.

Compile it:

(Note 1, for users of binary distributions: you need a compiler (gcc), the OpenGL headers (usually part of mesa) and the development package of SDL (libsdl-dev or something like that).)

(Note 2: the -m32 flag is needed only on 64-bit distributions, which will also need the 32-bit versions of the libraries.)

```sh
cc -m32 -o intro intro.c `pkg-config --libs --cflags sdl` -lGL
```

And we're horrified that such a simple program takes almost 7 KB!

We scratch our heads and realize that we have absolutely no use for crt and other bourgeois excesses, so we cut them out.

We need to:

- instead of main(), declare a _start() function with no arguments and no return value (you can give it another name, but why bother?);
- instead of simply returning from this function, invoke the exit system call ourselves (eax = 1, ebx = exit code).

```c
#include <SDL.h>
#include <GL/gl.h>

#define W 1280
#define H 720
#define FULLSCREEN 0 // or SDL_FULLSCREEN

void _start()
{
    SDL_Init(SDL_INIT_VIDEO);
    SDL_SetVideoMode(W, H, 32, SDL_OPENGL | FULLSCREEN);
    glViewport(0, 0, W, H);
    SDL_Event e;
    for (;;) {
        SDL_PollEvent(&e);
        if (e.type == SDL_KEYDOWN)
            break;
        // drawing will go here
        SDL_GL_SwapBuffers();
    }
    SDL_Quit();
    // exit system call: eax = 1 (exit on i386); ebx is not set,
    // so the exit code is whatever happens to be in it
    asm(
        "xor %eax,%eax\n"
        "inc %eax\n"
        "int $0x80\n"
    );
}
```

In addition, you have to pass -nostartfiles to gcc.
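The point of the asm snippet is that the process ends through the kernel's exit call directly, with no C runtime involved: no atexit handlers run and no stdio buffers get flushed. The sketch below shows the same idea in a form that runs on any Linux box. As an assumption made for portability, it uses glibc's syscall() wrapper with SYS_exit instead of a hand-written int $0x80 (on i386 this is the same eax = 1 request). It also runs the call in a forked child so the caller survives to read the exit code. raw_exit_status() is a hypothetical helper, not part of the intro.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sys/syscall.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: forks a child that leaves through the raw exit
   system call (no crt teardown, no atexit handlers, no stdio flush) and
   returns the child's exit code, or -1 on failure. */
static int raw_exit_status(int code)
{
    assert(code >= 0 && code < 256); /* only the low 8 bits reach the parent */
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        /* On i386 this is the same request as eax = 1, ebx = code, int $0x80. */
        syscall(SYS_exit, code);
        _exit(127); /* not reached */
    }
    int status;
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status);
}
```

Unlike the intro's asm, this version sets the exit code explicitly, so raw_exit_status(42) returns 42.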

And while we're at it, let's strip the binary right away:

```sh
cc -m32 -o intro intro.c `pkg-config --libs --cflags sdl` -lGL -Os -s -nostartfiles
```

We get ~4.7 KB, which is ~30% better, but still a lot.

The useful code in this program is still next to nothing, and almost all the space is taken up by various ELF headers. To cut the useless parts out of them, there is the sstrip utility from the ELFkickers suite (http://www.muppetlabs.com/~breadbox/software/elfkickers.html):

```sh
sstrip intro
```

This saves about 600 more bytes and brings the binary dangerously close to 4 KB. And it still doesn't do anything useful.
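To see the kind of overhead sstrip goes after, here is a hypothetical helper that reads an ELF header using the standard <elf.h> structures and reports how many bytes the section header table takes. The kernel's loader only needs the program headers, and the section header table is one of the things sstrip discards. The other part it trims, roughly the data lying outside the loaded segments, isn't measured here.

```c
#include <assert.h>
#include <elf.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: reads the ELF header of the file at path and stores
   the size in bytes of its section header table (e_shnum * e_shentsize).
   Returns 1 on success, 0 if the file can't be read or isn't ELF.
   Assumes the file's byte order matches the host's. */
static int section_table_bytes(const char *path, unsigned long *out)
{
    unsigned char buf[sizeof(Elf64_Ehdr)]; /* big enough for either class */
    FILE *f = fopen(path, "rb");
    if (!f)
        return 0;
    size_t n = fread(buf, 1, sizeof buf, f);
    fclose(f);
    if (n < EI_NIDENT || memcmp(buf, ELFMAG, SELFMAG) != 0)
        return 0;
    if (buf[EI_CLASS] == ELFCLASS32 && n >= sizeof(Elf32_Ehdr)) {
        Elf32_Ehdr h;
        memcpy(&h, buf, sizeof h);
        *out = (unsigned long)h.e_shnum * h.e_shentsize;
        return 1;
    }
    if (buf[EI_CLASS] == ELFCLASS64 && n >= sizeof(Elf64_Ehdr)) {
        Elf64_Ehdr h;
        memcpy(&h, buf, sizeof h);
        *out = (unsigned long)h.e_shnum * h.e_shentsize;
        return 1;
    }
    return 0;
}
```

Pointing it at an ordinary gcc-built binary, or at /proc/self/exe, gives a non-zero figure even after plain strip -s.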

Once again we get upset, open our binary in a hex editor and find zeros. Lots of them. And who is best at fighting zeros and other repetition?

That's right:

```sh
cat intro | 7z a dummy -tGZip -mx=9 -si -so > intro.gz
```

(Note 1, obvious: you need to install the p7zip package. Why p7zip and all this complexity? Because (a) gzip and the others compress a few percent worse, and (b) we need to get rid of the gzip header's extra metadata, such as the file name.)

(Note 2: why gzip at all and not, say, bzip2? Because empirically p7zip -tGZip gave the best result of everything I tried, and gzip unpackers are available more or less everywhere.)
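A gzip file starts with a header defined in RFC 1952. It has a fixed 10 bytes, followed by optional fields that flag bits switch on, among them the original file name (FNAME). Every one of those bytes is dead weight in an intro. The hypothetical gzip_header_len() below is a sketch that computes where the raw deflate stream begins, so you can see what a given archiver put in front of it.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* FLG bits from RFC 1952 (FTEXT = 0x01 carries no extra bytes). */
enum { GZ_FHCRC = 0x02, GZ_FEXTRA = 0x04, GZ_FNAME = 0x08, GZ_FCOMMENT = 0x10 };

/* Returns the position right after a zero-terminated string starting at
   pos, or 0 if there is no terminator before len. */
static size_t skip_zstring(const unsigned char *buf, size_t len, size_t pos)
{
    const unsigned char *end;
    if (pos >= len)
        return 0;
    end = memchr(buf + pos, 0, len - pos);
    return end ? (size_t)(end - buf) + 1 : 0;
}

/* Hypothetical helper: length of the gzip member header at buf, i.e. the
   offset of the deflate stream, or 0 if the header is malformed. */
static size_t gzip_header_len(const unsigned char *buf, size_t len)
{
    size_t pos = 10; /* ID1 ID2 CM FLG MTIME[4] XFL OS */
    unsigned flg;
    if (len < pos || buf[0] != 0x1f || buf[1] != 0x8b || buf[2] != 8)
        return 0;
    flg = buf[3];
    if (flg & GZ_FEXTRA) { /* XLEN (little-endian) + XLEN bytes */
        if (len < pos + 2)
            return 0;
        pos += 2 + (buf[pos] | (size_t)buf[pos + 1] << 8);
    }
    if ((flg & GZ_FNAME) && !(pos = skip_zstring(buf, len, pos)))
        return 0; /* original file name */
    if ((flg & GZ_FCOMMENT) && !(pos = skip_zstring(buf, len, pos)))
        return 0; /* free-form comment */
    if (flg & GZ_FHCRC)
        pos += 2; /* CRC16 of the header */
    return pos <= len ? pos : 0;
}
```

For example, a header that stores the name "intro" takes 16 bytes, against 10 for a bare one.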

intro.gz is already about 650 bytes! All that's left is to make it runnable. Create an unpack_header file with the following content:

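```sh
T=/tmp/i;tail -n+2 $0|zcat>$T;chmod +x $T;$T;rm $T;exit
```

The line must end with a newline: tail -n+2 prints everything from the second line onward, and that's exactly where the compressed data will start. Now glue this header onto our archive: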

```sh
cat unpack_header intro.gz > intro.sh
```

Let's make the file executable and see what we've got:

```sh
chmod +x intro.sh
./intro.sh
```

Make sure these 700-odd bytes really do create an empty window.

What we've just done is called gzip-dropping. Its roots go back to cab-dropping, a method popular in its day (before crinkler and friends) that did exactly the same thing, only under Windows.
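The whole trick rests on the layout of intro.sh: one line of shell, a newline, then raw gzip bytes. The file has no #! line, so execve fails with ENOEXEC and a POSIX shell falls back to running the file as a script itself. tail -n+2 $0 hands everything after the first line to zcat, and the final exit stops the shell before it tries to parse the binary tail. The hypothetical payload_offset() below performs the same split in C.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper mimicking `tail -n+2`: returns the offset of the
   first byte after the first newline (where the gzip stream starts in
   intro.sh), or len if the buffer has no newline at all. */
static size_t payload_offset(const unsigned char *buf, size_t len)
{
    const unsigned char *nl = memchr(buf, '\n', len);
    return nl ? (size_t)(nl - buf) + 1 : len;
}
```

Applied to intro.sh, the returned offset is just the length of the header line, and the byte found there is 0x1f, the first byte of the gzip magic number.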

Let's see how much we can cram into the remaining 300 bytes. Traditionally, we do the following: draw a full-screen glRect, colored by special shaders of the form color = f(x, y):

```c
#define GL_GLEXT_PROTOTYPES // make gl.h declare glCreateShader & co. (OpenGL 2.0)
#include <SDL.h>
#include <GL/gl.h>

#define W 1280
#define H 720
#define FULLSCREEN 0 // or SDL_FULLSCREEN

const char* shader_vtx[] = {
    "varying vec4 p;"
    "void main(){gl_Position=p=gl_Vertex;}"
};
const char* shader_frg[] = {
    "varying vec4 p;"
    "void main(){"
        "gl_FragColor=p;"
    "}"
};

// compile a shader from src and attach it to program p
void shader(const char** src, int type, int p)
{
    int s = glCreateShader(type);
    glShaderSource(s, 1, src, 0);
    glCompileShader(s);
    glAttachShader(p, s);
}

void _start()
{
    SDL_Init(SDL_INIT_VIDEO);
    SDL_SetVideoMode(W, H, 32, SDL_OPENGL | FULLSCREEN);
    glViewport(0, 0, W, H);
    int p = glCreateProgram();
    shader(shader_vtx, GL_VERTEX_SHADER, p);
    shader(shader_frg, GL_FRAGMENT_SHADER, p);
    glLinkProgram(p);
    glUseProgram(p);
    SDL_Event e;
    for (;;) {
        SDL_PollEvent(&e);
        if (e.type == SDL_KEYDOWN)
            break;
        glRecti(-1, -1, 1, 1); // full-screen rectangle
        SDL_GL_SwapBuffers();
    }
    SDL_Quit();
    asm(
        "xor %eax,%eax\n"
        "inc %eax\n"
        "int $0x80\n"
    );
}
```

The code is fairly transparent, except perhaps for varying vec4 p. It carries the normalized screen coordinates from the vertex shader to the fragment shader, which makes us independent of the window's physical size. (The GL_GLEXT_PROTOTYPES define makes gl.h declare the OpenGL 2.0 shader functions, which it otherwise leaves undeclared.)
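What ends up in p for a given pixel is determined by the viewport transform. glViewport(0, 0, W, H) maps x in [-1, 1] onto [0, W], and fragment centers sit at half-integer window coordinates. So the pixel in column x receives p.x = 2(x + 0.5)/W - 1, and likewise for rows counted from the bottom. The hypothetical pixel_to_ndc() below evaluates that mapping on the CPU, which shows why the shader never needs to know W and H.

```c
#include <assert.h>

/* Hypothetical helper: the normalized device coordinate interpolated for
   the center of pixel column x (or row y, counted from the bottom) in a
   viewport that is `size` pixels wide (or high), as set by glViewport. */
static double pixel_to_ndc(int x, int size)
{
    assert(size > 0);
    return 2.0 * (x + 0.5) / size - 1.0;
}
```

For a 1280-pixel-wide window, columns 0..639 land on the negative side and 640..1279 on the positive side. The shader only ever sees values in (-1, 1).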

So, let's try to build it:

```sh
cc -m32 -o intro intro.c `pkg-config --libs --cflags sdl` -lGL -Os -s -nostartfiles && \
sstrip intro && \
cat intro | 7z a dummy -tGZip -mx=9 -si -so > intro.gz && \
cat unpack_header intro.gz > intro.sh && chmod +x intro.sh && \
wc -c intro.sh && \
./intro.sh
```

We find that this trifle has already cost us an extra 50 bytes and pushed us past the permitted kilobyte. "You'll dance with me yet, oh, you'll dance!" we think, and pull our last trick out of our sleeve: loading all the required functions from the dynamic libraries by hand:

```c
#include <dlfcn.h>
#include <SDL.h>
#include <GL/gl.h>

#define W 1280
#define H 720
#define FULLSCREEN 0 // or SDL_FULLSCREEN

const char* shader_vtx[] = {
    "varying vec4 p;"
    "void main(){gl_Position=p=gl_Vertex;}"
};
const char* shader_frg[] = {
    "varying vec4 p;"
    "void main(){"
        "gl_FragColor=p;"
    "}"
};

// library name, its functions, an empty string, the next library, ...;
// an extra empty string ends the table
const char dl_nm[] =
    "libSDL-1.2.so.0\0"
        "SDL_Init\0"
        "SDL_SetVideoMode\0"
        "SDL_PollEvent\0"
        "SDL_GL_SwapBuffers\0"
        "SDL_Quit\0"
    "\0"
    "libGL.so.1\0"
        "glViewport\0"
        "glCreateProgram\0"
        "glLinkProgram\0"
        "glUseProgram\0"
        "glCreateShader\0"
        "glShaderSource\0"
        "glCompileShader\0"
        "glAttachShader\0"
        "glRecti\0\0\0";

// typed views of the loaded pointers, in the same order as in dl_nm:
#define _SDL_Init           ((void(*)(int))dl_ptr[0])
#define _SDL_SetVideoMode   ((void(*)(int,int,int,int))dl_ptr[1])
#define _SDL_PollEvent      ((void(*)(void*))dl_ptr[2])
#define _SDL_GL_SwapBuffers ((void(*)())dl_ptr[3])
#define _SDL_Quit           ((void(*)())dl_ptr[4])
#define _glViewport         ((void(*)(int,int,int,int))dl_ptr[5])
#define _glCreateProgram    ((int(*)())dl_ptr[6])
#define _glLinkProgram      ((void(*)(int))dl_ptr[7])
#define _glUseProgram       ((void(*)(int))dl_ptr[8])
#define _glCreateShader     ((int(*)(int))dl_ptr[9])
#define _glShaderSource     ((void(*)(int,int,const char**,int))dl_ptr[10])
#define _glCompileShader    ((void(*)(int))dl_ptr[11])
#define _glAttachShader     ((void(*)(int,int))dl_ptr[12])
#define _glRecti            ((void(*)(int,int,int,int))dl_ptr[13])

void* dl_ptr[14]; // filled in by dl()

void dl()
{
    const char* pn = dl_nm;
    void** pp = dl_ptr;
    for (;;) // one iteration per library
    {
        void* f = dlopen(pn, RTLD_LAZY);
        for (;;) // one iteration per function of this library
        {
            while (*(pn++) != 0); // step past the current name
            if (*pn == 0) break;  // empty name: end of this library's list
            *pp++ = dlsym(f, pn);
        }
        if (*++pn == 0) break;    // second empty name: end of the table
    }
}

void shader(const char** src, int type, int p)
{
    int s = _glCreateShader(type);
    _glShaderSource(s, 1, src, 0);
    _glCompileShader(s);
    _glAttachShader(p, s);
}

void _start()
{
    dl();
    _SDL_Init(SDL_INIT_VIDEO);
    _SDL_SetVideoMode(W, H, 32, SDL_OPENGL | FULLSCREEN);
    _glViewport(0, 0, W, H);
    int p = _glCreateProgram();
    shader(shader_vtx, GL_VERTEX_SHADER, p);
    shader(shader_frg, GL_FRAGMENT_SHADER, p);
    _glLinkProgram(p);
    _glUseProgram(p);
    SDL_Event e;
    for (;;) {
        _SDL_PollEvent(&e);
        if (e.type == SDL_KEYDOWN)
            break;
        _glRecti(-1, -1, 1, 1);
        _SDL_GL_SwapBuffers();
    }
    _SDL_Quit();
    asm(
        "xor %eax,%eax\n"
        "inc %eax\n"
        "int $0x80\n"
    );
}
```

Wow! Finally, it's getting more or less meaty and convoluted!

Here's what's going on. We store the libraries we need, and the functions from them, in a single string with zero separators, instead of keeping all this data in the very loose and poorly compressible structures of the ELF header. The functions named in the string are loaded into a plain array of pointers, and the macros call them through it at the right moments. Note that, within reasonable limits, the exact parameter types don't matter: on 32-bit x86 each int or pointer argument occupies 4 bytes on the stack anyway. (Floats are another matter: their bit pattern differs from an int's, so they still need a correct prototype.) The return value can be ignored altogether when it isn't needed: by convention eax is caller-saved, so the caller never expects it to survive a call. And there's certainly no need to check for errors: only losers and wimps check for errors.
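To make sure the walk in dl() visits exactly what we expect, the same loop can run on the CPU without SDL or GL. In this sketch, hypothetical recording stubs (fake_dlopen, fake_dlsym) replace dlopen() and dlsym(), and a shortened table with the same layout replaces dl_nm. The loop body itself is unchanged.

```c
#include <assert.h>
#include <string.h>

/* Same layout as dl_nm, shortened: library, its functions, "", next
   library, its functions, and a final extra "" that ends the table. */
static const char demo_nm[] =
    "libSDL-1.2.so.0\0" "SDL_Init\0" "SDL_Quit\0" "\0"
    "libGL.so.1\0" "glViewport\0" "glRecti\0\0\0";

/* Hypothetical stand-ins for dlopen()/dlsym(): they only record the names
   they are asked for and return the name's address as a fake handle. */
static const char *opened[4], *resolved[8];
static int n_opened, n_resolved;

static void *fake_dlopen(const char *lib)
{
    assert(n_opened < 4);
    opened[n_opened++] = lib;
    return (void *)lib;
}

static void *fake_dlsym(void *handle, const char *fn)
{
    (void)handle;
    assert(n_resolved < 8);
    resolved[n_resolved++] = fn;
    return (void *)fn;
}

/* The loop from dl(), with only the two loader calls swapped. */
static void walk(const char *pn, void **pp)
{
    for (;;) {                      /* one iteration per library */
        void *f = fake_dlopen(pn);
        for (;;) {                  /* one iteration per function */
            while (*pn++ != 0)      /* step past the current name */
                ;
            if (*pn == 0)           /* empty name: end of this library */
                break;
            *pp++ = fake_dlsym(f, pn);
        }
        if (*++pn == 0)             /* another empty name: end of table */
            break;
    }
}
```

After walk(demo_nm, ptrs), the stubs have seen two libraries and four functions in table order. ptrs holds the results in the same order the macros index them.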

In the build command we strip the binary completely bare. It is left depending only on ld-linux.so (the interpreter that makes dynamic linking possible at all) and libdl.so, where dlopen and dlsym live. If your system keeps the 32-bit ld-linux.so.2 somewhere other than /lib32, adjust the -dynamic-linker path:

```sh
cc -Wall -m32 -c intro.c `pkg-config --cflags sdl` -Os -nostartfiles && \
ld -melf_i386 -dynamic-linker /lib32/ld-linux.so.2 -ldl intro.o -o intro && \
sstrip intro && \
cat intro | 7z a dummy -tGZip -mx=9 -si -so > intro.gz && \
cat unpack_header intro.gz > intro.sh && chmod +x intro.sh && \
wc -c intro.sh && \
./intro.sh
```

899 bytes! That leaves a whole fat hundred-odd bytes for Creativity™!

What can fit in there? For example, the old tunnel effect everyone is already sick of (yes, the one from the screenshot above).

First we need to get the time into the shaders. We won't do it like nerds, via glUniform; we'll take the simple route, like street kids:

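```c
float t = _SDL_GetTicks() / 1000. + 1.;
_glRectf(-t, -t, t, t);
```

Here _SDL_GetTicks and _glRectf are new entries in the dl_nm table (glRectf replaces glRecti). The rectangle still covers the whole screen, since t ≥ 1 and everything outside [-1, 1] is clipped away, but its corners now encode the time. So that the time isn't lost to interpolation, we need a little help from the vertex shader: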

```c
const char* shader_vtx[] = {
    "varying vec4 p;"
    "void main(){gl_Position=p=gl_Vertex;p.z=length(p.xy);}"
};
```

That's it: the p.z component now holds the current time (more precisely t·√2, since every corner lies at (±t, ±t)). All that's left is to attach a tunnel to it.
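Why the value survives: all four corners of glRectf(-t, -t, t, t) lie at the same distance from the origin, and interpolating between equal values can only return that value. The sketch below checks this on the CPU. To stay clear of libm it works with the squared length (the shader's length() is just its square root). It also uses a bilinear blend as a stand-in for the rasterizer's per-triangle interpolation, which is likewise a weighted average whose weights sum to 1. Both function names are hypothetical.

```c
#include <assert.h>

/* Hypothetical helper: squared length of corner i (0..3) of the
   rectangle (-t, -t)..(t, t), i.e. length(p.xy)^2 at that vertex. */
static double corner_len2(double t, int i)
{
    double x = (i & 1) ? t : -t;
    double y = (i & 2) ? t : -t;
    return x * x + y * y;
}

/* Hypothetical helper: bilinear blend of the four corner values at
   (u, v) in [0, 1]^2, a weighted average like the interpolation the
   rasterizer applies to a varying. */
static double blend_len2(double t, double u, double v)
{
    return (1 - u) * (1 - v) * corner_len2(t, 0) + u * (1 - v) * corner_len2(t, 1)
         + (1 - u) * v * corner_len2(t, 2) + u * v * corner_len2(t, 3);
}
```

Wherever you sample inside the quad, you get 2t² back (up to rounding), so every fragment sees the same p.z.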

The tunnel is made simply from the angle a = atan(p.x, p.y) and perspective done the rustic way, z = 1./length(p.xy); then all that's left is to generate some texture color = f(a, z, t). Time can, of course, also be mixed into the coordinates themselves. And in general, you can do anything at all. For example, quit reading this nonsense and go for a walk: the weather is wonderfully clear, there are big clean snowdrifts, and a pine forest is two steps from home!

All in all, by the method of scientific iteration, we arrive at this:

```c
#include <dlfcn.h>
#include <SDL.h>
#include <GL/gl.h>

#define W 1280
#define H 720
#define FULLSCREEN 0 // or SDL_FULLSCREEN

const char* shader_vtx[] = {
    "varying vec4 p;"
    "void main(){gl_Position=p=gl_Vertex;p.z=length(p.xy);}"
};
const char* shader_frg[] = {
    "varying vec4 p;"
    "void main(){"
        "float "
        "z=1./length(p.xy),"
        "a=atan(p.x,p.y)+sin(p.z+z);"
        "gl_FragColor="
        "2.*abs(.2*sin(p.z*3.+z*3.)+sin(p.z+a*4.)*p.xyxx*sin(vec4(z,a,a,a)))+(z-1.)*.1;"
    "}"
};

// library name, its functions, an empty string, the next library, ...;
// an extra empty string ends the table
const char dl_nm[] =
    "libSDL-1.2.so.0\0"
        "SDL_Init\0"
        "SDL_SetVideoMode\0"
        "SDL_PollEvent\0"
        "SDL_GL_SwapBuffers\0"
        "SDL_GetTicks\0"
        "SDL_Quit\0"
    "\0"
    "libGL.so.1\0"
        "glViewport\0"
        "glCreateProgram\0"
        "glLinkProgram\0"
        "glUseProgram\0"
        "glCreateShader\0"
        "glShaderSource\0"
        "glCompileShader\0"
        "glAttachShader\0"
        "glRectf\0\0\0";

// typed views of the loaded pointers, in the same order as in dl_nm:
#define _SDL_Init           ((void(*)(int))dl_ptr[0])
#define _SDL_SetVideoMode   ((void(*)(int,int,int,int))dl_ptr[1])
#define _SDL_PollEvent      ((void(*)(void*))dl_ptr[2])
#define _SDL_GL_SwapBuffers ((void(*)())dl_ptr[3])
#define _SDL_GetTicks       ((unsigned(*)())dl_ptr[4])
#define _SDL_Quit           ((void(*)())dl_ptr[5])
#define _glViewport         ((void(*)(int,int,int,int))dl_ptr[6])
#define _glCreateProgram    ((int(*)())dl_ptr[7])
#define _glLinkProgram      ((void(*)(int))dl_ptr[8])
#define _glUseProgram       ((void(*)(int))dl_ptr[9])
#define _glCreateShader     ((int(*)(int))dl_ptr[10])
#define _glShaderSource     ((void(*)(int,int,const char**,int))dl_ptr[11])
#define _glCompileShader    ((void(*)(int))dl_ptr[12])
#define _glAttachShader     ((void(*)(int,int))dl_ptr[13])
#define _glRectf            ((void(*)(float,float,float,float))dl_ptr[14])

static void* dl_ptr[15]; // filled in by dl()

static void dl() __attribute__((always_inline));
static void dl()
{
    const char* pn = dl_nm;
    void** pp = dl_ptr;
    for (;;) // one iteration per library
    {
        void* f = dlopen(pn, RTLD_LAZY);
        for (;;) // one iteration per function of this library
        {
            while (*(pn++) != 0); // step past the current name
            if (*pn == 0) break;  // empty name: end of this library's list
            *pp++ = dlsym(f, pn);
        }
        if (*++pn == 0) break;    // second empty name: end of the table
    }
}

static void shader(const char** src, int type, int p) __attribute__((always_inline));
static void shader(const char** src, int type, int p)
{
    int s = _glCreateShader(type);
    _glShaderSource(s, 1, src, 0);
    _glCompileShader(s);
    _glAttachShader(p, s);
}

void _start()
{
    dl();
    _SDL_Init(SDL_INIT_VIDEO);
    _SDL_SetVideoMode(W, H, 32, SDL_OPENGL | FULLSCREEN);
    _glViewport(0, 0, W, H);
    int p = _glCreateProgram();
    shader(shader_vtx, GL_VERTEX_SHADER, p);
    shader(shader_frg, GL_FRAGMENT_SHADER, p);
    _glLinkProgram(p);
    _glUseProgram(p);
    SDL_Event e;
    for (;;) {
        _SDL_PollEvent(&e);
        if (e.type == SDL_KEYDOWN)
            break;
        float t = _SDL_GetTicks() / 400. + 1.; // time, smuggled in via the rect size
        _glRectf(-t, -t, t, t);
        _SDL_GL_SwapBuffers();
    }
    _SDL_Quit();
    asm(
        "xor %eax,%eax\n"
        "inc %eax\n"
        "int $0x80\n"
    );
}
```

With my gcc-4.5.3, this one builds into exactly 1024 packed bytes.

Okay, we think, it's hardly possible to do anything better or more elaborate here. Almost pleased with ourselves, we go and look at what the other guys in this category are doing...

And we sink into despondency.

There's nothing left to do but dive into assembly.

But more on that next time.

Source: https://habr.com/ru/post/134551/
