A fault-tolerant Squid-based proxy server in a Windows domain

UPD: the difference in memory consumption between my configuration and the one used by the authors of the negative comments arises because, in addition to the standard wbinfo_group.pl script, which determines a domain user's group, my Squid setup also uses a script that determines the user's login itself, so that Squid can grant a user rights different from those granted to his group.

Once on a rainy gray evening I had the need to implement a proxy server, but not simple, but one that would have the following functionality:

Once on a rainy gray evening I had the need to implement a proxy server, but not simple, but one that would have the following functionality:

Naturally, my opinion fell on Squid with authorization using the ntlmssp protocol. Squid's configuration utility was found via the web interface - SAMS. But somehow I didn’t grow together with him: either the php version had to be rolled back from 5.3 to 5.2, he didn’t cling to the domain, he didn’t see any users. In no case do I want to say that this is a bad product, my friend turned it around and set it up (so that the source is reliable), just the location of the stars did not allow me to become a follower of SAMS.

Having suffered a lot from Google, various products and solutions, I thought: “the essence of the configuration is to generate the squid.conf file, I used to write in php, and why not implement the web interface myself?” It is said - done. Soon the bags ran, the users screamed, they brought me office notes to open access to Internet resources.

It would seem, what else do you need from life? But no, after some time, several shortcomings in the solution infrastructure have surfaced.

Accordingly, there were new Wishlist.

The starting point for a solution is the ability to load balance in iptables.

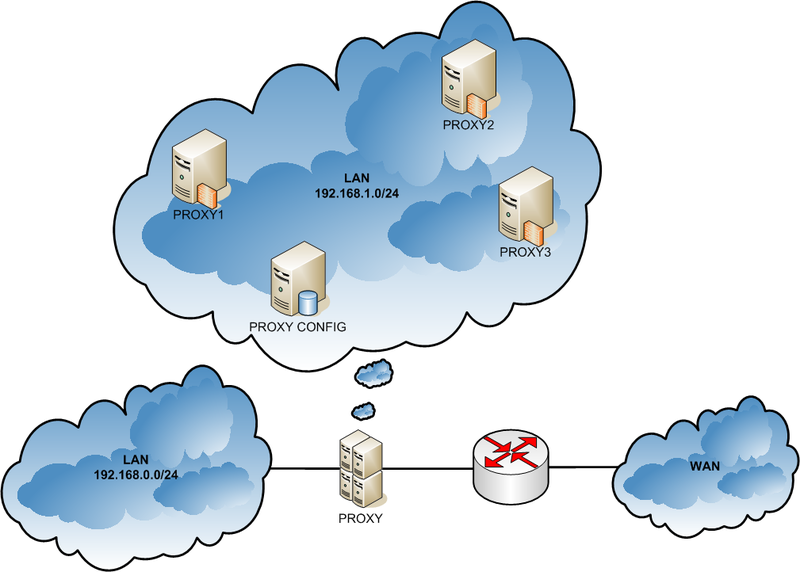

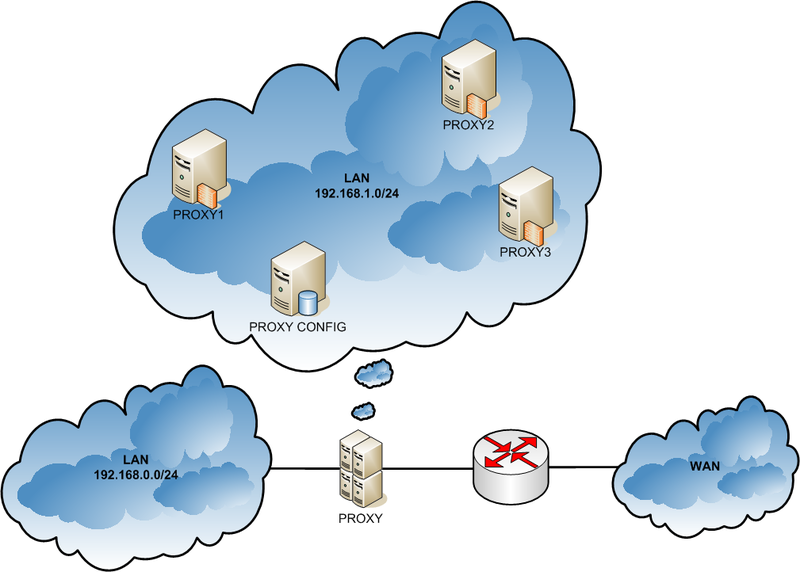

The code of this listing allows you to evenly distribute the load between the three proxy servers. Now we need three proxy servers that will be hidden behind a single IP address. My solution is shown in the figure below.

Legend:

In this case, the “proxy server” that the user sees is actually a virtual machine hypervisor, on which the required number of proxy servers and their management interface are deployed.

With this structure, you can easily provide:

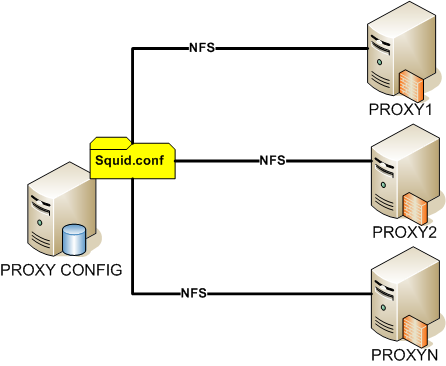

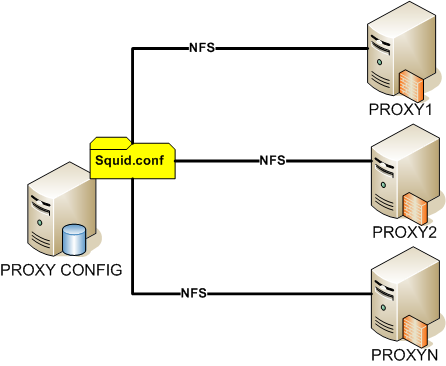

Squid's configuration is the same for all proxy servers, so the squid.conf file is stored on the PROXY_CONF computer in the nfs ball.

To implement this scheme, the following hardware and software components were used:

To automatically start and stop virtual machines when the computer was turned on and off, the vmstart and vmstop scripts were written that alternately turn on and off all virtual machines. I will not give their content, since it is just using the VBoxHeadless and VBoxManage commands in conjunction with ping to check the status of the machine.

Thus, several problems were solved:

Time showed that in case of failures, it would be good if the proxy server was able to determine if everything was normal with the connection to the domain controller, and otherwise applied the configuration independent of the controller, and when restoring the controller it returned to the functionality for which all this is conceived.

Talk less, work more!

Notes:

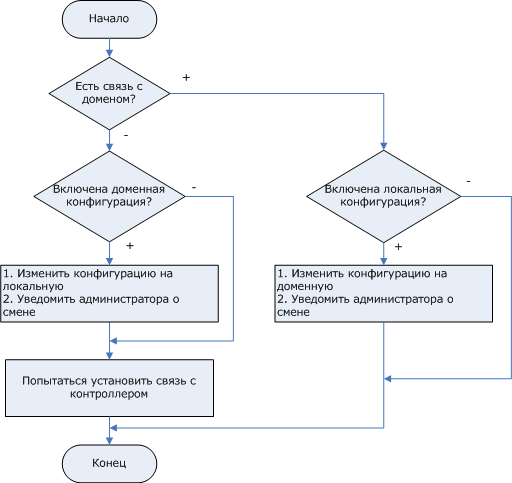

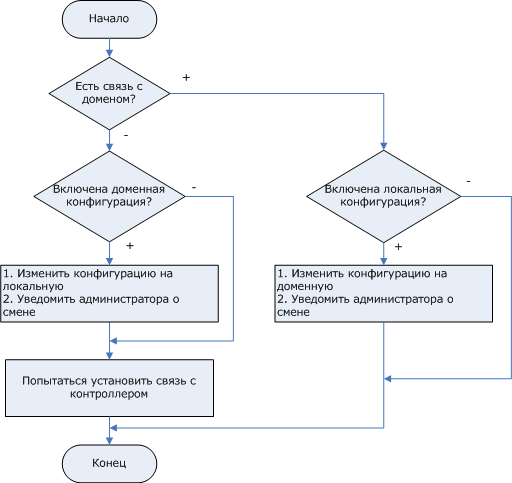

The script logic is shown in the figure below.

In order not to synchronize the script in the future on all proxy servers, we drop it into the network balloon on the PROXY_CONFIG machine, mount it on all proxies, and add the script to cron for every minute. Thus, if the connection with the domain is lost, the idle time will be reduced to one minute, that is, the evil accountants will not have time to call or will have time, but will almost immediately rejoice.

Well, that's all. Everything works, there are no problems. I would like to immediately answer the frequently asked questions about the described configuration.

Once, on a gray rainy evening, I needed to set up a proxy server, and not just a simple one, but one with the following functionality:

- granting or restricting access depending on an account's membership in a particular Active Directory group;

- granting or restricting access depending on the rights granted to the individual account (for example, so that a whole group can be allowed to use search engines while one crafty member of that group is denied one specific search engine);

- unrestricted access for "VIP" MAC addresses;

- access to a minimal set of resources for all users (including non-domain ones);

- as few steps as possible for a user to start working through the proxy server;

- administration of the proxy server via a web interface;

- minimal total cost of ownership.

Naturally, my choice fell on Squid with authentication over the NTLMSSP protocol. I found a web-based configuration tool for Squid, SAMS. But somehow we did not get along: either the PHP version had to be rolled back from 5.3 to 5.2, or it would not join the domain, or it did not see any users. By no means do I want to say that it is a bad product: a friend of mine deployed and configured it (so the source is reliable); the stars simply did not align for me to become a follower of SAMS.

Having tormented Google and tried various products and solutions, I thought: “the essence of the configuration is generating the squid.conf file, I used to write in PHP, so why not implement the web interface myself?” No sooner said than done. Soon the bugs scattered, the users raised a clamor, and memos requesting access to Internet resources started arriving.
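To give an idea of what "generating squid.conf" can mean in practice, here is a minimal sketch in bash (the interface described here was written in PHP, and its code is not shown in this article). The directives are standard Squid ones, but the helper path, the group name `Search`, the user `jsmith`, the domain and the list file are all made up for illustration, and depending on the winbind settings the login may have to be written with a domain prefix.

```shell
#!/bin/bash
# Sketch: emit squid.conf lines for group-based access.
# All names and paths below are hypothetical examples.

HELPER=/usr/lib/squid/wbinfo_group.pl   # assumed location; differs between distributions

# Print the one-time header: NTLM authentication plus the AD group lookup helper.
render_header() {
    cat <<EOF
auth_param ntlm program /usr/bin/ntlm_auth --helper-protocol=squid-2.5-ntlmssp
auth_param ntlm children 200
external_acl_type ad_group %LOGIN $HELPER
EOF
}

# render_group_rule GROUP LISTFILE: members of GROUP may reach the domains in LISTFILE.
render_group_rule() {
    local group=$1 list=$2
    echo "acl grp_$group external ad_group $group"
    echo "acl dst_$group dstdomain \"$list\""
    echo "http_access allow grp_$group dst_$group"
}

# render_user_deny USER DOMAIN: one user is denied one domain despite group rights.
render_user_deny() {
    local user=$1 domain=$2
    echo "acl usr_$user proxy_auth $user"
    echo "acl dom_$user dstdomain $domain"
    echo "http_access deny usr_$user dom_$user"
}

generate() {
    render_header
    # Squid applies the first matching http_access rule, so per-user denials go first.
    render_user_deny jsmith .example.com
    render_group_rule Search /etc/squid/lists/search.txt
    echo "http_access deny all"
}

generate
```

The order of the `http_access` lines is the whole trick: the per-user denial has to precede the group permission, otherwise the group rule would match first.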

It would seem, what more could one want from life? But no: after a while, several shortcomings in the infrastructure of the solution surfaced.

- If the domain controller is down or the connection to it is lost, the Internet, which serves as the backup channel of communication with the outside world (the main one being corporate mail), stops working, because users cannot log in.

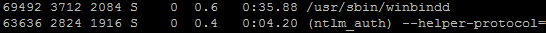

- The user logs in to the proxy over the NTLMSSP protocol by means of the ntlm_auth helper, one instance of which takes up about 60 megabytes of RAM.

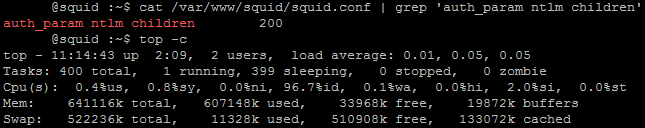

As you can see, the winbindd process is also involved in authorization and eats up just as much memory. In total, that is 120 megabytes per request. It was found experimentally that the auth_param ntlm children parameter, which sets the number of authorization helper processes, should exceed the number of users by about 10%. With auth_param ntlm children = 200, 600 megabytes of memory are occupied on the proxy server.

It was also found experimentally that if 150 users with mail agents and other junk work through the proxy at the same time, the server may behave unpredictably: hang, lose contact with the domain controller, and so on. Again, perhaps this is my own clumsiness, but it is what pushed me to dig into the topic of this opus.

- The processor's resources are practically unused, while a huge amount of RAM is reserved.
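The rule of thumb for auth_param ntlm children (about 10% more helpers than users) can be written as a one-line calculation. This is only a sketch: the function name is made up here, and rounding up is an assumption on my part.

```shell
#!/bin/bash
# ntlm_children USERS: number of helpers when the pool should exceed
# the number of users by about 10% (rounded up).
ntlm_children() {
    local users=$1
    echo $(( (users * 11 + 9) / 10 ))   # integer form of ceil(users * 1.1)
}

ntlm_children 150   # prints 165
```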

Accordingly, a new wishlist emerged.

- Reduce the load on the proxy server and make it possible to add proxy servers to the pool with a flick of the wrist.

- If authorization problems arise, switch to the non-domain configuration, and when they go away, switch back to the domain configuration. Along the way, notify the administrator when problems appear and disappear.

Reducing the load on the proxy server + scalability

The starting point for the solution is the ability of iptables to balance load.

    # iptables -t nat -A PREROUTING --dst $GW_IP -p tcp --dport 3128 -m state --state NEW -m statistic --mode nth --every 3 --packet 0 -j DNAT --to-destination $PROXY1
    # iptables -t nat -A PREROUTING --dst $GW_IP -p tcp --dport 3128 -m state --state NEW -m statistic --mode nth --every 2 --packet 0 -j DNAT --to-destination $PROXY2
    # iptables -t nat -A PREROUTING --dst $GW_IP -p tcp --dport 3128 -m state --state NEW -j DNAT --to-destination $PROXY3

Each rule of the statistic match keeps its own counter and sees only the connections that the preceding rules did not take, so the first rule takes every third new connection, the second takes every second one of the remainder, and the third takes whatever is left. This listing thus distributes the load evenly between the three proxy servers. Now we need three proxy servers hidden behind a single IP address. My solution is shown in the figure below.
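How a chain of nth rules splits new connections can be checked without touching a real firewall. The sketch below is a simplified model, not iptables itself. It assumes that every rule has a private counter, sees only the connections not matched by earlier rules, and matches when its counter modulo `--every` equals `--packet`. The `EVERY:PACKET:TARGET` notation and the function name are invented for this example.

```shell
#!/bin/bash
# simulate TOTAL "EVERY:PACKET:TARGET"...
# Model: each rule has a private counter and sees only the connections that
# earlier rules did not match; it matches when counter % EVERY == PACKET.
simulate() {
    local total=$1; shift
    local -a rules=("$@") seen
    local -A hits
    local i r every packet target matched
    for r in "${!rules[@]}"; do seen[r]=0; done
    for ((i = 0; i < total; i++)); do
        matched=0
        for r in "${!rules[@]}"; do
            IFS=: read -r every packet target <<< "${rules[r]}"
            # The counter advances only for connections that reach this rule.
            if (( seen[r]++ % every == packet )); then
                hits[$target]=$(( ${hits[$target]:-0} + 1 ))
                matched=1
                break
            fi
        done
        (( matched )) || hits[UNMATCHED]=$(( ${hits[UNMATCHED]:-0} + 1 ))
    done
    for target in "${!hits[@]}"; do
        echo "$target ${hits[$target]}"
    done | sort
}

# Every 3rd, then every 2nd of the rest, then a catch-all ("every 1").
simulate 600 3:0:PROXY1 2:0:PROXY2 1:0:PROXY3
# PROXY1 200
# PROXY2 200
# PROXY3 200
```

Under the same model, three rules that all use `--every 3` with packets 0, 1 and 2 leave part of the connections unmatched, which is why the chain above decreases the divisor from rule to rule.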

Legend:

- LAN 192.168.0.0/24 - local area network;

- PROXY - a proxy server that the user sees;

- LAN 192.168.1.0/24 - local area network for proxy servers;

- PROXY1..PROXYN - Linux-based virtual machines running Squid and Samba integrated with Active Directory;

- PROXY_CONFIG is a virtual machine on which the proxy server management interface is deployed.

In this case, the “proxy server” that the user sees is actually a virtual machine hypervisor, on which the required number of proxy servers and their management interface are deployed.

With this structure, you can easily provide:

- passing packets from “VIP” MAC addresses around the proxy;

- proxy server scalability;

- fast recovery after a crash;

- a field for experimentation with Squid's configuration (it is enough to deploy a new virtual machine and test everything on it);

- quick removal of a problematic virtual machine from the pool of proxy servers;

- more or less optimal use of hardware resources.
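As an illustration of the first item in this list, the sketch below prints (but does not execute) iptables rules that accept forwarded traffic from a list of "VIP" MAC addresses. It assumes that the hypervisor also routes the LAN's traffic and that such rules belong in the FORWARD chain; the article does not show the rules actually used, and the addresses are placeholders.

```shell
#!/bin/bash
# vip_rules MAC...: print one ACCEPT rule per "VIP" MAC address.
# The rules are only printed, so the sketch is safe to run anywhere.
vip_rules() {
    local mac re='^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$'
    for mac in "$@"; do
        if [[ ! $mac =~ $re ]]; then
            echo "invalid MAC address: $mac" >&2
            return 1
        fi
        echo "iptables -A FORWARD -m mac --mac-source $mac -j ACCEPT"
    done
}

# Placeholder addresses for illustration.
vip_rules 00:11:22:33:44:55 66:77:88:99:AA:BB
```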

Squid's configuration is the same for all proxy servers, so the squid.conf file is stored on the PROXY_CONFIG machine in an NFS share.

To implement this scheme, the following hardware and software components were used:

- a computer with a Core i3 CPU, 4 GB of RAM and a 500 GB HDD;

- OS Ubuntu-Server 11.04;

- VirtualBox + phpvirtualbox.

To start and stop the virtual machines automatically when the computer is powered on and off, I wrote the vmstart and vmstop scripts, which start and stop all virtual machines one after another. I will not give their contents, since they simply use the VBoxHeadless and VBoxManage commands together with ping to check the state of a machine.
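For readers who want a starting point, here is a rough sketch of what such scripts might look like. It is not the author's code: the machine names and addresses passed to the functions are hypothetical, and the `VBOX` and `PING` variables exist only so that the commands can be swapped out (for example `VBOX=echo`) for a dry run.

```shell
#!/bin/bash
# Sketch of vmstart/vmstop-like helpers. VM names and addresses are hypothetical.
# VBOX and PING can be overridden, e.g. VBOX=echo for a dry run.
VBOX=${VBOX:-VBoxManage}
PING=${PING:-ping}

# wait_for_host ADDRESS [TRIES]: poll with ping until the guest answers.
wait_for_host() {
    local addr=$1 tries=${2:-30} i
    for ((i = 0; i < tries; i++)); do
        "$PING" -c 1 -W 1 "$addr" >/dev/null 2>&1 && return 0
        sleep 1
    done
    return 1
}

# start_vms "NAME=ADDRESS"...: start the machines one after another,
# waiting for each to answer ping before starting the next.
start_vms() {
    local entry name addr
    for entry in "$@"; do
        name=${entry%%=*}
        addr=${entry#*=}
        "$VBOX" startvm "$name" --type headless || return 1
        wait_for_host "$addr" || { echo "$name did not come up" >&2; return 1; }
    done
}

# stop_vms NAME...: ask each guest to shut down via the ACPI power button.
stop_vms() {
    local name
    for name in "$@"; do
        "$VBOX" controlvm "$name" acpipowerbutton
    done
}

# Example (not executed here):
#   start_vms PROXY_CONFIG=192.168.1.10 PROXY1=192.168.1.11
#   stop_vms PROXY1 PROXY_CONFIG
```

Starting PROXY_CONFIG first is a deliberate choice in the example, since the proxies take their squid.conf from it.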

Thus, several problems were solved:

- reducing the load on the proxy server - packets requesting a new connection are distributed among the N virtual proxy servers in turn; one proxy server with 650 MB of RAM feels quite comfortable with auth_param ntlm children = 200, and with three proxy servers the number of authorization helpers is already 600;

- scalability - if the servers need to be relieved, you can simply copy the hard disk of an existing proxy and have a new machine fully operational in 5-10 minutes;

- rational use of hardware resources - the two previous tasks were solved at the cost of a higher load on the processor;

- fault tolerance - it is enough to keep copies of the PROXY_CONFIG and PROXY1 machines, and after a crash you can restore the whole scheme while leisurely sipping coffee; for complete peace of mind you can periodically back up the hypervisor's hard disk, since Linux, fortunately, does not fall into a blue screen when you move the disk to another system unit.
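The arithmetic behind the first item (200 helpers per proxy, 600 with three proxies) works in the other direction too: given the total number of helpers you need, you can estimate how many virtual proxies to deploy. A small sketch, with a made-up function name and 200 helpers per proxy as the default:

```shell
#!/bin/bash
# proxies_needed HELPERS [PER_PROXY]: how many proxy VMs are required
# to provide HELPERS authorization helpers, rounding up.
proxies_needed() {
    local helpers=$1 per_proxy=${2:-200}
    echo $(( (helpers + per_proxy - 1) / per_proxy ))
}

proxies_needed 600   # prints 3
```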

Automatic switching between domain and non-domain configurations

Time showed that, in case of failures, it would be good if the proxy server could determine whether the connection to the domain controller is healthy, apply a controller-independent configuration if it is not, and return to the functionality for which all of this was conceived once the controller is restored.

Talk less, work more!

    #!/bin/bash

    # Check which configuration is active: squid.conf is a symlink,
    # and only the local target has 'local' in its name (grep returns 0)
    /bin/ls -la /etc/squid/squid.conf | /bin/grep 'local'
    LOCAL=$?
    echo Local configuration - $LOCAL

    # Check the connection to the domain by authenticating a test account
    /usr/bin/ntlm_auth --username=ad_user --password=ad_pass 2>/dev/null 1>/dev/null
    if [ $? != 0 ]; then
        echo No connection with domain
        if [ $LOCAL -eq 1 ]; then
            # The domain configuration is active: switch to the local one
            echo Reconfigure to local
            /bin/rm /etc/squid/squid.conf
            /bin/ln -s /etc/squid/squid.conf.local /etc/squid/squid.conf
            /usr/sbin/squid -k reconfigure
            # Notify the administrator
            /usr/bin/php5 /home/squiduser/mail/mail.php 'from' 'Proxy XX - Gone to non-domain mode' '=('
        fi
        # Try to rejoin the domain
        /etc/init.d/joindomain
    else
        echo OK connection to domain
        if [ $LOCAL -eq 0 ]; then
            # The local configuration is active: switch back to the domain one
            echo Reconfigure to domain
            /bin/rm /etc/squid/squid.conf
            /bin/ln -s /var/www/squid/squid.conf /etc/squid/squid.conf
            /usr/sbin/squid -k reconfigure
            # Notify the administrator
            /usr/bin/php5 /home/squiduser/mail/mail.php 'from' 'Gone to domain mode' '=)'
        fi
    fi

Notes:

- the /etc/squid/squid.conf file is a symbolic link to the file with either the domain or the local configuration (squid.conf or squid.conf.local);

- for the notification, the mail.php script is used, which sends a message to corporate mail;

- /etc/init.d/joindomain - a script that joins the computer to the domain.

The script logic is shown in the figure below.
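In text form, the same logic boils down to a decision over two facts: whether the domain answers and which configuration is currently active. The sketch below only names the steps and performs none of them; the step labels are invented for this illustration.

```shell
#!/bin/bash
# next_actions DOMAIN_OK LOCAL_ACTIVE
# Prints the steps taken during one run of the check, one per line.
# Both arguments are "yes" or "no"; the step names are labels for this sketch only.
next_actions() {
    local domain_ok=$1 local_active=$2
    if [ "$domain_ok" = no ]; then
        if [ "$local_active" = no ]; then
            echo switch_to_local
            echo notify_admin
        fi
        echo rejoin_domain      # attempted on every run while the domain is unreachable
    elif [ "$local_active" = yes ]; then
        echo switch_to_domain
        echo notify_admin
    fi
}

# Walk through all four combinations.
for domain_ok in yes no; do
    for local_active in yes no; do
        echo "domain_ok=$domain_ok local_active=$local_active ->" \
             $(next_actions "$domain_ok" "$local_active")
    done
done
```

Nothing happens in the steady state (domain reachable, domain configuration active), so running the check every minute costs next to nothing.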

So as not to have to synchronize the script across all proxy servers in the future, we put it on a network share on the PROXY_CONFIG machine, mount the share on all proxies, and add the script to cron to run every minute. Thus, if the connection to the domain is lost, downtime is reduced to one minute; that is, the evil accountants will either not have time to call, or will call but cheer up almost immediately.

Well, that's all. Everything works, there are no problems. I would like to immediately answer the frequently asked questions about the described configuration.

Question 1

Wouldn't it be easier to deploy one real proxy server on a good hardware platform than to bother with virtual machines?

Answer

Easier to deploy, yes; easier to manage, no. Of course, if the organization is small and you are responsible for both the proxy and the domain controllers, there is no point in going to such lengths. But where there is a distributed network of branches and the duties and responsibilities of administrators are separated, such a system is, pending a better infrastructure, the best I could come up with. Previously, when two new branches were suddenly connected, I had to dig into the configuration and raise the number of authorization helpers, wading through the terrible sluggishness of an OS deployed on bare hardware, and when the DC failed I had to unplug the network cable from the proxy (because without the DC everything hung even locally) and spend several dozen minutes waiting for it to unfreeze. Now I have already forgotten what the system unit that hosts all this looks like; I only remember that it was black.

Question 2

Why check the state of the connection to the domain? Isn't it easier to fix the controller?

Answer

See the answer to question 1 regarding the separation of responsibilities.

Source: https://habr.com/ru/post/134220/
