Shadow Detection Algorithm on Video

Introduction

In this article I wanted to talk about the algorithm that allows you to share real objects and shadows on the video image.

This algorithm was first implemented by me during the development of video analytics algorithms for the MagicBox IP video server , which is being developed by Synesis , in which I currently work. As you know, when detecting motion in a video sequence, the lighting conditions are not always perfect. And the simplest motion detector based on the difference between the current frame and a certain averaged background will react not only to real objects, but also to virtual ones: moving shadows and light hares. What is undesirable, as it can lead to a distortion of the shape of the detected objects and also to false alarms of the motion detector. This is true in sunny weather, and especially in the case of variable cloudiness. Therefore, the presence of an algorithm for the selection of shadows can have a very positive effect on the accuracy of the entire detector. But let's look at everything in order.

Motion detection

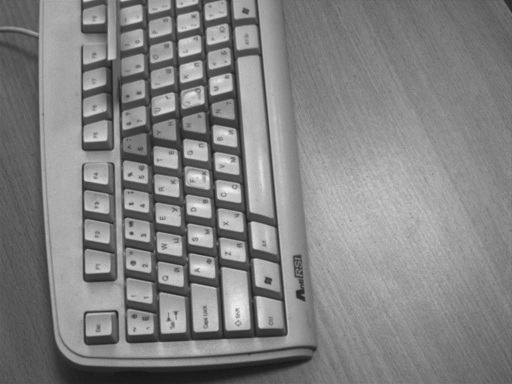

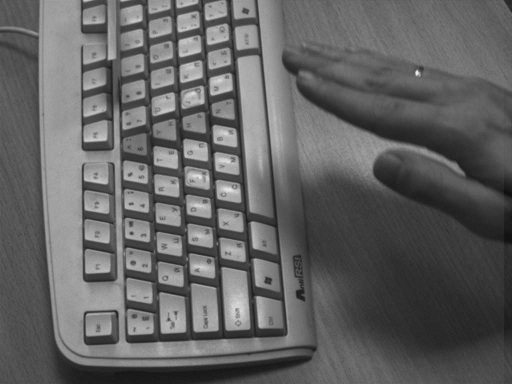

A simple algorithm was chosen for motion detection: from the averaged background

')

pixel subtracts the current frame

And it turns out the mask, which unfortunately includes not only a real object (my hand), but also a virtual (shadow from it).

Shadow properties

In order to separate the image of the real object from the image of the shadow (light hare), you need to select a set of properties (criteria) that they have different. The most important feature of the image of the shadow, which distinguishes it from the image of a real object is transparency. And indeed, through the shadow, the features of the background on which it overlaps clearly appear, although its overall illumination varies considerably. Therefore, to separate the real object and the shadow, you need to check the object for transparency, for which you should analyze the correlation between the original image and the background, taking into account changes in illumination. To do this, there are two fairly quick method, which we consider below.

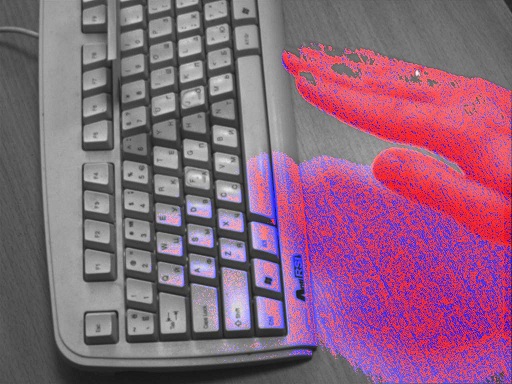

Method 1: Gradient Direction

The direction of the gradient has the remarkable property that is invariant to the illumination of the object. Indeed, if the left pixel is darker than the right, then this ratio will be maintained at any uniform illumination. Therefore, if we compare the deviation of the gradient direction for the background and the current frame with a certain threshold, then we can, in theory, divide the points belonging to the real object and the shadow.

In reality, due to noise, this method gives a significant error, which is clearly seen on the ghost image.

Method 2: Relative Correlation

For a completely uniform darkening of the background, for each of its points the condition is satisfied:

Current [x, y] = k * Background [x, y],

where k is some dimming factor, the same for the whole image. In reality, blackout is of course not uniform for the entire image, but with a high degree of confidence it can be considered uniform in a certain neighborhood of a given point. Therefore, if the deviation from equality

Current [x, y] * AverageBackground [x, y] = Background [x, y] * AverageCurrent [x, y]

does not exceed a certain threshold for the neighborhood of the point [x, y], then it can be considered a shadow.

However, as practice shows, this method also has a significant error, as significant areas of a real object are recognized as a shadow.

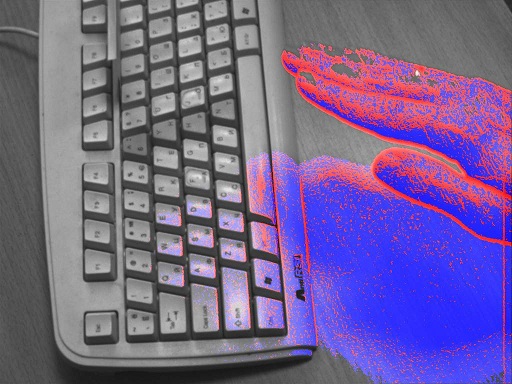

Method 3: Mixed Method

The logical next step is to combine these two methods. If the results of the previous two methods are combined (the shadow will count only those points that are the same for both methods), then we get the following result:

Of course, in this case too, a substantial error of both types is preserved: a part of a real object is considered a shadow, and vice versa. However, from the statistical point of view, the areas of the shadow and the real object are already well distinguished. If we apply the averaging filter, we can clearly separate the shadow area and the real object area:

Source: https://habr.com/ru/post/134197/

All Articles