Bandwidth control in Linux for beginners

Some time ago I was asked to set up the simplest possible traffic balancing at a remote branch office. The poor fellows work over ADSL, and sending large e-mails (scans of documents) clogs the entire upstream channel, which causes problems with the office's online applications running over VPN.

They use Linux (Fedora) as a gateway. I had seen a couple of times how such balancing is configured with ipfw on FreeBSD, and since I know the iptables mechanism quite well, I did not expect any particular problems. But after searching the Internet I was unpleasantly surprised to find that iptables would be of no help here, and that knowing the order in which packets pass through its tables and rules would hardly come in handy. What you need to learn is tc from the iproute2 package.

Unexpectedly, it took me two days to more or less understand how to balance traffic with iproute2. The first thing I came across was an article on HTB that was not the best choice for a newbie (here). Various examples from the Internet also left me stumped at times, since they often did not describe specific options or explain why they were used. That is why I have tried to collect what I learned in one article and, most importantly, to describe everything at a level accessible to beginners.

Let me say up front: we will only shape the traffic leaving a network interface. Incoming traffic can be controlled too, but that takes additional tricks.

Classless disciplines

In Linux, every network interface is assigned a queueing discipline (qdisc) for managing traffic. The whole traffic management system is built from disciplines. There is nothing to be afraid of: a discipline is just an algorithm for processing a queue of network packets.

Several disciplines can be used on one interface; the so-called root discipline (root qdisc) is attached directly to the interface. Each interface has its own root discipline.


pfifo_fast

By default, after the system boots, the root qdisc uses the pfifo_fast packet-processing algorithm.

Checking:

# tc qdisc

qdisc pfifo_fast 0: dev eth0 root bands 3 priomap 1 2 2 2 1 2 0 0 1 1 1 1 1 1 1 1

pfifo_fast is the usual “First In, First Out” algorithm, but with some traffic prioritization. This discipline contains three FIFO queues (bands) with different processing priorities. Packets are sorted into them based on the ToS (Type of Service) field of each IP packet. A packet placed in FIFO0 has the highest processing priority, one in FIFO2 the lowest. ToS deserves a separate discussion, so I suggest we settle for the fact that the operating system itself knows which ToS to assign to an outgoing IP packet. For example, telnet and ping packets will carry different ToS values.

0: - the handle of the root discipline.

Handles have the form major:minor, but for disciplines the minor number must always be 0, so it can be omitted.

The priomap parameter 1 2 2 2 1 2 0 0 1 1 1 1 1 1 1 1 maps the packet priority, which the kernel derives from the ToS field, to an internal queue (band). The position in the list, counted from 0, is the priority, and the number at that position is the band. For example, a packet with priority 4 is processed in queue 1, and one with priority 7 in queue 0.
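As a small sketch of how the priomap list is read, the shell function below treats the list from the tc qdisc output as a zero-based table: the position is the priority, the value is the band. The helper name band_for_priority is made up for this illustration and is not part of tc.

```shell
#!/bin/sh
# priomap as printed by "tc qdisc" for pfifo_fast (16 entries, priorities 0..15)
PRIOMAP="1 2 2 2 1 2 0 0 1 1 1 1 1 1 1 1"

# band_for_priority N: print the band that priority N maps to (hypothetical helper)
band_for_priority() {
    # Put the priority first, then the 16 priomap entries, into $1, $2, ...
    set -- "$1" $PRIOMAP
    prio=$1
    shift            # drop the priority itself
    shift "$prio"    # skip the entries for priorities 0..N-1
    echo "$1"        # the entry for priority N
}

band_for_priority 4   # prints 1
band_for_priority 7   # prints 0
```

Reading the table this way also shows that most priority values end up in band 1, the "normal" queue.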

Several sources state that the parameters of the pfifo_fast discipline cannot be changed; let us take their word for it.

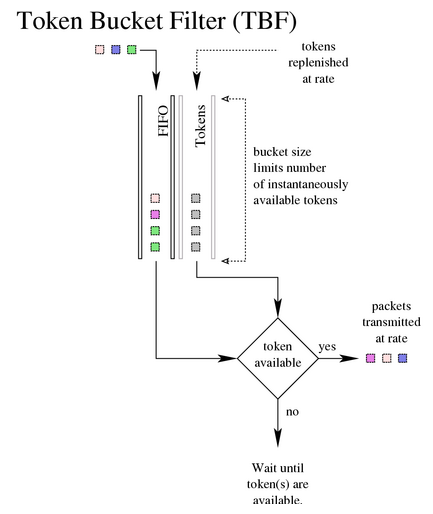

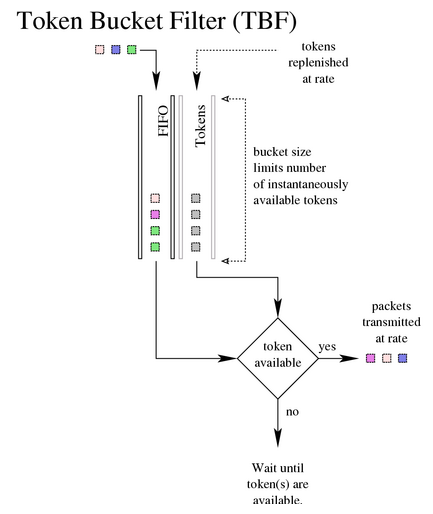

TBF

Now let us see how to limit the overall rate of outgoing traffic. To do this, we set the root discipline of the interface to TBF (Token Bucket Filter).

# tc qdisc add dev eth0 root tbf rate 180kbit latency 20ms buffer 1540

rate 180kbit - sets the transfer rate limit on the interface.

latency 20ms - sets the maximum time a packet may wait for tokens.

buffer 1540 - sets the size of the token bucket in bytes. The examples say that for a 10 Mbit/s limit a buffer of 10 Kbytes is enough. The main thing is not to make it too small; larger is fine. Approximate formula: rate_in_bytes / 100.
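The rule of thumb above can be written as a few lines of shell arithmetic. This is only a sketch: the helper name tbf_min_buffer is invented here, and the floor of 1540 bytes (so that the bucket never falls below one full-size frame) is an assumption based on the value used in the command above, not something tc computes for you.

```shell
#!/bin/sh
# tbf_min_buffer RATE_BITS_PER_SEC [FLOOR_BYTES]
# Prints a suggested TBF buffer size in bytes: rate_in_bytes / 100,
# but never less than FLOOR_BYTES (assumed default: 1540).
tbf_min_buffer() {
    rate_bits=$1
    floor=${2:-1540}
    bytes_per_sec=$((rate_bits / 8))
    buf=$((bytes_per_sec / 100))
    if [ "$buf" -lt "$floor" ]; then
        buf=$floor
    fi
    echo "$buf"
}

tbf_min_buffer 180000      # 180 kbit/s: 225 bytes by the formula, raised to 1540
tbf_min_buffer 10000000    # 10 Mbit/s: 12500 bytes
```

For the 10 Mbit/s case the result is in line with the "about 10 Kbytes" figure quoted from the examples.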

The TBF discipline works with tokens. The system generates tokens at a constant rate and places them in a buffer (bucket). Data leaves the interface only by spending tokens from the bucket (in TBF, tokens correspond roughly to bytes rather than to whole packets).

If data arrives at the same rate as tokens are generated, it is transmitted without delay.

If data arrives more slowly than tokens are generated, tokens accumulate in the bucket and can later be spent on a short burst of transmission above the rate limit.

If data arrives faster, there are not enough tokens. Packets wait for new tokens for a while and then start to be dropped.
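The three cases above can be tried out with a toy model. The script below is a simplified sketch, not the kernel's TBF: time advances in whole ticks, one token pays for one unit of data, and the qlimit argument stands in for what the latency parameter bounds in the real qdisc. All names are made up for the illustration.

```shell
#!/bin/sh
# simulate RATE BUCKET QLIMIT ARRIVALS...
#   RATE    tokens added per tick
#   BUCKET  maximum number of tokens the bucket can hold
#   QLIMIT  data units allowed to wait for tokens; the excess is dropped
#   ARRIVALS  data units arriving in each successive tick
simulate() {
    rate=$1 bucket=$2 qlimit=$3
    shift 3
    tokens=0 queue=0 sent_total=0 dropped_total=0 tick=0
    for arriving in "$@"; do
        tick=$((tick + 1))
        # Generate tokens at a constant rate, up to the bucket size
        tokens=$((tokens + rate))
        if [ "$tokens" -gt "$bucket" ]; then tokens=$bucket; fi
        # Send as much queued data as the available tokens pay for
        queue=$((queue + arriving))
        if [ "$queue" -lt "$tokens" ]; then sent=$queue; else sent=$tokens; fi
        tokens=$((tokens - sent))
        queue=$((queue - sent))
        # Data that would have to wait too long is dropped
        dropped=0
        if [ "$queue" -gt "$qlimit" ]; then
            dropped=$((queue - qlimit))
            queue=$qlimit
        fi
        sent_total=$((sent_total + sent))
        dropped_total=$((dropped_total + dropped))
        echo "tick $tick: in=$arriving sent=$sent dropped=$dropped tokens=$tokens queued=$queue"
    done
    echo "total sent=$sent_total dropped=$dropped_total queued=$queue"
}

# Two idle ticks let tokens pile up, then three ticks of overload follow
simulate 2 5 3 0 0 6 6 6 1
```

In this run the third tick sends 5 units although the rate is only 2 per tick (the saved-up tokens allow a burst), and the following overloaded ticks start dropping data once the queue is full.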

The two disciplines described above are so-called classless disciplines. They have a number of functional limitations: they can be attached only to an interface (or to a leaf class), and they cannot use packet filters. Accordingly, my task of balancing e-mail traffic cannot be solved with them alone.

By the way, the full set of classless disciplines is somewhat broader: pfifo, bfifo, sfq (gives packets from different flows an equal chance to be sent), esfq, etc.

Classful disciplines

If disciplines can be pictured as sections of water pipe, then classes are the connectors (fittings). A class can be a simple adapter fitting, or it can be an elaborate splitter with a dozen outlets.

The parent of a class can be either a discipline or another class.

Child classes can be attached to a class (joining several fittings together).

A class that has no child classes is called a leaf class. This is where data packets, having run through our “water supply system”, leave the traffic management system and are sent out by the network interface. By default, every leaf class has a fifo discipline attached, and it is this discipline that determines the order in which the class's packets are transmitted. The beauty is that we can replace this discipline with any other.

When a child class is added, this discipline is removed.

prio

Let us return to the mail traffic balancing problem and look at the classful prio discipline.

It is very similar to the pfifo_fast discipline described above. What makes it special is that three classes are created automatically when it is assigned (the number can be changed with the bands parameter).

Replace the root discipline of the interface with prio.

# tc qdisc add dev eth0 root handle 1: prio

handle 1: - sets the handle of the root qdisc. In classful disciplines it is then referenced when classes are attached.

Check the discipline:

# tc qdisc

qdisc prio 1: dev eth0 bands 3 priomap 1 2 2 2 1 2 0 0 1 1 1 1 1 1 1 1

Checking classes:

# tc -d -s class show dev eth0

class prio 1:1 parent 1:

Sent 734914 bytes 7875 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

class prio 1:2 parent 1:

Sent 1555058583 bytes 8280199 pkt (dropped 124919, overlimits 26443 requeues 0)

backlog 0b 0p requeues 0

class prio 1:3 parent 1:

Sent 57934378 bytes 802213 pkt (dropped 70976, overlimits 284608 requeues 0)

backlog 0b 0p requeues 0

We see three classes with identifiers 1:1, 1:2 and 1:3 attached to the parent discipline 1: of type prio (classes must share the major number of their identifier with their parent).

In other words, onto the root qdisc “pipe” we have fitted a tee that splits the data streams the same way pfifo_fast does. Guided by ToS, high-priority traffic goes to class 1:1, normal traffic to class 1:2, and outright junk to class 1:3.
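The major:minor identifiers can be taken apart with plain shell parameter expansion. The sketch below uses an invented helper name, split_handle. One detail worth keeping in mind is that tc reads both parts as hexadecimal numbers, so a class written as 1:10 has minor number 16 in decimal.

```shell
#!/bin/sh
# split_handle MAJOR:MINOR
# Prints the two parts of a tc handle / class id as decimal numbers.
split_handle() {
    major=${1%%:*}    # text before the colon
    minor=${1#*:}     # text after the colon (empty for a qdisc handle like "1:")
    # An omitted minor part means 0
    [ -n "$minor" ] || minor=0
    # tc interprets both parts as hexadecimal
    echo "major=$((0x$major)) minor=$((0x$minor))"
}

split_handle 1:      # major=1 minor=0
split_handle 1:3     # major=1 minor=3
split_handle 1:10    # major=1 minor=16
```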

Suppose the ADSL upstream channel provides 90 Kbytes/s. We split it into 20 Kbytes/s for mail and 70 Kbytes/s for everything else.

We will not specifically limit traffic from class 1:1. Its packets can always take the full width of the channel thanks to their high ToS priority, but the traffic volume in this class will be negligible compared to the other two. So we do not reserve a separate lane for it.

Standard traffic usually falls into class 1:2. To the second outlet of the tee class we connect a pipe discipline at 70 Kbytes/s:

# tc qdisc add dev eth0 parent 1:2 handle 10: tbf rate 70kbps buffer 1500 latency 50ms

To the third outlet of the tee we connect a pipe discipline at 20 Kbytes/s:

# tc qdisc add dev eth0 parent 1:3 handle 20: tbf rate 20kbps buffer 1500 latency 50ms
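A note on units, since they are easy to mix up: in tc the suffix kbps means kilobytes per second, while kbit means kilobits per second (as in the earlier rate 180kbit). The sketch below, with an invented helper name, converts the rates used in this article from one to the other.

```shell
#!/bin/sh
# kbytes_to_kbit N
# Convert N kilobytes per second (tc suffix "kbps")
# to kilobits per second (tc suffix "kbit").
kbytes_to_kbit() {
    echo $(( $1 * 8 ))
}

for r in 20 70 90; do
    echo "${r}kbps = $(kbytes_to_kbit "$r")kbit"
done
```

So rate 70kbps and rate 560kbit describe the same limit, and the whole 90 Kbytes/s channel corresponds to 720kbit.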

All three of these classes are leaves.

Now all that remains is to send mail traffic to class 1:3 instead of class 1:2, where it went before. This is done with the filters of classful disciplines.

# tc filter add dev eth0 parent 1: protocol ip prio 1 u32 match ip dport 25 0xffff flowid 1:3

parent 1: - a filter can be attached only to a discipline and is called from it. Based on the filter's result, the discipline decides in which class packet processing continues.

protocol ip - specifies the network protocol type.

prio 1 - this parameter confused me for a long time, because it is used in both classes and filters and is also the name of a discipline. Here prio sets the filter priority; filters with a lower prio value are tried first.

u32 - the so-called traffic classifier, which can select packets by any of their attributes: source / destination IP address, source / destination port, protocol type. The conditions themselves follow it on the command line.

match ip dport 25 0xffff - makes the filter fire for packets sent to port 25. 0xffff is the bitmask for the port number.

flowid 1:3 - specifies the class that packets are passed to when this filter fires.
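If mail leaves through more than one port, the same filter can simply be repeated per port. The sketch below only prints the commands instead of running them, so it can be tried without root rights. The ports 465 and 587 are an assumption added for illustration (the setup described here only uses port 25), and the names DEV, MAIL_PORTS and print_mail_filters are made up.

```shell
#!/bin/sh
DEV=eth0                   # interface name used throughout the article
MAIL_PORTS="25 465 587"    # 465 and 587 are assumed extra ports, not from the original setup

# print_mail_filters: print one u32 filter command per destination port.
# Every filter sends matching packets to class 1:3 of the prio qdisc 1:.
print_mail_filters() {
    for port in $MAIL_PORTS; do
        echo "tc filter add dev $DEV parent 1: protocol ip prio 1 u32 match ip dport $port 0xffff flowid 1:3"
    done
}

print_mail_filters
```

After checking the printed lines, they can be pasted into a root shell or piped to sh.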

Crude, but it does the job.

Let's look at the packet statistics:

# tc -s -d qdisc show dev eth0

# tc -s -d class show dev eth0

# tc -s -d filter show dev eth0

To quickly remove all classes and filters and return the root qdisc of the interface to its original state, use the command:

# tc qdisc del dev eth0 root

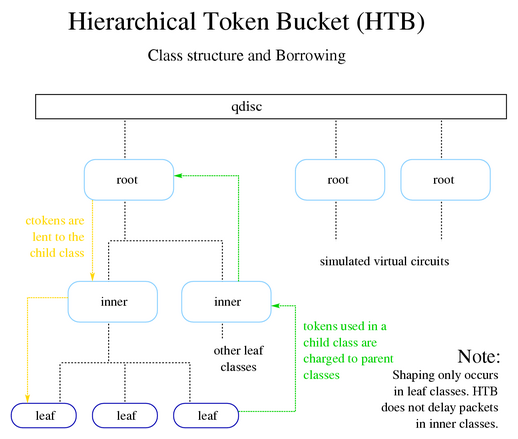

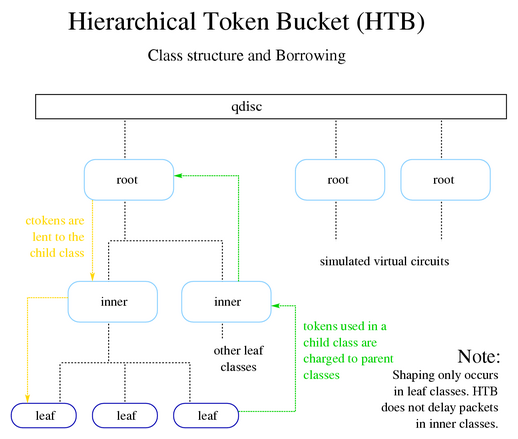

HTB

On the other hand, our upstream channel is already too thin to reserve 20 Kbytes/s just for sending e-mail. So it is better to use the classful HTB (Hierarchical Token Bucket) discipline. It lets child classes borrow bandwidth from their parent class.

# tc qdisc add dev eth0 root handle 1: htb default 20

default 20 - sets the default class. It handles packets that do not fall into any other class of the htb discipline. If you omit it, “default 0” is assumed and all unclassified (unfiltered) traffic is sent at the interface speed.

# tc class add dev eth0 parent 1: classid 1:1 htb rate 90kbps ceil 90kbps

Attach a class with identifier 1:1 to the root qdisc. This limits the speed on the interface to 90 Kbytes/s.

classid 1:1 - the class identifier.

rate 90kbps - sets the lower bandwidth threshold for the class.

ceil 90kbps - sets the upper bandwidth threshold for the class.

# tc class add dev eth0 parent 1:1 classid 1:10 htb rate 20kbps ceil 70kbps

Create class 1:10, a child of class 1:1. The filter will later send outgoing mail traffic to it.

rate 20kbps - sets the guaranteed lower bandwidth threshold for the class.

ceil 70kbps - sets the upper bandwidth threshold for the class. If the parent class has spare bandwidth (“extra” tokens), class 1:10 can temporarily raise its transfer rate up to the specified limit of 70 Kbytes/s.
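The interplay of rate and ceil can be illustrated with a deliberately simplified model: a class gets what it asks for, but no more than its ceil and no more than its own rate plus whatever the parent currently has spare. This sketch ignores how HTB actually divides spare bandwidth between several competing borrowers; the helper names are invented, and all numbers are in Kbytes/s.

```shell
#!/bin/sh
# min3 A B C: print the smallest of three integers
min3() {
    m=$1
    if [ "$2" -lt "$m" ]; then m=$2; fi
    if [ "$3" -lt "$m" ]; then m=$3; fi
    echo "$m"
}

# class_speed DEMAND RATE CEIL SPARE
# Simplified model: speed = min(demand, ceil, rate + spare parent bandwidth)
class_speed() {
    min3 "$1" "$3" $(( $2 + $4 ))
}

# Mail class 1:10 (rate 20, ceil 70) wants to send at 200 Kbytes/s
class_speed 200 20 70 70   # other traffic idle, 70 spare: prints 70
class_speed 200 20 70 0    # other traffic uses its full 70: prints 20
class_speed 10 20 70 70    # mail needs only 10: prints 10
```

The middle case is the important one for the branch office: however busy the mail class is, it cannot push other traffic below its guaranteed 70 Kbytes/s.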

# tc class add dev eth0 parent 1:1 classid 1:20 htb rate 70kbps ceil 90kbps

Create the default class. All other traffic will fall into it. In the same way, the rate and ceil parameters let it expand its bandwidth when there is no mail traffic.

# tc filter add dev eth0 parent 1: protocol ip prio 1 u32 match ip dport 25 0xffff flowid 1:10

A u32-based filter that directs packets going to port 25 into class 1:10.
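For convenience, the HTB commands above can be collected into one script. The sketch below is one possible way to do it: the run wrapper and the DRY_RUN switch are hypothetical additions that make the script print the commands by default instead of executing them, so it can be reviewed without root rights.

```shell
#!/bin/sh
DEV=${DEV:-eth0}         # interface to shape
DRY_RUN=${DRY_RUN:-1}    # hypothetical switch: 1 prints the commands, 0 executes them

# run CMD ARGS...: print the command in dry-run mode, execute it otherwise
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

setup_htb() {
    # Root qdisc; unclassified traffic goes to class 1:20
    run tc qdisc add dev "$DEV" root handle 1: htb default 20
    # Parent class: the whole 90 Kbytes/s channel
    run tc class add dev "$DEV" parent 1: classid 1:1 htb rate 90kbps ceil 90kbps
    # Mail: 20 Kbytes/s guaranteed, may borrow up to 70
    run tc class add dev "$DEV" parent 1:1 classid 1:10 htb rate 20kbps ceil 70kbps
    # Everything else: 70 Kbytes/s guaranteed, may borrow up to 90
    run tc class add dev "$DEV" parent 1:1 classid 1:20 htb rate 70kbps ceil 90kbps
    # Outgoing SMTP goes to the mail class
    run tc filter add dev "$DEV" parent 1: protocol ip prio 1 u32 match ip dport 25 0xffff flowid 1:10
}

setup_htb
```

Running it as root with DRY_RUN=0 would apply the configuration; tc qdisc del dev eth0 root removes it again.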

By the way, the documentation states that in HTB actual traffic shaping happens only in the leaf classes, in our case 1:10 and 1:20. Bandwidth parameters in the other HTB classes are needed only for the inter-class borrowing system to work.

When adding a class you can also specify the prio parameter. It sets the class priority (0 is the highest). When spare bandwidth is handed out, classes with a higher priority are offered it first; the guaranteed rate of each class is still honored.

Sources:

Linux Advanced Routing & Traffic Control HOWTO

Traffic Control HOWTO

A Tale of Linux and Traffic Management

Source: https://habr.com/ru/post/133076/
