Geographical binding sites: how is this done?

Every day more and more information accumulates on the Internet. And thanks to the Internet, everyone can get access to the data they need. On the other hand, to navigate in such a large array without the use of special tools is almost impossible. And such a tool of course are the search engines that help people navigate the ever-expanding sea of information.

Since the search engines took their first steps, developers have spent a tremendous amount of effort on improving the organization, navigation and search for documents. Today, probably the most used technique is a keyword search, giving users the opportunity to find information on a given topic. At the same time, the global expansion of the Internet leads to the fact that the amount of information found by a person when searching using only keywords is too large. By typing the same word in the search box, different people may want different results.

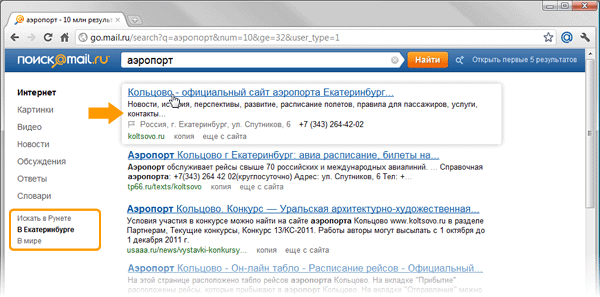

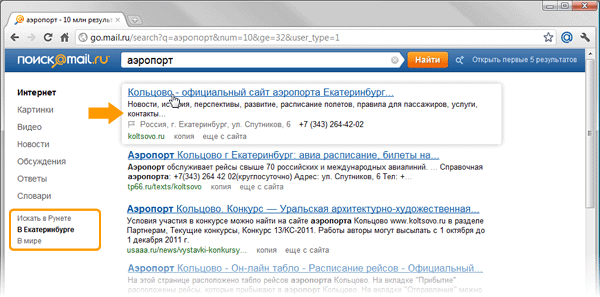

The figures show what the same query looks like, asked by users living in the cities of Novosibirsk and Yekaterinburg. It is clear that by asking the word “airport” for a search engine, people want to get the address of the city airport site:

')

To solve this problem using the keyword search is impossible. In the same time. it becomes the starting point for the formation of high-level semantic queries that can be used to find such information. Thus, additional metadata can be attached to the page, using which. improve the quality of the response of the search engine. For example, for geo-dependent queries such metadata will be information about the geographic location of the resource. Knowing where the desired object is physically located and having knowledge of the user's position, you can link this data and give the person information about the desired object.

In general, the task can be divided into two parts:

a) determining the location of the user;

b) determining the location of the desired resource.

In the article we propose a solution to the second part of the problem, i.e. how to determine the location of the Internet resource.

There are many sources where you can get information about the geography of the site. These include the following: the WHOIS database, directories, the context of pages, etc. In our article we will look at how to link a site to geography based on an analysis of the context of pages.

As a rule, the sources that can supply information about geography are sites of organizations that publish data on their locations - addresses and phone numbers. The solution to the problem of extracting information in our case will be divided into several parts:

• determination of typical templates of sites on which information about the location of the organization can be placed;

• extraction of candidates for the subsequent binding of the site to geographic information;

• filtering candidates.

At this stage, we try to determine the set of search patterns for the pages on which the proposed address is located. One of the most common locations for contact information is the root page. At the same time, this page is not always a reliable source of information, since there are websites, for example, placing ads that include well-readable addresses, some of which can go to the main page, so in the future, addresses extracted from the main page will be filtered . Another common location for contact information is the Contacts page. As a rule, there are links to it from the main page, and in most cases they follow a number of rules. For example, the link text may contain the word "contacts", "About us", etc.

After analyzing the structure of the sites of various organizations, we will select the most common sample templates for pages where you can find the address. Briefly, you can write down the following three steps:

• Search for addresses on the root page of the site.

• Search for links to the Contacts page.

• Search for addresses on the Contacts page.

As we said above, in the first stage we get the most likely pages on which contact information can be placed. From these pages you can extract something that is very similar to the address. Why is “very similar”? Because we will use the hidden Markov model for extraction.

Before we start building our model, we will define the data and try to simplify everything a bit. To begin with, we will determine that the number of significant cities in Russia is finite and, accordingly, can be described by a dictionary. Got the first simplification - using the dictionary of cities, you can find a reference point on the page. It can be assumed that if we are on the most likely contact page, then the city we have found may enter the address.

Go ahead. If you move from the point of entry to the left or right. You can get the address, but for this you need a system for evaluating a sequence of characters, probably included in the address. The HMM or the hidden Markov model can help us in this. But to build a model, we need to determine the following data:

• states of the model s belonging to the set that is, what can be mistaken for states;

that is, what can be mistaken for states;

• elements of the sequence v belonging to the set ; that is, what can be taken for the elements of a sequence.

; that is, what can be taken for the elements of a sequence.

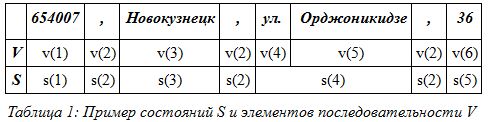

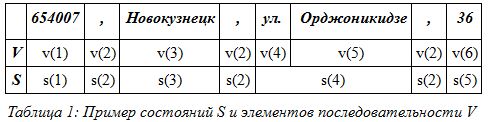

For example, take the address: 654007, Novokuznetsk, st. Ordzhonikidze, 36, and select from it the elements of the sequence V and the state S.

As can be seen in Table 1, we obtained six sequence elements and five states of the model. Thus, if we take individual words as elements of a sequence, then we can imagine what this will lead to when calculating the model. But we will make several simplifications to reduce the number of states of the set S and the number of elements of the sequence of the set V. If you look closely at table 1, you can see that the elements of the states s (2) and the elements of the sequence v (2) are repeated. If all elements of the address are divided into types, then this will lead us to a decrease in the model states. For example, you can combine all the modifiers of the streets into one element. In this case, the street, highway, lane ... form an element of the sequence . Thus, we obtain the second simplification - the translation of many well-known geographical names into one element of the sequence. Let's give an example in the form of a table:

. Thus, we obtain the second simplification - the translation of many well-known geographical names into one element of the sequence. Let's give an example in the form of a table:

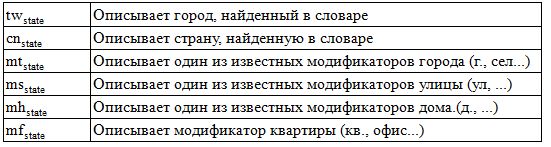

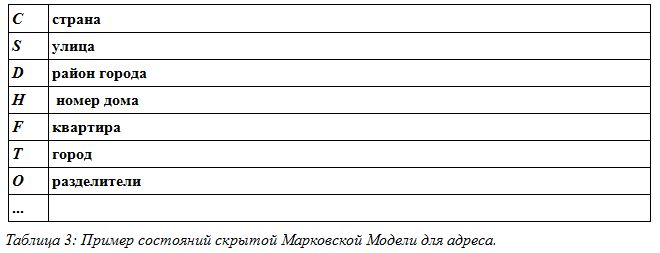

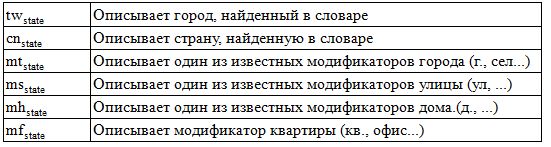

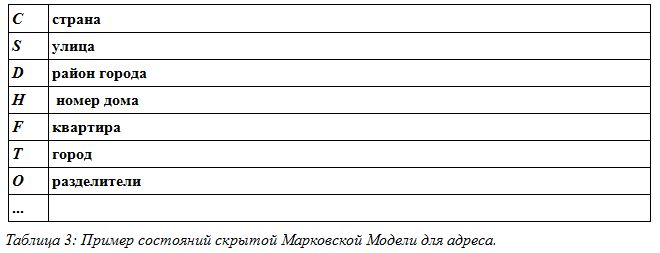

Thus, we get only 19 typed elements of the set V. The same can be done with the set S, that is, with the states of the model. For example, if during the analysis the model gives information about the street, we say that the model is in state S (street), if the model gives information about the city, we say. that the model is in state T (city). We obtain the third simplification of the model states. Examples are given in table 3.

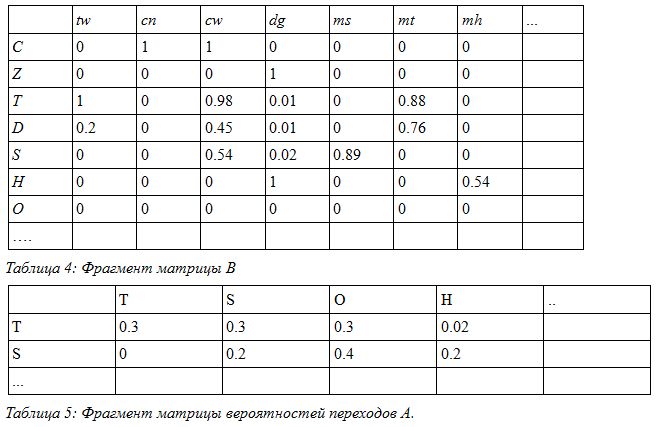

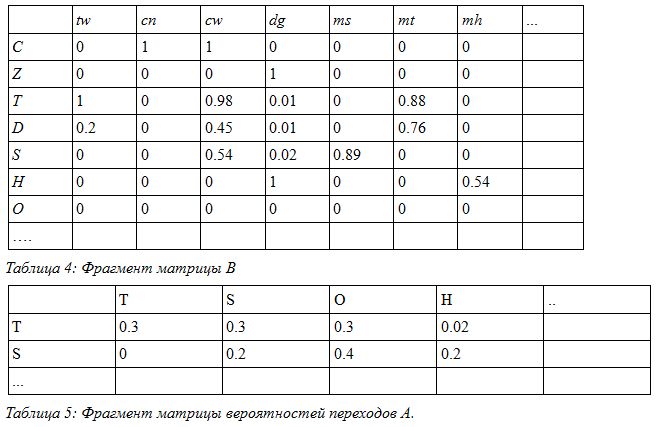

Now, knowing the description of the states of the model and the elements of the sequence, we create a training set on which our model is built. From the training set, we need to obtain the transition probability matrix A and B as the probability matrix for obtaining data from the set V at the moment when the model is in the state s.

For example, in our case, the fragments of the matrix B and A are as follows:

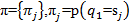

To complete the picture, we also need to consider the initial distributions. . And now, when we have a model built, in a brief record, it looks like

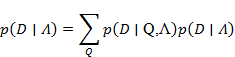

. And now, when we have a model built, in a brief record, it looks like  we take our sequence D surrounding the found city and find the probability that the words, i.e. the data, are like a model. We consider the probability:

we take our sequence D surrounding the found city and find the probability that the words, i.e. the data, are like a model. We consider the probability:

Since in this problem it is required to calculate only the probability of the occurrence of the address sequence in the vicinity of the city, for the solution we use the forward-backward algorithm.

Extracted addresses are filtered. The first stage of filtering is that additional information is also extracted from the page, such as a telephone, which is assigned to one or several addresses extracted from the page.

One of the comparisons is to check the code of the region indicated in the telephone number for compliance with the city indicated in the address.

The second stage of filtering includes a set of rules of thumb that are superimposed on the highlighted address. Such rules, for example, include a limit on the possible number of digits contained in a house number. After applying a number of rules, the extracted address is either accepted as one of the addresses describing the location of the organization, or is rejected.

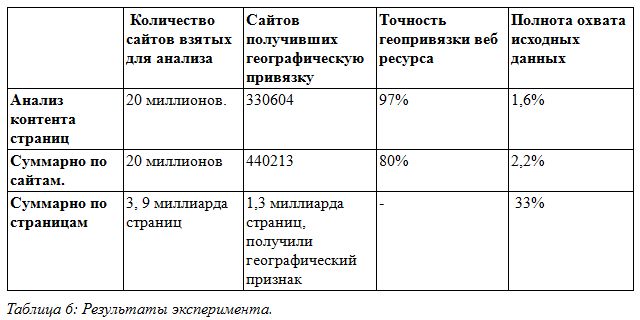

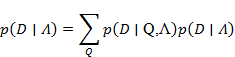

For the experiments, a database of pages downloaded from the Internet was taken, containing about 20 million sites and 3.9 billion pages. From this data, based on the analysis of the content of the pages, the site was geo-referenced using the algorithm described above. The results are presented in table 6.

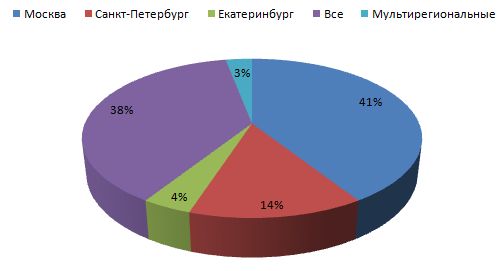

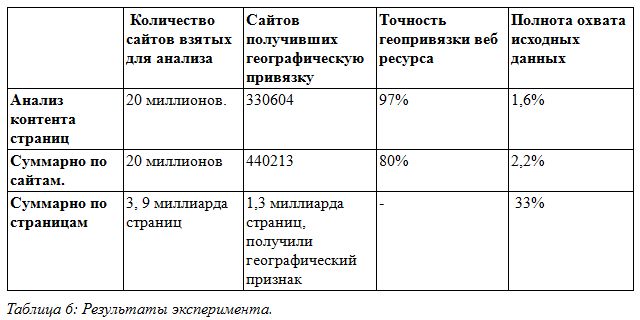

As the experiments showed, the described method is quite accurate. In his case, the accuracy reaches 97%. This is due to a number of restrictions, namely: the use of predefined patterns for finding a page with an address; using the dictionary of cities; comparison of phone number and city, as well as existing formal rules for recording addresses. All these limitations make it possible to achieve a sufficiently high accuracy in determining the geography of a web resource. On the other hand, these restrictions lead to a decrease in completeness in cases where the address is entered without a direct indication of the city or with an unknown city, if the contact page is located at an address that is not described in the known search patterns. Diagram 1 shows the distribution of sites by region - as can be seen from the diagram, the largest region to which the sites fall is Moscow.

At the moment we use the described method, as one of the methods, to extract information about the geolocation of sites and add it to the search engine index. Thus, a person can search for information on the sites of his region. Additionally, the extracted address can be displayed in address snippets.

Dmitry Solovyov

Search.com.Ru Developer

Since the search engines took their first steps, developers have spent a tremendous amount of effort on improving the organization, navigation and search for documents. Today, probably the most used technique is a keyword search, giving users the opportunity to find information on a given topic. At the same time, the global expansion of the Internet leads to the fact that the amount of information found by a person when searching using only keywords is too large. By typing the same word in the search box, different people may want different results.

The figures show what the same query looks like, asked by users living in the cities of Novosibirsk and Yekaterinburg. It is clear that by asking the word “airport” for a search engine, people want to get the address of the city airport site:

')

To solve this problem using the keyword search is impossible. In the same time. it becomes the starting point for the formation of high-level semantic queries that can be used to find such information. Thus, additional metadata can be attached to the page, using which. improve the quality of the response of the search engine. For example, for geo-dependent queries such metadata will be information about the geographic location of the resource. Knowing where the desired object is physically located and having knowledge of the user's position, you can link this data and give the person information about the desired object.

In general, the task can be divided into two parts:

a) determining the location of the user;

b) determining the location of the desired resource.

In the article we propose a solution to the second part of the problem, i.e. how to determine the location of the Internet resource.

There are many sources where you can get information about the geography of the site. These include the following: the WHOIS database, directories, the context of pages, etc. In our article we will look at how to link a site to geography based on an analysis of the context of pages.

Analyzing the contents of the site pages

As a rule, the sources that can supply information about geography are sites of organizations that publish data on their locations - addresses and phone numbers. The solution to the problem of extracting information in our case will be divided into several parts:

• determination of typical templates of sites on which information about the location of the organization can be placed;

• extraction of candidates for the subsequent binding of the site to geographic information;

• filtering candidates.

We define typical templates

At this stage, we try to determine the set of search patterns for the pages on which the proposed address is located. One of the most common locations for contact information is the root page. At the same time, this page is not always a reliable source of information, since there are websites, for example, placing ads that include well-readable addresses, some of which can go to the main page, so in the future, addresses extracted from the main page will be filtered . Another common location for contact information is the Contacts page. As a rule, there are links to it from the main page, and in most cases they follow a number of rules. For example, the link text may contain the word "contacts", "About us", etc.

After analyzing the structure of the sites of various organizations, we will select the most common sample templates for pages where you can find the address. Briefly, you can write down the following three steps:

• Search for addresses on the root page of the site.

• Search for links to the Contacts page.

• Search for addresses on the Contacts page.

We retrieve the address

As we said above, in the first stage we get the most likely pages on which contact information can be placed. From these pages you can extract something that is very similar to the address. Why is “very similar”? Because we will use the hidden Markov model for extraction.

Before we start building our model, we will define the data and try to simplify everything a bit. To begin with, we will determine that the number of significant cities in Russia is finite and, accordingly, can be described by a dictionary. Got the first simplification - using the dictionary of cities, you can find a reference point on the page. It can be assumed that if we are on the most likely contact page, then the city we have found may enter the address.

Go ahead. If you move from the point of entry to the left or right. You can get the address, but for this you need a system for evaluating a sequence of characters, probably included in the address. The HMM or the hidden Markov model can help us in this. But to build a model, we need to determine the following data:

• states of the model s belonging to the set

that is, what can be mistaken for states;

that is, what can be mistaken for states;• elements of the sequence v belonging to the set

; that is, what can be taken for the elements of a sequence.

; that is, what can be taken for the elements of a sequence.For example, take the address: 654007, Novokuznetsk, st. Ordzhonikidze, 36, and select from it the elements of the sequence V and the state S.

As can be seen in Table 1, we obtained six sequence elements and five states of the model. Thus, if we take individual words as elements of a sequence, then we can imagine what this will lead to when calculating the model. But we will make several simplifications to reduce the number of states of the set S and the number of elements of the sequence of the set V. If you look closely at table 1, you can see that the elements of the states s (2) and the elements of the sequence v (2) are repeated. If all elements of the address are divided into types, then this will lead us to a decrease in the model states. For example, you can combine all the modifiers of the streets into one element. In this case, the street, highway, lane ... form an element of the sequence

. Thus, we obtain the second simplification - the translation of many well-known geographical names into one element of the sequence. Let's give an example in the form of a table:

. Thus, we obtain the second simplification - the translation of many well-known geographical names into one element of the sequence. Let's give an example in the form of a table:

Thus, we get only 19 typed elements of the set V. The same can be done with the set S, that is, with the states of the model. For example, if during the analysis the model gives information about the street, we say that the model is in state S (street), if the model gives information about the city, we say. that the model is in state T (city). We obtain the third simplification of the model states. Examples are given in table 3.

Now, knowing the description of the states of the model and the elements of the sequence, we create a training set on which our model is built. From the training set, we need to obtain the transition probability matrix A and B as the probability matrix for obtaining data from the set V at the moment when the model is in the state s.

For example, in our case, the fragments of the matrix B and A are as follows:

To complete the picture, we also need to consider the initial distributions.

. And now, when we have a model built, in a brief record, it looks like

. And now, when we have a model built, in a brief record, it looks like  we take our sequence D surrounding the found city and find the probability that the words, i.e. the data, are like a model. We consider the probability:

we take our sequence D surrounding the found city and find the probability that the words, i.e. the data, are like a model. We consider the probability:

Since in this problem it is required to calculate only the probability of the occurrence of the address sequence in the vicinity of the city, for the solution we use the forward-backward algorithm.

Filter the data

Extracted addresses are filtered. The first stage of filtering is that additional information is also extracted from the page, such as a telephone, which is assigned to one or several addresses extracted from the page.

One of the comparisons is to check the code of the region indicated in the telephone number for compliance with the city indicated in the address.

The second stage of filtering includes a set of rules of thumb that are superimposed on the highlighted address. Such rules, for example, include a limit on the possible number of digits contained in a house number. After applying a number of rules, the extracted address is either accepted as one of the addresses describing the location of the organization, or is rejected.

What did you get?

For the experiments, a database of pages downloaded from the Internet was taken, containing about 20 million sites and 3.9 billion pages. From this data, based on the analysis of the content of the pages, the site was geo-referenced using the algorithm described above. The results are presented in table 6.

As the experiments showed, the described method is quite accurate. In his case, the accuracy reaches 97%. This is due to a number of restrictions, namely: the use of predefined patterns for finding a page with an address; using the dictionary of cities; comparison of phone number and city, as well as existing formal rules for recording addresses. All these limitations make it possible to achieve a sufficiently high accuracy in determining the geography of a web resource. On the other hand, these restrictions lead to a decrease in completeness in cases where the address is entered without a direct indication of the city or with an unknown city, if the contact page is located at an address that is not described in the known search patterns. Diagram 1 shows the distribution of sites by region - as can be seen from the diagram, the largest region to which the sites fall is Moscow.

At the moment we use the described method, as one of the methods, to extract information about the geolocation of sites and add it to the search engine index. Thus, a person can search for information on the sites of his region. Additionally, the extracted address can be displayed in address snippets.

Dmitry Solovyov

Search.com.Ru Developer

Source: https://habr.com/ru/post/132762/

All Articles