A little more about P and NP

There is a big difference between difficult tasks and complex tasks. A task may not have effective solutions in the worst cases, but it can remain easily solved for most cases, or for cases that arise in practice. Therefore, generally accepted definitions of the complexity of tasks may turn out to be relatively meaningless in terms of real complexity, since two tasks can be NP-complete, but one can be solved quickly in most cases and the other cannot. As a consequence, the notion of “complexity on average” plays an important role in the theory of complexity (here, by “average” we mean the expectation of solution time).

To illustrate the central role of this concept, one can imagine five different possible worlds (possible - because it has not yet been proved that they are unreal, and ours can turn out to be any of them) and see how the conditions in them will affect informatics and life in general.

In particular, for the demonstration from the point of view of a person, we will use the sad story of Professor Grouse, the very mathematics teacher who asked the class a task to count the sum of numbers from 1 to 100. Everyone knows that his trouble in the class turned out to be little Gauss, who quickly I noticed patterns of arithmetic progression and almost instantly counted this amount. But few know that after this the Professor was obsessed with the obsession to take revenge on Gauss and humiliate him in front of the whole class, inventing tasks that Gauss could not solve. This led the Professor to an insane asylum (not the most pleasant end, especially in the 19th century), and Gauss developed an interest in number-theoretic algorithms. Let's try to imagine what it would be in different worlds that we will consider.

')

Algorithm

Algorithm is a world in which P = NP. In this world, the Professor would be even less fortunate than in reality. Since the Professor needs to confuse Gauss with the task on which he (the Professor) then himself has to show the class the right answer, he is limited in choosing tasks to those that have an easily verifiable solution (that is, tasks from NP). Gauss can use the verification method to automatically solve this problem.

The method of automatically obtaining an algorithm for solving problems from the algorithm for checking the correct solution will revolutionize the computer science of that world. Tasks that seemed indestructible will prove trivial. Almost all optimization tasks will be simple and automatic. For example, heuristics will no longer be used in designing VLSIs, instead optimal wiring will be generated if an optimality criterion is set. Programming languages will no longer contain instructions describing how to perform calculations. Instead, they will only describe the properties that the output should have in relation to the input data. The compiler will independently translate the specification language into the solution algorithm.

It is less obvious that P = NP will make many aspects of artificial intelligence programs trivial. It will be possible to use learning systems based on the principle of "Occam's razor" to automatically train the computer to perform actions that people can do. It will be sufficient to provide a training sample of the input data and output data produced by a human expert, and the computer will output the simplest algorithm that will produce the same answers as the expert. So, the computer can be taught to recognize and parse sentences in natural language, you only need to provide a sufficient number of examples of correct and incorrect sentences (here it is assumed that there is some simple algorithm that people use to parse natural languages).

On the other hand, in Algorithmic there will be no possibility to distinguish people and computers from an information point of view. These learning algorithms can easily learn to imitate the behavior of other machines and even people. Any code that can be developed can be cracked just as easily. There will be little benefit from trying to hide the algorithm on which the code is based, since the same algorithm can be easily obtained by having a small number of ciphertext-playtext pairs. It will not be possible to provide access to information for several people without making it available to all. Therefore, any identification methods will need to be based on physical measurements. And the security of identification will be based on the immutability of physical parameters and resistance to external influences of data transmission channels from the meter to the identification device.

Heuristic

A heuristic is a world in which problems from an NP do not have effective solutions at worst, but are easily solvable on average (for some probability distribution over a set of inputs).

Heuristics, in some way, paradoxical world. Here there are complex instances of problems from NP, but to find such a complex instance is in itself a difficult task to solve. In this world, the Professor will have the opportunity to find tasks that will be too tough for Gauss. But he will have to spend a week searching for a task that Gauss will not be able to solve during the day, and a year to find a task that Gauss will spend a month on (in this case, it is assumed that Gauss has some polynomial advantage over the professor, because Gauss all, genius). Most likely, life is not so severe to provide us with complex tasks, so long as life does not appear to be raspberries. Therefore, for practical purposes, this world is almost indistinguishable from Algorithms.

Or is it still different? In Heuristics, the average time to solve a problem from NP depends on the average “thinking time” for this problem. This somewhat complicates the situation. Suppose, on average, the computation of a solution to a problem is twice as long as inventing a solution. If we spend time T on thinking about task No. 1, then we will spend 2T time units on calculating the solution. The solution of this problem influences the solution of problem No. 2 we spent 3T time units on thinking about solving the problem №2. Therefore, 6T time units will be spent on solving Problem 2. Which leads to problem number 3, to think about which we have already spent 10T time units, therefore 20T will be spent on the solution. As this recursion grows exponentially, after a few steps we will cross the border between the "realizable" and the "unreal."

In the case of VLSI design, effective solutions will no longer be necessary here, since in this case we are not interested in most of the possible schemes that are suitable for the specifications, but the minimum options that satisfy the specifications are important. Such schemes may not have a good distribution, and, since it will most likely take exponential time to find them, there are no guarantees that they are not the very “worst cases” that the Heuristics algorithms do not work well on.

In cryptography and network security there will be no fundamental differences with algorithms. It would be of little use if bona fide users spend a lot of time searching for an instance of a task that could uniquely identify them when an attacker can solve this instance for a comparable amount of time (it is assumed that an attacker who wants to hack the system uses significantly more resources than bona fide user).

Pessilandia

Pessilandia is the worst possible case - it has problems that are difficult to solve even on average, but there are no one-way functions. The absence of one-sided functions means the following: for any problem that is easy to calculate, it is also easy to find a solution to the inverse problem, in the sense that for almost all values of x, if given F (x), then you can find some x ', such that F (x ') = F (x), and approximately the same time that is spent on the calculation of F (x).

In Pessilandia, the Professor would be able to set Gauss puzzles that even a young genius could not solve. But the Professor himself would not have been able to demonstrate a solution, so the humiliation of Gauss would be incomplete.

In this world, in many areas, tasks would not have easy solutions. Progress would be the same as in our world: it would slowly advance through a deeper understanding of the world and through the use of not entirely satisfactory heuristics. Common problem solving methods would not work in most areas. However, several useful algorithms would be possible based on the absence of one-way functions. For example, a data compression method in which, knowing the distribution of the input data, in the limit could be compressed to the expected length in accordance with the entropy of the distribution.

In this world there will be no possibility to use complex tasks in cryptography. A task to which no one knows the answer cannot be used to distinguish a bona fide user from an attacker.

Minicript

Minicript has one-way functions, but public-key cryptography is impossible. Here we understand by public-key cryptography the task of secret communication through public communication channels, although, strictly speaking, public-key cryptography is only one of the ways to solve this problem.

One-way functions can be used to generate complex, but solved problems, namely: the generator will choose x, calculate y = F (x), and set the search task to “find x such that F (x ') = y”. Thus, in the Minicript, the Professor will finally be able to prevail over Gauss in front of the whole class.

In the network, participants will be able to identify themselves to other users, as well as authenticate messages using a digital electronic signature. It will be possible to prove the facts about the secret without revealing the other secret information contained in it. If a small amount of information is transmitted in advance between the participants, then it is possible to establish a reliable secret code between two participants in the network that will allow them to communicate in private using open communication channels. However, it will be impossible to conduct secret voting through open channels, or to ensure secure negotiations, without sending in advance some information through secure channels. It will not be possible to create anonymous digital money.

Cryptomania

In Cryptomania, public key cryptography exists. In Cryptomania, Gauss will be degraded even more; The professor and his pet pupil will be able to jointly choose a task for which they both will know the answer, but Gauss will not be able to solve it. Moreover, in this world, the Professor will be able to make everyone in the class know how to solve a given task, except Gauss.

The existence of public key protocols implies the existence of one-way functions, so pseudo-randomness, digital signatures, identification, protocols with zero knowledge and so on still exist in this world. Also, if a secret exchange is performed using one-way functions with a secret (and all known protocols use such functions directly or indirectly), any imaginable cryptographic tasks can be solved. Unlike other worlds, where creating confidentiality is a technological challenge, technologies in Cryptomania, on the contrary, will limit the ability of users to reduce confidentiality. Most decisions on how much privacy is allowed to citizens will be made as a result of political and social processes, and not because of technical capabilities.

This world is most similar to ours with you, at least as long as it is believed that known public key protocols are reliable.

R. Impagliazzo, " A personal view of the average-case complexity ," sct, pp.134, 10th Annual Structure in Theory Conference (SCT'95), 1995

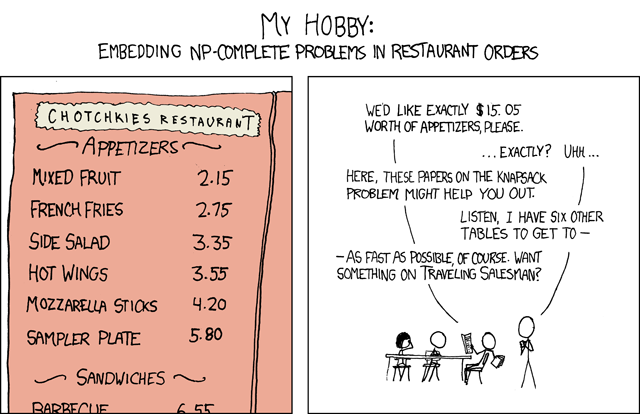

The image is borrowed from the site XKCD .

Source: https://habr.com/ru/post/132127/

All Articles