Recirculating Neural Networks

Recirculating neural networks are multilayer neural networks with reverse propagation of information. The reverse propagation takes place through bidirectional connections whose weights differ in the two directions. When signals propagate forward, the input data is compressed; when they propagate backward, they are transformed so as to restore the input image. Recirculating networks are trained without a teacher (unsupervised learning).

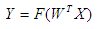

Recirculation networks are characterized by both a direct transformation of information, Y = f(X), and an inverse one, X = f(Y). The goal of such a transformation is the best auto-prediction, or self-reproduction, of the vector X. Recirculating neural networks are used to compress information (direct transformation) and to restore the original (inverse transformation). Such networks self-organize during operation. They were proposed in 1988. The theoretical basis of recirculation neural networks is principal component analysis.

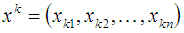

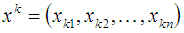

Principal component method

The principal component method is used in statistics to compress information without significant loss of information content. It consists of a linear orthogonal transformation of the input vector X of dimension n into an output vector Y of dimension p, where p < n. The components of Y are uncorrelated, and the total variance is preserved by the transformation. The set of input patterns is represented as a matrix:

$$X = [X^1, X^2, \ldots, X^L],$$

where $X^k = [x_1^k, x_2^k, \ldots, x_n^k]^T$ corresponds to the k-th input image and L is the total number of images.

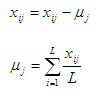

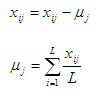

We assume that the matrix X is centered, that is, the mean vector μ = 0. This is achieved by the following transformation:

$$x_j^k := x_j^k - \mu_j, \qquad \mu_j = \frac{1}{L}\sum_{k=1}^{L} x_j^k, \qquad j = 1, \ldots, n.$$

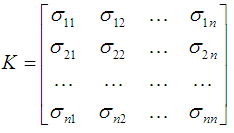

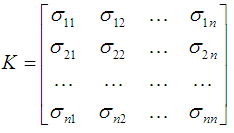
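The centering step can be sketched in Python with NumPy as follows. This is a minimal illustration, assuming the data matrix stores one image per column (shape n × L, as in the matrix X of input patterns); the function name `center` is hypothetical.

```python
import numpy as np

def center(X):
    """Center a data matrix X of shape (n, L), where column k is the image X^k.

    Returns the centered matrix and the mean vector mu (length n).
    """
    mu = X.mean(axis=1)            # mu_j = (1/L) * sum_k x_j^k
    return X - mu[:, None], mu     # x_j^k - mu_j for every component and image

# Three 2-component "images" stored as columns (L = 3, n = 2).
X = np.array([[1.0, 2.0, 3.0],
              [4.0, 6.0, 8.0]])
Xc, mu = center(X)
print(mu)                # [2. 6.]
print(Xc.mean(axis=1))   # [0. 0.]
```

After centering, every row of the matrix has zero mean, which is exactly the condition μ = 0.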

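The covariance matrix of the input data X is defined as

$$R_X = \begin{bmatrix} \sigma_{11} & \sigma_{12} & \cdots & \sigma_{1n} \\ \vdots & \vdots & \ddots & \vdots \\ \sigma_{n1} & \sigma_{n2} & \cdots & \sigma_{nn} \end{bmatrix},$$

where $\sigma_{ij}$ is the covariance between the i-th and j-th components of the input images.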

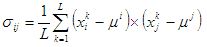

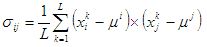

The elements of the covariance matrix can be calculated as

$$\sigma_{ij} = \frac{1}{L}\sum_{k=1}^{L} (x_i^k - \mu_i)(x_j^k - \mu_j),$$

where i, j = 1, ..., n.

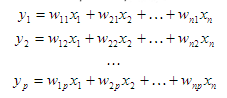

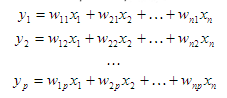
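A possible NumPy sketch of the covariance computation, assuming a 1/L normalization (the exact normalization of the original formula is an assumption here; 1/(L − 1) is also common). `np.cov` with `bias=True` uses the same 1/L normalization, so it serves as a cross-check; the helper name `covariance` is hypothetical.

```python
import numpy as np

def covariance(X):
    """Covariance matrix [sigma_ij] of X with shape (n, L), using 1/L normalization."""
    L = X.shape[1]
    Xc = X - X.mean(axis=1, keepdims=True)   # subtract mu_i from every row
    return (Xc @ Xc.T) / L                    # sigma_ij = (1/L) sum_k (x_i^k - mu_i)(x_j^k - mu_j)

X = np.array([[1.0, 2.0, 3.0],
              [4.0, 6.0, 8.0]])
R = covariance(X)

# Rows are variables (rowvar=True by default); bias=True divides by L.
assert np.allclose(R, np.cov(X, bias=True))
print(R)
```

The matrix is symmetric (σ_ij = σ_ji), and its diagonal holds the variances of the individual components.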

The principal component method is to find such linear combinations of source variables.

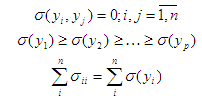

what

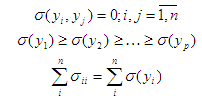

These conditions mean that the variables $y_i$ are uncorrelated, ordered by decreasing variance, and that the sum of the variances of the input images is preserved. The subset of the first p variables $y_i$ therefore accounts for most of the total variance, which yields a compressed representation of the input information.

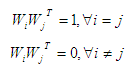
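The properties listed above can be checked numerically. The sketch below is a minimal illustration, not the article's code: it computes the principal directions as eigenvectors of the covariance matrix (the standard way to obtain PCA weights), projects hypothetical toy data onto them, and verifies that the resulting $y_i$ are uncorrelated, ordered by decreasing variance, and preserve the total variance when p = n.

```python
import numpy as np

def principal_components(X, p):
    """Top-p principal directions of X (shape n x L, one image per column).

    Returns W (n x p), whose columns W_i are eigenvectors of the covariance
    matrix sorted by decreasing eigenvalue, and all eigenvalues in that order.
    """
    L = X.shape[1]
    Xc = X - X.mean(axis=1, keepdims=True)
    R = (Xc @ Xc.T) / L                      # covariance matrix, 1/L normalization
    eigvals, eigvecs = np.linalg.eigh(R)     # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]        # reorder: largest variance first
    return eigvecs[:, order[:p]], eigvals[order]

# Hypothetical correlated toy data: 3 components, 500 samples.
rng = np.random.default_rng(0)
A = np.array([[3.0, 0.0, 0.0],
              [1.0, 1.0, 0.0],
              [0.5, 0.2, 0.1]])
X = A @ rng.standard_normal((3, 500))
Xc = X - X.mean(axis=1, keepdims=True)

W, variances = principal_components(X, p=3)
Y = W.T @ Xc                                 # y_i = W_i^T X for every image
cov_Y = (Y @ Y.T) / X.shape[1]

uncorrelated = np.abs(cov_Y - np.diag(np.diag(cov_Y))).max() < 1e-10
ordered = bool(np.all(np.diff(np.diag(cov_Y)) <= 1e-12))
preserved = np.isclose(np.trace(cov_Y), np.trace(Xc @ Xc.T) / X.shape[1])
print(uncorrelated, ordered, preserved)
print(variances[0] / variances.sum())        # share of total variance in y_1
```

The last line shows how much of the total variance the first component alone captures, which is why keeping only the first p components loses little information.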

The variables $y_i$, i = 1, ..., p, are called the principal components. In matrix form, the principal component transformation can be written as

$$Y = W^T X,$$

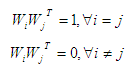

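where the rows of the matrix $W^T$ must satisfy the orthogonality condition

$$W_i^T W_j = \begin{cases} 1, & i = j, \\ 0, & i \ne j, \end{cases}$$

and the vector $W_i$ is defined as

$$W_i = [w_{1i}, w_{2i}, \ldots, w_{ni}]^T.$$

To determine the principal components, it is therefore necessary to find the weight vectors $W_j$, j = 1, ..., p.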

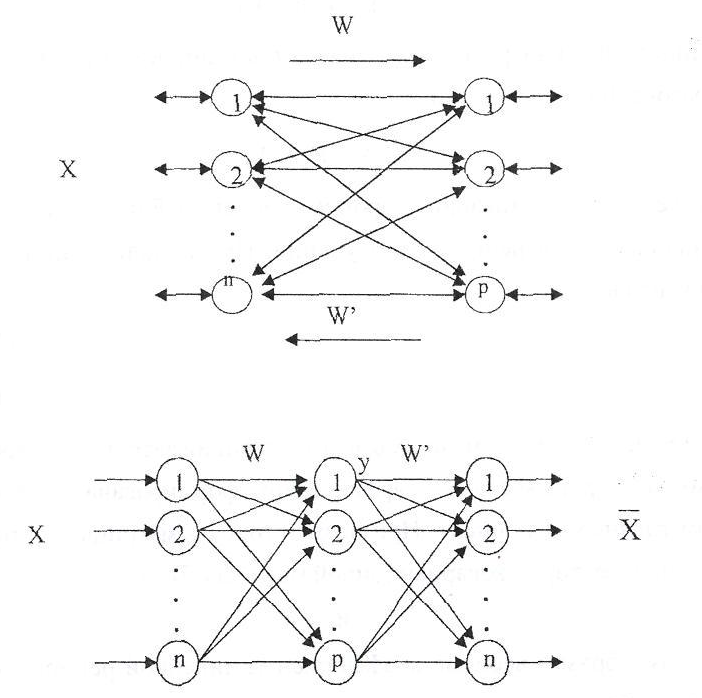

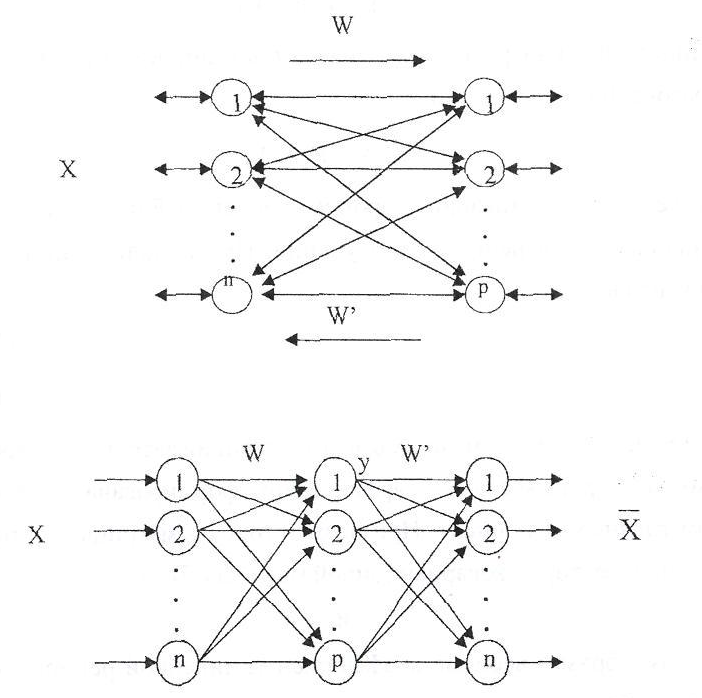
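In matrix form, with p < n, the same weights compress each n-component image to p components. This sketch uses hypothetical random data (n = 5, p = 2) and obtains W from the eigenvectors of the covariance matrix; it checks the orthogonality condition $W^T W = I_p$ and that the matrix product $W^T X$ agrees with the component-wise sums $w_{1i}x_1 + \ldots + w_{ni}x_n$.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p, L = 5, 2, 100
X = rng.standard_normal((n, n)) @ rng.standard_normal((n, L))
X = X - X.mean(axis=1, keepdims=True)            # centered, as assumed in the text

eigvals, eigvecs = np.linalg.eigh((X @ X.T) / L)
W = eigvecs[:, np.argsort(eigvals)[::-1][:p]]    # columns W_1..W_p, shape (n, p)

# Orthogonality condition: rows of W^T are orthonormal, i.e. W^T W = I_p.
assert np.allclose(W.T @ W, np.eye(p))

Y = W.T @ X                                      # matrix form Y = W^T X, shape (p, L)

# Component form y_i = w_1i x_1 + ... + w_ni x_n, checked for the first image.
y_first = [sum(W[j, i] * X[j, 0] for j in range(n)) for i in range(p)]
assert np.allclose(Y[:, 0], y_first)
print(Y.shape)   # (2, 100)
```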

Recirculating neural network architecture

A recirculating neural network consists of two layers of neural elements interconnected by bidirectional connections.

Either layer can serve as the input or the output layer. A layer that serves as the input performs only a distribution function, passing the input signals on to the other layer; otherwise, its neural elements perform processing. The weights of the forward and backward connections are described by the weight matrices W and W', respectively. For clarity, the recirculation network can be drawn in unfolded (expanded) form.

This unfolded representation is equivalent to the original one and shows the complete information conversion cycle: the intermediate layer of neural elements encodes (compresses) the input data X, and the last layer reconstructs the input from the compressed information Y. The layer corresponding to the connection matrix W is called the forward layer, and the layer corresponding to W' the reverse layer.

The recirculation network is designed both to compress data and to restore compressed information. Data compression is carried out by the forward transformation

$$Y = F(W^T X).$$

Data recovery, or reconstruction, is carried out by the inverse transformation

$$\bar{X} = F(W' Y).$$
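The two transformations can be expressed as a small class. This is a hypothetical sketch: the class name, `W_rev` (standing in for W', stored as an n × p matrix), the random initialization, and the default tanh activation are all assumptions. The weights here are untrained, so the reconstruction is not yet meaningful; the point is only the data flow of compression and restoration.

```python
import numpy as np

class RecirculationNetwork:
    """Two-layer recirculation network with forward weights W (n x p) and
    reverse weights W_rev (n x p) playing the role of W'. Hypothetical names."""

    def __init__(self, n, p, activation=np.tanh, seed=0):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(scale=0.1, size=(n, p))       # forward (direct) weights
        self.W_rev = rng.normal(scale=0.1, size=(n, p))   # reverse weights W'
        self.F = activation                               # activation function F

    def compress(self, X):
        """Forward transformation Y = F(W^T X); X has shape (n, L)."""
        return self.F(self.W.T @ X)

    def restore(self, Y):
        """Inverse transformation X_bar = F(W' Y); Y has shape (p, L)."""
        return self.F(self.W_rev @ Y)

net = RecirculationNetwork(n=9, p=4)
X = np.random.default_rng(1).uniform(-1.0, 1.0, size=(9, 5))   # five 9-component inputs
Y = net.compress(X)
X_bar = net.restore(Y)
print(Y.shape, X_bar.shape)   # (4, 5) (9, 5)
```

Passing `activation=lambda z: z` gives the linear variant of the network.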

Both linear and nonlinear activation functions F can be used. With a linear activation function,

$$Y = W^T X, \qquad \bar{X} = W' Y = W' W^T X.$$

Linear recirculation networks whose weights are determined by the principal component method are called PCA networks.
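A linear PCA network can be sketched by taking the top-p eigenvectors of the covariance matrix as W and, as an assumption, the reverse weights W' = W (for orthonormal columns, W is the natural inverse of the projection). The data below is hypothetical. A useful sanity check: the mean squared reconstruction error then equals the sum of the discarded eigenvalues (variances).

```python
import numpy as np

def pca_network(X, p):
    """Linear recirculation (PCA) network on X of shape (n, L).

    W holds the top-p eigenvectors of the covariance matrix; the reverse
    weights are assumed to be W' = W. Returns the reconstruction and the
    eigenvalues sorted in decreasing order.
    """
    L = X.shape[1]
    mu = X.mean(axis=1, keepdims=True)
    Xc = X - mu
    eigvals, eigvecs = np.linalg.eigh((Xc @ Xc.T) / L)
    order = np.argsort(eigvals)[::-1]
    W = eigvecs[:, order[:p]]
    W_rev = W                          # assumption: W' = W
    Y = W.T @ Xc                       # linear compression, shape (p, L)
    X_bar = W_rev @ Y + mu             # linear reconstruction, mean added back
    return X_bar, eigvals[order]

rng = np.random.default_rng(0)
X = rng.standard_normal((6, 6)) @ rng.standard_normal((6, 300))
for p in (2, 4, 6):
    X_bar, variances = pca_network(X, p)
    mse = np.mean(np.sum((X - X_bar) ** 2, axis=0))   # mean squared error per image
    print(p, round(mse, 4), round(variances[p:].sum(), 4))
```

The error shrinks as p grows and vanishes (up to rounding) when p = n, since nothing is discarded.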

Image processing

Recirculating neural networks can be used to compress and restore images. The image is divided into blocks; each block is called a window, and a recirculating neural network is assigned to the window. The number of neurons in the first layer of the network equals the dimension of the window (the number of pixels, sometimes with each color channel counted separately). Scanning the image with the window and feeding each block to the network compresses the image. The compressed image can be restored by propagating the information in the reverse direction.
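Splitting an image into windows and reassembling it could look like the following NumPy sketch. It assumes non-overlapping k × k windows, image sides that are multiples of k, and each color channel fed to a separate input, so a 3 × 3 RGB window gives 27 inputs; the function names are hypothetical.

```python
import numpy as np

def image_to_windows(img, k=3):
    """Split an H x W x C image into non-overlapping k x k windows.

    Each window (all color channels) is flattened into one column, so the
    result has shape (k*k*C, number_of_windows). H and W must be multiples of k.
    """
    H, W, C = img.shape
    blocks = img.reshape(H // k, k, W // k, k, C).transpose(0, 2, 1, 3, 4)
    return blocks.reshape(-1, k * k * C).T

def windows_to_image(cols, H, W, C, k=3):
    """Inverse of image_to_windows: put the window columns back in place."""
    blocks = cols.T.reshape(H // k, W // k, k, k, C).transpose(0, 2, 1, 3, 4)
    return blocks.reshape(H, W, C)

img = np.arange(6 * 9 * 3, dtype=float).reshape(6, 9, 3)   # toy 6 x 9 RGB image
cols = image_to_windows(img)
print(cols.shape)   # (27, 6)
restored = windows_to_image(cols, 6, 9, 3)
assert np.array_equal(restored, img)
```

Each column of `cols` is one network input; compressing and restoring the columns and then calling `windows_to_image` yields the reconstructed image.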

An example of the network in operation is shown below:

On the left is the original image; on the right, the image restored after compression. A 3 × 3 pixel window was used, the second layer had 21 neurons, and the maximum allowable error was 50. The compression ratio was 0.77, which matches 21/27 = 0.777… if each of the three color channels of the 3 × 3 window is fed to a separate input (27 inputs). Training the network took 129 iterations.
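The article does not spell out its training rule. As a stand-in, the sketch below trains a linear recirculation network by plain gradient descent on the summed squared reconstruction error and stops once the error drops to a maximum allowable value, mirroring the stopping criterion mentioned above. The function name, learning rate, initialization, error measure, and toy data are all assumptions, so the iteration count will not match the 129 reported for the image experiment.

```python
import numpy as np

def train_linear_recirculation(X, p, lr=0.05, max_error=1.0, max_iter=5000, seed=0):
    """Hypothetical trainer for a linear recirculation network.

    X has shape (n, L), one input vector per column. W (n x p) gives the
    forward transform Y = W^T X; W_rev (n x p) plays the role of W' in
    X_bar = W' Y. Uses plain gradient descent on
    E = 0.5 * sum((X_bar - X)**2), an assumed rule, and stops as soon as
    E <= max_error.
    """
    n, L = X.shape
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=0.1, size=(n, p))
    W_rev = rng.normal(scale=0.1, size=(n, p))
    for iteration in range(1, max_iter + 1):
        Y = W.T @ X                       # forward pass: compression
        err = W_rev @ Y - X               # reconstruction error, shape (n, L)
        E = 0.5 * np.sum(err ** 2)
        if E <= max_error:
            return W, W_rev, E, iteration
        grad_rev = err @ Y.T              # dE/dW_rev
        grad_W = X @ (W_rev.T @ err).T    # dE/dW
        W_rev -= lr * grad_rev / L        # gradients averaged over the L inputs
        W -= lr * grad_W / L
    return W, W_rev, E, max_iter

# Hypothetical rank-2 toy data with n = 4, so p = 2 can reconstruct it exactly.
rng = np.random.default_rng(1)
A = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]])
X = A @ rng.standard_normal((2, 100))
W, W_rev, E, iterations = train_linear_recirculation(X, p=2, lr=0.05, max_error=1e-3)
print(E, iterations)
```

For real image windows, the pixel values would typically be scaled (for example to [0, 1]) before training, and `max_error` chosen on the same scale.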

The source code can be found here (a faster version is also available here).


Source: https://habr.com/ru/post/130581/
