Arithmetic coding

Now there are many information compression algorithms. Most of them are widely known, but there are some very effective, but, nevertheless, little-known algorithms. This article talks about the arithmetic coding method, which is the best of the entropy, but nevertheless very few people know about it.

Before we talk about arithmetic coding, I must say a few words about the Huffman algorithm. This method is effective when the frequency of occurrence of characters is proportional to 1/2 n (where n is a positive integer). This statement becomes obvious if we recall that the Huffman codes for each character always consist of an integer number of bits. Consider a situation where the frequency of the appearance of a symbol is 0.2, then the optimal code for encoding this symbol should be –log 2 (0.2) = 2.3 bits. It is clear that the Huffman prefix code cannot have such a length, i.e. ultimately, this leads to a deterioration in data compression.

Arithmetic coding is intended to solve this problem. The basic idea is to assign codes not to individual characters, but to their sequences.

First, consider the idea underlying the algorithm, then consider a small practical example.

As with all entropy algorithms, we have information about the frequency of use of each character of the alphabet. This information is the source for the method in question. Now we introduce the concept of a working segment. A worker is a semi-interval [a; b) with points located on it. Moreover, the points are located so that the lengths of the segments formed by them are equal to the frequency of use of symbols. When initializing the algorithm, a = 0 b = 1.

One coding step consists in a simple operation: the character being encoded is taken, the corresponding section on the working segment is searched for it. The found area becomes a new working segment (i.e., it must also be split using points).

This operation is performed for a number of characters in the source stream. The result of encoding a string of characters is any number (as well as the length of its bit record) from the final working segment (most often, the left border of the segment is taken).

It sounds pretty hard. Let's try to figure it out with a small example. Encode the message "THIS_METOD_READER_HAFFMAN" using the described method.

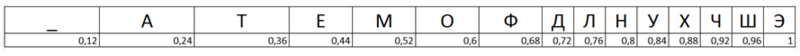

Making a table of the frequency of occurrence of characters, we can proceed to encoding. At the first stage we will make a working segment. It will look like this:

')

Take the first character from the stream, this is the symbol "E". The corresponding segment is the segment [0.96; 1). If we wanted to encode a single character, then the result of coding would be any number from this segment. But we will not dwell on one symbol, but add one more. The symbol "T". To do this, we compose a new work segment with a = 0.96 and b = 1. We split this segment with dots in the same way as we did it for the original segment and read the new symbol "T". The “T” symbol corresponds to the range [0.24; 0.36), but our working segment has already been reduced to the segment [0.96; 1). Those. boundaries we need to recalculate. This can be done using the following two formulas: High = Low old + (High old – Low old ) * Range High (x), Low = Low old + (High old – Low old ) * Range Low (x), where Low old - the lower limit of the interval, High old - the upper limit of the range Range High and Range Low - the upper and lower limits of the character being encoded.

Using these formulas, we encode the first word of the message entirely:

The result of the coding will be any number from the half-interval [0.97218816; 0.97223424).

Suppose that the left border of the half-interval was chosen as the result of coding, i.e. the number 0.97218816.

Consider the decoding process. The code lies in the half-interval [0.96; 1). Those. The first character of the message "E". To decode the second character (which was encoded in the half-interval [0.96; 1)) the half-interval needs to be normalized, i.e. lead to the form [0; 1). This is done using the following formula: code = (code-Range Low (x)) / (Range High (x) -Range Low (x)), where code is the current code value.

Using this formula, we get the new value code = (0.97218816-0.96) / (1-0.96) = 0.304704. Those. the second character of the sequence "T".

Again, apply the formula: code = (0.304704-0.24) / (0.36-0.24) = 0.5392. The third character of the sequence is “O”.

Continuing the decoding, according to the scheme described, we can completely restore the original text.

So We have reviewed the encoding and decoding algorithm using the most efficient of all the entropy algorithms. I hope you learned something new from this article and understood the basic ideas behind the arithmetic coding algorithm.

Before we talk about arithmetic coding, I must say a few words about the Huffman algorithm. This method is effective when the frequency of occurrence of characters is proportional to 1/2 n (where n is a positive integer). This statement becomes obvious if we recall that the Huffman codes for each character always consist of an integer number of bits. Consider a situation where the frequency of the appearance of a symbol is 0.2, then the optimal code for encoding this symbol should be –log 2 (0.2) = 2.3 bits. It is clear that the Huffman prefix code cannot have such a length, i.e. ultimately, this leads to a deterioration in data compression.

Arithmetic coding is intended to solve this problem. The basic idea is to assign codes not to individual characters, but to their sequences.

First, consider the idea underlying the algorithm, then consider a small practical example.

As with all entropy algorithms, we have information about the frequency of use of each character of the alphabet. This information is the source for the method in question. Now we introduce the concept of a working segment. A worker is a semi-interval [a; b) with points located on it. Moreover, the points are located so that the lengths of the segments formed by them are equal to the frequency of use of symbols. When initializing the algorithm, a = 0 b = 1.

One coding step consists in a simple operation: the character being encoded is taken, the corresponding section on the working segment is searched for it. The found area becomes a new working segment (i.e., it must also be split using points).

This operation is performed for a number of characters in the source stream. The result of encoding a string of characters is any number (as well as the length of its bit record) from the final working segment (most often, the left border of the segment is taken).

It sounds pretty hard. Let's try to figure it out with a small example. Encode the message "THIS_METOD_READER_HAFFMAN" using the described method.

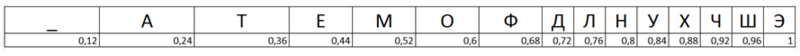

Making a table of the frequency of occurrence of characters, we can proceed to encoding. At the first stage we will make a working segment. It will look like this:

')

Take the first character from the stream, this is the symbol "E". The corresponding segment is the segment [0.96; 1). If we wanted to encode a single character, then the result of coding would be any number from this segment. But we will not dwell on one symbol, but add one more. The symbol "T". To do this, we compose a new work segment with a = 0.96 and b = 1. We split this segment with dots in the same way as we did it for the original segment and read the new symbol "T". The “T” symbol corresponds to the range [0.24; 0.36), but our working segment has already been reduced to the segment [0.96; 1). Those. boundaries we need to recalculate. This can be done using the following two formulas: High = Low old + (High old – Low old ) * Range High (x), Low = Low old + (High old – Low old ) * Range Low (x), where Low old - the lower limit of the interval, High old - the upper limit of the range Range High and Range Low - the upper and lower limits of the character being encoded.

Using these formulas, we encode the first word of the message entirely:

The result of the coding will be any number from the half-interval [0.97218816; 0.97223424).

Suppose that the left border of the half-interval was chosen as the result of coding, i.e. the number 0.97218816.

Consider the decoding process. The code lies in the half-interval [0.96; 1). Those. The first character of the message "E". To decode the second character (which was encoded in the half-interval [0.96; 1)) the half-interval needs to be normalized, i.e. lead to the form [0; 1). This is done using the following formula: code = (code-Range Low (x)) / (Range High (x) -Range Low (x)), where code is the current code value.

Using this formula, we get the new value code = (0.97218816-0.96) / (1-0.96) = 0.304704. Those. the second character of the sequence "T".

Again, apply the formula: code = (0.304704-0.24) / (0.36-0.24) = 0.5392. The third character of the sequence is “O”.

Continuing the decoding, according to the scheme described, we can completely restore the original text.

So We have reviewed the encoding and decoding algorithm using the most efficient of all the entropy algorithms. I hope you learned something new from this article and understood the basic ideas behind the arithmetic coding algorithm.

Source: https://habr.com/ru/post/130531/

All Articles