Clodo Cloud Storage

We are pleased to introduce our new service, Cloud Storage, to the Habrahabr community. Like all solutions of this class, it is designed to store and quickly serve static content, including website content.

Those who attended the excellent HighLoad++ conference had the chance, among other things, to hear our talk on how the storage works. Here is a brief summary of that talk for the Habrahabr audience.

The essence of any cloud is the ability to quickly obtain the required amount of a given type of resource on request. Some resource types are familiar from desktops: disk space, CPU power, RAM. Taking a little of each (for example, a virtual machine with 256 MB of memory and a disk of a couple of hundred gigabytes), the user hopes to serve those gigabytes as thousands of small files, and to serve them quickly to any number of clients, evidently by means of the special "cloud magic" that marketers with only a passing knowledge of the subject have been singing about. In fact, there are other, less familiar types of resources that should be kept in mind when designing a service, for example content distribution or load balancing.


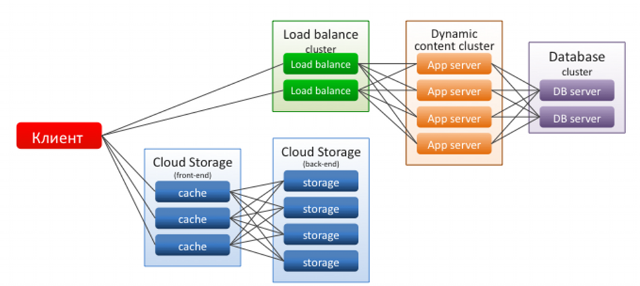

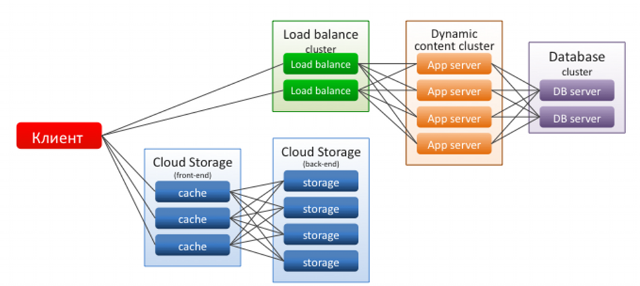

From these kinds of building blocks you can assemble an architecture that, on top of the traditional LAMP stack, actually delivers that "cloud magic" and scales easily as load grows. We are steadily building the pieces that such a scheme requires.

For example, database servers running the familiar MySQL are notoriously difficult to scale horizontally, so it makes sense to scale them vertically; for this we have Scale Server. The application servers that access them, ideally behind a load balancer, can be scaled horizontally by producing copies through the clone API. To make exact clones usable, it makes sense to serve static content from dedicated storage, which removes the need for synchronization that often cannot keep up with content updates.

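So we set ourselves the task of building a storage system that:

- stores data reliably;
- offers simple data management, including through an API, because it has to be usable from program code;
- can quickly serve hot content over HTTP;
- provides a familiar interface for less experienced users (FTP, FUSE). Any content manager of a provincial cultural center's website should be able to upload a file to the storage (yes, we have such clients too).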

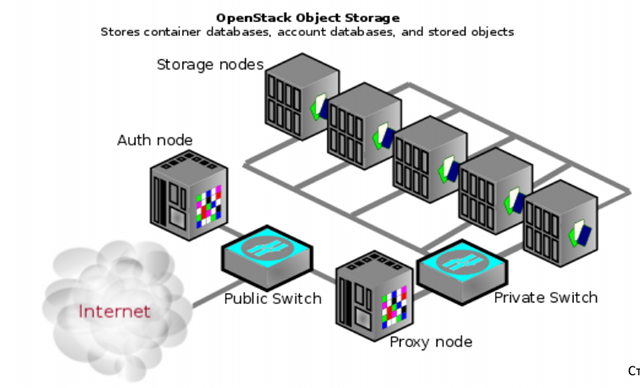

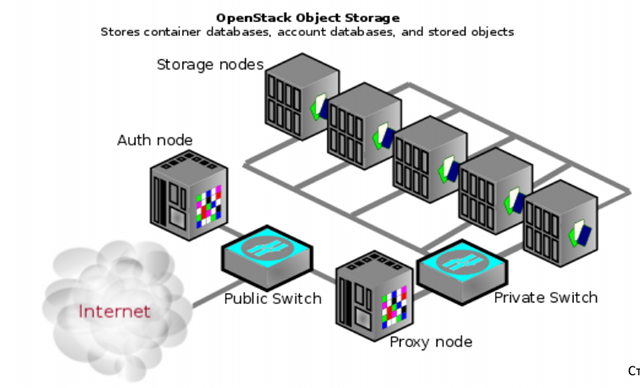

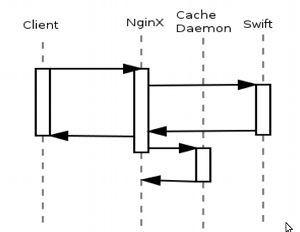

Deciding not to reinvent the wheel, we chose OpenStack Swift as the storage engine, the same one our Western colleagues at Rackspace use. The diagram explains how it is built quite well.

A client from the Internet comes to the authorization node and presents a token; depending on the token, the client is given access to one section of the storage or another. The storage is flat: the directory structure is defined through file meta-attributes (meta-attributes in Swift provide quite a rich toolkit in general; we can say more about this if there is interest).
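To illustrate what a flat namespace means in practice, here is a minimal Python sketch that derives one level of a "directory" listing from plain object names. It is only a model: the function name `list_directory` and the sample object names are made up for the example, and it does not reproduce Swift's own meta-attribute mechanism.

```python
def list_directory(object_names, prefix=""):
    """Emulate one level of a directory listing over a flat object namespace.

    Objects directly under `prefix` are returned as-is; deeper objects are
    collapsed into a single pseudo-directory entry ending in "/".
    """
    entries = set()
    for name in object_names:
        if not name.startswith(prefix):
            continue
        rest = name[len(prefix):]
        if not rest:
            continue  # the prefix itself is not an entry
        head, sep, _ = rest.partition("/")
        entries.add(head + sep)  # sep is "/" for nested objects, "" for files
    return sorted(entries)


# Hypothetical object names stored in one flat container.
objects = ["logo.gif", "css/site.css", "img/a.png", "img/icons/b.png"]

print(list_directory(objects))          # ['css/', 'img/', 'logo.gif']
print(list_directory(objects, "img/"))  # ['a.png', 'icons/']
```

The point of the sketch is that nothing on disk has to be a directory: the hierarchy is computed from names (or, in Swift's case, from attributes) at listing time.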

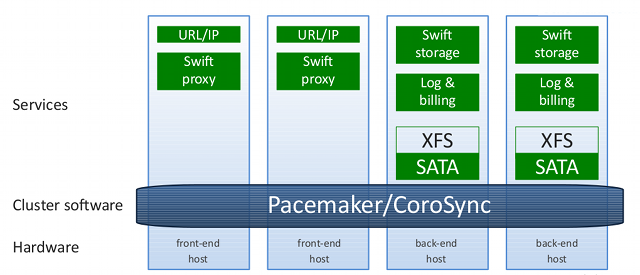

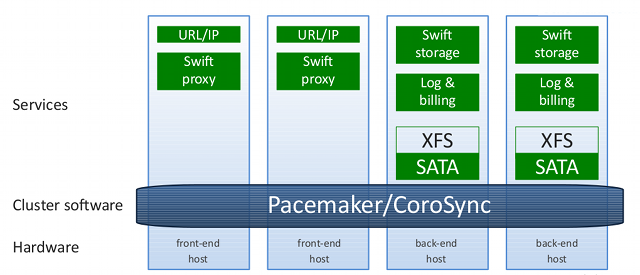

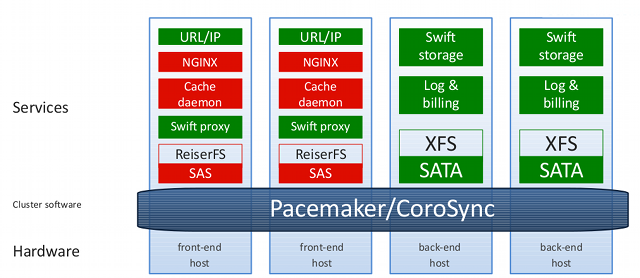

To begin with, we tried a solution based entirely on Swift, with Swift Proxy on the frontends managed by Pacemaker.

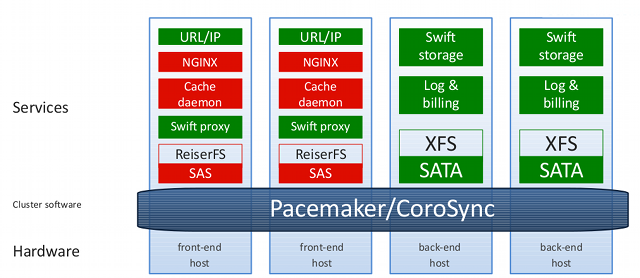

The solution worked, but it became CPU-bound at just 400 requests per second per frontend, which is not good enough for our conditions. We therefore decided to add nginx as a caching proxy and placed the cache on SAS disks. Since stock nginx could not spread a cache across several volumes, and we did not want to spend expensive SAS disks on RAID 6 overhead, we turned to catap, and soon we had nginx with a multi-zone cache. This configuration let our frontends easily withstand 12,000 requests per second, at which point the bottleneck is the frontend's gigabit link rather than the CPU.
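The idea of spreading one cache over several independent volumes, without RAID, can be sketched as a deterministic mapping from cache key to volume. The Python snippet below is an assumption-laden illustration, not the logic of the patched nginx: the function `pick_cache_volume`, the hashing scheme and the mount points are all hypothetical.

```python
import hashlib


def pick_cache_volume(cache_key, volumes):
    """Map a cache key to one of several volumes, always the same one."""
    if not volumes:
        raise ValueError("at least one cache volume is required")
    digest = hashlib.md5(cache_key.encode("utf-8")).digest()
    # Use the first four bytes of the digest as an unsigned integer.
    index = int.from_bytes(digest[:4], "big") % len(volumes)
    return volumes[index]


# Hypothetical mount points, one per SAS disk.
volumes = ["/cache/sas0", "/cache/sas1", "/cache/sas2"]

for key in ("/logo.gif", "/css/site.css", "/img/a.png"):
    print(key, "->", pick_cache_volume(key, volumes))
```

With a mapping like this, losing one disk loses only that disk's share of the cache, which is acceptable because the backend still holds every object.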

After that, we began refining the service according to customers' wishes. To begin with, no one liked public links of the form http://cs1.clodo.ru/v1/CLODO_0563290e28e0d6f79a83ab6a84b42b7d/public/logo.gif; everyone wanted something along the lines of http://st.clodo.ru/logo.gif. Besides the ability to attach domains, this meant removing the address of the default public container from the URL. We solved it with a bit of "programming in nginx configs".
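The mapping from a short public URL to the full backend path can be sketched in Python as follows. This only models the rewrite, which in the real service lives in nginx configuration; the function `backend_path` and the `DOMAINS` table are hypothetical, while the host name, account identifier and container name are taken from the example URLs above.

```python
def backend_path(host, path, domain_map, default_container="public"):
    """Translate a request for a custom domain into a backend object path.

    Returns None when the host is not attached to any account.
    """
    account = domain_map.get(host.lower())
    if account is None:
        return None
    return "/v1/{}/{}/{}".format(account, default_container, path.lstrip("/"))


# Attached domain -> storage account (values from the example URLs).
DOMAINS = {"st.clodo.ru": "CLODO_0563290e28e0d6f79a83ab6a84b42b7d"}

print(backend_path("st.clodo.ru", "/logo.gif", DOMAINS))
# /v1/CLODO_0563290e28e0d6f79a83ab6a84b42b7d/public/logo.gif
```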

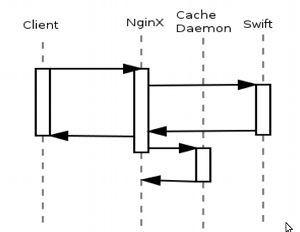

The next problem is much more serious. After a file is deleted from the backend, it remains in the cache and may stay available for some time. Our colleagues at Rackspace believe this problem does not need solving (and in general refer customers to their CDN partners for all matters of public distribution). We decided to try to solve it, and the daemon Kesh helped us do so.

This charming daemon, written in Perl and talking to nginx over FastCGI, invalidates the cache every time data is deleted. It also tries to be smart: for example, when a file is deleted from a directory, it removes from the cache not only the file itself but also the directory listing.
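The "file plus its directory listing" rule can be sketched in a few lines. The real daemon is written in Perl and its internals are not shown here, so the Python function below, `cache_keys_to_purge`, and the assumption that cache keys are plain URL paths are purely illustrative.

```python
import posixpath


def cache_keys_to_purge(object_path):
    """Return the cache keys to invalidate when an object is deleted:
    the object itself and the listing of its parent directory."""
    path = "/" + object_path.strip("/")
    parent = posixpath.dirname(path)
    listing = parent if parent.endswith("/") else parent + "/"
    return [path, listing]


print(cache_keys_to_purge("/img/icons/b.png"))  # ['/img/icons/b.png', '/img/icons/']
print(cache_keys_to_purge("/logo.gif"))         # ['/logo.gif', '/']
```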

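We have already outlined several directions for developing the storage:

- giving clients access to the logs of their storage;
- media content distribution and streaming;
- replication between data centers;
- authorization by pubcookies;
- moving all Swift Proxy functionality into an nginx module;
- full support for HTTP 1.1.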

Our current solution allows a single storage segment to hold up to 840 TB of disk space, 7 TB of cache and 512 GB of cache in RAM. All of this fits into 30 rack units in the data center. It is deployed automatically with Chef on Debian Live and managed by Pacemaker and the Clodo panel (the latter handles operations that go beyond what OpenStack provides, for example attaching your own domain). In principle, you can build a similar solution for yourself with noticeably less hardware and deploy it in your own small private cloud.

Cloud Storage has been running in production for a month now, and so far our customers like that it is very easy to use and that its billing is as simple as it gets: 1 kopeck to store 1 GB for 1 hour, and 1 ruble per 1 GB of outgoing traffic. There are no unpredictable CPU and RAM loads and no price hikes as load on the site grows: estimating the cost of cloud storage is an order of magnitude easier than for regular cloud hosting.
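Because the tariff has only two components, a cost estimate fits in a few lines. The sketch below uses the rates quoted above; the function name `estimate_cost` and the sample volumes are made up for illustration.

```python
from decimal import Decimal

STORAGE_RUB_PER_GB_HOUR = Decimal("0.01")  # 1 kopeck per GB per hour
TRAFFIC_RUB_PER_GB = Decimal("1")          # 1 ruble per GB of outgoing traffic


def estimate_cost(stored_gb, hours, outgoing_gb):
    """Estimate the bill in rubles for storage time plus outgoing traffic.

    Pass integers, strings or Decimals to keep the arithmetic exact.
    """
    storage = Decimal(stored_gb) * Decimal(hours) * STORAGE_RUB_PER_GB_HOUR
    traffic = Decimal(outgoing_gb) * TRAFFIC_RUB_PER_GB
    return storage + traffic


# Hypothetical site: 10 GB stored for 30 days, 50 GB served.
print(estimate_cost(10, 24 * 30, 50))  # 122.00
```

Here 10 GB over 720 hours costs 72 rubles, and 50 GB of traffic adds another 50.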

P.S. The pictures in this post are hosted on our Cloud Storage.

Source: https://habr.com/ru/post/130410/
