Mathematical model of autofocus in the human eye

Scientists still do not know exactly how autofocus works in the eyes of humans and other animals. What is known is that it works extremely quickly and accurately. Given a blurred picture, the brain almost instantly estimates the distance to the object and changes the focal length, that is, the curvature of the lens, to get a sharp image on the retina.

So how exactly does the focusing mechanism of the human visual system work? Biologists are now close to an answer. Specialists from the Center for Perceptual Systems at the University of Texas at Austin (USA) developed a self-learning statistical algorithm that learned to quickly and accurately estimate the degree of defocus of any fragment of a blurred image.

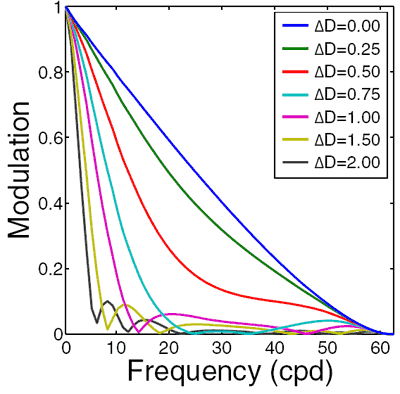

The algorithm is a computer model of the human visual system that was “trained” on 400 fragments of digital photographs of natural scenes, each 128x128 pixels. First, the researchers collected data on what the frequency-contrast characteristic, that is, the MTF (modulation transfer function) curve, looks like for images with different degrees of defocus (in diopters, ΔD). It has long been known that image blur directly affects this characteristic.
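To make the link between blur and the MTF concrete, here is a minimal sketch. It is not the authors' optical model: it assumes a simple disk-shaped (pillbox) blur, and the scale `PX_PER_DIOPTER` and both helper functions are hypothetical names chosen for illustration.

```python
import numpy as np

def pillbox_psf(size, radius_px):
    """Disk-shaped point spread function, normalized to sum to 1."""
    y, x = np.mgrid[:size, :size] - size // 2
    psf = (x**2 + y**2 <= radius_px**2).astype(float)
    return psf / psf.sum()

def radial_mtf(psf):
    """Radially averaged MTF: |FFT(psf)| binned by integer spatial frequency."""
    size = psf.shape[0]
    otf = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(psf)))
    mtf = np.abs(otf)
    y, x = np.mgrid[:size, :size] - size // 2
    r = np.hypot(x, y).round().astype(int)
    sums = np.bincount(r.ravel(), weights=mtf.ravel())
    counts = np.bincount(r.ravel())
    return (sums / counts)[: size // 2]

# Hypothetical scale: blur-disk radius in pixels per diopter of defocus.
PX_PER_DIOPTER = 4.0

for delta_d in (0.0, 0.5, 1.5):
    mtf = radial_mtf(pillbox_psf(128, PX_PER_DIOPTER * delta_d))
    # Contrast transfer at three spatial frequencies (cycles per 128-pixel patch).
    print(f"dD = {delta_d:.1f}: MTF at 5, 10, 20 cycles = "
          f"{mtf[5]:.3f}, {mtf[10]:.3f}, {mtf[20]:.3f}")
```

In this toy model a perfectly focused patch passes all spatial frequencies unchanged, while stronger defocus suppresses contrast at progressively lower frequencies. That dependence is what makes the MTF a usable cue for the amount of blur.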


After that, an algorithm based on accuracy maximization analysis (AMA; AMA code for Matlab is available) was run over the photos with a defocus step of 0.25 diopters. The result was a set of optimal filters, that is, filters whose responses to a blurred image carry the most information about its degree of defocus.

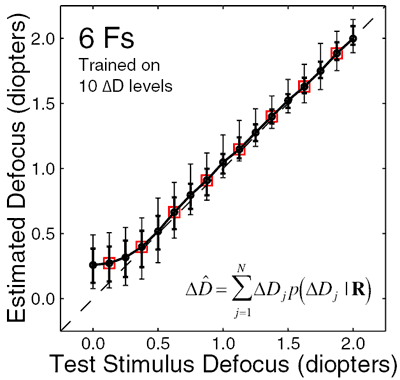
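The general idea of learning filters and then reading the defocus off their responses can be sketched as follows. This is only an illustration under loud assumptions: PCA stands in for AMA, synthetic 1/f-noise patches stand in for natural photographs, blur is modeled with a Gaussian MTF, and every function name and constant here is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
SIZE = 32
LEVELS = np.arange(0.0, 2.01, 0.25)          # defocus levels in diopters, 0.25 D step

fy = np.fft.fftfreq(SIZE)[:, None]
fx = np.fft.fftfreq(SIZE)[None, :]
FREQ = np.hypot(fx, fy)                      # spatial frequency, cycles/pixel
RBIN = np.round(FREQ * SIZE).astype(int)     # integer radial frequency bin

def natural_like_patch():
    """Random patch with a 1/f amplitude spectrum (crude stand-in for a natural scene)."""
    spectrum = np.fft.fft2(rng.standard_normal((SIZE, SIZE)))
    return np.real(np.fft.ifft2(spectrum / np.maximum(FREQ, 1.0 / SIZE)))

def defocus(patch, delta_d, sigma_per_diopter=1.5):
    """Blur with a Gaussian MTF whose width grows with |delta_d| (assumed blur model)."""
    sigma = sigma_per_diopter * abs(delta_d)
    mtf = np.exp(-2.0 * (np.pi * sigma * FREQ) ** 2)
    return np.real(np.fft.ifft2(np.fft.fft2(patch) * mtf))

def features(patch):
    """Log of the radially averaged amplitude spectrum, DC bin excluded."""
    amp = np.abs(np.fft.fft2(patch - patch.mean()))
    counts = np.maximum(np.bincount(RBIN.ravel()), 1)
    radial = np.bincount(RBIN.ravel(), weights=amp.ravel()) / counts
    return np.log(radial[1 : SIZE // 2] + 1e-9)

def make_set(n_per_level):
    """Blurred, slightly noisy patches with the index of their defocus level."""
    X, y = [], []
    for k, d in enumerate(LEVELS):
        for _ in range(n_per_level):
            noise = 0.01 * rng.standard_normal((SIZE, SIZE))   # sensor noise
            X.append(features(defocus(natural_like_patch(), d) + noise))
            y.append(k)
    return np.array(X), np.array(y)

def fit(X, y, n_filters=4):
    """Learn PCA filters, then a Gaussian model of filter responses per defocus level."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    W = vt[:n_filters].T                     # columns are the filters
    R = (X - mean) @ W
    stats = []
    for k in range(len(LEVELS)):
        Rk = R[y == k]
        cov = np.cov(Rk, rowvar=False) + 1e-6 * np.eye(n_filters)
        stats.append((Rk.mean(axis=0), np.linalg.inv(cov), np.linalg.slogdet(cov)[1]))
    return mean, W, stats

def estimate(X, model):
    """Pick, for each patch, the defocus level with the highest Gaussian log-likelihood."""
    mean, W, stats = model
    R = (X - mean) @ W
    loglik = np.stack([
        -0.5 * (np.einsum("ij,jk,ik->i", R - m, P, R - m) + logdet)
        for m, P, logdet in stats
    ], axis=1)
    return LEVELS[np.argmax(loglik, axis=1)]

model = fit(*make_set(60))                   # training patches
X_test, y_test = make_set(20)                # separate held-out patches
errors = estimate(X_test, model) - LEVELS[y_test]
mean_abs_error = np.abs(errors).mean()
print(f"mean absolute error: {mean_abs_error:.3f} D")
```

The design choice worth noting is that the estimate comes from a single patch: no second image and no lens movement is needed, only the pattern of filter responses.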

To test the filters, the scientists ran them on a new data set (another 400 images). If every filter is applied to the same image fragment, their responses can be compared to see which defocus level fits best, that is, how blurred the image is. The graph shows the algorithm's defocus estimates in diopters with 68% (thick vertical bars) and 90% (thin bars) confidence intervals.
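One common way to obtain such 68% and 90% bars is to take the central interval of the estimates collected for each true defocus level. The sketch below assumes that reading and uses simulated estimates; `central_interval` is a hypothetical helper, not a function from the paper.

```python
import numpy as np

def central_interval(estimates, coverage):
    """Return (low, high) bounds containing the central `coverage` fraction of estimates."""
    tail = (1.0 - coverage) / 2.0 * 100.0
    low, high = np.percentile(estimates, [tail, 100.0 - tail])
    return low, high

rng = np.random.default_rng(1)
true_defocus = 1.0                                           # diopters
# Simulated output of a hypothetical estimator for 400 test patches.
estimates = true_defocus + 0.1 * rng.standard_normal(400)

ci68 = central_interval(estimates, 0.68)
ci90 = central_interval(estimates, 0.90)
print(f"68% interval: {ci68[0]:.2f} to {ci68[1]:.2f} D")
print(f"90% interval: {ci90[0]:.2f} to {ci90[1]:.2f} D")
```

The narrower these intervals are around the true value, the more precisely a single blurred patch pins down its own defocus.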

To improve the accuracy of the system, the scientists decided to add the monochromatic aberrations of the human eye to the model. Aberrations are errors that optical systems fundamentally cannot avoid. No person's eye works perfectly: each has a certain amount of defocus and astigmatism. These errors (the aberration map) can be measured with special ophthalmological equipment.

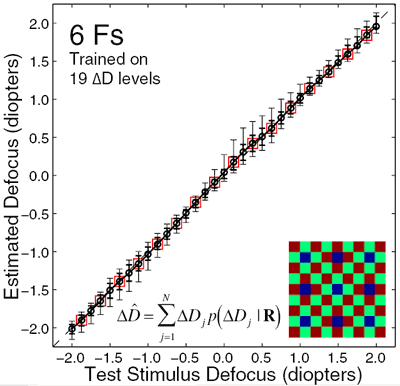

The scientists then modified the filter algorithm to take into account the aberration map of a human eye and the corresponding frequency-contrast characteristics. Surprisingly, the accuracy of defocus estimation increased significantly. It turned out that astigmatism plays an important role in how the human eye judges image sharpness. Astigmatism is one of the aberrations of the eye: the image of an off-axis point formed by a narrow beam of rays becomes two mutually perpendicular line segments located at different distances from the plane of aberration-free focus (the Gaussian plane).

This makes it clear why many patients temporarily lose the ability to see sharp images after their astigmatism is corrected.

After chromatic aberration and diffraction in the human eye were also taken into account, the new filters were able to estimate image blur with much higher accuracy.

The published work may form the basis of fundamentally new autofocus systems, with applications in sharpening filters for image processing programs as well as in digital cameras and video cameras. There they could replace multipoint AF, which is much less efficient because it repeatedly measures the contrast of several image areas.

Optimal defocus estimation in individual natural images. Johannes Burge, Wilson S. Geisler. Proceedings of the National Academy of Sciences, October 4, 2011, vol. 108, no. 40, doi:10.1073/pnas.1108491108.

Source: https://habr.com/ru/post/130181/
