An example of a practical project using NetApp FAS2040A

I have written a lot about theory in this blog, and I promised to turn to practical matters and concrete implementations, where we can see how everything I have discussed “plays out” in practice. So, “closer to the body,” as Maupassant said ;). Today I will describe a practical project I recently completed, in which I took part as architect and consultant. I think the result is quite interesting as a model for building a virtual server infrastructure: it has plenty of room for further growth, and someone may want to use it as the basis for their own solution in a similar area.

The project background information was as follows.

The organization is considering building a large internal “private cloud”, which is planned to provide internal IT services across the company's extensive organizational structure, application leasing, SaaS, desktop virtualization (based on VMware View and/or Citrix XenDesktop) and so on. The cloud is planned to be built on VMware. Especially for inattentive readers, I will emphasize that this is about an internal private cloud, with its own specific tasks and requirements, NOT about web hosting services.


But before a decision to implement the full-size project is made “at the top”, management, investors and the other “people who give money” would like to see how it all “plays out” on a small scale. In addition, the desire to have a “testing ground” for experiments, staff training, demonstrations, tests, development and so on looks quite reasonable. So the task was to create a kind of “scale model” on a relatively small budget, while keeping most of the planned technology choices, so that the resulting system, once it had proven itself “in hardware”, could be scaled “up” to the required size on new equipment.

In the future, this system will become a component of the “big” solution; for example, it could host a backup DR data center, or serve as a disk-based backup and copy storage system.

Therefore, special attention was paid to the possibility of seamlessly upgrading this configuration and integrating it into the “big” system.

Since I am a “storage guy” myself, it is no surprise that I started “dancing from the storage” (a traditional “server guy” would surely start “dancing from the server”). But I am convinced that a quality system cannot be built on unsuitable storage, which is the foundation of the entire solution, so special attention was paid to choosing the storage system.

The storage system chosen for the project was a NetApp FAS2040: a small-class system, but quite modern, supporting all the capabilities available in NetApp storage systems, and powerful enough not to limit the resulting solution.

Brief specifications of the FAS2040A storage system:

A multiprotocol, “unified storage” system. Supported data access protocols: FC, iSCSI, CIFS (SMB, SMB 2.0), NFS v3, v4.

Two controllers in a high availability cluster.

Each controller has 4 Gigabit Ethernet ports, 2 FC 4Gb/s ports and 4GB of cache memory.

The maximum number of supported disks is 136 (up to 136TB of total raw capacity).

Although the NetApp FAS2040 chassis can hold 12 SAS or SATA disks directly in the controller enclosure, which saves the cost of a disk shelf in a small configuration, we chose the option with no disks in the controller chassis and an external DS4243 disk shelf holding 24 SAS 300GB 15K disks.

This scheme was chosen for three reasons. First, it makes it easy to upgrade the storage controller if necessary: you simply buy a new controller and physically recable this disk shelf to it. The upgrade takes about fifteen minutes (I have done it myself), unlike similar procedures on other systems, which require migrating data, recreating the structure and restoring data from backups. Let me remind you that this project is a “pilot”, and its further development into a “full-format” system is planned. This scheme lets us change the configuration with the necessary flexibility.

Second, 12 disks are clearly too few for this configuration (in terms of spindle count, not capacity), while 24 is, according to our calculations, quite enough. Third, if necessary (again, the project is experimental), capacity or spindles can be added relatively cheaply, “at the price of the disks”, by filling the 12 empty bays in the controller chassis: with minimal side costs the system grows to 36 disks, that is, by 50%.
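To make the spindle argument concrete, here is a rough Python sketch. It assumes about 175 random IOPS per 15K SAS drive, a common rule-of-thumb figure rather than a number measured in this project, and it ignores RAID-DP parity and controller cache. It only shows how raw performance scales with spindle count and checks that going from 24 to 36 disks is a 50% increase.

```python
# Rough spindle arithmetic for the disk layout described above.
# ASSUMPTION: PER_DISK_IOPS is a rule-of-thumb figure for a 15K RPM SAS
# drive, not a value measured in this project.
PER_DISK_IOPS = 175


def raw_iops(disks: int, per_disk: int = PER_DISK_IOPS) -> int:
    """Aggregate random IOPS of the spindles, ignoring RAID overhead and cache."""
    return disks * per_disk


def growth_percent(before: int, after: int) -> float:
    """Percentage increase when going from `before` to `after` disks."""
    return (after - before) / before * 100


INTERNAL_ONLY = 12                     # disks that fit in the controller chassis
SHELF = 24                             # DS4243 shelf, as configured
SHELF_PLUS_INTERNAL = SHELF + INTERNAL_ONLY  # filling the 12 empty bays

for n in (INTERNAL_ONLY, SHELF, SHELF_PLUS_INTERNAL):
    print(f"{n:2d} disks -> ~{raw_iops(n)} raw IOPS")
print(f"growth 24 -> 36 disks: {growth_percent(SHELF, SHELF_PLUS_INTERNAL):.0f}%")
```

The absolute IOPS numbers depend entirely on the assumed per-disk figure; the ratio between configurations does not.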

As I mentioned earlier, the cost of a NetApp storage system consists of two main parts: the cost of the hardware and the cost of the software, that is, licenses for various options, protocols and features. When buying a storage system, you either pick a set of options from the “menu” or buy a ready-made “pack” (such a “set lunch” is often cheaper than ordering “à la carte”). For this reason, the prices of two ready-made systems can differ greatly even with similar hardware (for example, the same number of disks). This, by the way, is one of the main reasons why I do not quote prices for NetApp storage systems “in the abstract” and cannot give specific figures.

In this case, the configuration included the so-called Virtualization Pack*, a set of licenses for options and access protocols matching the needs of a storage system in a virtualization environment with VMware vSphere, MS Hyper-V, Citrix Xen and others.

This “set lunch” includes the iSCSI, NFS and FC protocols, as well as many useful additional licenses and software products: SnapManager for Virtual Infrastructure, which makes consistent snapshots of virtual machines using the storage system, without the drawbacks of VMware snapshots; an unlimited license for SnapDrive for Windows and UNIX/Linux, a tool for managing snapshot creation and connecting RDMs inside virtual machines; and many other features, such as deduplication, thin provisioning, synchronous and asynchronous replication to another NetApp storage system, snapshot-based backup and more.

We will not use FC: this is a conscious decision to move away from FC in favor of a converged Ethernet network. But the protocol license comes with the pack, so if the need arises, servers can be connected to this solution over FC easily and at no additional cost (the storage system has 4 FC ports, so a pair of servers can be connected redundantly even without FC switches, by direct cabling, although this will most likely not be needed), as can, for example, a tape library for backups.
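The remark about direct FC cabling is simple port arithmetic. The Python sketch below assumes that “redundant” means each server gets one direct link to each of the two controllers, so every host consumes one FC port per controller; the controller names are hypothetical.

```python
# Sketch: how many hosts can be direct-attached over FC with redundancy.
# ASSUMPTION: each host needs one path to EACH controller (no FC switch).
from dataclasses import dataclass


@dataclass
class Controller:
    name: str      # hypothetical label
    fc_ports: int  # free FC target ports on this controller


def max_redundant_hosts(controllers: list[Controller]) -> int:
    """Each direct-attached host uses one FC port on every controller,
    so the controller with the fewest ports limits the host count."""
    return min(c.fc_ports for c in controllers)


ha_pair = [Controller("ctrl-A", 2), Controller("ctrl-B", 2)]  # 4 FC ports total
print("redundant direct-attach hosts:", max_redundant_hosts(ha_pair))
```

Attaching a tape library to some of these ports would reduce the count accordingly.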

For the server side of the solution, we chose a vendor not yet well known as a server manufacturer in Russia, Cisco, and its relatively new product line, the UCS (Unified Computing System) family. The UCS family consists of two lines: UCS B-class blade systems and UCS C-class conventional rack-mount servers for a standard 19" cabinet. In this project we chose the Cisco UCS C250 M2 server.

There were also several reasons for this choice. First, the “big” project plans to use UCS B-class blade servers, and the existing Cisco UCS C-class servers can then be conveniently managed by the same UCS B administration tools.

Second, Cisco's server products clearly target server virtualization and include hardware features for it. For example, the already mentioned Cisco UCS C250 M2 supports a very large amount of memory, and it is no secret that in server virtualization it is often the amount of memory that determines how many virtual machines a host can run.

Finally, third, at the time of the decision, prices for C-class servers dropped very pleasantly, which let us buy servers in a configuration far exceeding what other vendors offered while staying within a strict, limited budget ceiling.

As a result, we have a pair of relatively inexpensive servers, each with 96GB of RAM built from inexpensive 4GB DIMMs (other models would need 8GB modules to reach this capacity). Add to that a significant discount on this series (C-class). The rest of the configuration: 4 Gigabit Ethernet ports plus 2 out-of-band management Ethernet ports and two six-core Xeon X5650 (2.66GHz) processors in each server. The servers were ordered without local hard disks.
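The memory argument is slot arithmetic: the same capacity built from smaller modules needs more slots, which is what makes cheaper 4GB DIMMs usable on this model. A small Python sketch, assuming the 96GB figure is per server as the text reads:

```python
# Per-server and pilot-cluster resources, using the figures from the text.
# ASSUMPTION: 96GB of RAM is per server.
SERVERS = 2
RAM_GB_PER_SERVER = 96
SOCKETS_PER_SERVER = 2
CORES_PER_SOCKET = 6   # the Xeon X5650 is a six-core CPU


def dimms_needed(total_gb: int, dimm_gb: int) -> int:
    """Number of identical DIMMs needed to reach total_gb exactly."""
    if total_gb % dimm_gb:
        raise ValueError("capacity is not a multiple of the DIMM size")
    return total_gb // dimm_gb


print("4GB DIMMs per server:", dimms_needed(RAM_GB_PER_SERVER, 4))
print("8GB DIMMs per server:", dimms_needed(RAM_GB_PER_SERVER, 8))
print("cluster RAM, GB:", SERVERS * RAM_GB_PER_SERVER)
print("cluster cores:", SERVERS * SOCKETS_PER_SERVER * CORES_PER_SOCKET)
```

Twice as many 4GB modules are needed for the same capacity, so the saving only works on a server with enough DIMM slots.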

Choosing Cisco servers logically led to buying the network part from Cisco as well. Of course, there are other network equipment vendors with comparable capabilities, HP for one, but choosing Cisco for the servers and some other vendor for the network would be a kind of extreme nonconformism. Moreover, I repeat, the full-scale project, for which this is a trial run, a pilot and a means of training and demonstration, will use the relatively new converged networking capabilities of Cisco Nexus switches, so there was no point in building a multi-vendor “zoo” in the pilot. Therefore, we chose two gigabit Cisco Catalyst 3750 switches, which, among other things, support cross-stack EtherChannel, greatly simplifying the configuration of the resilient IP storage network we planned to implement.
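Why EtherChannel matters for IP storage can be illustrated with a simplified model of hash-based load balancing. The Python sketch below is an assumption-laden illustration: it picks a member link from the XOR of the last octets of the source and destination addresses, which is not the exact hash used by Catalyst 3750 switches or by vSphere's IP-hash teaming, and all addresses are hypothetical.

```python
# Simplified model of source/destination IP-hash load balancing over an
# EtherChannel. ASSUMPTION: illustration only, not the exact hash used by
# Catalyst 3750 switches or by the vSphere "IP hash" teaming policy.
import ipaddress


def member_link(src: str, dst: str, links: int) -> int:
    """Pick a member link from the XOR of the last octets of both addresses."""
    s = int(ipaddress.IPv4Address(src)) & 0xFF
    d = int(ipaddress.IPv4Address(dst)) & 0xFF
    return (s ^ d) % links


host = "10.0.10.11"                    # hypothetical ESXi storage address
targets = ["10.0.10.21", "10.0.10.22"]  # hypothetical storage addresses
for t in targets:
    print(host, "->", t, "uses link", member_link(host, t, links=2))
```

In any hash-based scheme of this kind a given address pair always maps to the same link, so traffic spreads across links only when there are several source/destination pairs, for example several storage IP addresses.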

These switches carry the IP SAN in tagged VLANs, using iSCSI as well as NFS to connect datastores in VMware vSphere. The virtual machine and external client network also runs through them.
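A tagged-VLAN layout like this is easy to sanity-check before configuring the switches. The Python sketch below uses hypothetical VLAN IDs (the article does not give them) and only checks that each ID is in the usable 802.1Q range 1-4094 and that storage and client traffic do not share a VLAN.

```python
# Hypothetical VLAN plan for the traffic types named in the text.
# ASSUMPTION: the VLAN IDs are illustrative, not the project's values.
VLAN_PLAN = {
    "iscsi": 10,
    "nfs": 20,
    "vm_and_clients": 30,
}


def validate_plan(plan: dict[str, int]) -> list[str]:
    """Return a list of problems: out-of-range or duplicated VLAN IDs."""
    problems = []
    for name, vid in plan.items():
        if not 1 <= vid <= 4094:
            problems.append(f"{name}: VLAN {vid} is outside 1-4094")
    seen: dict[int, str] = {}
    for name, vid in plan.items():
        if vid in seen:
            problems.append(f"{name} shares VLAN {vid} with {seen[vid]}")
        seen[vid] = name
    return problems


print(validate_plan(VLAN_PLAN) or "plan OK")
```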

In hardware terms, the result looked like this:

The solution occupies 12RU (“units”) in a standard 19" cabinet, has about 5TB of disk space (not counting possible gains from deduplication, compression and thin provisioning) and can host about 50 virtual machines.
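As a sanity check on “about 5TB” and “about 50 virtual machines”, here is a back-of-the-envelope Python sketch. It assumes 96GB of RAM per server, treats 5TB as an approximate figure, and ignores hypervisor overhead and memory overcommitment, so the per-VM values are averages, not a sizing rule.

```python
# Back-of-the-envelope density check using the figures from the text.
# ASSUMPTIONS: 96GB RAM per host; "about 5TB" taken as ~5000GB;
# hypervisor overhead and memory overcommitment are ignored.
RAW_GB = 24 * 300          # 24 x 300GB disks in the shelf
USABLE_GB_APPROX = 5000    # "about 5TB"
HOSTS, RAM_GB_PER_HOST, VMS = 2, 96, 50


def avg_ram_per_vm(hosts_alive: int) -> float:
    """Average RAM per VM if `hosts_alive` hosts carry all the VMs."""
    return hosts_alive * RAM_GB_PER_HOST / VMS


print(f"usable/raw: {USABLE_GB_APPROX / RAW_GB:.0%}")
print(f"GB per VM, both hosts up: {avg_ram_per_vm(HOSTS):.2f}")
print(f"GB per VM, one host down: {avg_ram_per_vm(HOSTS - 1):.2f}")
```

The “one host down” line shows roughly how much memory each VM could average if all 50 had to run on the surviving server.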

The total power consumption is 13.7 A, the heat dissipation is 9320 BTU/hr, and the installed weight is 115 kg.
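Cooling is often planned in watts rather than BTU/hr. A minimal Python conversion, using the standard factor of 1 W = 3.41214 BTU/hr:

```python
# Convert the heat dissipation figure above from BTU/hr to watts.
BTU_PER_HOUR_PER_WATT = 3.41214  # 1 W = 3.41214 BTU/hr


def btu_per_hour_to_watts(btu_per_hour: float) -> float:
    return btu_per_hour / BTU_PER_HOUR_PER_WATT


heat_w = btu_per_hour_to_watts(9320)
print(f"heat load: ~{heat_w:.0f} W")
```

So the cabinet needs roughly 2.7 kW of cooling capacity.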

The solution has no special requirements for placement in the data center.

For this solution we chose the VMware Essentials Plus edition (version 4.1 at the time of purchase), whose licensed capacity is quite sufficient for the existing hardware. Essentials Plus, I recall, includes licenses for three physical host servers (this solution has two, so there is room for expansion) and a license for vCenter. All this costs very little money by VMware standards and can later be upgraded to Standard or Enterprise.
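The license headroom mentioned above can be expressed as a tiny check. The Python sketch takes the three-host limit from the text; the two-sockets-per-host limit is my assumption about the Essentials kits of that era and should be verified against VMware's licensing terms.

```python
# License headroom for a vSphere Essentials Plus kit.
# From the text: three physical hosts.
# ASSUMPTION: up to two CPU sockets per host (verify with VMware terms).
KIT_MAX_HOSTS = 3
KIT_MAX_SOCKETS_PER_HOST = 2


def headroom(hosts_used: int, sockets_per_host: int) -> int:
    """Spare host slots left in the kit; raises if the hardware does not fit."""
    if sockets_per_host > KIT_MAX_SOCKETS_PER_HOST:
        raise ValueError("host has more sockets than the kit allows")
    if hosts_used > KIT_MAX_HOSTS:
        raise ValueError("more hosts than the kit allows")
    return KIT_MAX_HOSTS - hosts_used


print("spare hosts:", headroom(hosts_used=2, sockets_per_host=2))
```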

It should also be noted that the hardware “virtualization platform” built here is completely hypervisor-neutral and not tied to a specific vSphere version; most of its advantages and capabilities will also work with Xen and MS Hyper-V, which is also useful for a “pilot” project.

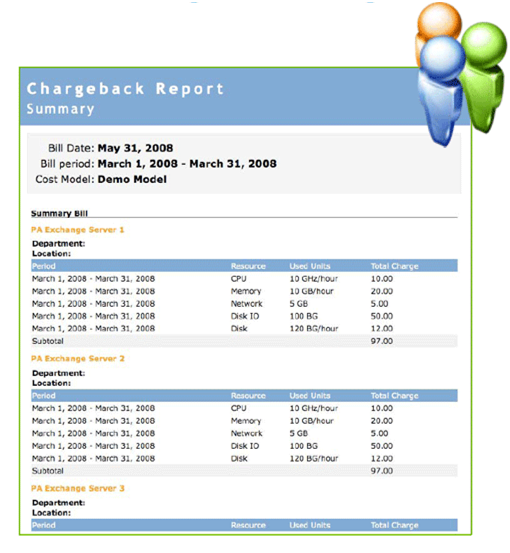

In addition to VMware Essentials Plus, we bought vCenter Chargeback, which bills for consumed computing resources, generates usage reports and so on.
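The idea behind chargeback, billing each VM for the resources it actually consumed, can be sketched in a few lines of Python. This is not how vCenter Chargeback is configured or what it computes internally; the rates, VM names and usage records are all hypothetical, and costs are in arbitrary currency units.

```python
# Minimal chargeback-style calculation.
# ASSUMPTION: rates, VM names and usage records are hypothetical; this only
# illustrates billing metered usage, not vCenter Chargeback itself.
from dataclasses import dataclass

RATES = {"vcpu_hour": 0.02, "ram_gb_hour": 0.01, "disk_gb_month": 0.10}


@dataclass
class Usage:
    vm: str
    vcpu_hours: float
    ram_gb_hours: float
    disk_gb_months: float


def cost(u: Usage) -> float:
    """Sum of metered usage multiplied by the per-unit rates."""
    return (u.vcpu_hours * RATES["vcpu_hour"]
            + u.ram_gb_hours * RATES["ram_gb_hour"]
            + u.disk_gb_months * RATES["disk_gb_month"])


def report(usages: list[Usage]) -> dict[str, float]:
    """Cost per VM, rounded to two decimals, as a simple usage report."""
    return {u.vm: round(cost(u), 2) for u in usages}


HOURS = 720  # a 30-day month
month = [Usage("web-01", 2 * HOURS, 4 * HOURS, 40),
         Usage("db-01", 4 * HOURS, 16 * HOURS, 200)]
print(report(month))
```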

I have already said that in this blog I do not discuss NetApp storage prices, but in this case I can say that the customer set a ceiling of 100 thousand USD for the entire project, and we managed to stay within it with the whole kitchen: the storage system, licenses, servers, switches, VMware vSphere (v4.1 at the time), storage service, Cisco SmartNet and a year of VMware support (and there was even a little left over to celebrate ;).

-

* The Virtualization Pack includes the following license sets:

Base Pack + Foundation Pack + Protection Pack + Server Pack + NFS

BASE PACK (one set that comes with any storage system and is included in its price)

Snapshot

FlexVol

Thin provisioning

RAID-DP

FlexShare

Deduplication

Operations Manager

NearStore

SyncMirror

System Manager

FilerView

FC protocol

iSCSI protocol

HTTP protocol

FOUNDATION PACK

SnapRestore

SnapVault Primary

Provisioning Manager

PROTECTION PACK

SnapMirror

SnapVault Secondary

Protection Manager

SERVER PACK

SnapManager for Virtual Infrastructure

SnapDrive (for Windows, UNIX)

NetApp DSM

On top of this combined set, the Virtualization Pack adds an NFS license.

In effect, it differs from the All Inclusive pack only in lacking CIFS, FlexClone, SnapLock (a tool for creating certified WORM, that is, write-once, unmodifiable data storage) and several host-side software products.
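The footnote's license arithmetic maps naturally onto set operations. The Python sketch below models each pack as a set of the names listed above; the host-side products missing from the Virtualization Pack are not named in the text, so the “All Inclusive” set here is deliberately partial.

```python
# The license packs listed above, modelled as sets.
BASE = {"Snapshot", "FlexVol", "Thin provisioning", "RAID-DP", "FlexShare",
        "Deduplication", "Operations Manager", "NearStore", "SyncMirror",
        "System Manager", "FilerView", "FC", "iSCSI", "HTTP"}
FOUNDATION = {"SnapRestore", "SnapVault Primary", "Provisioning Manager"}
PROTECTION = {"SnapMirror", "SnapVault Secondary", "Protection Manager"}
SERVER = {"SnapManager for Virtual Infrastructure", "SnapDrive", "NetApp DSM"}

VIRTUALIZATION_PACK = BASE | FOUNDATION | PROTECTION | SERVER | {"NFS"}

# Per the text, All Inclusive additionally has CIFS, FlexClone and SnapLock,
# plus some host-side products that are not named, so not modelled here.
ALL_INCLUSIVE_EXTRA = {"CIFS", "FlexClone", "SnapLock"}
ALL_INCLUSIVE_PARTIAL = VIRTUALIZATION_PACK | ALL_INCLUSIVE_EXTRA

print(len(VIRTUALIZATION_PACK), "licenses in the Virtualization Pack")
print("missing vs All Inclusive:", sorted(ALL_INCLUSIVE_PARTIAL - VIRTUALIZATION_PACK))
```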

Source: https://habr.com/ru/post/128301/
