HP data center building strategy: Critical Facilities Services, FlexDC and EcoPOD

On the last day of summer, HP held an interesting event devoted to its strategy for building new data centers (DCs). The central element of the strategy is modularity, and its driving force is a dedicated division within the company, Critical Facilities Services (CFS). Such a unit has been operating in the company's Russian office for about a year, working in four key areas at once. But let's take things in order.

Alexander Zaitsev, an employee of the Russian CFS and also HP's data center development manager in Russia, spoke about CFS activities, the specifics of working in Russia and HP's new-generation data centers, codenamed “butterfly”. Sergey Chlek, head of HP BladeSystem in Russia, continued the topic of modular data centers with a presentation on the HP POD 240a, also known as EcoPOD (pictured), an environmentally friendly and flexible modular data center presented in June.

The history of CFS began with a very ambitious task that HP faced a few years ago: consolidating about three hundred data centers. To solve it, HP brought in EYP Mission Critical Facilities in addition to its own specialists. Together they managed to "shrink" the number of data centers almost a hundredfold, to just three. The cooperation between the two companies proved so effective that HP had no choice but to acquire EYP and integrate its experts deeply into its own structure. This was the beginning of the CFS division.


CFS works in four areas:

Consulting (Critical Facilities Consulting). In this area, customers can obtain expert opinions on various aspects of data center operation, including how many data centers are needed and where to locate them given the business requirements, migration, redundancy level and cost optimization. This kind of expertise is useful both when a company already has a data center and when it plans to build a new one.

Design (Critical Facilities Design). This area concentrates innovative engineering solutions for high-tech facilities, which data centers undoubtedly are. CFS staff can comprehensively assess a potential data center site against a wide range of parameters, develop conceptual, preliminary and detailed designs, build reliability models and prepare a detailed cost estimate. CFS can also provide a balanced, independent assessment of projects prepared by other design organizations. This is very useful when the designer cannot give clear answers to the customer's questions, a situation often encountered in Russian practice.

Infrastructure Analysis (Critical Facilities Assurance). When analyzing infrastructure, CFS specialists assess the basic parameters of the data center, the state of its infrastructure, capacity utilization, energy efficiency and operational risks. A separate line of work is the popular service of analyzing equipment operating conditions, ranging from a primary thermal analysis to a detailed thermal map of the data center. An express analysis takes into account the ratio of cooling power to useful (IT) power, the distribution of airflow and the placement of racks, and identifies overheated zones and potential points of failure. An extended analysis goes further: three-dimensional thermal modeling is performed on data collected from sensors, and scenarios for optimizing cooling by temperature zone are developed and modeled. All of these findings are usually set out in a detailed report with specific recommendations for significantly improving equipment operating conditions.
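The two express-analysis metrics mentioned above (cooling-to-IT power ratio and overheated zones) can be illustrated with a minimal sketch. It assumes hypothetical per-rack readings (IT power and inlet temperature) and an assumed hot-spot threshold of 27 °C, the upper end of the ASHRAE recommended inlet range; the article does not describe CFS's actual methodology or thresholds.

```python
from dataclasses import dataclass


@dataclass
class RackReading:
    """Hypothetical sensor snapshot for one rack (illustrative fields)."""
    rack_id: str
    it_power_kw: float   # useful (IT) power drawn by the rack
    inlet_temp_c: float  # measured cold-aisle inlet temperature


# Assumption: hot-spot limit taken as the upper bound of the ASHRAE
# recommended inlet range; a real survey may use a different value.
INLET_LIMIT_C = 27.0


def cooling_to_it_ratio(cooling_kw: float, racks: list[RackReading]) -> float:
    """Power spent on cooling per kW of useful IT power."""
    it_total = sum(r.it_power_kw for r in racks)
    if it_total <= 0:
        raise ValueError("total IT power must be positive")
    return cooling_kw / it_total


def hot_spots(racks: list[RackReading], limit_c: float = INLET_LIMIT_C) -> list[str]:
    """IDs of racks whose inlet temperature exceeds the limit."""
    return [r.rack_id for r in racks if r.inlet_temp_c > limit_c]


if __name__ == "__main__":
    racks = [
        RackReading("A1", 6.0, 22.5),
        RackReading("A2", 8.0, 28.1),
        RackReading("B1", 6.0, 24.0),
    ]
    print(f"cooling/IT ratio: {cooling_to_it_ratio(8.0, racks):.2f}")  # 0.40
    print("hot spots:", hot_spots(racks))  # ['A2']
```

The ratio is only a coarse proxy; the extended analysis described in the text replaces it with three-dimensional thermal modeling built from sensor data.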

Implementation (Critical Facilities Implementation). This covers full data center deployment, which is, in effect, the construction of a turnkey data center.

Although it has been operating in Russia for only a year, the local CFS is already running about a dozen projects across the country, including for Data Space, a company building a network of data centers in Moscow and the Moscow region.

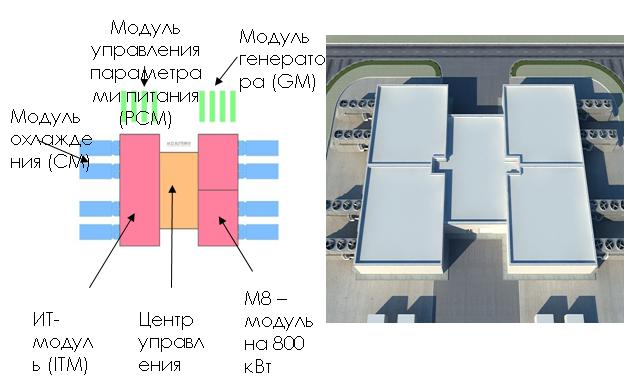

Alexander Zaitsev did not limit himself to describing how CFS works; in keeping with the theme of the event, he also spoke about HP's “flexible” data center, FlexDC, codenamed “butterfly”. In its standard configuration it consists of five modules.

The central module is mandatory. It is an administrative module housing the access control and monitoring systems together with workplaces for operators and administrative staff. It also contains loading/unloading areas and telecommunications entry rooms.

The remaining modules are added according to the data center's power and scalability requirements. A standard module contains racks with server and network equipment. Cooling and power supply equipment is placed around the modules. Moving the cooling equipment outside provides greater flexibility: various cooling systems can be used, liquid or air, with or without free cooling. The power generation modules are also placed outside, so FlexDC can easily be connected to, for example, a gas-fired generating station or diesel generators for uninterrupted power supply.
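The module logic described above (one mandatory central module, with further modules added as capacity requires, in a standard layout of five modules) can be sketched as a simple planning function. This is a hypothetical illustration: the per-module IT capacity is an assumed input, since the article gives no figure, and the sketch assumes that the five standard modules are the central module plus IT modules, with the externally placed cooling and power equipment not counted.

```python
import math
from dataclasses import dataclass

# From the article: the standard FlexDC layout has five modules,
# one of which is the mandatory central (administrative) module.
STANDARD_LAYOUT_MODULES = 5


@dataclass
class FlexDCPlan:
    central_modules: int
    it_modules: int

    @property
    def total_modules(self) -> int:
        return self.central_modules + self.it_modules

    @property
    def fits_standard_layout(self) -> bool:
        # Assumption: external cooling/power equipment is not counted here.
        return self.total_modules <= STANDARD_LAYOUT_MODULES


def plan_flexdc(target_it_kw: float, module_capacity_kw: float) -> FlexDCPlan:
    """Size a hypothetical FlexDC: one central module plus enough IT
    modules to cover the target load. module_capacity_kw is an assumed
    input, not an HP specification."""
    if target_it_kw <= 0 or module_capacity_kw <= 0:
        raise ValueError("loads must be positive")
    it_modules = math.ceil(target_it_kw / module_capacity_kw)
    return FlexDCPlan(central_modules=1, it_modules=it_modules)


if __name__ == "__main__":
    plan = plan_flexdc(target_it_kw=3000, module_capacity_kw=800)
    print(plan.it_modules, plan.total_modules, plan.fits_standard_layout)  # 4 5 True
```

Rounding up with `math.ceil` reflects the modular approach: capacity grows in whole modules, so a load that does not divide evenly still needs one more module.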

The walls of all modules are special sandwich panels that provide the required protection against electromagnetic radiation, airborne sound and other forms of permeability, as well as fire safety. The modular system cuts the cost of deploying a data center by about half while increasing flexibility and scalability, which is fairly obvious. A more serious argument in favor of the new approach, however, is high cooling efficiency, supported by the option of choosing among several materials for different climate zones. About 30 large American companies from the Fortune 100 have already chosen FlexDC as one of the solutions for their data centers.

Sergey Blak, head of HP BladeSystem in Russia, continued the topic of modular data centers by presenting the latest HP POD 240a, also known as EcoPOD, an environmentally friendly and flexible modular data center unveiled in June. We have already described its older siblings, the HP POD 40c/20c, in detail.

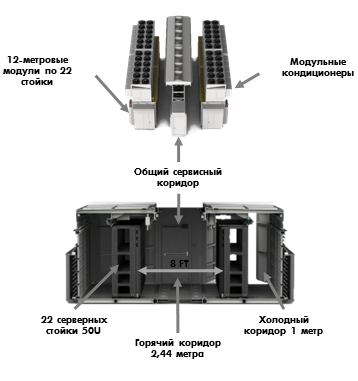

The key differences between the new POD (Performance-Optimized Datacenter) and the 40c and 20c models are the use of air cooling instead of water cooling and delivery as a fully ready-to-use solution. In their basic configuration, the older models are supplied without cooling and power systems, only with interfaces for connecting to them. The HP POD 240a is an assembly of two 40-foot IT equipment modules and air-cooling modules.

Thanks to air cooling, the HP POD 240a achieves outstanding energy efficiency and compute density: the POD holds 44 server racks of 50U each, with an average heat dissipation of 44 kW per rack!
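Taking the article's figures at face value, the capacity of a single HP POD 240a works out as shown below. This is plain arithmetic on the quoted numbers (44 racks, 50U per rack, 44 kW average per rack), not an HP sizing tool; the function name is illustrative.

```python
# Figures quoted in the article for one HP POD 240a.
RACKS = 44
UNITS_PER_RACK = 50      # rack height, in U
AVG_KW_PER_RACK = 44.0   # average heat dissipation per rack, kW


def pod_capacity(racks: int, units_per_rack: int, kw_per_rack: float) -> tuple[int, float]:
    """Return (total rack units, total IT heat load in kW) for one POD."""
    return racks * units_per_rack, racks * kw_per_rack


if __name__ == "__main__":
    total_u, total_kw = pod_capacity(RACKS, UNITS_PER_RACK, AVG_KW_PER_RACK)
    print(total_u, total_kw, total_kw / 1000)  # 2200 1936.0 1.936
```

That is 2,200U of rack space and roughly 1.9 MW of IT heat load in one unit, which puts the density argument in the next paragraph into perspective.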

With such capabilities, using traditional servers and storage systems of standard architecture simply makes no economic sense; a transition to high-density blade solutions is needed. By filling the racks in a module with blade server enclosures and disk shelves, you get a remarkably dense, high-performance solution on a small footprint, at a cost several times lower than in a conventional data center. In the United States, the price/performance advantage of the HP POD 240a reaches threefold, and in Russia it should be about the same, adjusted for local conditions.

Like the HP POD 20c/40c, the EcoPOD is assembled at a dedicated site in Houston, POD-Works, which consists of seven independent docks with access to all necessary utilities. During assembly, a module moves along a production line much like a car: IT infrastructure is installed in it, software is loaded, data is transferred from the customer's existing data centers, full configuration and preparation are carried out, and so on. As with a car, the customer can choose from a variety of options, including electrical equipment, cooling, fire suppression and other systems, not necessarily supplied by HP itself. If desired, the customer can even specify POD-Works as the delivery address for third-party equipment, and any standard components will be installed and integrated into the infrastructure. As a result, regardless of the complexity of the task, the customer receives a fully ready-to-run data center that can serve as a backup site, a temporary facility or a full replacement for a traditional data center.

Thanks to POD-Works, full deployment of an HP POD 240a can be completed in 12 weeks, which, by HP's estimates, is 8 times faster than building a conventional “brick” data center. This is another major advantage for Russia, given our construction regulations and lending rates.
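HP's “8 times faster” estimate implies a timeline for the conventional build. The sketch below simply back-calculates it from the two quoted figures; the 52-weeks-per-year conversion is the only added assumption, and the function name is illustrative.

```python
POD_DEPLOY_WEEKS = 12   # HP POD 240a deployment time quoted in the article
SPEEDUP = 8             # HP estimate: 8x faster than a "brick" data center
WEEKS_PER_YEAR = 52     # assumption used only for the conversion to years


def implied_conventional_weeks(pod_weeks: float, speedup: float) -> float:
    """Conventional build time implied by a quoted speedup factor."""
    return pod_weeks * speedup


if __name__ == "__main__":
    weeks = implied_conventional_weeks(POD_DEPLOY_WEEKS, SPEEDUP)
    saved = weeks - POD_DEPLOY_WEEKS
    print(weeks, round(weeks / WEEKS_PER_YEAR, 1), saved)  # 96 1.8 84
```

Roughly 96 weeks (close to two years) of conventional construction versus 12 weeks means about 84 fewer weeks during which construction capital is tied up, which is where lending rates come into play.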

Russian customers have been interested in HP PODs since the earlier models were released, but only one unit was actually installed. The release of EcoPOD should change this: although the product was presented quite recently, several Russian companies from the mining and retail industries have already shown serious interest in it. The reason is that the POD 240a places far fewer demands on the infrastructure at the installation site and can, in fact, be installed in an open field (just add electricity). In addition, it easily withstands temperatures from almost -30 °C to extreme heat, and that is without a hangar! With a hangar, EcoPOD could in the future be deployed even in the Arctic.

It is worth noting that the first two EcoPODs will be installed at HP's own sites, and customers will receive the computing power as a service.

Well, we have covered a lot, and now it's your turn: ask your questions!

Source: https://habr.com/ru/post/127982/
