Head tracking, or how to see a three-dimensional teapot on a two-dimensional monitor

Last semester I took a computer vision course, and at the end I had to do a final project on any related topic. I wondered whether a webcam could be used to track the user's eyes and show them 3D objects from the correct angle, that is, to turn the monitor into a kind of window into a virtual world. That is what I decided to build. Now I want to share how I did it and which pitfalls I ran into along the way.

Where are the eyes?

I had little time back then, so I didn't want to do everything from scratch. Without much hesitation I downloaded FaceAPI, which was free for educational use, and started tormenting it. After some struggle it turned out that it determines the position of the head and of the eyes quite well and, most importantly, can directly report the 3D position and orientation of each eye in the webcam's coordinate system.

What do the eyes see?

So we have the eye positions; now we need to work out what the eyes see. Incidentally, I assume the user is a cyclops with a single working eye located midway between the two real ones. The first attempt, doing it the easy way by placing the OpenGL virtual camera where the user's eye should be, was unsuccessful: the result was not realistic at all. The video shows how it looked:

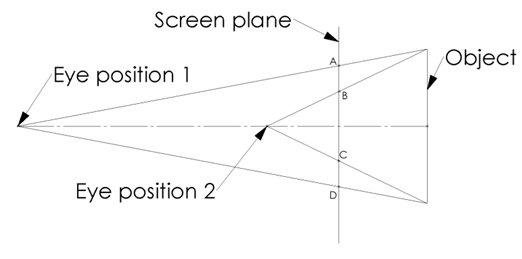
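
The "cyclops" assumption can be written down in a few lines. This is a minimal sketch, not the author's code: the function name and the sample coordinates are hypothetical, and it only assumes that the tracker reports each eye as an (x, y, z) point in the webcam's coordinate system.

```python
def cyclops_eye(left_eye, right_eye):
    """Return the midpoint of two 3D eye positions (webcam coordinates)."""
    return tuple((l + r) / 2.0 for l, r in zip(left_eye, right_eye))


# Hypothetical tracker output, in millimetres.
left = (-32.0, 10.0, 600.0)
right = (32.0, 14.0, 604.0)
print(cyclops_eye(left, right))  # (0.0, 12.0, 602.0)
```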

Purely subjectively, the bad part was that the teapot grew a lot as I moved closer. I started wondering what was wrong and even ran a simple experiment with whatever was at hand:

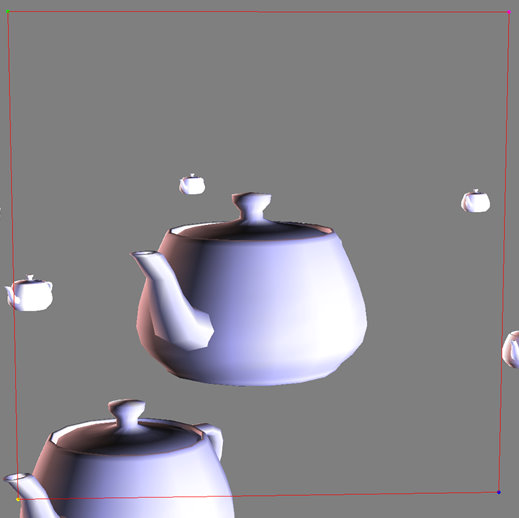

Note the size of the phone relative to the size of the hole. It turns out that as the viewer approaches, our teapot should not grow but, on the contrary, shrink (in the case when it is located behind the "window"). This is how it looks:

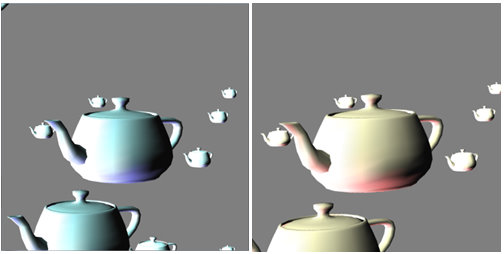

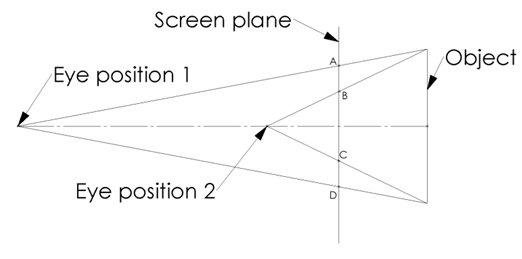
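
The shrinking effect follows from similar triangles. A small worked example, under the assumption that the object sits a fixed distance behind the window plane and the eye looks at it straight through the window; the function name and the numbers are illustrative only.

```python
def size_on_window(object_size, depth_behind, eye_distance):
    """Size of the object's image on the window plane (similar triangles).

    object_size:  real size of the object
    depth_behind: distance from the window plane to the object
    eye_distance: distance from the eye to the window plane
    """
    return object_size * eye_distance / (eye_distance + depth_behind)


# A 100 mm object 200 mm behind the window.
far = size_on_window(100.0, 200.0, 600.0)   # eye 600 mm away -> 75.0 mm
near = size_on_window(100.0, 200.0, 300.0)  # eye 300 mm away -> 60.0 mm
print(far, near)
```

The window itself keeps its size, so the closer the eye gets, the smaller the share of the window that the object occupies.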

Another problem appears immediately: when viewed from the side, the proportions are distorted. The cause is that OpenGL renders the picture as if the screen were perpendicular to the viewing direction, whereas the user sees the monitor plane at an angle.

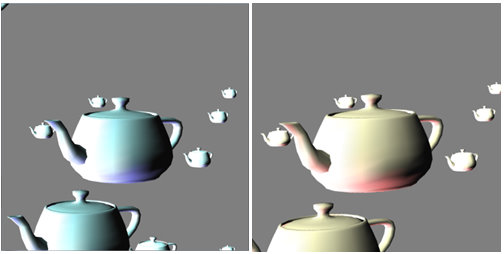

As a result, the image is distorted.

Note that in the picture the correct image is the one on the right, not the one on the left. If you look at it from the right of the monitor (roughly where the user's head was), the right one looks correct and the left one distorted.

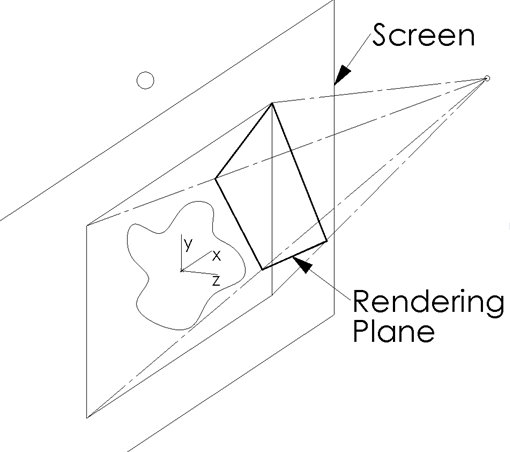

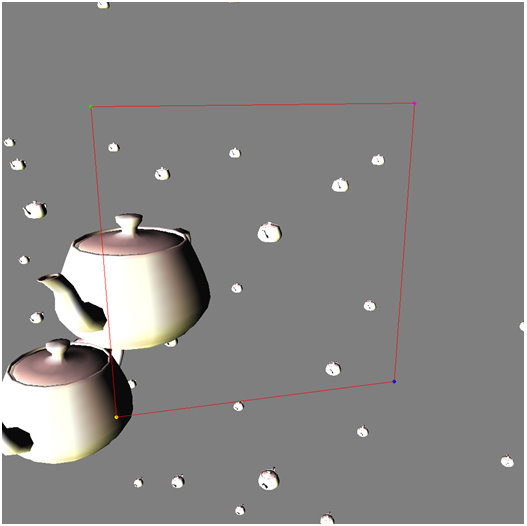

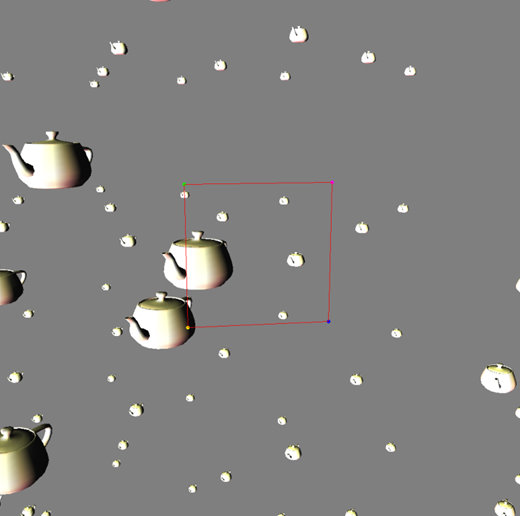

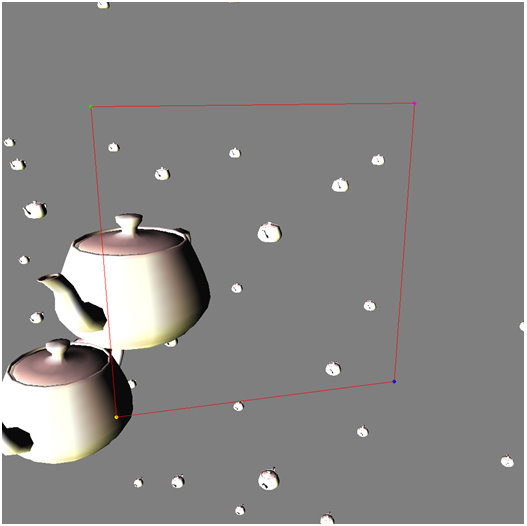

How to correct what is seen?

To fix both problems in one fell swoop, the user must see on the screen exactly what they would see in the real world when looking through a window. This can be done in different ways, but at the time I came up with the following: render everything as before, then find in the rendered image the region that corresponds to the monitor's area in the real world. To do this I had to arm myself with a ruler, measure the display and the position of each of its corners relative to the camera (our coordinate system is attached to it), and so obtain the display's position in the three-dimensional space of the scene; from that I computed the physical position of the application window on the monitor. The picture shows the frame that corresponds to the window on the monitor in three-dimensional space.

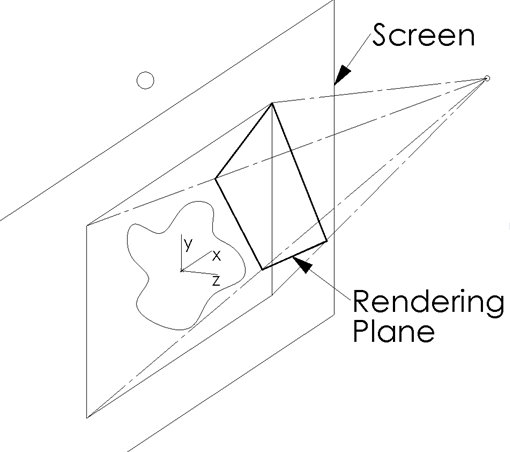
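
The ruler measurements can be turned into 3D window corners roughly as follows. This is a sketch under assumptions the article does not state: the display lies in the plane z = 0 of the camera's coordinate system, x points right, y points up, units are millimetres, and all names and numbers are hypothetical.

```python
def window_corners_3d(screen_mm, screen_px, top_left_mm, window_px):
    """Return the window's 3D corners (TL, TR, BR, BL) in camera coordinates.

    screen_mm:   (width, height) of the display, measured with a ruler
    screen_px:   (width, height) of the display in pixels
    top_left_mm: (x, y) of the display's top-left corner relative to the camera
    window_px:   (left, top, width, height) of the window in screen pixels
    """
    mm_per_px_x = screen_mm[0] / screen_px[0]
    mm_per_px_y = screen_mm[1] / screen_px[1]
    left, top, width, height = window_px
    x0 = top_left_mm[0] + left * mm_per_px_x
    y0 = top_left_mm[1] - top * mm_per_px_y      # pixel y grows downward
    x1 = x0 + width * mm_per_px_x
    y1 = y0 - height * mm_per_px_y
    return [(x0, y0, 0.0), (x1, y0, 0.0), (x1, y1, 0.0), (x0, y1, 0.0)]


# Hypothetical laptop: 320 x 200 mm panel at 1280 x 800 px, with the webcam
# centred 10 mm above the top edge of the panel.
corners = window_corners_3d((320.0, 200.0), (1280, 800),
                            (-160.0, -10.0), (100, 100, 800, 600))
print(corners[0])  # (-135.0, -35.0, 0.0)
```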

Now the contents of this region must be stretched over the whole window. To do this, we project the 3D points corresponding to the corners of the real window on the monitor onto the image plane where OpenGL renders the picture (in practice, we multiply the coordinates of these points by the projection matrix). Then we find the homography between the resulting quadrilateral and the rectangle of the window on the real display (a homography is a matrix that maps points of one image to points of another; it can be found with OpenCV or written by hand), and warp the rendered image onto the rectangle of the real display window (again with OpenCV's help).
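
For readers who want to see the "written by hand" variant, here is a minimal pure-Python sketch of a four-point homography. It is not the author's code, and the point coordinates are made up. In OpenCV the corresponding steps would most likely be `cv2.getPerspectiveTransform` followed by `cv2.warpPerspective`, though the article does not say which functions were actually used.

```python
def solve_linear(a, b):
    """Solve a*x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def find_homography(src, dst):
    """3x3 homography mapping four src points onto four dst points (h33 = 1)."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h, point):
    """Map a 2D point through the homography, including the divide by w."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical quadrilateral of the projected window corners in the rendered
# image, and the 640 x 480 window rectangle it should be stretched onto.
quad = [(120.0, 80.0), (520.0, 110.0), (500.0, 400.0), (100.0, 380.0)]
rect = [(0.0, 0.0), (640.0, 0.0), (640.0, 480.0), (0.0, 480.0)]
H = find_homography(quad, rect)
print(apply_homography(H, quad[2]))  # approximately (640.0, 480.0)
```

Warping the whole image then amounts to applying the inverse mapping to every pixel of the destination rectangle and sampling the rendered image there.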

The result is a correct picture of what a person would see if this window were a real window into the virtual world.

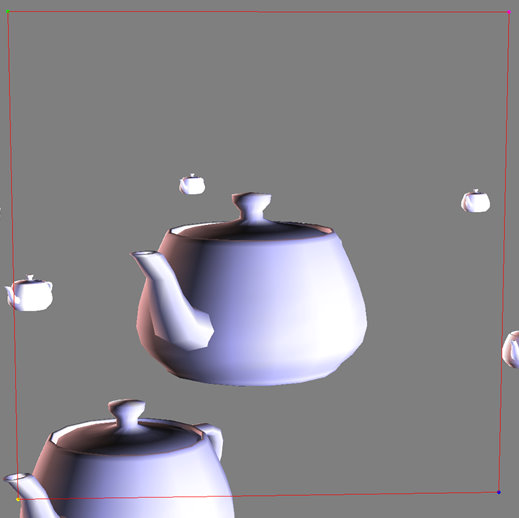

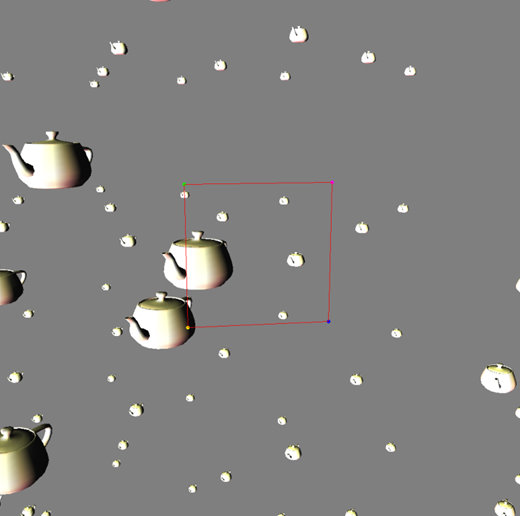

There is, admittedly, one more detail: if the user is far from the monitor, then with a constant FOV the useful part of the image (what is actually seen through the monitor) turns out to be quite small, and the final picture has a low resolution.

To combat this, I recalculate the FOV so that the useful part takes up slightly less than the largest possible area.

The result is a perfectly decent picture with a correct image (or so I thought at the time; more on that below).
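
One possible way to recalculate the FOV is sketched below. It rests on assumptions the article does not confirm: the virtual camera sits at the eye and looks at the centre of the window, the world "up" axis is y, and a `margin` slightly above 1 leaves the small border implied by "slightly less than the largest possible area". All names are hypothetical.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    n = math.sqrt(dot(a, a))
    return tuple(x / n for x in a)


def fit_fov_deg(eye, corners, aspect, margin=1.05, up=(0.0, 1.0, 0.0)):
    """Smallest vertical FOV (degrees) that keeps all window corners in view."""
    center = tuple(sum(c[i] for c in corners) / len(corners) for i in range(3))
    forward = normalize(sub(center, eye))      # camera looks at the window
    right = normalize(cross(forward, up))
    cam_up = cross(right, forward)
    t = 0.0                                    # required tan(fov_y / 2)
    for c in corners:
        d = sub(c, eye)
        depth = dot(d, forward)
        t = max(t,
                abs(dot(d, cam_up)) / depth,
                abs(dot(d, right)) / (depth * aspect))
    return math.degrees(2.0 * math.atan(t * margin))


# Hypothetical 200 x 150 mm window centred at the origin, in the plane z = 0.
window = [(-100.0, 75.0, 0.0), (100.0, 75.0, 0.0),
          (100.0, -75.0, 0.0), (-100.0, -75.0, 0.0)]
print(fit_fov_deg((0.0, 0.0, 600.0), window, aspect=4.0 / 3.0))
print(fit_fov_deg((0.0, 0.0, 1200.0), window, aspect=4.0 / 3.0))  # narrower
```

The farther the eye is from the screen, the narrower the returned FOV, so the window keeps filling most of the rendered frame.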

What came of it?

The results of all this can be seen in the video.

And now a few remarks on how it works from a subjective point of view, and why:

1) The biggest problem is the narrow viewing angle of the laptop's webcam. It is therefore quite easy to move out of its field of view, at which point the image freezes; the program is not designed to handle this. The good news is that everything runs fast enough and there are no performance problems. Wider-angle webcams exist, but you have to get hold of one.

2) The human brain is not so easy to fool. With two eyes I can clearly see that the monitor is flat, and without stereo this 3D does not work. Even with one eye closed, the brain still knows that it is a flat monitor and that there can be no teapot there. There is one nuance, though: if you close one eye and keep imagining a three-dimensional teapot, after a while you can fool the brain.

3) Projections are not as simple as they seem at first glance. To me personally it was rather strange that an object located behind the screen should shrink as you approach. Incidentally, there is a bug here: my teapot is, after all, a volumetric object and was positioned right in the plane of the screen, so the approach I used is not valid. The position of each vertex of the teapot has to be recomputed separately.

4) Next semester I will try to do the same, but with stereo 3D support and a separate image for each eye. Let's see whether the brain believes it then.
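
Remark 3 says that each vertex has to be recomputed separately. One way to read that, offered here as an assumption and not as the author's fix, is to intersect the ray from the eye through each vertex with the screen plane. The sketch assumes the screen lies in the plane z = 0, the eye has z > 0, and the scene is behind or on the screen (z <= 0).

```python
def project_to_screen(eye, vertex):
    """Intersect the ray eye -> vertex with the screen plane z = 0."""
    ex, ey, ez = eye
    vx, vy, vz = vertex
    t = ez / (ez - vz)          # fraction of the way from the eye to the vertex
    return (ex + t * (vx - ex), ey + t * (vy - ey))


behind = (50.0, 50.0, -200.0)   # vertex 200 mm behind the screen
on_screen = (50.0, 50.0, 0.0)   # vertex lying in the screen plane

print(project_to_screen((0.0, 0.0, 600.0), behind))       # (37.5, 37.5)
print(project_to_screen((300.0, 0.0, 600.0), behind))     # (112.5, 37.5)
print(project_to_screen((300.0, 0.0, 600.0), on_screen))  # (50.0, 50.0)
```

Vertices in the screen plane stay put when the head moves, while vertices behind it shift with the viewer, which is why a single image-wide warp cannot be exact for a volumetric teapot.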

UPDATE:

By popular demand, I have posted the source code and the binaries. I included all the DLLs with the binaries, so the archive came to 24 MB.

It also seems that I broke something during my experiments: the teapot is now very elongated (apparently a sign flipped somewhere in the projection, so the teapot is stretched in the wrong direction), but anyone interested will figure it out.

One more quirk: there will be two windows with the teapot, and you need to move one on top of the other (just drag it); it was more convenient for me to debug that way.

The missing teapot model can be found here and should be placed at: C:\_WORK\__3d_Models\

Source: https://habr.com/ru/post/126290/
