Determining the part of speech of words in Russian text (POS-tagging) in Python 3

Suppose that the sentence “ Eat more of these soft French buns and drink tea. ”In which we need to define a part of speech for each word:

Why do you need it? For example, to automatically identify tags for a blog post (for selecting nouns). Morphological markup is one of the first stages of computer text analysis.

Of course, everything is already invented before us. There is a mystem from Yandex, TreeTagger with support for the Russian language, there is nltk on the python , and also pymorphy from kmike . All these utilities work fine, though, pymorphy does not have python 3 support, and nltk only supports third version of python in beta (and there is always something falling off). But the real goal for creating a module is an academic one, to understand how the morphological analyzer works.

')

To begin with, let's see how an ordinary person defines to which part of speech a word belongs.

Of course, for a computer this task will be somewhat more difficult, since he does not have the knowledge base that man possesses. But we will try to simulate computer training using the data available to us.

To teach our script, I used the national corpus of the Russian language . Part of the body, SynTagRus, is a collection of texts with marked information for each word, such as, part of speech, number, case, verb tense, etc. This is how a part of the body looks in XML format:

Sentences are enclosed in <se> tags, inside of which words are located in the <w> tag. Information about each word is contained in the <ana> tag, the lex attribute corresponds to the lexeme, gr - grammatical categories. The first category is part of speech:

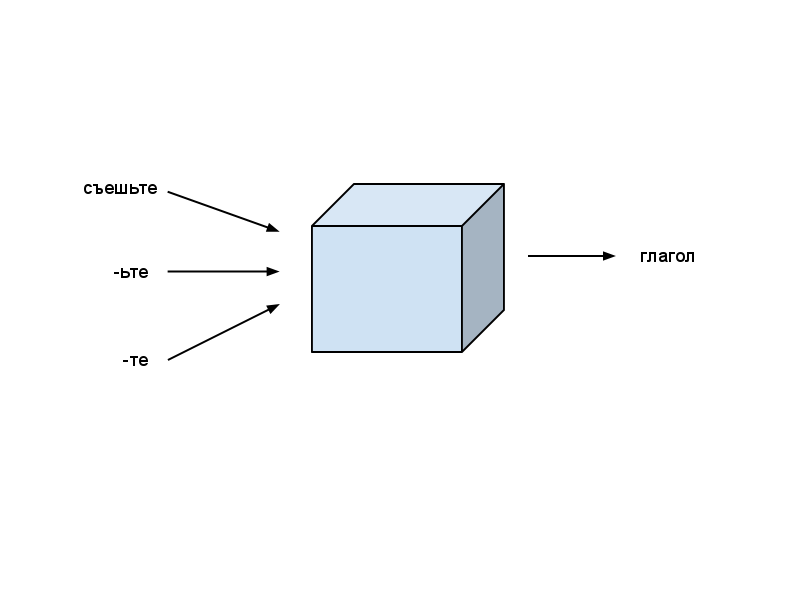

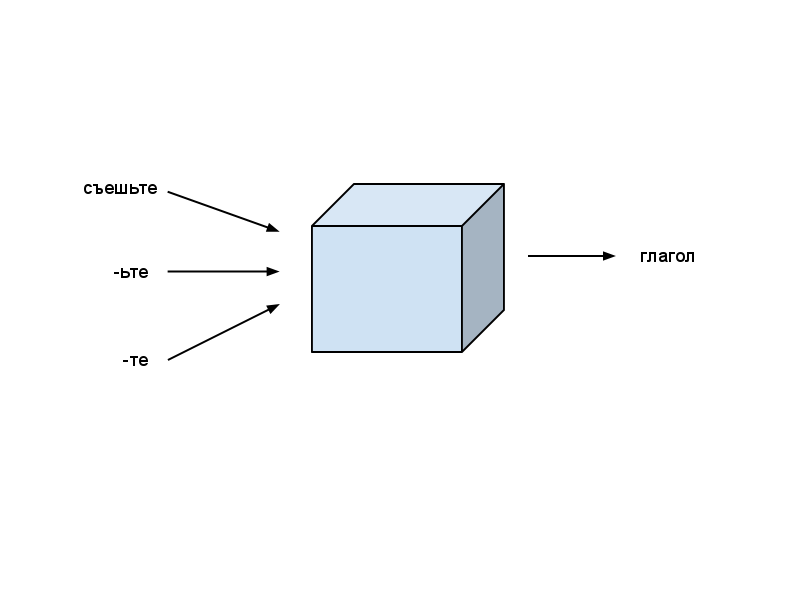

As a learning algorithm, I chose the support vector machine ( SVM ) method. If you are not familiar with SVM or machine learning algorithms in general, then imagine that SVM is a kind of black box that accepts data characteristics as input, and classification into predefined categories as output. As characteristics, we will set, for example, the ending of a word, and as categories, parts of speech.

In order for the black box to automatically recognize a part of speech, you first need to train it, i.e. give many characteristics of the examples of input, and their corresponding parts of speech to output. SVM will build a model that, with sufficient data, will in most cases correctly determine the part of speech.

Even for academic purposes, to implement SVM is laziness, so let's use the ready-made library LIBLINEAR in C ++, which has a wrapper for python. To train the model, we use the train (prob, param) function, which takes the task as the first argument: problem (y, x) , where y is an array of parts of speech for each example in array x . Each example is presented in turn with a vector of characteristics. To achieve such a statement of the problem, we first need to relate each part of speech and each characteristic with a certain numerical number. For example:

As a result, our algorithm is as follows:

Source codes can be found here: github.com/irokez/Pyrus/tree/master/src

First you need to get a marked body. The national corpus of the Russian language is spread in a very mysterious way. On the corpus site itself, you can only search for texts, but you cannot download the entire corpus:

Although for our purposes a small sample from the case will go, available here: www.ruscorpora.ru/download/shuffled_rnc.zip

Files in the resulting archive must be passed through the convert-rnc.py utility, which translates the text into UTF-8 and corrects the XML markup. After that, you may still need to fix the XML manually (xmllint to help you). The rnc.py file contains a simple Reader class for reading the normalized XML files nat. enclosures.

The Reader.read (self, filename) method reads a file and displays a list of sentences:

The SVM library can be downloaded here: http://www.csie.ntu.edu.tw/~cjlin/liblinear/ . To make the python wrapper work under the 3rd version, I wrote a small patch .

The pos.py file contains two main classes: Tagger and TaggerFeatures . Tagger is, in fact, a class that performs text markup, i.e. defines for each word its part of speech. The Tagger.train method (self, sentences, labels) takes as arguments a list of sentences (in the same format as rnc.Reader.read), as well as a list of parts of speech for each word, and then trains the SVM model using the library LIBLINEAR. The trained model is subsequently saved (via the Tagger.save method) in order not to train the model each time. The Tagger.label (self, sentence) method marks the sentence.

The TaggerFeatures class is designed to generate characteristics for training and markup. TaggerFeatures.from_body () returns a characteristic based on the shape of the word, i.e. returns the word ID in the body. TaggerFeatures.from_suffix () and TaggerFeatures.from_prefix () generate characteristics at the end and prefix of words.

To start training the model, the train.py script was written, which reads the case files using rnc.Reader, and then calls the Tagger.train method:

After the model is trained and saved, we finally got a script for marking text. An example of use is shown in test.py :

It works like this:

To assess the accuracy of the classification of the algorithm, the Tagger.train () learning method has the optional cross_validation parameter, which, if set to True, performs a cross-check , i.e. training data is divided into K parts, after which each part is used in turn to evaluate the work of the method, while the rest is used for training. I managed to achieve an average accuracy of 92% , which is quite good, considering that only the available part of the nat was used. enclosures. Typically, the accuracy of the markup of parts of speech ranges from 96-98% .

In general, it was interesting to work with nat. case. It can be seen that much work has been done on it, and it contains a large amount of information that I would like to use in full. I sent a request for a full version, but so far, unfortunately, no answer.

The resulting markup script can be easily expanded so that it also defines other morphological categories, for example, number, gender, case, etc. What I will do in the future. In the future, I would like, of course, to write the Russian language syntax parser to get the structure of the sentence, but this requires the full version of the corpus.

I will be glad to answer questions and suggestions.

Source code is available here: github.com/irokez/Pyrus

Demo: http://vps11096.ovh.net:8080

[('', '.'), ('', '.'), ('', '. .'), ('', '.'), ('', '.'), ('', '.'), ('', ''), ('', '.'), ('', '.')]Why do you need it? For example, to automatically identify tags for a blog post (for selecting nouns). Morphological markup is one of the first stages of computer text analysis.

Existing Solutions

Of course, everything is already invented before us. There is a mystem from Yandex, TreeTagger with support for the Russian language, there is nltk on the python , and also pymorphy from kmike . All these utilities work fine, though, pymorphy does not have python 3 support, and nltk only supports third version of python in beta (and there is always something falling off). But the real goal for creating a module is an academic one, to understand how the morphological analyzer works.

')

Algorithm

To begin with, let's see how an ordinary person defines to which part of speech a word belongs.

- Usually we know to which part of the speech the familiar word belongs. For example, we know that “ eat ” is a verb.

- If we meet a word that we do not know, then we can guess a part of speech by comparing it with already familiar words. For example, we can guess that the word “ congruence ” is a noun, i.e. has the ending “ -ost ”, usually inherent in nouns.

- We can also guess what part of the speech is by tracing the chain of words in the sentence: “ eat french x ” - in this example, x will most likely be a noun.

- Word length can also provide useful information. If the word consists of just one or two letters, then most likely it is a preposition, a pronoun or a union.

Of course, for a computer this task will be somewhat more difficult, since he does not have the knowledge base that man possesses. But we will try to simulate computer training using the data available to us.

Data

To teach our script, I used the national corpus of the Russian language . Part of the body, SynTagRus, is a collection of texts with marked information for each word, such as, part of speech, number, case, verb tense, etc. This is how a part of the body looks in XML format:

<se> <w><ana lex="" gr="PR"></ana>`</w> <w><ana lex="" gr="S-PRO,n,sg=ins"></ana></w> <w><ana lex="" gr="S,m,anim=pl,nom"></ana>`</w> <w><ana lex="" gr="V,ipf,intr,act=pl,praes,3p,indic"></ana>`</w> <w><ana lex="" gr="PR"></ana></w> <w><ana lex="" gr="S,f,inan=pl,acc"></ana>`</w> . </se> <se> <w><ana lex="" gr="PART"></ana></w> <w><ana lex="" gr="ADV-PRO"></ana></w>, <w><ana lex="" gr="PR"></ana>`</w> <w><ana lex="" gr="NUM=acc"></ana></w> <w><ana lex="" gr="S,f,inan=pl,gen"></ana>`</w> <w><ana lex="" gr="PR"></ana></w> <w><ana lex="" gr="S,f,inan=pl,gen"></ana></w> , <w><ana lex="" gr="V,pf,intr,med=m,sg,praet,indic"></ana>``</w> <w><ana lex="" gr="A=m,sg,nom,plen"></ana>`</w> <w><ana lex="" gr="S,m,anim=sg,nom"></ana>`</w> . </se> Sentences are enclosed in <se> tags, inside of which words are located in the <w> tag. Information about each word is contained in the <ana> tag, the lex attribute corresponds to the lexeme, gr - grammatical categories. The first category is part of speech:

'S': '.',

'A': '.',

'NUM': '.',

'A-NUM': '.-.',

'V': '.',

'ADV': '.',

'PRAEDIC': '',

'PARENTH': '',

'S-PRO': '. .',

'A-PRO': '. .',

'ADV-PRO': '. .',

'PRAEDIC-PRO': '. .',

'PR': '',

'CONJ': '',

'PART': '',

'INTJ': '.'SVM

As a learning algorithm, I chose the support vector machine ( SVM ) method. If you are not familiar with SVM or machine learning algorithms in general, then imagine that SVM is a kind of black box that accepts data characteristics as input, and classification into predefined categories as output. As characteristics, we will set, for example, the ending of a word, and as categories, parts of speech.

In order for the black box to automatically recognize a part of speech, you first need to train it, i.e. give many characteristics of the examples of input, and their corresponding parts of speech to output. SVM will build a model that, with sufficient data, will in most cases correctly determine the part of speech.

Even for academic purposes, to implement SVM is laziness, so let's use the ready-made library LIBLINEAR in C ++, which has a wrapper for python. To train the model, we use the train (prob, param) function, which takes the task as the first argument: problem (y, x) , where y is an array of parts of speech for each example in array x . Each example is presented in turn with a vector of characteristics. To achieve such a statement of the problem, we first need to relate each part of speech and each characteristic with a certain numerical number. For example:

''' - - - . ''' x = [{1001: 1, 2001: 1, 3001: 1}, # 1001 - , 2001 - , 3001 - {1002: 1, 2002: 1, 3001: 1}, # 1002 - , 2002 - , 3001 - {1003: 1, 2003: 1, 3002: 1}] # 1003 - , 2003 - , 3002 - y = [1, 1, 2] # 1 - , 2 - . import liblinearutil as svm problem = svm.problem(y, x) # param = svm.parameter('-c 1 -s 4') # model = svm.train(prob, param) # # '' label, acc, vals = svm.predict([0], {1001: 1, 2001: 1, 3001: 1}, model, '') # [0] - , As a result, our algorithm is as follows:

- We read the corpus file and for each word we define its characteristics: the word itself, the ending (the last 2 and 3 letters), the prefix (the first and second letters), as well as parts of the speech of the previous words

- To each part of speech and characteristics, assign a sequence number and create a task for learning SVM

- We train the SVM model

- We use the trained model to determine the part of speech of words in the sentence: for this, each word must again be represented as characteristics and input to the SVM model, which will select the most appropriate class, i.e. Part of speech.

Implementation

Source codes can be found here: github.com/irokez/Pyrus/tree/master/src

Housing

First you need to get a marked body. The national corpus of the Russian language is spread in a very mysterious way. On the corpus site itself, you can only search for texts, but you cannot download the entire corpus:

“The offline version of the corpus is not available, however, for free use, a random sample of sentences (with disturbed order) from the corpus with homonymy of 180 thousand word usage (90 thousand press, 30 thousand each from artistic texts, legislation and scientific texts) is provided” .At the same time written in Wikipedia

“The corpus will be made available for non-commercial purposes, but it is currently available only.”

Although for our purposes a small sample from the case will go, available here: www.ruscorpora.ru/download/shuffled_rnc.zip

Files in the resulting archive must be passed through the convert-rnc.py utility, which translates the text into UTF-8 and corrects the XML markup. After that, you may still need to fix the XML manually (xmllint to help you). The rnc.py file contains a simple Reader class for reading the normalized XML files nat. enclosures.

import xml.parsers.expat class Reader: def __init__(self): self._parser = xml.parsers.expat.ParserCreate() self._parser.StartElementHandler = self.start_element self._parser.EndElementHandler = self.end_element self._parser.CharacterDataHandler = self.char_data def start_element(self, name, attr): if name == 'ana': self._info = attr def end_element(self, name): if name == 'se': self._sentences.append(self._sentence) self._sentence = [] elif name == 'w': self._sentence.append((self._cdata, self._info)) elif name == 'ana': self._cdata = '' def char_data(self, content): self._cdata += content def read(self, filename): f = open(filename) content = f.read() f.close() self._sentences = [] self._sentence = [] self._cdata = '' self._info = '' self._parser.Parse(content) return self._sentences The Reader.read (self, filename) method reads a file and displays a list of sentences:

[[('`', {'lex': '', 'gr': 'S,m,anim=sg,nom'}), ('`', {'lex': '', 'gr': 'S,f,inan=sg,gen'}), ('`', {'lex': '', 'gr': 'A-PRO=f,sg,acc'}), ('`', {'lex': '', 'gr': 'S,m,anim=pl,nom'}), ('`', {'lex': '', 'gr': 'V,pf,tran=pl,act,praet,indic'}), ('', {'lex': '', 'gr': 'PR'}), ('', {'lex': '', 'gr': 'S,m,inan,0=sg,gen'}), ('`', {'lex': '', 'gr': 'V,pf,tran=m,sg,act,praet,indic'}), ('', {'lex': '', 'gr': 'S-PRO,pl,3p=dat'}), ('`', {'lex': '', 'gr': 'A=n,sg,acc,inan,plen'}), ('`', {'lex': '', 'gr': 'S,n,inan=sg,acc'}), ('', {'lex': '', 'gr': 'PR'}), ('', {'lex': '', 'gr': 'S-PRO,n,sg=acc'}), ('`', {'lex': '', 'gr': 'V,pf,intr,med=m,sg,praet,indic'}), ('`', {'lex': '', 'gr': 'S,f,inan=sg,ins'})]] Learning and text layout

The SVM library can be downloaded here: http://www.csie.ntu.edu.tw/~cjlin/liblinear/ . To make the python wrapper work under the 3rd version, I wrote a small patch .

The pos.py file contains two main classes: Tagger and TaggerFeatures . Tagger is, in fact, a class that performs text markup, i.e. defines for each word its part of speech. The Tagger.train method (self, sentences, labels) takes as arguments a list of sentences (in the same format as rnc.Reader.read), as well as a list of parts of speech for each word, and then trains the SVM model using the library LIBLINEAR. The trained model is subsequently saved (via the Tagger.save method) in order not to train the model each time. The Tagger.label (self, sentence) method marks the sentence.

The TaggerFeatures class is designed to generate characteristics for training and markup. TaggerFeatures.from_body () returns a characteristic based on the shape of the word, i.e. returns the word ID in the body. TaggerFeatures.from_suffix () and TaggerFeatures.from_prefix () generate characteristics at the end and prefix of words.

To start training the model, the train.py script was written, which reads the case files using rnc.Reader, and then calls the Tagger.train method:

import sys import re import rnc import pos sentences = [] sentences.extend(rnc.Reader().read('tmp/media1.xml')) sentences.extend(rnc.Reader().read('tmp/media2.xml')) sentences.extend(rnc.Reader().read('tmp/media3.xml')) re_pos = re.compile('([\w-]+)(?:[^\w-]|$)'.format('|'.join(pos.tagset))) tagger = pos.Tagger() sentence_labels = [] sentence_words = [] for sentence in sentences: labels = [] words = [] for word in sentence: gr = word[1]['gr'] m = re_pos.match(gr) if not m: print(gr, file = sys.stderr) pos = m.group(1) if pos == 'ANUM': pos = 'A-NUM' label = tagger.get_label_id(pos) if not label: print(gr, file = sys.stderr) labels.append(label) body = word[0].replace('`', '') words.append(body) sentence_labels.append(labels) sentence_words.append(words) tagger.train(sentence_words, sentence_labels, True) tagger.train(sentence_words, sentence_labels) tagger.save('tmp/svm.model', 'tmp/ids.pickle') After the model is trained and saved, we finally got a script for marking text. An example of use is shown in test.py :

import sys import pos sentence = sys.argv[1].split(' ') tagger = pos.Tagger() tagger.load('tmp/svm.model', 'tmp/ids.pickle') rus = { 'S': '.', 'A': '.', 'NUM': '.', 'A-NUM': '.-.', 'V': '.', 'ADV': '.', 'PRAEDIC': '', 'PARENTH': '', 'S-PRO': '. .', 'A-PRO': '. .', 'ADV-PRO': '. .', 'PRAEDIC-PRO': '. .', 'PR': '', 'CONJ': '', 'PART': '', 'INTJ': '.', 'INIT': '', 'NONLEX': '' } tagged = [] for word, label in tagger.label(sentence): tagged.append((word, rus[tagger.get_label(label)])) print(tagged) It works like this:

$ src/test.py " , "

[('', '.'), ('', '.'), ('', '. .'), ('', '.'), ('', '.'), (',', '.'), ('', ''), ('', '.'), ('', ''), ('', '.')]Testing

To assess the accuracy of the classification of the algorithm, the Tagger.train () learning method has the optional cross_validation parameter, which, if set to True, performs a cross-check , i.e. training data is divided into K parts, after which each part is used in turn to evaluate the work of the method, while the rest is used for training. I managed to achieve an average accuracy of 92% , which is quite good, considering that only the available part of the nat was used. enclosures. Typically, the accuracy of the markup of parts of speech ranges from 96-98% .

Conclusion and future plans

In general, it was interesting to work with nat. case. It can be seen that much work has been done on it, and it contains a large amount of information that I would like to use in full. I sent a request for a full version, but so far, unfortunately, no answer.

The resulting markup script can be easily expanded so that it also defines other morphological categories, for example, number, gender, case, etc. What I will do in the future. In the future, I would like, of course, to write the Russian language syntax parser to get the structure of the sentence, but this requires the full version of the corpus.

I will be glad to answer questions and suggestions.

Source code is available here: github.com/irokez/Pyrus

Demo: http://vps11096.ovh.net:8080

Source: https://habr.com/ru/post/125988/

All Articles