Introduction to Storage Systems

From the author

Good afternoon, Habr! Do you know what HP sells besides printers? And Dell, besides laptops and monitors? And Hitachi, besides home appliances? What do these companies have in common with EMC? The answer seems simple to specialists, but it is not so obvious to the average IT engineer.

All of these companies sell, among other things, data storage systems. What kind of systems? From my own experience, I have become convinced that the storage knowledge of most IT engineers I know ends at RAID. So the idea was born to write this article, or even several. To begin with, we will look at a number of technologies in the field of information management and note which approaches to data storage exist and why each of them turned out not to be enough. The article describes the basic principles of DAS, NAS and SAN, so it is likely to be useless to specialists. But if the topic is unfamiliar to you and you find it interesting, you are welcome!


Introduction

The purpose of this article is to look at the conceptual basis of the approaches to building storage systems. There are no technical specifications here, because they are not relevant to the subject. So that the article does not read like an advertising brochure, there will be no product names and no superlatives such as “good” and “unparalleled”. The article cannot be called exhaustive either; on the contrary, I tried to cover the minimum sufficient material, understandable to an average engineer who has never dealt with storage systems. So, let's begin.

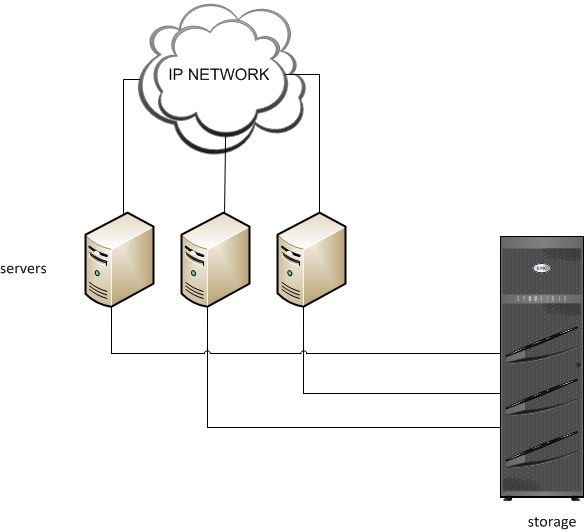

DAS (Direct Attached Storage)

You have long been familiar with this one. Recall how an ordinary PC works with its disk: the HDD is connected to the motherboard via an ATA/SATA interface. You have known this for a long time, so you can already imagine what DAS is. More precisely, you understand what internal DAS architecture is. There is also external DAS architecture, which differs from the internal kind in the allowable distance between the storage device and the servers (generally speaking, several of them).

External connectivity is achieved using SCSI and FC technologies. Without going into the details of these data transfer technologies, that is probably all there is to say about DAS.

The main advantages of DAS follow from its fundamental simplicity: the lowest price among the architectures discussed below and relative ease of implementation. In addition, such a configuration is easier to manage because the number of system elements is small. Data integrity in DAS is ensured by the old and popular RAID technology.

However, this solution is suitable only for relatively non-critical tasks and a limited number of workstations. Sharing finite computing resources imposes a number of limitations: the number of simultaneously connected machines cannot exceed the number of ports on the storage device, limited bandwidth increases read/write (IO) time, the cache is used inefficiently, and so on.
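To make the port and bandwidth limits concrete, here is a back-of-the-envelope sketch. The function name `das_share`, the port count and the throughput figure are all made up for illustration, and the even-split model is a simplifying assumption, not a measurement of any real device.

```python
def das_share(hosts: int, ports: int, total_mb_s: float) -> float:
    """Return the bandwidth each host gets, in MB/s, under an even split.

    Assumes (hypothetically) that all connected hosts are active at once
    and divide the storage device's total throughput equally.
    """
    if hosts < 1:
        raise ValueError("need at least one host")
    if hosts > ports:
        # A DAS device cannot serve more hosts than it has ports.
        raise ValueError(f"{hosts} hosts do not fit into {ports} ports")
    return total_mb_s / hosts


if __name__ == "__main__":
    # Illustrative numbers only: a 4-port device with 400 MB/s in total.
    for n in range(1, 5):
        print(n, "host(s):", das_share(n, ports=4, total_mb_s=400.0), "MB/s each")
```

Every additional host shrinks the share of the others, and a fifth host cannot be connected at all.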

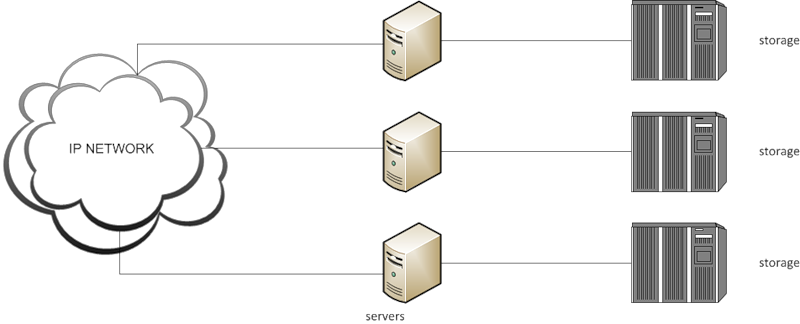

Performance problems can be partially solved by a fleet of servers (for example, separated by the type of requests they process), each of which loads a separate storage device.

However, this scheme also runs into difficulties when data has to be shared between servers or when storage capacity is used unevenly. Obviously, under such conditions DAS does not meet the requirements of scalability and fault tolerance, which is why NAS and SAN were invented.

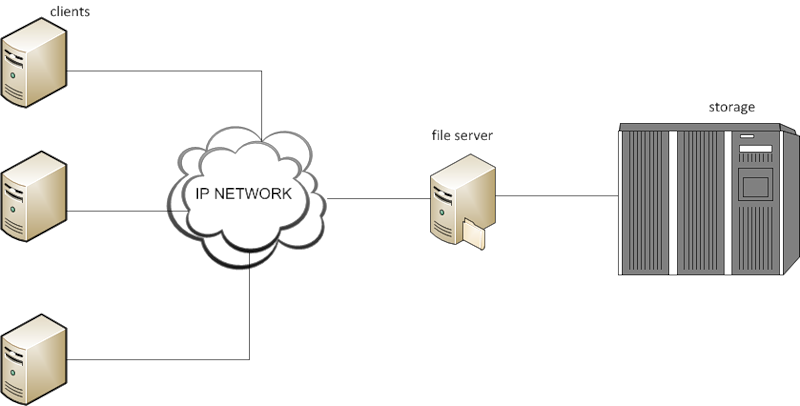

NAS (Network Attached Storage)

Imagine a server on a local network that does nothing but share its folders. That is practically a NAS. Yes, a NAS is just a file-sharing device on an IP network. The minimum NAS configuration looks like this:

About the structure. A NAS device (file server) is a dedicated high-performance server with its own OS optimized for read/write operations. The server has several network interfaces for communicating with the IP network and with the storage device: Gigabit Ethernet, Fast Ethernet, FDDI, and so on. In addition, the NAS has a large amount of RAM, most of which is used as a cache; this allows write operations to be performed asynchronously and speeds up reads through buffering. As a result, data can stay in RAM for a long time before it reaches the disk.
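The caching behavior described above can be sketched as a toy write-back cache. The class name `WriteBackCache` and the use of a plain dictionary as the "disk" are assumptions made for illustration; a real NAS operating system is far more involved.

```python
class WriteBackCache:
    """Toy write-back cache: writes land in RAM and reach the disk later."""

    def __init__(self, disk: dict):
        self.disk = disk      # stands in for the slow disk array
        self.cache = {}       # block number -> data held in RAM
        self.dirty = set()    # blocks changed in RAM but not yet on disk

    def write(self, block: int, data: bytes) -> None:
        # Acknowledge immediately; the disk is not touched here.
        self.cache[block] = data
        self.dirty.add(block)

    def read(self, block: int) -> bytes:
        # Serve from RAM when possible, otherwise fetch and keep a copy.
        if block not in self.cache:
            self.cache[block] = self.disk[block]
        return self.cache[block]

    def flush(self) -> int:
        """Write all dirty blocks to disk; return how many were written."""
        written = len(self.dirty)
        for block in self.dirty:
            self.disk[block] = self.cache[block]
        self.dirty.clear()
        return written


if __name__ == "__main__":
    disk = {0: b"old"}
    nas = WriteBackCache(disk)
    nas.write(0, b"new")
    print(disk[0], nas.read(0))   # the disk still holds the old data
    nas.flush()
    print(disk[0])                # now the disk has caught up
```

Until `flush` runs, the new data exists only in RAM, which is exactly why data "can stay in RAM for a long time".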

Storage (a disk array) is what is most often pictured in articles about data centers. In other words, it is a cabinet (rack) of disks connected to, or integrated with, the file server. Integrated? Yes, the NAS can be a separate server (as shown) or part of a single device. In the first case we are dealing with a gateway implementation of NAS, in the second with a monolithic system. We will come back to the gateway implementation when we talk about SAN.

How does a NAS work? A NAS supports the file-sharing protocols CIFS and NFS. The client mounts the file system exported by the NAS and performs read/write operations in the usual file mode, while the NAS server processes them, translating them into the block-access language that the storage understands. In addition, protocols such as FTP, DFS, SMB and others are supported. That is all there is to a NAS: quick and simple.
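The translation from file operations to block access can be illustrated with a small sketch. The block size, the function name `blocks_for_request` and the assumption that a file occupies consecutive blocks are all simplifications chosen for this example; real file systems track scattered extents.

```python
from typing import List

BLOCK_SIZE = 4096  # bytes; an assumed block size for illustration


def blocks_for_request(first_block: int, offset: int, length: int) -> List[int]:
    """Map a byte range inside a file to the disk blocks that hold it.

    Assumes (for simplicity) that the file occupies consecutive blocks
    starting at first_block.
    """
    if offset < 0 or length <= 0:
        raise ValueError("offset must be >= 0 and length > 0")
    start = offset // BLOCK_SIZE                 # block index of the first byte
    end = (offset + length - 1) // BLOCK_SIZE    # block index of the last byte
    return [first_block + i for i in range(start, end + 1)]


if __name__ == "__main__":
    # A client reads 200 bytes at offset 4000 of a file stored from block 100.
    print(blocks_for_request(100, 4000, 200))
```

The client only ever names a file, an offset and a length; deciding which blocks to touch is the file server's job.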

What is gained by using a NAS, and why does a standard solution deserve a class of its own? First, IO operations take less time, so a NAS is much faster than a “general-purpose” server; if your architecture has a server that needs to serve a lot of static content, you should consider using a NAS. Second, centralized storage is easier to manage. Third, growth in the total capacity of the NAS is transparent to clients: all operations of adding or removing storage are hidden from consumers. Fourth, providing access at the file system level makes it possible to introduce the concept of privileges (rwx). Looking ahead, with a NAS it is easy to organize a multisite setup without sacrificing bandwidth (we will explain what that is when we get to replication).

But using a NAS also comes with a number of limitations, which stem mainly from the basic principle on which the NAS is built. The redundancy of TCP/IP as a data access protocol in itself leads to overhead. A heavy load on a network with fairly limited bandwidth increases response time. The performance of the system as a whole depends not only on the NAS but also on the quality of the network's switching devices. In addition, without proper resource allocation, a client requesting very large files can slow down other clients.

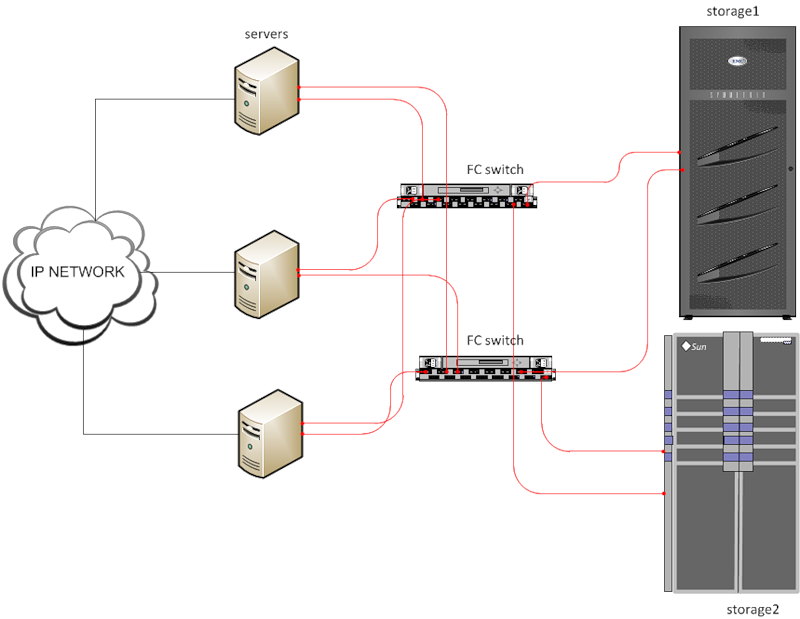

SAN (Storage Area Network)

Here I could not come up with any analogies from the Ethernet world :(. A SAN (storage area network) is a block storage infrastructure built on top of a high-speed network.

As the definition shows, the main difference from NAS is that access to data is provided at the block level. If we compare SAN with DAS, the key concept is the network. So what distinguishes a SAN from the other architectures, which are built from the same basic components, is the presence of special switches that support data transfer over Fibre Channel or (Fast, Gigabit, etc.) Ethernet:

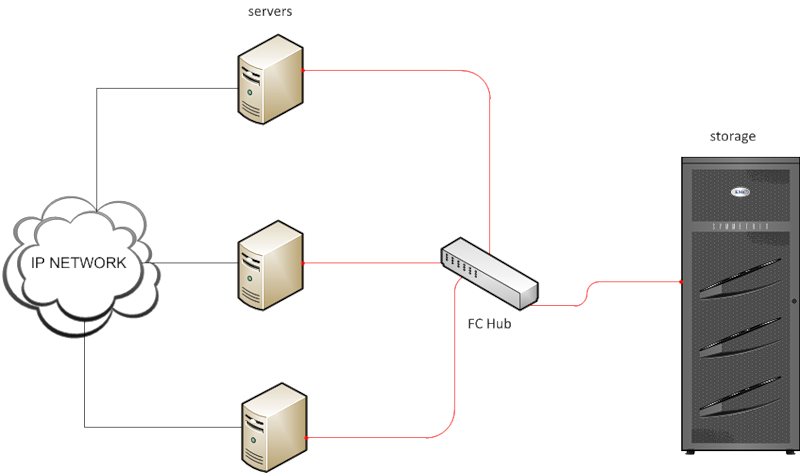

The history of SAN begins in the late 1980s, when the idea of building an FC network was first proposed. Early implementations used hubs as the switching device; this approach is called an arbitrated loop (Arbitrated Loop, hereinafter FC-AL):

The interaction scheme in FC-AL is similar to CSMA/CA: every time a node in FC-AL is about to perform an IO operation, it sends a blocking packet that notifies everyone that a transfer is about to start. When the packet returns to the sender, the node gets full control of the loop for the duration of the operation. When the operation ends, all nodes are notified that the channel has been released, and the procedure repeats. Obviously, this is no improvement on DAS, and one more problem is added to the bandwidth issues: only one client at a time can perform an IO operation. In addition, the 8-bit addressing used in FC-AL allows no more than 127 devices on the network. In short, FC-AL was no better than DAS.
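The "one client at a time" limitation can be seen in a minimal simulation of a shared loop. The function name `run_loop` and the first-come serving order are assumptions of this sketch; it does not model the actual priority rules or signalling of a real arbitrated loop.

```python
from collections import deque


def run_loop(requests: dict) -> list:
    """Serialize transfers on a shared loop, one owner at a time.

    requests maps a node name to the number of time units its IO needs.
    Returns a list of (node, start, end) tuples in the order served.
    """
    queue = deque(requests.items())
    clock = 0
    schedule = []
    while queue:
        node, duration = queue.popleft()   # this node wins control of the loop
        start = clock
        clock += duration                  # the loop is busy; everyone else waits
        schedule.append((node, start, clock))
    return schedule


if __name__ == "__main__":
    # Hypothetical nodes and transfer lengths.
    for node, start, end in run_loop({"a": 3, "b": 2, "c": 4}):
        print(f"{node}: holds the loop from t={start} to t={end}")
```

No two intervals overlap, so the last node finishes only after the sum of all transfer times.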

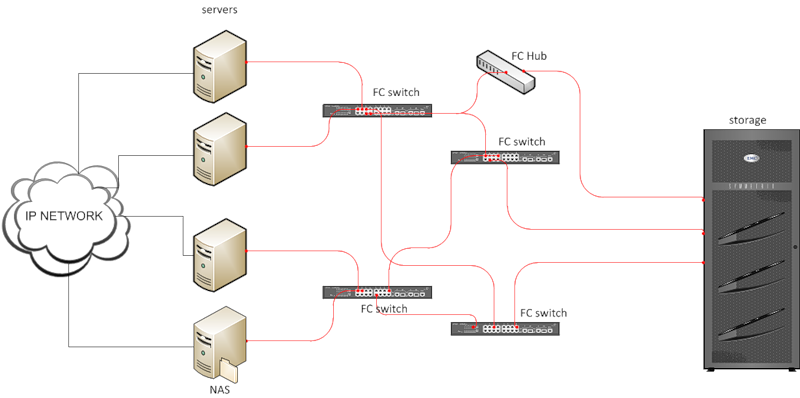

FC-AL was replaced by an architecture whose name I will not translate: FC Switched Fabric (FC-SW). It is this implementation of SAN that has survived to the present day. In FC-SW, one or more switches are used instead of a hub, so data travels over individual channels rather than a shared one.
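The difference between a shared channel and individual channels can be shown with simple arithmetic. Both function names are hypothetical, and the fabric model is idealized: it assumes every transfer goes to a different port and that the switch never blocks.

```python
def loop_finish_time(durations):
    """Shared loop: transfers run one after another, so times add up."""
    return sum(durations)


def fabric_finish_time(durations):
    """Switched fabric: each transfer has its own channel, so they overlap.

    Idealized assumption: no two transfers compete for the same port.
    """
    return max(durations, default=0)


if __name__ == "__main__":
    transfers = [3, 2, 4]  # made-up transfer lengths in time units
    print("loop:  ", loop_finish_time(transfers))
    print("fabric:", fabric_finish_time(transfers))
```

In practice transfers often do compete for the same storage port, so the real gain is smaller than this idealized picture suggests.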

As in Ethernet, many topologies can be built on these switches; in particular, another switch or a hub can be connected to the root switch(es):

The advantages of SAN are obvious:

- total capacity can grow not only by increasing the capacity of existing storage devices, but also by adding new ones;

- each host can work with any storage device, not just with its own, as is the case with DAS;

- the server has several paths to its data (multipathing), so with a properly built topology the system keeps working even after one of the switches fails;

- there is a Boot From SAN option, which means the server no longer even needs its own boot disk;

- there is a zoning option that lets you restrict servers' access to resources;

- the bandwidth problem is partly solved. Only partly, because the link between the storage device and the switch still remains a bottleneck; however, operations such as backup no longer affect the entire network.
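Two items from the list above, multipathing and zoning, can be sketched on a tiny model of a fabric. The node names, the `FABRIC` and `ZONES` structures and both functions are hypothetical and exist only for this illustration; real fabrics are configured through vendor tools, not through code like this.

```python
def paths(fabric, src, dst, failed=frozenset()):
    """Return all loop-free paths from src to dst, skipping failed nodes."""
    found = []

    def walk(node, trail):
        if node == dst:
            found.append(trail)
            return
        for nxt in fabric.get(node, ()):
            if nxt not in trail and nxt not in failed:
                walk(nxt, trail + [nxt])

    walk(src, [src])
    return found


def may_access(zones, server, storage):
    """Zoning check: both ends must be members of at least one common zone."""
    return any(server in z and storage in z for z in zones.values())


# Hypothetical fabric: one server, two switches, one storage array.
FABRIC = {
    "server1": ["switchA", "switchB"],
    "switchA": ["array1"],
    "switchB": ["array1"],
}
ZONES = {"zone_db": {"server1", "array1"}}

if __name__ == "__main__":
    print(paths(FABRIC, "server1", "array1"))
    print(paths(FABRIC, "server1", "array1", failed={"switchA"}))
    print(may_access(ZONES, "server1", "array1"))
    print(may_access(ZONES, "server2", "array1"))
```

With two switches there are two paths, and one of them survives the failure of either switch; a server outside the zone is denied access regardless of the cabling.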

Another important advantage of SAN deserves separate discussion. Its flexible architecture and block-level access make it possible to build many different solutions depending on the task. For example, if we equip one of the servers with a special OS, we get nothing other than a gateway implementation of NAS (see the figure above); thus DAS, NAS and SAN can all be combined within one enterprise's solution.

But there is always a fly in the ointment. One of the main disadvantages of a SAN is its price. Leaving the numbers to the marketers, we will just note that not everyone can afford a SAN.

Instead of a conclusion

The main approaches to building data storage systems have been reviewed very briefly. Concepts such as IP SAN or CAS are not mentioned here, and nothing is said about iSCSI or about transfer technologies in general; for now we will leave these for independent reading.

Data protection against loss, backup methods, and what to do if a data center burns down (in short, emergency situations, or disasters) were touched on only very superficially; they will be the subject of our next review.

Thank you for being with us.

Source: https://habr.com/ru/post/125828/
