Mail.Ru Mail (even if you are Chinese)

We want to share the joy: we have successfully transferred our mail to UTF-8. Now you can easily correspond with Arabs, Chinese, Japanese, Greeks, Georgians, write letters in Hebrew and Yiddish, show off the knowledge of Phoenician writing, or encrypt the message with notes. And at the same time be sure that the addressee will receive exactly what he was sent, and not the squares or "cracks".

Like many serious changes, the transition process required serious preparation and had a large “underwater” part - the developers had the task to process 6 petabytes of letters in more than one hundred million boxes. The first experiments began in the fall of 2010, and in the spring of 2011 all the boxes were successfully transferred to the new system. At the same time, the “mail” project domain symbolically changed: instead of the main domain win.mail.ru and historical koi.mail.ru and mac.mail.ru, which issued the site in the appropriate encodings, e.mail.ru is now used, issuing everything pages in UTF-8. All mail is also stored, processed and displayed in UTF-8. This means that any living and dead languages, mathematical and musical symbols can be used in letters, both in plain-text form and with formatting.

To remind how things have been going on with international communication just recently, we have prepared a small excursion into the history of encodings.

')

Surprisingly, the problem of message encoding, which in the eighties tormented the first network users, and in the nineties was the scourge of the nascent Runet, arose long before the appearance of the computer. One of the very first shoots of the information age was the telegraph, and now some effort is needed to recall that the method of communication, which arose even before the telephone, was originally digital.

Surprisingly, the problem of message encoding, which in the eighties tormented the first network users, and in the nineties was the scourge of the nascent Runet, arose long before the appearance of the computer. One of the very first shoots of the information age was the telegraph, and now some effort is needed to recall that the method of communication, which arose even before the telephone, was originally digital.

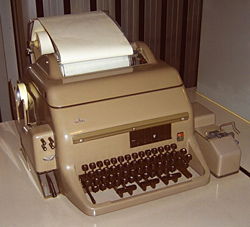

Apart from pure binary Morse code, the first code that has become standard is the Bodo code. This 5-bit synchronous code allowed the telegraphs to transmit about 190 characters per minute (and subsequently up to 760) or 16 bits per second. By the way, those who bought the first modems, remember that the speed was listed in baud - the units of measurement of the name of Emil Bodo, the inventor of the code and high-speed telegraph.

In addition to the name of the speed unit, the Bodo code, or rather the ITA2 standard created on its basis, is notable for the fact that it became the source of the first Cyrillic coding - the telegraph code MTK-2. For the transfer of Russian letters to the code, a third register was added (RUS in addition to LAT and CIF) and tricks began immediately, caused by the need to put our 31-letter (at that time) alphabet into the Procrustean bed of the 5-bit standard, since six positions of each register were busy with official symbols. For example, the widely known amateurs to write sms in Latin accepts the replacement of the letter H with the number 4 in the middle of the 20th century and was fixed in the All-Union standard. Another interesting point that emerges already in the Internet era, was the correspondence of codes in different registers in sound rather than in the order of letters (i.e., the Cyrillic ABECD sequence corresponded to the Latin ABVGD, and not ABCDE).

As soon as the computer era began, the character encoding received a new branch of development - binary-decimal code (BCD - binary-coded decimal). Back in 1928, the developers of the company brought to the logical finals the technology of recording information on punch cards that appeared at the beginning of the 19th century. Gray-beige rectangles with 12 lines and 80 columns recollect all the older generation of scientists. Thirty years later, in the late 50s, a 6-bit BCD code was introduced first, and soon 8-bit EBCDIC.

As soon as the computer era began, the character encoding received a new branch of development - binary-decimal code (BCD - binary-coded decimal). Back in 1928, the developers of the company brought to the logical finals the technology of recording information on punch cards that appeared at the beginning of the 19th century. Gray-beige rectangles with 12 lines and 80 columns recollect all the older generation of scientists. Thirty years later, in the late 50s, a 6-bit BCD code was introduced first, and soon 8-bit EBCDIC.

From this point on, two interesting trends emerge: the explosive growth of various encoding methods (there were at least six EBCDIC versions incompatible with each other) and the competition between “telegraph” and “computer” encoding standards. At the same time, in the early 60s, or more precisely in 1963, a new 7-bit ASCII encoding was replaced by the Bodo code in Bell Laboratories. The importance of this event is hard to overestimate - for America (and other English-speaking countries) this once and for all solved the problem of standardizing data transmission (at that time by TTY).

However, having solved the problem of encoding for themselves, the States, intentionally or not, created it for all other countries that do not speak English, and especially for those who do not write in Latin. For languages that differed from English only in diacritics (additional strokes over the letters), a loophole was opened in the form of a BS symbol - return to a step. He allowed one character to be printed on top of another and to receive a á from a sequence of type “a BS '”. For “national” symbols, “open positions” were reserved - as many as ten pieces.

However, having solved the problem of encoding for themselves, the States, intentionally or not, created it for all other countries that do not speak English, and especially for those who do not write in Latin. For languages that differed from English only in diacritics (additional strokes over the letters), a loophole was opened in the form of a BS symbol - return to a step. He allowed one character to be printed on top of another and to receive a á from a sequence of type “a BS '”. For “national” symbols, “open positions” were reserved - as many as ten pieces.

The basic “communication” of the encoding, which led to “saving on bits” (it is believed that the idea of a fully 8-bit encoding was discussed, but was rejected as generating an extra data stream that increases their transmission) turned into a salvation for those who needed extra character space . In fact, teletypes allowed punching eight bits in one position, and this very eighth bit was used for parity. It was his and involved the developers of national encodings to store national characters, and in the world version of ASCII has become an 8-bit encoding. And it was on this eighth bit that the rigid standardization ended, which made ASCII so convenient.

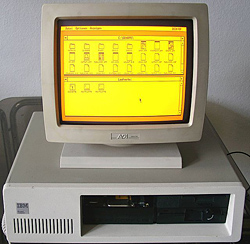

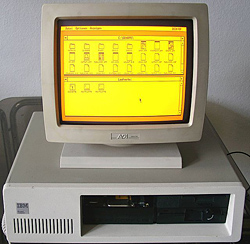

With the proliferation of personal computers, localized operating systems, and then the global network, it became apparent that one Latin in the encoding was indispensable and different manufacturers began to create many national encodings. In practice, not so long ago, this meant that when you received a letter or opened an unsuccessful web page, you risked seeing “cracks” instead of text if your browser did not support the desired encoding. And, as is often the case, each major manufacturer promoted its own version, ignoring competitors.

And each encoding had its own unique advantages. For example, among server systems and, importantly, the first programs for working with e-mail was the most common encoding KOI-8. It had some very successful structural properties, as is often the case with things closely related to UNIX. In particular, the order of the Russian letters was taken from the telegraph standard MTK-2, which preserved the correspondence of the sound, and not the alphabetical order. Thus, with the loss of the eighth bit, the Cyrillic text turned into translit, while maintaining nominal readability, that is, “Hello world!” Without the high-order bit will look like “pRIWET, MIR!”. And pay special attention to the inversion of the register, it is not accidental, but used specifically so that the loss of a bit is immediately noticeable. The same operation on the famous phrase written in WIN1251, the main competitor of KOI-8, will give the unreadable “Ophber, lhp!”.

And each encoding had its own unique advantages. For example, among server systems and, importantly, the first programs for working with e-mail was the most common encoding KOI-8. It had some very successful structural properties, as is often the case with things closely related to UNIX. In particular, the order of the Russian letters was taken from the telegraph standard MTK-2, which preserved the correspondence of the sound, and not the alphabetical order. Thus, with the loss of the eighth bit, the Cyrillic text turned into translit, while maintaining nominal readability, that is, “Hello world!” Without the high-order bit will look like “pRIWET, MIR!”. And pay special attention to the inversion of the register, it is not accidental, but used specifically so that the loss of a bit is immediately noticeable. The same operation on the famous phrase written in WIN1251, the main competitor of KOI-8, will give the unreadable “Ophber, lhp!”.

And since we are talking about the legacy of the telegraph, it is impossible not to mention that perhaps the most important feature that makes email a virtual telegraph is the transfer of all data in a 7-bit format. Yes, all the standards for sending e-mails in one way or another will recode and transmit any data — text not recorded in Latin letters, images, video — in the form of pure ASCII text. The most well-known methods of transport coding have become Quoted-printable and Base64. In the first version, Latin ASCII characters are not recoded, which makes messages with a predominance of Latin characters conditionally readable. However, Base64 is more suitable for data transfer, which is used as the main transport coding method even today.

But back to the issue of national alphabets. In the early 1990s, globalization processes took precedence over the tendency to produce entities and several multinational corporations, including Apple, IBM, and Microsoft, joined together to create a universal encoding. The organization was called the Unicode Consortium, and its work resulted in Unicode — a way of representing the characters of almost all world languages (including the language of mathematics — symbols for formulas, operations, etc. — and musical notation) within a single system.

The capacity of communication channels has grown significantly since the days of telegraph and the first transatlantic cables, so it was already possible not to save on bits, and the universality and extensibility of coding became a priority. That is, it was necessary not only to include all existing national languages and symbols (including hieroglyphs), but also to provide a reserve for the future. Therefore, in the first version of Unicode, fixed-size characters were used - 16 bits.

The capacity of communication channels has grown significantly since the days of telegraph and the first transatlantic cables, so it was already possible not to save on bits, and the universality and extensibility of coding became a priority. That is, it was necessary not only to include all existing national languages and symbols (including hieroglyphs), but also to provide a reserve for the future. Therefore, in the first version of Unicode, fixed-size characters were used - 16 bits.

In this form, the standard allowed to encode up to 65,536 characters, this was more than enough to include the most frequently used characters, and for the most rare, a “user character area” was provided. And already at the first stage all the most famous encodings were included in the standard, so that it could be used as a means of conversion. The Unicode version 1.0.0, released in October 1991, contained, in addition to the usual Latin characters and their modifications, Cyrillic, Arabic and Greek writing, Hebrew, Chinese and Japanese characters, Coptic and Tibetan letters. Subsequently, the character set grew, although sometimes the languages were removed, but only to return to a new place (for example, Tibetan writing and Korean characters).

However, the developers rather quickly decided to “lay down” the possibility of an even more serious expansion and at the same time refuse the fixed size of the symbol. As a result, variable-length characters and various ways of representing code values were used. In 2001, with version 3.1, the standard actually crossed the 16-bit threshold and began to count 94,205 codes. Signs were added to this version to record European and Byzantine music, as well as more than 40,000 unified Chinese-Japanese-Korean hieroglyphs. Since 2006, you can publish web pages with cuneiform, from 2009 to correspond in Vedic Sanskrit, and from 2010 to type horoscopes without inserting alchemical symbols in the form of pictures. As a result, at present, the standard has 93 “languages” and involves 109,449 codes.

At the moment, the Unicode standard has several presentation forms that differ in both structure and scope, although the latter distinction is gradually smoothed out. The most popular form of representation in the global network and for data transmission has become UTF-8 encoding. This is a variable length character encoding - in theory from one to six bytes, in practice - up to four. The first 128 positions are encoded with one byte and coincide with ASCII characters to maintain backward compatibility - even programs without standard support will correctly display the Latin alphabet and Arabic numerals. Two bytes are used to encode the following 1920 characters, and in this area are Latin with diacritics, Greek, Cyrillic, Hebrew, Arabic and Syrian script, and some others. Virtually all of the remaining writing, as well as mathematical symbols, are encoded in three bytes, leaving a four-byte record for notes, extinct languages, and rare Chinese characters.

At the moment, the Unicode standard has several presentation forms that differ in both structure and scope, although the latter distinction is gradually smoothed out. The most popular form of representation in the global network and for data transmission has become UTF-8 encoding. This is a variable length character encoding - in theory from one to six bytes, in practice - up to four. The first 128 positions are encoded with one byte and coincide with ASCII characters to maintain backward compatibility - even programs without standard support will correctly display the Latin alphabet and Arabic numerals. Two bytes are used to encode the following 1920 characters, and in this area are Latin with diacritics, Greek, Cyrillic, Hebrew, Arabic and Syrian script, and some others. Virtually all of the remaining writing, as well as mathematical symbols, are encoded in three bytes, leaving a four-byte record for notes, extinct languages, and rare Chinese characters.

For more than 15 years, it took even such a universal standard as Unicode to enter the direct path to network domination. Until now, not all sites support Unicode - according to Google, only in 2010 the share of Unicode pages in the global network approached 50%. However, large providers, whose services are used by a large number of users from different countries, have recognized the convenience of the standard. The same technology is now used in Mail.Ru Mail.

The history of the development of encodings clearly shows that virtual space can surprisingly inherit the boundaries and barriers of the real world. And the introduction and success of Unicode - how successfully can these obstacles be overcome. At the same time, the development of new technology and the successful application of its companies, whose activities determine the future of the information world, are so closely related that it is difficult to determine where the reason and the consequence. But it is easy to see how gradually more and more features were incorporated into the standard at the development stage. This allows us to hope that in the information world, projects aimed at the future from the very beginning will have the greatest success, and not to gain momentary gain or the simplest solution to local problems.

Respectfully,

Sergey Martynov

Mail.Ru Mail Manager

Like many serious changes, the transition process required serious preparation and had a large “underwater” part - the developers had the task to process 6 petabytes of letters in more than one hundred million boxes. The first experiments began in the fall of 2010, and in the spring of 2011 all the boxes were successfully transferred to the new system. At the same time, the “mail” project domain symbolically changed: instead of the main domain win.mail.ru and historical koi.mail.ru and mac.mail.ru, which issued the site in the appropriate encodings, e.mail.ru is now used, issuing everything pages in UTF-8. All mail is also stored, processed and displayed in UTF-8. This means that any living and dead languages, mathematical and musical symbols can be used in letters, both in plain-text form and with formatting.

To remind how things have been going on with international communication just recently, we have prepared a small excursion into the history of encodings.

')

In the beginning was the figure

Surprisingly, the problem of message encoding, which in the eighties tormented the first network users, and in the nineties was the scourge of the nascent Runet, arose long before the appearance of the computer. One of the very first shoots of the information age was the telegraph, and now some effort is needed to recall that the method of communication, which arose even before the telephone, was originally digital.

Surprisingly, the problem of message encoding, which in the eighties tormented the first network users, and in the nineties was the scourge of the nascent Runet, arose long before the appearance of the computer. One of the very first shoots of the information age was the telegraph, and now some effort is needed to recall that the method of communication, which arose even before the telephone, was originally digital.Apart from pure binary Morse code, the first code that has become standard is the Bodo code. This 5-bit synchronous code allowed the telegraphs to transmit about 190 characters per minute (and subsequently up to 760) or 16 bits per second. By the way, those who bought the first modems, remember that the speed was listed in baud - the units of measurement of the name of Emil Bodo, the inventor of the code and high-speed telegraph.

In addition to the name of the speed unit, the Bodo code, or rather the ITA2 standard created on its basis, is notable for the fact that it became the source of the first Cyrillic coding - the telegraph code MTK-2. For the transfer of Russian letters to the code, a third register was added (RUS in addition to LAT and CIF) and tricks began immediately, caused by the need to put our 31-letter (at that time) alphabet into the Procrustean bed of the 5-bit standard, since six positions of each register were busy with official symbols. For example, the widely known amateurs to write sms in Latin accepts the replacement of the letter H with the number 4 in the middle of the 20th century and was fixed in the All-Union standard. Another interesting point that emerges already in the Internet era, was the correspondence of codes in different registers in sound rather than in the order of letters (i.e., the Cyrillic ABECD sequence corresponded to the Latin ABVGD, and not ABCDE).

Not everything computer is equally useful.

As soon as the computer era began, the character encoding received a new branch of development - binary-decimal code (BCD - binary-coded decimal). Back in 1928, the developers of the company brought to the logical finals the technology of recording information on punch cards that appeared at the beginning of the 19th century. Gray-beige rectangles with 12 lines and 80 columns recollect all the older generation of scientists. Thirty years later, in the late 50s, a 6-bit BCD code was introduced first, and soon 8-bit EBCDIC.

As soon as the computer era began, the character encoding received a new branch of development - binary-decimal code (BCD - binary-coded decimal). Back in 1928, the developers of the company brought to the logical finals the technology of recording information on punch cards that appeared at the beginning of the 19th century. Gray-beige rectangles with 12 lines and 80 columns recollect all the older generation of scientists. Thirty years later, in the late 50s, a 6-bit BCD code was introduced first, and soon 8-bit EBCDIC.From this point on, two interesting trends emerge: the explosive growth of various encoding methods (there were at least six EBCDIC versions incompatible with each other) and the competition between “telegraph” and “computer” encoding standards. At the same time, in the early 60s, or more precisely in 1963, a new 7-bit ASCII encoding was replaced by the Bodo code in Bell Laboratories. The importance of this event is hard to overestimate - for America (and other English-speaking countries) this once and for all solved the problem of standardizing data transmission (at that time by TTY).

However, having solved the problem of encoding for themselves, the States, intentionally or not, created it for all other countries that do not speak English, and especially for those who do not write in Latin. For languages that differed from English only in diacritics (additional strokes over the letters), a loophole was opened in the form of a BS symbol - return to a step. He allowed one character to be printed on top of another and to receive a á from a sequence of type “a BS '”. For “national” symbols, “open positions” were reserved - as many as ten pieces.

However, having solved the problem of encoding for themselves, the States, intentionally or not, created it for all other countries that do not speak English, and especially for those who do not write in Latin. For languages that differed from English only in diacritics (additional strokes over the letters), a loophole was opened in the form of a BS symbol - return to a step. He allowed one character to be printed on top of another and to receive a á from a sequence of type “a BS '”. For “national” symbols, “open positions” were reserved - as many as ten pieces.The basic “communication” of the encoding, which led to “saving on bits” (it is believed that the idea of a fully 8-bit encoding was discussed, but was rejected as generating an extra data stream that increases their transmission) turned into a salvation for those who needed extra character space . In fact, teletypes allowed punching eight bits in one position, and this very eighth bit was used for parity. It was his and involved the developers of national encodings to store national characters, and in the world version of ASCII has become an 8-bit encoding. And it was on this eighth bit that the rigid standardization ended, which made ASCII so convenient.

Babel

With the proliferation of personal computers, localized operating systems, and then the global network, it became apparent that one Latin in the encoding was indispensable and different manufacturers began to create many national encodings. In practice, not so long ago, this meant that when you received a letter or opened an unsuccessful web page, you risked seeing “cracks” instead of text if your browser did not support the desired encoding. And, as is often the case, each major manufacturer promoted its own version, ignoring competitors.

And each encoding had its own unique advantages. For example, among server systems and, importantly, the first programs for working with e-mail was the most common encoding KOI-8. It had some very successful structural properties, as is often the case with things closely related to UNIX. In particular, the order of the Russian letters was taken from the telegraph standard MTK-2, which preserved the correspondence of the sound, and not the alphabetical order. Thus, with the loss of the eighth bit, the Cyrillic text turned into translit, while maintaining nominal readability, that is, “Hello world!” Without the high-order bit will look like “pRIWET, MIR!”. And pay special attention to the inversion of the register, it is not accidental, but used specifically so that the loss of a bit is immediately noticeable. The same operation on the famous phrase written in WIN1251, the main competitor of KOI-8, will give the unreadable “Ophber, lhp!”.

And each encoding had its own unique advantages. For example, among server systems and, importantly, the first programs for working with e-mail was the most common encoding KOI-8. It had some very successful structural properties, as is often the case with things closely related to UNIX. In particular, the order of the Russian letters was taken from the telegraph standard MTK-2, which preserved the correspondence of the sound, and not the alphabetical order. Thus, with the loss of the eighth bit, the Cyrillic text turned into translit, while maintaining nominal readability, that is, “Hello world!” Without the high-order bit will look like “pRIWET, MIR!”. And pay special attention to the inversion of the register, it is not accidental, but used specifically so that the loss of a bit is immediately noticeable. The same operation on the famous phrase written in WIN1251, the main competitor of KOI-8, will give the unreadable “Ophber, lhp!”.And since we are talking about the legacy of the telegraph, it is impossible not to mention that perhaps the most important feature that makes email a virtual telegraph is the transfer of all data in a 7-bit format. Yes, all the standards for sending e-mails in one way or another will recode and transmit any data — text not recorded in Latin letters, images, video — in the form of pure ASCII text. The most well-known methods of transport coding have become Quoted-printable and Base64. In the first version, Latin ASCII characters are not recoded, which makes messages with a predominance of Latin characters conditionally readable. However, Base64 is more suitable for data transfer, which is used as the main transport coding method even today.

How many stars in the sky and letters in all languages of the world

But back to the issue of national alphabets. In the early 1990s, globalization processes took precedence over the tendency to produce entities and several multinational corporations, including Apple, IBM, and Microsoft, joined together to create a universal encoding. The organization was called the Unicode Consortium, and its work resulted in Unicode — a way of representing the characters of almost all world languages (including the language of mathematics — symbols for formulas, operations, etc. — and musical notation) within a single system.

The capacity of communication channels has grown significantly since the days of telegraph and the first transatlantic cables, so it was already possible not to save on bits, and the universality and extensibility of coding became a priority. That is, it was necessary not only to include all existing national languages and symbols (including hieroglyphs), but also to provide a reserve for the future. Therefore, in the first version of Unicode, fixed-size characters were used - 16 bits.

The capacity of communication channels has grown significantly since the days of telegraph and the first transatlantic cables, so it was already possible not to save on bits, and the universality and extensibility of coding became a priority. That is, it was necessary not only to include all existing national languages and symbols (including hieroglyphs), but also to provide a reserve for the future. Therefore, in the first version of Unicode, fixed-size characters were used - 16 bits.In this form, the standard allowed to encode up to 65,536 characters, this was more than enough to include the most frequently used characters, and for the most rare, a “user character area” was provided. And already at the first stage all the most famous encodings were included in the standard, so that it could be used as a means of conversion. The Unicode version 1.0.0, released in October 1991, contained, in addition to the usual Latin characters and their modifications, Cyrillic, Arabic and Greek writing, Hebrew, Chinese and Japanese characters, Coptic and Tibetan letters. Subsequently, the character set grew, although sometimes the languages were removed, but only to return to a new place (for example, Tibetan writing and Korean characters).

However, the developers rather quickly decided to “lay down” the possibility of an even more serious expansion and at the same time refuse the fixed size of the symbol. As a result, variable-length characters and various ways of representing code values were used. In 2001, with version 3.1, the standard actually crossed the 16-bit threshold and began to count 94,205 codes. Signs were added to this version to record European and Byzantine music, as well as more than 40,000 unified Chinese-Japanese-Korean hieroglyphs. Since 2006, you can publish web pages with cuneiform, from 2009 to correspond in Vedic Sanskrit, and from 2010 to type horoscopes without inserting alchemical symbols in the form of pictures. As a result, at present, the standard has 93 “languages” and involves 109,449 codes.

At the moment, the Unicode standard has several presentation forms that differ in both structure and scope, although the latter distinction is gradually smoothed out. The most popular form of representation in the global network and for data transmission has become UTF-8 encoding. This is a variable length character encoding - in theory from one to six bytes, in practice - up to four. The first 128 positions are encoded with one byte and coincide with ASCII characters to maintain backward compatibility - even programs without standard support will correctly display the Latin alphabet and Arabic numerals. Two bytes are used to encode the following 1920 characters, and in this area are Latin with diacritics, Greek, Cyrillic, Hebrew, Arabic and Syrian script, and some others. Virtually all of the remaining writing, as well as mathematical symbols, are encoded in three bytes, leaving a four-byte record for notes, extinct languages, and rare Chinese characters.

At the moment, the Unicode standard has several presentation forms that differ in both structure and scope, although the latter distinction is gradually smoothed out. The most popular form of representation in the global network and for data transmission has become UTF-8 encoding. This is a variable length character encoding - in theory from one to six bytes, in practice - up to four. The first 128 positions are encoded with one byte and coincide with ASCII characters to maintain backward compatibility - even programs without standard support will correctly display the Latin alphabet and Arabic numerals. Two bytes are used to encode the following 1920 characters, and in this area are Latin with diacritics, Greek, Cyrillic, Hebrew, Arabic and Syrian script, and some others. Virtually all of the remaining writing, as well as mathematical symbols, are encoded in three bytes, leaving a four-byte record for notes, extinct languages, and rare Chinese characters.Through thorns to world domination

For more than 15 years, it took even such a universal standard as Unicode to enter the direct path to network domination. Until now, not all sites support Unicode - according to Google, only in 2010 the share of Unicode pages in the global network approached 50%. However, large providers, whose services are used by a large number of users from different countries, have recognized the convenience of the standard. The same technology is now used in Mail.Ru Mail.

Instead of epilogue

The history of the development of encodings clearly shows that virtual space can surprisingly inherit the boundaries and barriers of the real world. And the introduction and success of Unicode - how successfully can these obstacles be overcome. At the same time, the development of new technology and the successful application of its companies, whose activities determine the future of the information world, are so closely related that it is difficult to determine where the reason and the consequence. But it is easy to see how gradually more and more features were incorporated into the standard at the development stage. This allows us to hope that in the information world, projects aimed at the future from the very beginning will have the greatest success, and not to gain momentary gain or the simplest solution to local problems.

Respectfully,

Sergey Martynov

Mail.Ru Mail Manager

Source: https://habr.com/ru/post/123898/

All Articles