Working with Microsoft Kinect in C++ Applications

Introduction

Microsoft recently released a beta version of its Kinect toolkit, the Microsoft Research Kinect SDK. The toolkit includes header files, a library, and usage examples for C++ applications. However, the SDK alone does not solve the shortage of clear examples and documentation. Microsoft is noticeably more focused on .NET developers: on the official forum, for example, the vast majority of topics concern C#, and a Google search for a description of the Kinect API returns only a few links, all of them to the official documentation.

This article discusses how to use Microsoft Kinect and the SDK mentioned above in C++ applications together with the wxWidgets library.

Getting started

To get started, download the development tools from the Microsoft Research Kinect SDK page.

We will also need the wxWidgets library. You can download it (v2.9.1) from the official website or from the SVN repository (I prefer SVN HEAD: it often contains useful new features that are not yet in the official release, although new bugs often appear there as well).

For those who prefer the "bright" side and want to develop applications using only free tools, it makes sense to download Visual C++ 2010 Express as well as the Microsoft Windows SDK for Windows 7 (or later); without the latter, building wxWidgets in Visual C++ Express will most likely fail.

Building wxWidgets

We will not cover the installation of Visual Studio and the Kinect SDK, which comes down to a few clicks of the Next button in the installation wizard. We will, however, look at the wxWidgets build process in more detail, since the later development steps depend on it.

After downloading the wxWidgets source code and unpacking it into a separate folder, add the WXWIN environment variable and set its value to the path of the wxWidgets source folder.

When using the sources from SVN, you must also copy the file %WXWIN%/include/wx/msw/setup0.h to %WXWIN%/include/wx/msw/setup.h.

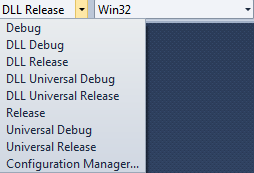

By default, the wxWidgets solution has several available configurations (Figure 1):

- Debug

- Release

- DLL Debug

- DLL Release

The first two configurations build wxWidgets as static libraries; the last two build it as several dynamically loaded modules (DLLs).

Building static libraries

Before building the static libraries (the Debug and Release configurations), set the C/C++ -> Code Generation -> Runtime Library parameter in the properties of all projects in the solution to Multi-Threaded Debug and Multi-Threaded respectively (Fig. 2).

With these compilation options, there is no need to install the Visual C++ Redistributable on end-user machines along with our application. After setting the options, you can build the solution. As a result, several .lib files should be created in the lib/vc_lib subdirectory; they will be used later in our application.

Building dynamic libraries

To build the dynamic libraries, nothing needs to be changed in the compiler settings. There is another problem, however: the solution does not define any dependencies between projects, so the build will need to be restarted several times, because linking some libraries fails until the libraries they depend on have been built. After the build, several .DLL and .LIB files should be created in the lib/vc_dll subdirectory.

Note that you need to build both the debug (Debug) and the optimized (Release) versions of the libraries.

Creating a test application

So, at the moment we have:

- Visual Studio 2010

- Microsoft Research Kinect SDK

- Compiled wxWidgets libraries

  - Static

  - Dynamic

You can start creating an application.

In the test application we will have:

- Application class (derived from wxApp)

- Main form class (derived from wxFrame)

- Canvas class (a control derived from wxWindow, which will display the image from the Kinect sensor)

KinectTestApp.h

#ifndef _KINECTTESTAPP_H_

#define _KINECTTESTAPP_H_

#include "wx/image.h"

#include "KinectTestMainFrame.h"

class KinectTestApp: public wxApp

{

DECLARE_CLASS( KinectTestApp )

DECLARE_EVENT_TABLE()

public :

KinectTestApp();

void Init();

virtual bool OnInit();

virtual int OnExit();

};

DECLARE_APP(KinectTestApp)

#endif

KinectTestApp.cpp

...

bool KinectTestApp::OnInit()

{

#if wxUSE_LIBPNG

wxImage::AddHandler( new wxPNGHandler);

#endif

#if wxUSE_LIBJPEG

wxImage::AddHandler( new wxJPEGHandler);

#endif

KinectTestMainFrame* mainWindow = new KinectTestMainFrame( NULL );

mainWindow->Show( true );

return true ;

}

KinectTestMainFrame.h

class KinectTestMainFrame: public wxFrame, public wxThreadHelper

{

DECLARE_CLASS( KinectTestMainFrame )

DECLARE_EVENT_TABLE()

public :

KinectTestMainFrame();

KinectTestMainFrame( wxWindow* parent,

wxWindowID id = SYMBOL_KINECTTESTMAINFRAME_IDNAME,

const wxString& caption = SYMBOL_KINECTTESTMAINFRAME_TITLE,

const wxPoint& pos = SYMBOL_KINECTTESTMAINFRAME_POSITION,

const wxSize& size = SYMBOL_KINECTTESTMAINFRAME_SIZE,

long style = SYMBOL_KINECTTESTMAINFRAME_STYLE );

bool Create( wxWindow* parent,

wxWindowID id = SYMBOL_KINECTTESTMAINFRAME_IDNAME,

const wxString& caption = SYMBOL_KINECTTESTMAINFRAME_TITLE,

const wxPoint& pos = SYMBOL_KINECTTESTMAINFRAME_POSITION,

const wxSize& size = SYMBOL_KINECTTESTMAINFRAME_SIZE,

long style = SYMBOL_KINECTTESTMAINFRAME_STYLE );

~KinectTestMainFrame();

void Init();

void CreateControls();

wxBitmap GetBitmapResource( const wxString& name );

wxIcon GetIconResource( const wxString& name );

virtual wxThread::ExitCode Entry();

wxGridBagSizer* m_MainSizer;

wxListBox* m_DeviceListBox;

KinectCanvas* m_Canvas;

};

#endif

KinectTestMainFrame.cpp

...

void KinectTestMainFrame::CreateControls()

{

KinectTestMainFrame* itemFrame1 = this ;

m_MainSizer = new wxGridBagSizer(0, 0);

m_MainSizer->SetEmptyCellSize(wxSize(10, 20));

itemFrame1->SetSizer(m_MainSizer);

wxArrayString m_DeviceListBoxStrings;

m_DeviceListBox = new wxListBox( itemFrame1,

ID_DEVICE_LISTBOX, wxDefaultPosition,

wxDefaultSize, m_DeviceListBoxStrings,

wxLB_SINGLE );

m_MainSizer->Add(m_DeviceListBox,

wxGBPosition(0, 0), wxGBSpan(1, 1),

wxGROW|wxGROW|wxALL, 5);

m_Canvas = new KinectCanvas( itemFrame1,

ID_KINECT_CANVAS, wxDefaultPosition,

wxSize(320, 240), wxSIMPLE_BORDER );

m_MainSizer->Add(m_Canvas, wxGBPosition(0, 1),

wxGBSpan(1, 1), wxALIGN_CENTER_HORIZONTAL|

wxALIGN_CENTER_VERTICAL|wxALL, 5);

m_MainSizer->AddGrowableCol(1);

m_MainSizer->AddGrowableRow(0);

}

...

wxThread::ExitCode KinectTestMainFrame::Entry()

{

return NULL;

}

KinectCanvas.h

...

class KinectCanvas: public wxWindow

{

DECLARE_DYNAMIC_CLASS( KinectCanvas )

DECLARE_EVENT_TABLE()

public :

KinectCanvas();

KinectCanvas(wxWindow* parent,

wxWindowID id = ID_KINECTCANVAS,

const wxPoint& pos = wxDefaultPosition,

const wxSize& size = wxSize(100, 100),

long style = wxSIMPLE_BORDER);

bool Create(wxWindow* parent,

wxWindowID id = ID_KINECTCANVAS,

const wxPoint& pos = wxDefaultPosition,

const wxSize& size = wxSize(100, 100),

long style = wxSIMPLE_BORDER);

~KinectCanvas();

void Init();

void CreateControls();

void OnPaint( wxPaintEvent& event );

wxImage * GetCurrentImage() const { return m_CurrentImage ; }

void SetCurrentImage(wxImage * value ) { m_CurrentImage = value ; }

wxBitmap GetBitmapResource( const wxString& name );

wxIcon GetIconResource( const wxString& name );

wxImage * m_CurrentImage;

};

#endif

KinectCanvas.cpp

...

IMPLEMENT_DYNAMIC_CLASS( KinectCanvas, wxWindow )

BEGIN_EVENT_TABLE( KinectCanvas, wxWindow )

EVT_PAINT( KinectCanvas::OnPaint )

END_EVENT_TABLE()

...

void KinectCanvas::OnPaint( wxPaintEvent& event )

{

wxAutoBufferedPaintDC dc( this );

if (!m_CurrentImage)

{

dc.SetBackground(wxBrush(GetBackgroundColour(), wxSOLID));

dc.Clear();

dc.DrawLabel(_( "No image" ), wxRect(dc.GetSize()),

wxALIGN_CENTER_HORIZONTAL|wxALIGN_CENTER_VERTICAL);

}

else

{

wxBitmap bmp(*m_CurrentImage);

dc.DrawBitmap(bmp,

(dc.GetSize().GetWidth()-bmp.GetWidth())/2,

(dc.GetSize().GetHeight()-bmp.GetHeight())/2);

}

}

In the source code above, there are several empty methods, as well as several methods whose use is not obvious (for example, the methods

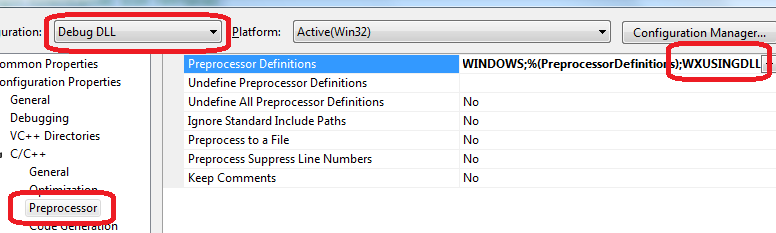

GetIconResource() , GetBitmapResource() , Init() ). All this is because the DialogBlocks form designer was used to create the application framework. This is a paid tool, but the functionality of the trial version is enough to create our application.Before attempting to build an application, you need to change the project parameters so that they match the wxWidgets build parameters. This means that if we want to use the wxWidgets static libraries, we need to set the same values for the Debug and Release configuration in the project properties in the C / C ++ -> Code Generation -> Runtime Library parameter. If we need to use wxWidgets dynamic libraries, then in the project settings in the C / C ++ -> Preprocessor -> Preprocessor Definitions parameter we need to add the

WXUSINGDLL macro. This macro is also used when building wxWidgets dynamic libraries, and as a result, the settings of our project and wxWidgets will be the same (Fig. 3).

Also, for the debug version of the application, it is necessary to add the macro

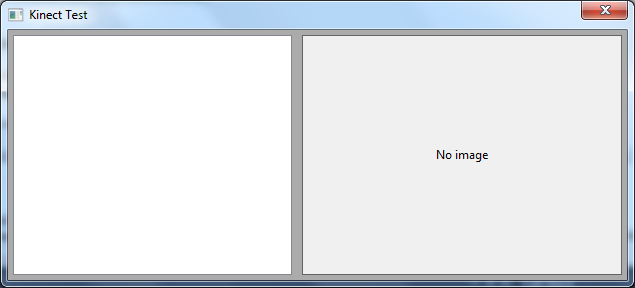

wxUSE_NO_MANIFEST=1 to the preprocessor directives in the resource compiler settings. This is necessary in order to avoid conflicts of the manifest specified in the wxWidgets resource file ( % WXWIN% / include / msw / wx.rc ) and the manifest that Visual Studio automatically adds to the application.After completing the above steps, you can build the application. As a result, we will have something like this (Fig. 4):

Using Microsoft Research Kinect SDK

After installing the Kinect SDK, the %MSRKINECTSDK% environment variable appears in the system; it contains the path to the folder where the SDK was installed. This folder has two subdirectories: inc, with the header files, and lib, with the library. Add the path to the header files to the compiler settings of our test application, and the library path to the linker settings.

Getting a list of devices

At this point we have built all the dependencies and have an application template. Now we can start writing code that uses the Kinect SDK directly.

The Kinect SDK provides functionality for working with several Kinect devices connected to one computer. This is the more universal approach when developing an application, since we do not know in advance how many devices we will need. Therefore, we will use this particular API.

To retrieve the number of devices, use the MSR_NuiGetDeviceCount() function. It takes a pointer to an integer variable, into which the number of available sensors is written on success:

NUIAPI HRESULT MSR_NuiGetDeviceCount(

int * pCount

);

Each Kinect device has its own unique identifier, which can be obtained using the INuiInstance::MSR_NuiGetPropsBlob() method. This method takes as parameters:

- Property ID (in the beta version of the SDK there can be only one value, INDEX_UNIQUE_DEVICE_NAME)

- Pointer to a variable in which the result will be written

- The amount of memory available to record the result (for example, the length of a string). In the beta version of the SDK, this parameter is not used.

virtual bool MSR_NuiGetPropsBlob(

MsrNui::NUI_PROPSINDEX Index,

void * pBlob,

DWORD * pdwInOutSize

);

Armed with this knowledge, we can implement retrieval of the device list in our application.

wxKinectHelper.h

#pragma once

#include <vector>

interface INuiInstance;

class KinectHelper

{

protected :

typedef std::pair<INuiInstance *, HANDLE> InstanceInfo;

typedef std::vector<InstanceInfo> InstanceVector;

public :

KinectHelper();

virtual ~KinectHelper();

size_t GetDeviceCount();

wxString GetDeviceName(size_t index);

bool IsDeviceOK(size_t deviceIndex);

protected :

InstanceVector m_Instances;

void Finalize();

InstanceInfo * GetInstanceByIndex(size_t index);

};

wxKinectHelper.cpp

#include <wx/wx.h>

#include "msr_nuiapi.h"

#include "KinectHelper.h"

KinectHelper::KinectHelper()

{

}

KinectHelper::~KinectHelper()

{

Finalize();

}

size_t KinectHelper::GetDeviceCount()

{

int result(0);

if (FAILED(MSR_NuiGetDeviceCount(&result))) return 0;

return (size_t)result;

}

KinectHelper::InstanceInfo * KinectHelper::GetInstanceByIndex(size_t index)

{

for (InstanceVector::iterator i = m_Instances.begin();

i != m_Instances.end(); i++)

{

// Return the cached entry if this device has already been opened.

if ((*i).first->InstanceIndex() == ( int )index) return &(*i);

}

// Not cached yet: create the instance and remember it.

// (Previously a new instance was created only while the cache was empty.)

INuiInstance * instance = NULL;

if (!FAILED(MSR_NuiCreateInstanceByIndex(( int )index, &instance)))

{

InstanceInfo info;

info.first = instance;

info.second = NULL;

m_Instances.push_back(info);

return &(m_Instances.back());

}

return NULL;

}
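A side note on GetInstanceByIndex(): it returns a pointer to an element of a std::vector, and a later push_back may reallocate the vector and invalidate pointers that were handed out earlier. The following is a minimal, SDK-free sketch of the same find-or-create lookup built on std::deque, whose push_back keeps references to existing elements valid. FakeInstance, InstanceCache and GetOrCreate are hypothetical stand-ins invented for this illustration; they are not part of the Kinect SDK or of the article's code.

```cpp
#include <cstddef>
#include <deque>
#include <utility>

// Hypothetical stand-in for INuiInstance; only the index matters here.
struct FakeInstance
{
    int index;
    int InstanceIndex() const { return index; }
};

// Hypothetical cache that mirrors the role of KinectHelper::m_Instances.
class InstanceCache
{
public:
    typedef std::pair<FakeInstance, void *> InstanceInfo;

    // Returns the cached entry for the given index, creating it on first use.
    InstanceInfo * GetOrCreate(std::size_t index)
    {
        for (std::deque<InstanceInfo>::iterator i = m_Instances.begin();
             i != m_Instances.end(); ++i)
        {
            if (i->first.InstanceIndex() == (int)index) return &(*i);
        }
        // Not found: append a new entry. std::deque::push_back does not
        // invalidate pointers or references to elements already stored.
        FakeInstance instance = { (int)index };
        m_Instances.push_back(InstanceInfo(instance, (void *)0));
        return &m_Instances.back();
    }

    std::size_t Size() const { return m_Instances.size(); }

private:
    std::deque<InstanceInfo> m_Instances;
};
```

With this layout, a pointer obtained for device 0 stays usable after entries for other devices are added. With a std::vector, the same guarantee would require reserving capacity up front or handing out indexes instead of pointers.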

void KinectHelper::Finalize()

{

for (InstanceVector::const_iterator i = m_Instances.begin();

i != m_Instances.end(); i++)

{

if ((*i).first && (*i).second)

{

(*i).first->NuiShutdown();

MSR_NuiDestroyInstance((*i).first);

}

}

}

wxString KinectHelper::GetDeviceName(size_t index)

{

BSTR result;

DWORD size;

InstanceInfo * info = GetInstanceByIndex(index);

if (info != NULL)

{

INuiInstance * instance = info->first;

if (instance != NULL && instance->MSR_NuiGetPropsBlob(

MsrNui::INDEX_UNIQUE_DEVICE_NAME, &result, &size))

{

wxString name = result;

SysFreeString(result);

return name;

}

}

return wxT( "Unknown Kinect Sensor" );

}

bool KinectHelper::IsDeviceOK(size_t deviceIndex)

{

return GetInstanceByIndex(deviceIndex) != NULL;

}

The InstanceInfo structure contains a pointer to an INuiInstance instance, from which we can get the device name, as well as a handle to the stream in which the image is captured (discussed later).

The KinectHelper class contains a vector of InstanceInfo structures and methods for getting the number of devices and the name of each device. The KinectHelper destructor calls the Finalize() method, which closes all open image capture streams and then deletes all instances of INuiInstance.

Now we need to add the functionality for getting the list of devices to our application.

KinectTestMainFrame.h

...

class KinectTestMainFrame: public wxFrame, public wxThreadHelper

{

...

void ShowDevices();

...

KinectHelper * m_KinectHelper;

};

...

KinectTestMainFrame.cpp

...

void KinectTestMainFrame::ShowDevices()

{

size_t count = m_KinectHelper->GetDeviceCount();

m_DeviceListBox->Clear();

for (size_t i = 0; i < count; ++i)

{

int item = m_DeviceListBox->Append(

m_KinectHelper->GetDeviceName(i));

m_DeviceListBox->SetClientData(item, ( void *)i);

}

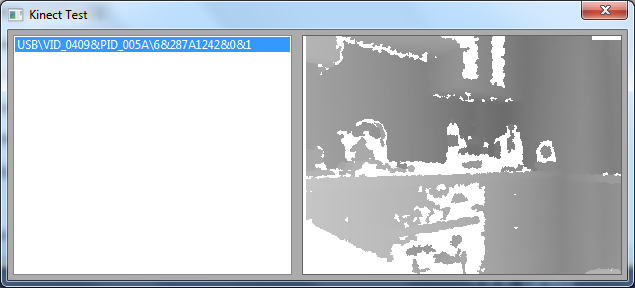

}As a result, after launching the application, we will receive a list of available Kinect devices (Fig. 5):

Getting the image from Kinect

Before you start capturing an image from a device, you need to initialize it. This is done using the INuiInstance::NuiInitialize() method, which takes a bit mask describing the device subsystems we plan to use (depth sensor, camera, or player detection in the video).

HRESULT NuiInitialize(

DWORD dwFlags

);

To receive an image from Kinect, you need to open an image capture stream. This is done with the INuiInstance::NuiImageStreamOpen() method, which takes as parameters:

- Image type (color image, depth buffer, etc.)

- Resolution (from 80x60 to 1280x1024)

- Image processing flags (not used in the SDK beta)

- The number of cached frames (the maximum value, NUI_IMAGE_STREAM_FRAME_LIMIT_MAXIMUM, is currently 4)

- Handle of the event that will be signaled when a new frame is received (an optional parameter, but in practice it turned out that if you pass NULL, the capture stream may not start)

- Pointer to a variable that receives the handle of the image capture stream when the function completes successfully.

To stop capturing images from a device, call the INuiInstance::NuiShutdown() method. When you have finished working with the INuiInstance instance, free it using the MSR_NuiDestroyInstance() function, passing a pointer to the INuiInstance object as the parameter.

Depth Buffer

To start acquiring the depth buffer, call the INuiInstance::NuiImageStreamOpen() method and pass NUI_IMAGE_TYPE_DEPTH_AND_PLAYER_INDEX or NUI_IMAGE_TYPE_DEPTH as the first parameter. The buffer best suited for subsequent processing was obtained with NUI_IMAGE_TYPE_DEPTH_AND_PLAYER_INDEX. In the source code, such a call looks like this:

if (FAILED(info->first->NuiImageStreamOpen(NUI_IMAGE_TYPE_DEPTH_AND_PLAYER_INDEX,

NUI_IMAGE_RESOLUTION_320x240, 0,

3,

hDepthFrameEvent,

&info->second))) { /* Handle error here */ }

After this call, info->second holds a handle to the image capture stream. The hDepthFrameEvent event can be created using the CreateEvent() function.

When a new image is available, the hDepthFrameEvent event is signaled. Waiting for this event can be implemented with the WaitForMultipleObjects() or WaitForSingleObject() function.

You can get the buffer from the device using the NuiImageStreamGetNextFrame() method, which takes as parameters:

- Capture stream handle

- Timeout for waiting for the buffer, in milliseconds

- Pointer to a NUI_IMAGE_FRAME structure to which information about the received buffer will be written

virtual HRESULT NuiImageStreamGetNextFrame(

_In_ HANDLE hStream,

_In_ DWORD dwMillisecondsToWait,

_Deref_out_ CONST NUI_IMAGE_FRAME **ppcImageFrame

);

In the resulting NUI_IMAGE_FRAME instance, we are currently most interested in the NuiImageBuffer *pFrameTexture field.

To work directly with the buffer data, call the LockRect() method. LockRect() takes four parameters, of which two are used in the beta version of the API.

Pass 0 as the first parameter and, as the second, a pointer to a KINECT_LOCKED_RECT structure, which receives the data for working with the buffer when the function completes successfully. We pass NULL as the third parameter and 0 as the fourth.

STDMETHODIMP LockRect(

UINT Level,

KINECT_LOCKED_RECT* pLockedRect,

CONST RECT* pRectUsuallyNull,

DWORD Flags

);

Next, in the KINECT_LOCKED_RECT structure we are interested in the pBits field, which contains the depth data itself. Each pixel of the image occupies 2 bytes in the buffer. Judging by the FAQ on the official forum, the data format is as follows:

- When using the NUI_INITIALIZE_FLAG_USES_DEPTH_AND_PLAYER_INDEX flag, the 3 lower bits hold the player index and the next 12 bits hold the depth value; the upper bit is not used. This matches the mask and shift in the code below.

- When using the NUI_INITIALIZE_FLAG_USES_DEPTH flag, the 12 lower bits hold the depth value and the rest are not used.

To obtain a grayscale image, we need to normalize the depth value to the range from 0 to 255. You can do it like this:

USHORT RealDepth = (s & 0xfff8) >> 3;

BYTE l = 255 - (BYTE)(256*RealDepth/0x0fff);

RGBQUAD q;

q.rgbRed = q.rgbBlue = q.rgbGreen = l;

return q;

When you have finished working with the resulting image, free the memory allocated for the buffer. This is done with the NuiImageStreamReleaseFrame() method, which takes a stream handle and a pointer to a NUI_IMAGE_FRAME instance as parameters.

Let's summarize what we have at the moment:

- To start the capture, initialize the device using the NuiInitialize() method.

- Then open the capture stream using the NuiImageStreamOpen() method.

- When a new image arrives, the event whose handle we passed to NuiImageStreamOpen() is signaled.

- After the event is signaled, get a frame using the NuiImageStreamGetNextFrame() method.

- Then lock the buffer using the NuiImageBuffer::LockRect() method.

- After that, go through the buffer and get the color of each pixel by normalizing the depth value.

- Release the buffer using the NuiImageStreamReleaseFrame() method.

- To stop capturing images from the device, de-initialize it with the NuiShutdown() method.
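The normalization step can be tried out without the SDK. One detail worth knowing: in the expression `255 - (BYTE)(256*RealDepth/0x0fff)`, a depth of 0x0fff or more makes the intermediate value reach 256 or higher, which wraps around when cast to BYTE, so the farthest pixels can come out white instead of black. Below is a minimal sketch that clamps the depth first. It assumes the bit layout implied by the article's mask and shift (player index in the 3 lower bits), and the names PlayerIndex, RawDepth and DepthToGray are made up for this example.

```cpp
#include <cstdint>

// Assumed layout, taken from the mask and shift used in this article:
// bits 0..2 hold the player index, the bits above them hold the depth.
inline unsigned PlayerIndex(std::uint16_t packed) { return packed & 0x0007u; }
inline unsigned RawDepth(std::uint16_t packed) { return (packed & 0xfff8u) >> 3; }

// Maps a depth value to a gray level: near is bright, far is dark.
// Depths above maxDepth are clamped so the result never wraps around.
inline std::uint8_t DepthToGray(unsigned depth, unsigned maxDepth = 0x0fffu)
{
    if (depth > maxDepth) depth = maxDepth;
    return static_cast<std::uint8_t>(255u - (255u * depth) / maxDepth);
}
```

For example, `DepthToGray(RawDepth(value))` gives 255 for a depth of 0 and 0 for any depth at or beyond 0x0fff.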

Now let's see how you can put all this into practice:

wxKinectHelper.h

class KinectHelper

{

...

const wxSize & GetFrameSize();

BYTE * CreateDataBuffer();

void FreeDataBuffer(BYTE * data);

size_t GetDataBufferLength();

bool StartGrabbing(size_t deviceIndex, HANDLE hDepthFrameEvent);

bool ReadKinectFrame(size_t deviceIndex, BYTE * data);

bool IsDeviceOK(size_t deviceIndex);

bool IsGrabbingStarted(size_t deviceIndex);

static RGBQUAD Nui_ShortToQuad_Depth( USHORT s );

protected :

InstanceVector m_Instances;

wxSize m_FrameSize;

...

};

wxKinectHelper.cpp

...

void ReadLockedRect(KINECT_LOCKED_RECT & LockedRect, int w, int h, BYTE * data)

{

if ( LockedRect.Pitch != 0 )

{

BYTE * pBuffer = (BYTE*) LockedRect.pBits;

// draw the bits to the bitmap

USHORT * pBufferRun = (USHORT*) pBuffer;

for ( int y = 0 ; y < h ; y++ )

{

for ( int x = 0 ; x < w ; x++ )

{

RGBQUAD quad = KinectHelper::Nui_ShortToQuad_Depth( *pBufferRun );

pBufferRun++;

int offset = (w * y + x) * 3;

data[offset + 0] = quad.rgbRed;

data[offset + 1] = quad.rgbGreen;

data[offset + 2] = quad.rgbBlue;

}

}

}

}
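ReadLockedRect() above checks that LockedRect.Pitch is non-zero but then walks the buffer as if the rows were tightly packed. If the pitch (bytes per row) were ever larger than w * 2, the rows would drift. Nothing in this article says that happens with the beta SDK, so treat the following as a defensive sketch only: a standalone, SDK-free variant that honors the pitch. DepthRowsToRgb is a hypothetical name, and the bit layout is the one assumed from the article's mask and shift.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Converts a 16-bit-per-pixel depth image to a tightly packed RGB buffer.
// src:   first byte of the source image
// pitch: bytes per source row (may be larger than w * 2)
// dst:   must hold at least w * h * 3 bytes
inline void DepthRowsToRgb(const unsigned char * src, int pitch,
                           int w, int h, unsigned char * dst)
{
    for (int y = 0; y < h; ++y)
    {
        // Start of row y in the source, honoring the pitch.
        const unsigned char * row = src + static_cast<std::size_t>(y) * pitch;
        for (int x = 0; x < w; ++x)
        {
            std::uint16_t packed;
            // memcpy avoids unaligned or aliasing-unsafe reads.
            std::memcpy(&packed, row + x * 2, sizeof(packed));
            unsigned depth = (packed & 0xfff8u) >> 3;
            if (depth > 0x0fffu) depth = 0x0fffu; // clamp, see above
            unsigned char gray =
                static_cast<unsigned char>(255u - (255u * depth) / 0x0fffu);
            unsigned char * out =
                dst + (static_cast<std::size_t>(y) * w + x) * 3;
            out[0] = out[1] = out[2] = gray;
        }
    }
}
```

In ReadLockedRect() terms, the call would take LockedRect.pBits and LockedRect.Pitch as the first two arguments.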

...

BYTE * KinectHelper::CreateDataBuffer()

{

size_t length = GetDataBufferLength();

BYTE * result = (BYTE*)CoTaskMemAlloc(length);

memset(result, 0, length);

return result;

}

size_t KinectHelper::GetDataBufferLength()

{

return m_FrameSize.GetWidth() * m_FrameSize.GetHeight() * 3;

}

void KinectHelper::FreeDataBuffer(BYTE * data)

{

CoTaskMemFree((LPVOID)data);

}

void KinectHelper::Finalize()

{

for (InstanceVector::const_iterator i = m_Instances.begin();

i != m_Instances.end(); i++)

{

if ((*i).first && (*i).second)

{

(*i).first->NuiShutdown();

MSR_NuiDestroyInstance((*i).first);

}

}

}

bool KinectHelper::StartGrabbing(size_t deviceIndex, HANDLE hDepthFrameEvent)

{

do

{

InstanceInfo * info = GetInstanceByIndex(deviceIndex);

if (!info || !info->first) break ;

if (FAILED(info->first->NuiInitialize(

NUI_INITIALIZE_FLAG_USES_DEPTH_AND_PLAYER_INDEX))) break ;

if (FAILED(info->first->NuiImageStreamOpen(

NUI_IMAGE_TYPE_DEPTH_AND_PLAYER_INDEX,

NUI_IMAGE_RESOLUTION_320x240, 0,

3,

hDepthFrameEvent,

&info->second))) break ;

// Both calls succeeded, so report success to the caller.

return true ;

}

while ( false );

return false ;

}

bool KinectHelper::IsDeviceOK(size_t deviceIndex)

{

return GetInstanceByIndex(deviceIndex) != NULL;

}

bool KinectHelper::IsGrabbingStarted(size_t deviceIndex)

{

InstanceInfo * info = GetInstanceByIndex(deviceIndex);

return (info != NULL && info->first != NULL && info->second != NULL);

}

bool KinectHelper::ReadKinectFrame(size_t deviceIndex, BYTE * data)

{

do

{

if (deviceIndex < 0) break ;

InstanceInfo * info = GetInstanceByIndex((size_t)deviceIndex);

if (!info || !info->second) break ;

const NUI_IMAGE_FRAME * pImageFrame;

// Call through the instance so that the frame comes from the selected device.

if (FAILED(info->first->NuiImageStreamGetNextFrame(

info->second, 200, &pImageFrame))) break ;

NuiImageBuffer * pTexture = pImageFrame->pFrameTexture;

KINECT_LOCKED_RECT LockedRect;

pTexture->LockRect( 0, &LockedRect, NULL, 0 );

ReadLockedRect(LockedRect, m_FrameSize.GetWidth(),

m_FrameSize.GetHeight(), data);

info->first->NuiImageStreamReleaseFrame(info->second, pImageFrame);

return true ;

}

while ( false );

return false ;

}

RGBQUAD KinectHelper::Nui_ShortToQuad_Depth( USHORT s )

{

USHORT RealDepth = (s & 0xfff8) >> 3;

BYTE l = 255 - (BYTE)(256*RealDepth/0x0fff);

RGBQUAD q;

q.rgbRed = q.rgbBlue = q.rgbGreen = l;

return q;

}

KinectTestMainFrame.h

class KinectTestMainFrame: public wxFrame, public wxThreadHelper

{

...

void OnDEVICELISTBOXSelected( wxCommandEvent& event );

...

void ShowDevices();

void StopGrabbing();

HANDLE m_NewDepthFrameEvent;

KinectHelper * m_KinectHelper;

BYTE * m_pDepthBuffer;

wxImage * m_CurrentImage;

int m_SelectedDeviceIndex;

};

KinectTestMainFrame.cpp

...

BEGIN_EVENT_TABLE( KinectTestMainFrame, wxFrame )

EVT_LISTBOX( ID_DEVICE_LISTBOX, KinectTestMainFrame::OnDEVICELISTBOXSelected )

END_EVENT_TABLE()

...

void KinectTestMainFrame::Init()

{

m_NewDepthFrameEvent = CreateEvent( NULL, TRUE, FALSE, NULL );

m_KinectHelper = new KinectHelper;

m_pDepthBuffer = m_KinectHelper->CreateDataBuffer();

m_CurrentImage = new wxImage(

m_KinectHelper->GetFrameSize().GetWidth(),

m_KinectHelper->GetFrameSize().GetHeight(),

m_pDepthBuffer, true );

m_SelectedDeviceIndex = -1;

m_MainSizer = NULL;

m_DeviceListBox = NULL;

m_Canvas = NULL;

}

...

KinectTestMainFrame::~KinectTestMainFrame()

{

StopGrabbing();

wxDELETE(m_CurrentImage);

m_KinectHelper->FreeDataBuffer(m_pDepthBuffer);

wxDELETE(m_KinectHelper);

}

...

wxThread::ExitCode KinectTestMainFrame::Entry()

{

while (!GetThread()->TestDestroy())

{

int mEventIndex = WaitForMultipleObjects(

1, &m_NewDepthFrameEvent, FALSE, 100);

switch (mEventIndex)

{

case 0:

{

wxCriticalSectionLocker lock (m_CS);

m_KinectHelper->ReadKinectFrame(

m_SelectedDeviceIndex, m_pDepthBuffer);

m_Canvas->Refresh();

}

break ;

default :

break ;

}

}

return NULL;

}

...

void KinectTestMainFrame::OnDEVICELISTBOXSelected( wxCommandEvent& event )

{

do

{

StopGrabbing();

size_t deviceIndex =

(size_t)m_DeviceListBox->GetClientData( event .GetInt());

// size_t is unsigned, so only the upper bound needs checking.

if (deviceIndex >= m_KinectHelper->GetDeviceCount()) break ;

m_SelectedDeviceIndex = deviceIndex;

if (!m_KinectHelper->IsDeviceOK(deviceIndex)) break ;

if (!m_KinectHelper->IsGrabbingStarted(deviceIndex))

{

m_KinectHelper->StartGrabbing(

deviceIndex, m_NewDepthFrameEvent);

if (CreateThread() != wxTHREAD_NO_ERROR) break ;

m_Canvas->SetCurrentImage(m_CurrentImage);

GetThread()->Run();

}

}

while ( false );

}

void KinectTestMainFrame::StopGrabbing()

{

if (GetThread())

{

if (GetThread()->IsAlive())

{

GetThread()->Delete();

}

if (m_kind == wxTHREAD_JOINABLE)

{

if (GetThread()->IsAlive())

{

GetThread()->Wait();

}

wxDELETE(m_thread);

}

else

{

m_thread = NULL;

}

}

}

When the application starts, the KinectHelper object allocates memory for the depth buffer in accordance with the resolution (320x240x24). The allocated memory is then passed as an RGB buffer to the m_CurrentImage object.

When a device is selected in the list of available devices, the image capture stream from the device is started and the m_CurrentImage object is associated with the canvas.

The Entry() method waits for a new image from the device. When the image is available, the RGB buffer is filled with new values, and then the canvas is redrawn.

As a result, after starting the application and clicking on the device name in the list, we will get something like this (Fig. 6):

Getting a color image from the camera

To obtain an image from the device's camera, specify the NUI_INITIALIZE_FLAG_USES_COLOR flag when calling the NuiInitialize() method, and specify a resolution of at least 640x480 when calling the NuiImageStreamOpen() method.

As a result, the code will look something like this:

if (FAILED(info->first->NuiInitialize(

NUI_INITIALIZE_FLAG_USES_DEPTH_AND_PLAYER_INDEX|

NUI_INITIALIZE_FLAG_USES_COLOR))) break ;

if (FAILED(info->first->NuiImageStreamOpen(NUI_IMAGE_TYPE_COLOR,

NUI_IMAGE_RESOLUTION_640x480, 0,

3,

hDepthFrameEvent,

&info->second))) break ;

Accordingly, the data in the KINECT_LOCKED_RECT structure is in RGBA format (the RGBQUAD structure, available in the SDK, is quite suitable for accessing the data). Thus, the code to get the RGB buffer will look like this:

if ( LockedRect.Pitch != 0 )

{

BYTE * pBuffer = (BYTE*) LockedRect.pBits;

for ( int y = 0 ; y < h ; y++ )

{

for ( int x = 0 ; x < w ; x++ )

{

RGBQUAD * quad = ((RGBQUAD*)pBuffer) + x;

int offset = (w * y + x) * 3;

data[offset + 0] = quad->rgbRed;

data[offset + 1] = quad->rgbGreen;

data[offset + 2] = quad->rgbBlue;

}

pBuffer += LockedRect.Pitch;

}

}

Player Position Tracking (Skeleton Tracking)

The algorithm for obtaining and displaying the segments of the players' "skeleton" differs from the acquisition of ordinary images.

To enable skeleton tracking, pass the NUI_INITIALIZE_FLAG_USES_SKELETON flag to NuiInitialize(), and then call NuiSkeletonTrackingEnable(). Its first parameter is the handle of the event that will be signaled when a new portion of skeleton data arrives; the second is a set of flags (the beta version of the SDK ignores this parameter, so you can pass 0).

To stop receiving skeletal segments, call the NuiSkeletonTrackingDisable() method.

In code, it looks like this:

if (FAILED(info->first->NuiSkeletonTrackingEnable(hSkeletonFrameEvent, 0)))

{ /* error */ };

You can get a data buffer containing information about the position of players using the NuiSkeletonGetNextFrame() method, which takes as parameters:

- Timeout for waiting for the buffer (in milliseconds)

- A pointer to the NUI_SKELETON_FRAME structure, which, if the function completes successfully, will contain a pointer to a data buffer.

After calling the NuiSkeletonGetNextFrame() method, we get an instance of the NUI_SKELETON_FRAME structure. Let's look at it in more detail.

typedef struct _NUI_SKELETON_FRAME {

LARGE_INTEGER liTimeStamp;

DWORD dwFrameNumber;

DWORD dwFlags;

Vector4 vFloorClipPlane;

Vector4 vNormalToGravity;

NUI_SKELETON_DATA SkeletonData[NUI_SKELETON_COUNT];

} NUI_SKELETON_FRAME;

- liTimeStamp - the date/time of receiving the depth buffer from which the skeleton segments were obtained.

- dwFlags - a bitmask containing flags.

- vFloorClipPlane - floor coordinates (calculated inside the library), which were used to cut off everything that is below the floor.

- vNormalToGravity - a normal vector.

- dwFrameNumber - frame number.

- SkeletonData - an array of NUI_SKELETON_DATA structures, each of which contains data about the segmentation of the skeleton of one player.

As can be seen from the description of the NUI_SKELETON_FRAME structure, a limited number of players is supported (in the current SDK version, the value of NUI_SKELETON_COUNT is 6).

Now consider the NUI_SKELETON_DATA structure:

typedef struct _NUI_SKELETON_DATA {

NUI_SKELETON_TRACKING_STATE eTrackingState;

DWORD dwTrackingID;

DWORD dwEnrollmentIndex;

DWORD dwUserIndex;

Vector4 Position;

Vector4 SkeletonPositions[NUI_SKELETON_POSITION_COUNT];

NUI_SKELETON_POSITION_TRACKING_STATE

eSkeletonPositionTrackingState[NUI_SKELETON_POSITION_COUNT];

DWORD dwQualityFlags;

} NUI_SKELETON_DATA;

- eTrackingState - a value from the NUI_SKELETON_TRACKING_STATE enumeration. It may indicate that the player was not found, that only the coordinates of the player were found (without skeleton segments), or that both the coordinates and the skeletal segments were found.

- dwEnrollmentIndex - judging by the documentation (p. 20), not used in the current version.

- dwUserIndex - in the current version of the SDK always equal to XUSER_INDEX_NONE.

- dwTrackingID - number of the player being tracked.

- Position - the coordinates of the player.

- SkeletonPositions - the list of coordinates of the joints of the skeleton segments.

- eSkeletonPositionTrackingState - a list of flags that indicate whether the joints of the skeletal segments were found.

As can be seen from the description of the NUI_SKELETON_DATA structure, the number of supported joints is limited to NUI_SKELETON_POSITION_COUNT.

Now let's look at an implementation of obtaining the players' coordinates using the API described above:

KinectHelper.h

...

struct KinectStreams

{

HANDLE hDepth;

HANDLE hColor;

HANDLE hSkeleton;

KinectStreams() : hDepth(NULL), hColor(NULL), hSkeleton(NULL) {}

};

...

KinectHelper.cpp

void KinectHelper::Finalize()

{

for (InstanceVector::const_iterator i = m_Instances.begin();

i != m_Instances.end(); i++)

{

if ((*i).first)

{

...

if ((*i).second.hSkeleton != NULL)

{

(*i).first->NuiSkeletonTrackingDisable();

}

MSR_NuiDestroyInstance((*i).first);

}

}

}

bool KinectHelper::StartGrabbing(size_t deviceIndex,

HANDLE hDepthFrameEvent,

HANDLE hColorFrameEvent,

HANDLE hSkeletonFrameEvent)

{

do

{

if (hDepthFrameEvent == NULL &&

hColorFrameEvent == NULL &&

hSkeletonFrameEvent == NULL) break ;

InstanceInfo * info = GetInstanceByIndex(deviceIndex);

if (!info || !info->first) break ;

if (FAILED(info->first->NuiInitialize(

NUI_INITIALIZE_FLAG_USES_DEPTH_AND_PLAYER_INDEX |

NUI_INITIALIZE_FLAG_USES_COLOR |

NUI_INITIALIZE_FLAG_USES_SKELETON))) break ;

...

if (hSkeletonFrameEvent != NULL)

{

if (FAILED(info->first->NuiSkeletonTrackingEnable(

hSkeletonFrameEvent, 0))) break ;

info->second.hSkeleton = hSkeletonFrameEvent;

}

// All requested streams were set up successfully
return true ;

}

while ( false );

return false ;

}

void * KinectHelper::ReadSkeletonFrame(size_t deviceIndex)

{

do

{

InstanceInfo * info = GetInstanceByIndex(deviceIndex);

// Skeleton tracking must have been enabled in StartGrabbing()
if (!info || !info->first || !info->second.hSkeleton) break ;

NUI_SKELETON_FRAME * frame = new NUI_SKELETON_FRAME;

if (FAILED(info->first->NuiSkeletonGetNextFrame(200, frame)))

{

// Do not leak the frame when no data arrives within the timeout
delete frame;

break ;

}

return frame;

}

while ( false );

return NULL;

}

Drawing the player's skeleton

At this point we know how to:

- Initialize the device to obtain player positions.

- Start the stream that captures player positions.

- Get a buffer containing the coordinates of the players' skeleton segments.

- Get to the required data in that buffer.

- Stop the stream that captures player positions.

Now we need to display the received data in the application.

Before doing anything with the data from NUI_SKELETON_FRAME, you need to send it for preprocessing. The NuiTransformSmooth() function does this: it filters the joint coordinates to avoid jerks and sudden movements. NuiTransformSmooth() takes a pointer to a NUI_SKELETON_FRAME structure and, optionally, a pointer to a NUI_TRANSFORM_SMOOTH_PARAMETERS structure containing the preprocessing parameters.

HRESULT NuiTransformSmooth(

NUI_SKELETON_FRAME *pSkeletonFrame,

CONST NUI_TRANSFORM_SMOOTH_PARAMETERS *pSmoothingParams

);

To display the skeleton segments, their coordinates must be converted into image coordinates. This can be done with the NuiTransformSkeletonToDepthImageF() function, which takes the following parameters:

- The coordinates of a skeleton joint as a Vector4 structure.

- A pointer to a variable that receives the X coordinate.

- A pointer to a variable that receives the Y coordinate.

VOID NuiTransformSkeletonToDepthImageF(

Vector4 vPoint,

_Out_ FLOAT *pfDepthX,

_Out_ FLOAT *pfDepthY

);

Once the coordinates of all the joints have been obtained, they can be drawn on the canvas using ordinary graphic primitives.
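The last step can be sketched without the SDK or wxWidgets. The listing that follows multiplies the values returned by NuiTransformSkeletonToDepthImageF() by the image size, so this sketch treats them as normalized to the 0..1 range; that reading is an assumption. The names Pixel, ToPixel and BuildPolyline are hypothetical helpers introduced only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

namespace sketch {

struct Pixel { int x; int y; };

// Maps a normalized coordinate pair onto an image of the given size,
// rounding to the nearest pixel (same arithmetic as the article's listing).
inline Pixel ToPixel(float fx, float fy, int width, int height) {
    assert(width > 0 && height > 0);
    Pixel p;
    p.x = static_cast<int>(fx * width + 0.5f);
    p.y = static_cast<int>(fy * height + 0.5f);
    return p;
}

// Builds one polyline (for example spine or arm) by picking joint points
// in the order given by jointOrder; the result can be passed to a
// line-drawing primitive.
inline std::vector<Pixel> BuildPolyline(const std::vector<Pixel>& points,
                                        const std::vector<std::size_t>& jointOrder) {
    std::vector<Pixel> line;
    for (std::size_t i = 0; i < jointOrder.size(); ++i) {
        assert(jointOrder[i] < points.size());
        line.push_back(points[jointOrder[i]]);
    }
    return line;
}

}  // namespace sketch
```

Passing an explicit index list instead of a variable argument list keeps the helper type-safe; the article's own code uses varargs for the same purpose.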

Here is what the code for displaying skeleton segments looks like in practice:

SkeletonPainter.h

#pragma once

#include <wx/wx.h>

class SkeletonPainterImpl;

class SkeletonPainter

{

public :

SkeletonPainter();

~SkeletonPainter();

void DrawSkeleton(wxDC & dc, void * data);

private :

SkeletonPainterImpl * m_Impl;

};

SkeletonPainter.cpp

#include "SkeletonPainter.h"

#if defined(__WXMSW__)

#include "SkeletonPainterImplMSW.h"

#endif

SkeletonPainter::SkeletonPainter()

{

#if defined(__WXMSW__)

m_Impl = new SkeletonPainterImplMSW;

#else

m_Impl = NULL;

#endif

}

SkeletonPainter::~SkeletonPainter()

{

wxDELETE(m_Impl);

}

void SkeletonPainter::DrawSkeleton(wxDC & dc, void * data)

{

if (m_Impl)

{

m_Impl->DrawSkeleton(dc, data);

}

}

SkeletonPainterImpl.h

#pragma once

#include <wx/wx.h>

class SkeletonPainterImpl

{

public :

virtual ~SkeletonPainterImpl() {}

virtual void DrawSkeleton(wxDC & dc, void * data) = 0;

};

SkeletonPainterImplMSW.h

#pragma once

#include "SkeletonPainterImpl.h"

#include "msr_nuiapi.h"

class SkeletonPainterImplMSW : public SkeletonPainterImpl

{

public :

~SkeletonPainterImplMSW();

void DrawSkeleton(wxDC & dc, void * data);

private :

void Nui_DrawSkeleton(wxDC & dc, NUI_SKELETON_DATA * data, size_t index);

void Nui_DrawSkeletonSegment(wxDC & dc, wxPoint * points, int numJoints, ... );

static wxPen m_SkeletonPen[6];

};

SkeletonPainterImplMSW.cpp

#include "SkeletonPainterImplMSW.h"

wxPen SkeletonPainterImplMSW::m_SkeletonPen[6] =

{

// The second wxPen argument is the width, so the style goes third
wxPen(wxColor(255, 0, 0), 1, wxPENSTYLE_SOLID),

...

};

SkeletonPainterImplMSW::~SkeletonPainterImplMSW()

{

}

void SkeletonPainterImplMSW::DrawSkeleton(wxDC & dc, void * data)

{

do

{

NUI_SKELETON_FRAME * frame =

reinterpret_cast<NUI_SKELETON_FRAME*>(data);

if (!frame) break ;

int skeletonCount(0);

for ( int i = 0 ; i < NUI_SKELETON_COUNT ; i++ )

{

if ( frame->SkeletonData[i].eTrackingState ==

NUI_SKELETON_TRACKED )

{

skeletonCount++;

}

}

if (!skeletonCount) break ;

NuiTransformSmooth(frame, NULL);

for (size_t i = 0 ; i < NUI_SKELETON_COUNT ; i++ )

{

if (frame->SkeletonData[i].eTrackingState ==

NUI_SKELETON_TRACKED)

{

Nui_DrawSkeleton(dc, &frame->SkeletonData[i], i );

}

}

}

while ( false );

}

void SkeletonPainterImplMSW::Nui_DrawSkeleton(wxDC & dc,

NUI_SKELETON_DATA * data, size_t index)

{

wxPoint points[NUI_SKELETON_POSITION_COUNT];

float fx(0), fy(0);

wxSize imageSize = dc.GetSize();

for (size_t i = 0; i < NUI_SKELETON_POSITION_COUNT; i++)

{

NuiTransformSkeletonToDepthImageF(

data->SkeletonPositions[i], &fx, &fy);

points[i].x = ( int ) ( fx * imageSize.GetWidth() + 0.5f );

points[i].y = ( int ) ( fy * imageSize.GetHeight() + 0.5f );

}

Nui_DrawSkeletonSegment(dc,points,4,

NUI_SKELETON_POSITION_HIP_CENTER,

NUI_SKELETON_POSITION_SPINE,

NUI_SKELETON_POSITION_SHOULDER_CENTER,

NUI_SKELETON_POSITION_HEAD);

Nui_DrawSkeletonSegment(dc,points,5,

NUI_SKELETON_POSITION_SHOULDER_CENTER,

NUI_SKELETON_POSITION_SHOULDER_LEFT,

NUI_SKELETON_POSITION_ELBOW_LEFT,

NUI_SKELETON_POSITION_WRIST_LEFT,

NUI_SKELETON_POSITION_HAND_LEFT);

Nui_DrawSkeletonSegment(dc,points,5,

NUI_SKELETON_POSITION_SHOULDER_CENTER,

NUI_SKELETON_POSITION_SHOULDER_RIGHT,

NUI_SKELETON_POSITION_ELBOW_RIGHT,

NUI_SKELETON_POSITION_WRIST_RIGHT,

NUI_SKELETON_POSITION_HAND_RIGHT);

Nui_DrawSkeletonSegment(dc,points,5,

NUI_SKELETON_POSITION_HIP_CENTER,

NUI_SKELETON_POSITION_HIP_LEFT,

NUI_SKELETON_POSITION_KNEE_LEFT,

NUI_SKELETON_POSITION_ANKLE_LEFT,

NUI_SKELETON_POSITION_FOOT_LEFT);

Nui_DrawSkeletonSegment(dc,points,5,

NUI_SKELETON_POSITION_HIP_CENTER,

NUI_SKELETON_POSITION_HIP_RIGHT,

NUI_SKELETON_POSITION_KNEE_RIGHT,

NUI_SKELETON_POSITION_ANKLE_RIGHT,

NUI_SKELETON_POSITION_FOOT_RIGHT);

}

void SkeletonPainterImplMSW::Nui_DrawSkeletonSegment(wxDC & dc,

wxPoint * points, int numJoints, ...)

{

va_list vl;

va_start(vl,numJoints);

wxPoint segmentPositions[NUI_SKELETON_POSITION_COUNT];

for ( int iJoint = 0; iJoint < numJoints; iJoint++)

{

NUI_SKELETON_POSITION_INDEX jointIndex =

va_arg(vl,NUI_SKELETON_POSITION_INDEX);

segmentPositions[iJoint].x = points[jointIndex].x;

segmentPositions[iJoint].y = points[jointIndex].y;

}

dc.SetPen(*wxBLUE_PEN);

dc.DrawLines(numJoints, segmentPositions);

va_end(vl);

}

Using the SkeletonPainter class in an application looks like this:

KinectTestMainFrame.h

...

class KinectTestMainFrame: public wxFrame, public wxThreadHelper

{

...

HANDLE m_NewSkeletonFrameEvent;

wxImage m_SkeletonImage;

...

};

...

KinectTestMainFrame.cpp

...

wxThread::ExitCode KinectTestMainFrame::Entry()

{

HANDLE eventHandles[3];

eventHandles[0] = m_NewDepthFrameEvent;

eventHandles[1] = m_NewColorFrameEvent;

eventHandles[2] = m_NewSkeletonFrameEvent;

SkeletonPainter painter;

while (!GetThread()->TestDestroy())

{

int mEventIndex = WaitForMultipleObjects(

_countof(eventHandles), eventHandles, FALSE, 100);

switch (mEventIndex)

{

...

case 2:

{

void * frame = m_KinectHelper->ReadSkeletonFrame(

m_SelectedDeviceIndex);

if (frame)

{

wxBitmap bmp(

m_SkeletonImage.GetWidth(),

m_SkeletonImage.GetHeight());

wxMemoryDC dc(bmp);

painter.DrawSkeleton(dc, frame);

m_KinectHelper->ReleaseSkeletonFrame(frame);

dc.SelectObject(wxNullBitmap);

m_SkeletonImage = bmp.ConvertToImage();

m_SkeletonCanvas->Refresh();

}

}

break ;

default :

break ;

}

}

return NULL;

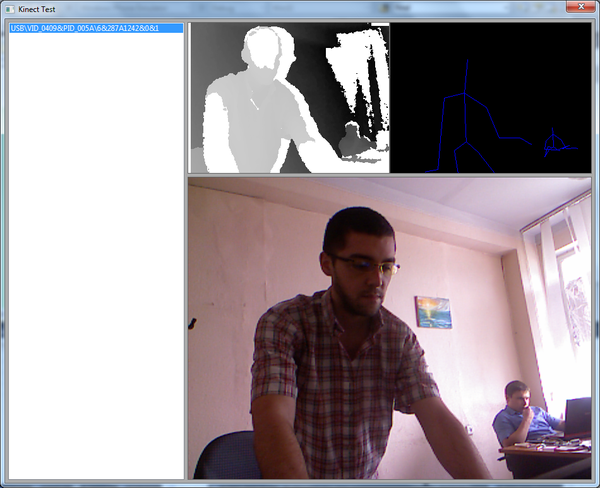

}As a result of the actions described above, we should get approximately the following result (Fig. 7):

Getting rid of platform-specific code in the application

The example above is fine in every respect but one: the project uses a cross-platform library for the user interface, yet part of the GUI code is written against an API that exists only on Windows.

There are several third-party libraries for working with Kinect, such as libfreenect or OpenNI, but at this stage the application code is already tied to the Microsoft SDK.

To resolve this, the code that obtains images from the device can be moved into a separate grabber class, and the functionality of the KinectHelper class can be limited to obtaining the list of devices and creating grabber instances:

KinectGrabberBase.h

#pragma once

#include <wx/wx.h>

class KinectGrabberBase

{

public :

KinectGrabberBase(wxEvtHandler * handler);

virtual ~KinectGrabberBase();

virtual bool GrabDepthFrame(unsigned char * data) = 0;

virtual bool GrabColorFrame(unsigned char * data) = 0;

virtual void * GrabSkeletonFrame() = 0;

virtual bool Start() = 0;

virtual bool Stop() = 0;

virtual bool IsStarted() = 0;

const wxSize & GetDepthFrameSize();

const wxSize & GetColorFrameSize();

protected :

wxSize m_DepthFrameSize;

wxSize m_ColorFrameSize;

wxEvtHandler * m_Handler;

};

BEGIN_DECLARE_EVENT_TYPES()

DECLARE_LOCAL_EVENT_TYPE(KINECT_DEPTH_FRAME_RECEIVED, -1)

DECLARE_LOCAL_EVENT_TYPE(KINECT_COLOR_FRAME_RECEIVED, -1)

DECLARE_LOCAL_EVENT_TYPE(KINECT_SKELETON_FRAME_RECEIVED, -1)

END_DECLARE_EVENT_TYPES()

KinectGrabberBase.cpp

#include "KinectGrabberBase.h"

DEFINE_EVENT_TYPE(KINECT_DEPTH_FRAME_RECEIVED)

DEFINE_EVENT_TYPE(KINECT_COLOR_FRAME_RECEIVED)

DEFINE_EVENT_TYPE(KINECT_SKELETON_FRAME_RECEIVED)

...

KinectGrabberMSW.h

#pragma once

#include "KinectGrabberBase.h"

#include "MSR_NuiApi.h"

class KinectGrabberMSW : public KinectGrabberBase, public wxThreadHelper

{

...

private :

virtual wxThread::ExitCode Entry();

BYTE * CreateDepthDataBuffer();

BYTE * CreateColorDataBuffer();

size_t GetDepthDataBufferLength();

size_t GetColorDataBufferLength();

void FreeDataBuffer(BYTE * data);

bool ReadDepthFrame();

bool ReadColorFrame();

bool ReadSkeletonFrame();

void ReadDepthLockedRect(KINECT_LOCKED_RECT & LockedRect,

int w, int h, BYTE * data);

void ReadColorLockedRect(KINECT_LOCKED_RECT & LockedRect,

int w, int h, BYTE * data);

static RGBQUAD Nui_ShortToQuad_Depth( USHORT s );

void ResetEvents();

void StopThread();

bool CopyLocalBuffer(BYTE * src, BYTE * dst, size_t count);

HANDLE m_NewDepthFrameEvent;

HANDLE m_NewColorFrameEvent;

HANDLE m_NewSkeletonFrameEvent;

HANDLE m_DepthStreamHandle;

HANDLE m_ColorStreamHandle;

BYTE * m_DepthBuffer;

BYTE * m_ColorBuffer;

INuiInstance * m_Instance;

size_t m_DeviceIndex;

NUI_SKELETON_FRAME m_SkeletonFrame;

};

KinectGrabberMSW.cpp

#include "KinectGrabberMSW.h"

KinectGrabberMSW::KinectGrabberMSW(wxEvtHandler * handler, size_t deviceIndex)

: KinectGrabberBase(handler), m_DeviceIndex(deviceIndex), m_Instance(NULL)

{

m_DepthBuffer = CreateDepthDataBuffer();

m_ColorBuffer = CreateColorDataBuffer();

ResetEvents();

do

{

if (FAILED(MSR_NuiCreateInstanceByIndex(( int )m_DeviceIndex, &m_Instance))) break ;

if (FAILED(m_Instance->NuiInitialize(

NUI_INITIALIZE_FLAG_USES_DEPTH_AND_PLAYER_INDEX |

NUI_INITIALIZE_FLAG_USES_COLOR |

NUI_INITIALIZE_FLAG_USES_SKELETON))) break ;

}

while ( false );

}

...

void * KinectGrabberMSW::GrabSkeletonFrame()

{

do

{

if (!GetThread() || !GetThread()->IsAlive() ||

!m_Instance || !m_NewSkeletonFrameEvent) break ;

return &m_SkeletonFrame;

}

while ( false );

return NULL;

}

bool KinectGrabberMSW::Start()

{

do

{

if (!m_Instance) break ;

if (GetThread() && GetThread()->IsAlive()) break ;

if (CreateThread() != wxTHREAD_NO_ERROR) break ;

m_NewDepthFrameEvent = CreateEvent( NULL, TRUE, FALSE, NULL );

m_NewColorFrameEvent = CreateEvent( NULL, TRUE, FALSE, NULL );

m_NewSkeletonFrameEvent = CreateEvent( NULL, TRUE, FALSE, NULL );

if (FAILED(m_Instance->NuiImageStreamOpen(

NUI_IMAGE_TYPE_DEPTH_AND_PLAYER_INDEX,

NUI_IMAGE_RESOLUTION_320x240, 0,

3,

m_NewDepthFrameEvent,

&m_DepthStreamHandle))) break ;

if (FAILED(m_Instance->NuiImageStreamOpen(NUI_IMAGE_TYPE_COLOR,

NUI_IMAGE_RESOLUTION_640x480, 0,

4,

m_NewColorFrameEvent,

&m_ColorStreamHandle))) break ;

if (FAILED(m_Instance->NuiSkeletonTrackingEnable(

m_NewSkeletonFrameEvent, 0))) break ;

GetThread()->Run();

return true ;

}

while ( false );

return false ;

}

...

wxThread::ExitCode KinectGrabberMSW::Entry()

{

HANDLE eventHandles[3];

eventHandles[0] = m_NewDepthFrameEvent;

eventHandles[1] = m_NewColorFrameEvent;

eventHandles[2] = m_NewSkeletonFrameEvent;

while (!GetThread()->TestDestroy())

{

int mEventIndex = WaitForMultipleObjects(

_countof(eventHandles), eventHandles, FALSE, 100);

switch (mEventIndex)

{

case 0: ReadDepthFrame(); break ;

case 1: ReadColorFrame(); break ;

case 2: ReadSkeletonFrame(); break ;

default :

break ;

}

}

return NULL;

}

...

void KinectGrabberMSW::StopThread()

{

if (GetThread())

{

if (GetThread()->IsAlive())

{

GetThread()->Delete();

}

if (m_kind == wxTHREAD_JOINABLE)

{

if (GetThread()->IsAlive())

{

GetThread()->Wait();

}

wxDELETE(m_thread);

}

else

{

m_thread = NULL;

}

}

wxYield();

}

bool KinectGrabberMSW::ReadDepthFrame()

{

do

{

if (!m_Instance) break ;

const NUI_IMAGE_FRAME * pImageFrame;

if (FAILED(NuiImageStreamGetNextFrame(

m_DepthStreamHandle, 200, &pImageFrame))) break ;

NuiImageBuffer * pTexture = pImageFrame->pFrameTexture;

KINECT_LOCKED_RECT LockedRect;

pTexture->LockRect( 0, &LockedRect, NULL, 0 );

ReadDepthLockedRect(LockedRect,

m_DepthFrameSize.GetWidth(),

m_DepthFrameSize.GetHeight(),

m_DepthBuffer);

NuiImageStreamReleaseFrame(m_DepthStreamHandle, pImageFrame);

if (m_Handler)

{

wxCommandEvent e(KINECT_DEPTH_FRAME_RECEIVED, wxID_ANY);

e.SetInt(m_DeviceIndex);

m_Handler->AddPendingEvent(e);

}

return true ;

}

while ( false );

return false ;

}

bool KinectGrabberMSW::ReadColorFrame()

{

do

{

if (!m_Instance) break ;

const NUI_IMAGE_FRAME * pImageFrame;

if (FAILED(NuiImageStreamGetNextFrame(

m_ColorStreamHandle, 200, &pImageFrame))) break ;

NuiImageBuffer * pTexture = pImageFrame->pFrameTexture;

KINECT_LOCKED_RECT LockedRect;

pTexture->LockRect( 0, &LockedRect, NULL, 0 );

ReadColorLockedRect(LockedRect,

m_ColorFrameSize.GetWidth(),

m_ColorFrameSize.GetHeight(),

m_ColorBuffer);

NuiImageStreamReleaseFrame(m_ColorStreamHandle, pImageFrame);

if (m_Handler)

{

wxCommandEvent e(KINECT_COLOR_FRAME_RECEIVED, wxID_ANY);

e.SetInt(m_DeviceIndex);

m_Handler->AddPendingEvent(e);

}

return true ;

}

while ( false );

return false ;

}

bool KinectGrabberMSW::ReadSkeletonFrame()

{

do

{

if (!m_Instance) break ;

if (FAILED(m_Instance->NuiSkeletonGetNextFrame(200, &m_SkeletonFrame))) break ;

if (m_Handler)

{

wxCommandEvent e(KINECT_SKELETON_FRAME_RECEIVED, wxID_ANY);

e.SetInt(m_DeviceIndex);

m_Handler->AddPendingEvent(e);

}

return true ;

}

while ( false );

return false ;

}

void KinectGrabberMSW::ReadDepthLockedRect(KINECT_LOCKED_RECT & LockedRect, int w, int h, BYTE * data)

{

if ( LockedRect.Pitch != 0 )

{

BYTE * pBuffer = (BYTE*) LockedRect.pBits;

USHORT * pBufferRun = (USHORT*) pBuffer;

for ( int y = 0 ; y < h ; y++ )

{

for ( int x = 0 ; x < w ; x++ )

{

RGBQUAD quad = KinectGrabberMSW::Nui_ShortToQuad_Depth( *pBufferRun );

pBufferRun++;

int offset = (w * y + x) * 3;

data[offset + 0] = quad.rgbRed;

data[offset + 1] = quad.rgbGreen;

data[offset + 2] = quad.rgbBlue;

}

}

}

}

void KinectGrabberMSW::ReadColorLockedRect(KINECT_LOCKED_RECT & LockedRect, int w, int h, BYTE * data)

{

if ( LockedRect.Pitch != 0 )

{

BYTE * pBuffer = (BYTE*) LockedRect.pBits;

for ( int y = 0 ; y < h ; y++ )

{

for ( int x = 0 ; x < w ; x++ )

{

RGBQUAD * quad = ((RGBQUAD*)pBuffer) + x;

int offset = (w * y + x) * 3;

data[offset + 0] = quad->rgbRed;

data[offset + 1] = quad->rgbGreen;

data[offset + 2] = quad->rgbBlue;

}

pBuffer += LockedRect.Pitch;

}

}

}

...

KinectHelper.h

#pragma once

// wxString and wxEvtHandler are used in the declarations below
#include <wx/wx.h>

class KinectGrabberBase;

class KinectHelper

{

public :

KinectHelper();

~KinectHelper();

size_t GetDeviceCount();

wxString GetDeviceName(size_t index);

KinectGrabberBase * CreateGrabber(wxEvtHandler * handler, size_t index);

};

KinectHelper.cpp

...

wxString KinectHelper::GetDeviceName(size_t index)

{

BSTR result;

DWORD size;

INuiInstance * instance(NULL);

wxString name = wxT( "Unknown Kinect Sensor" );

if (!FAILED(MSR_NuiCreateInstanceByIndex(index, &instance)))

{

if (instance != NULL)

{

if (instance->MSR_NuiGetPropsBlob(

MsrNui::INDEX_UNIQUE_DEVICE_NAME,

&result, &size))

{

name = result;

SysFreeString(result);

}

MSR_NuiDestroyInstance(instance);

}

}

return name;

}

KinectGrabberBase * KinectHelper::CreateGrabber(wxEvtHandler * handler, size_t index)

{

#if defined(__WXMSW__)

return new KinectGrabberMSW(handler, index);

#else

return NULL;

#endif

}

...

Memory allocation for the RGB buffers, as well as the code that captures images in a separate thread, can now be removed from the form class. The form class will now look like this:

KinectTestMainFrame.h

class KinectTestMainFrame: public wxFrame

{

...

void OnDepthFrame(wxCommandEvent & event );

void OnColorFrame(wxCommandEvent & event );

void OnSkeletonFrame(wxCommandEvent & event );

...

wxImage m_CurrentImage;

int m_SelectedDeviceIndex;

wxImage m_ColorImage;

wxImage m_SkeletonImage;

KinectGrabberBase * m_Grabber;

...

};

KinectTestMainFrame.cpp

...

BEGIN_EVENT_TABLE( KinectTestMainFrame, wxFrame )

...

EVT_COMMAND (wxID_ANY, KINECT_DEPTH_FRAME_RECEIVED, \

KinectTestMainFrame::OnDepthFrame)

EVT_COMMAND (wxID_ANY, KINECT_COLOR_FRAME_RECEIVED, \

KinectTestMainFrame::OnColorFrame)

EVT_COMMAND (wxID_ANY, KINECT_SKELETON_FRAME_RECEIVED, \

KinectTestMainFrame::OnSkeletonFrame)

END_EVENT_TABLE()

...

void KinectTestMainFrame::OnDEVICELISTBOXSelected( wxCommandEvent& event )

{

do

{

size_t deviceIndex =

(size_t)m_DeviceListBox->GetClientData( event .GetInt());

// size_t is unsigned, so only the upper bound needs checking
if (deviceIndex >= m_KinectHelper->GetDeviceCount()) break ;

m_SelectedDeviceIndex = deviceIndex;

StartGrabbing();

}

while ( false );

}

void KinectTestMainFrame::StartGrabbing()

{

StopGrabbing();

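m_Grabber = m_KinectHelper->CreateGrabber( this , m_SelectedDeviceIndex);

// CreateGrabber() returns NULL on platforms without a grabber implementation
if (!m_Grabber) return ;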

m_CurrentImage = wxImage(

m_Grabber->GetDepthFrameSize().GetWidth(),

m_Grabber->GetDepthFrameSize().GetHeight());

m_ColorImage = wxImage(

m_Grabber->GetColorFrameSize().GetWidth(),

m_Grabber->GetColorFrameSize().GetHeight());

m_SkeletonImage = wxImage(

m_Grabber->GetDepthFrameSize().GetWidth(),

m_Grabber->GetDepthFrameSize().GetHeight());

m_DepthCanvas->SetCurrentImage(&m_CurrentImage);

m_ColorCanvas->SetCurrentImage(&m_ColorImage);

m_SkeletonCanvas->SetCurrentImage(&m_SkeletonImage);

if (!m_Grabber->Start())

{

StopGrabbing();

}

}

...

void KinectTestMainFrame::OnDepthFrame(wxCommandEvent & event )

{

do

{

if (!m_Grabber) break ;

m_Grabber->GrabDepthFrame(m_CurrentImage.GetData());

m_DepthCanvas->Refresh();

}

while ( false );

}

void KinectTestMainFrame::OnColorFrame(wxCommandEvent & event )

{

do

{

if (!m_Grabber) break ;

m_Grabber->GrabColorFrame(m_ColorImage.GetData());

m_ColorCanvas->Refresh();

}

while ( false );

}

void KinectTestMainFrame::OnSkeletonFrame(wxCommandEvent & event )

{

do

{

if (!m_Grabber) break ;

SkeletonPainter painter;

wxBitmap bmp(m_SkeletonImage.GetWidth(), m_SkeletonImage.GetHeight());

wxMemoryDC mdc(bmp);

painter.DrawSkeleton(mdc, m_Grabber->GrabSkeletonFrame());

mdc.SelectObject(wxNullBitmap);

m_SkeletonImage = bmp.ConvertToImage();

m_SkeletonCanvas->Refresh();

}

while ( false );

}

As the code shows, when the grabber class receives a new frame, it sends a notification to a wxEvtHandler object (the wxFrame class in wxWidgets is derived from wxEvtHandler). The form has event handlers that are called when notifications arrive from the grabber.

The reason the KinectGrabberBase::GrabSkeletonFrame() method returns void* is also quite simple: if image capture is implemented on top of different SDKs (including unofficial ones), there is no guarantee that all of them will report player positions in identical data structures. In any case, the coordinates have to be sent for post-processing. The code that receives the pointer from the grabber therefore knows which data type to cast it to, and the GUI does not need to know anything about the internal structure of the grabber.

In conclusion

In conclusion, I would like to note that although Microsoft's SDK is in beta, it is quite usable, even though the Kinect control functionality is not fully implemented (libfreenect, for example, lets you control the LED on the device, while the official SDK, according to the documentation, cannot). The library is surprisingly stable, and the developers have clearly taken care to avoid memory leaks. If, for example, you forget to close a stream on exit, the Visual Studio debugger does not report any memory leaks; most likely everything is shut down correctly and freed when the library is unloaded.

The source code of the test application and library can be found on Google Code - wxKinectHelper .

I am counting on the project developing further and on new grabber implementations being added. I am currently trying to tame libfreenect. Out of the box, without any preliminary tinkering, everything worked except receiving images: blinking the LED and driving the motor work perfectly. I am trying to do the same with OpenNI.
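As a rough illustration of how another backend could slot in next to the Microsoft one, here is a minimal, SDK-free sketch of the grabber idea. Everything in the `sketch` namespace (GrabberBase, FakeGrabber, FakeSkeletonFrame, CreateGrabber, CountPlayers) is hypothetical and is not taken from the wxKinectHelper sources; it only mirrors the pattern of an abstract base class, a factory, and an opaque void* skeleton frame that the consumer casts back to the backend's own type.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

namespace sketch {

// Backend-neutral interface, similar in spirit to KinectGrabberBase.
class GrabberBase {
public:
    virtual ~GrabberBase() {}
    virtual const char* BackendName() const = 0;
    // Opaque pointer: each backend returns its own skeleton structure.
    virtual void* GrabSkeletonFrame() = 0;
};

// Hypothetical payload of an imaginary backend.
struct FakeSkeletonFrame { int playerCount; };

class FakeGrabber : public GrabberBase {
public:
    FakeGrabber() { m_Frame.playerCount = 2; }
    virtual const char* BackendName() const { return "fake"; }
    virtual void* GrabSkeletonFrame() { return &m_Frame; }
private:
    FakeSkeletonFrame m_Frame;
};

// Consumer that knows which concrete type the "fake" backend hands out.
inline int CountPlayers(GrabberBase& grabber) {
    void* data = grabber.GrabSkeletonFrame();
    if (!data) return 0;
    return static_cast<FakeSkeletonFrame*>(data)->playerCount;
}

enum Backend { kBackendFake, kBackendNone };

// Factory in the style of KinectHelper::CreateGrabber(): returns NULL
// when no implementation is available for the requested backend.
inline GrabberBase* CreateGrabber(Backend backend) {
    if (backend == kBackendFake) return new FakeGrabber;
    return NULL;
}

}  // namespace sketch
```

The caller owns the returned grabber and deletes it through the base-class pointer, which is why the base class has a virtual destructor.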

Useful links

Microsoft Research Kinect SDK

Development Video with Kinect SDK


Source: https://habr.com/ru/post/123588/
