Automation through integration. Promotional version. UPD

Upd: added screenshots.

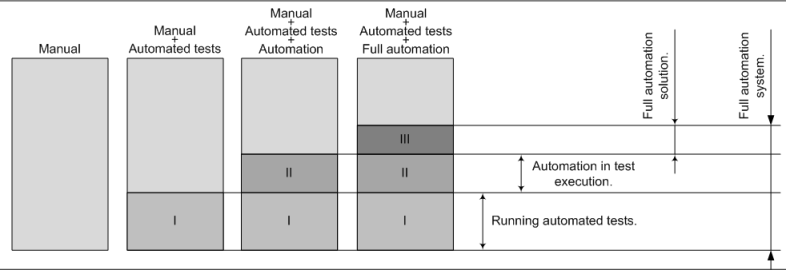

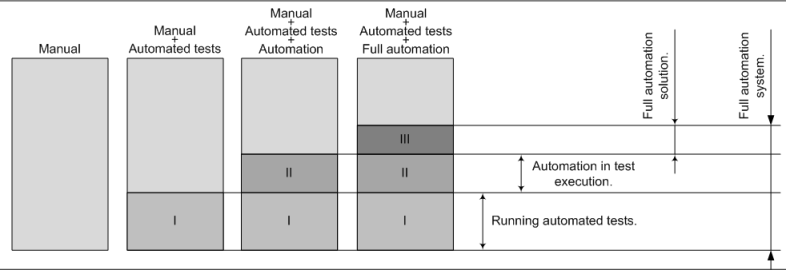

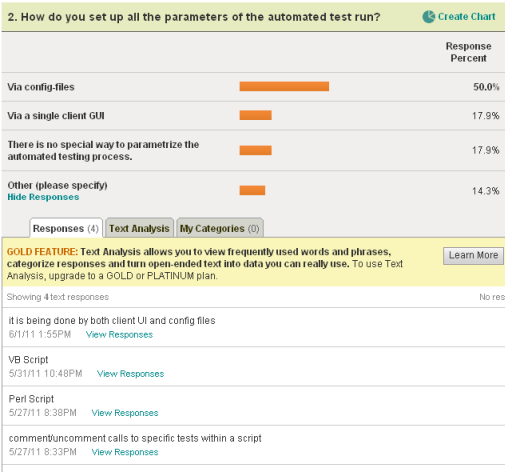

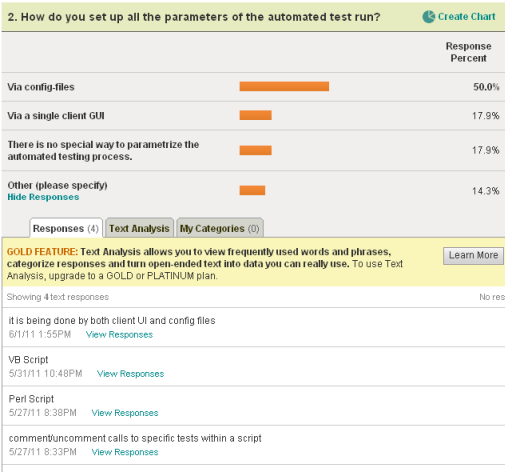

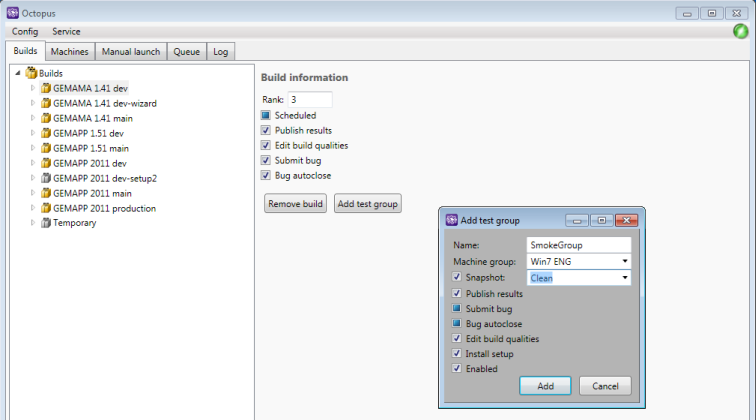

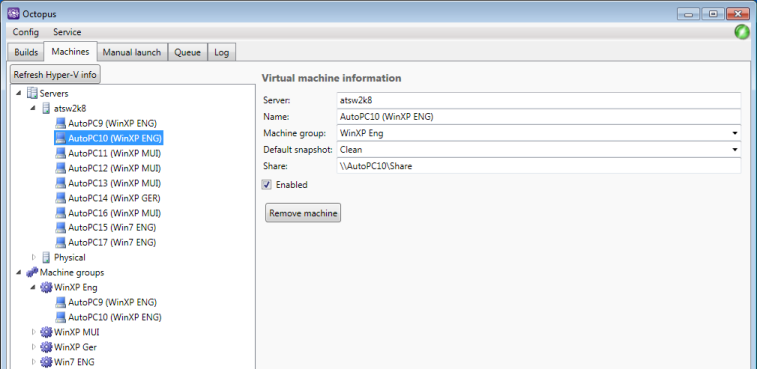

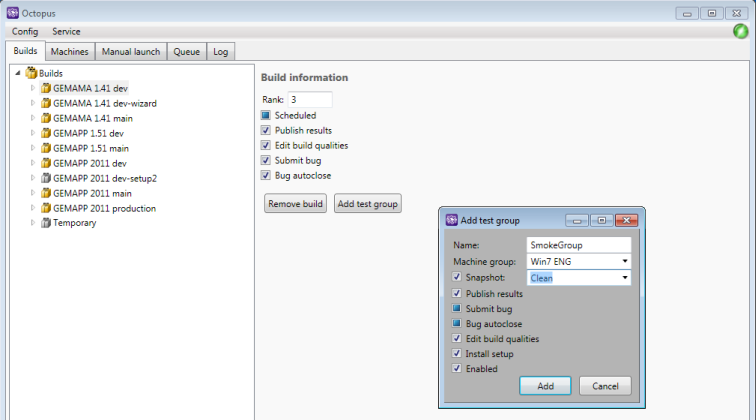

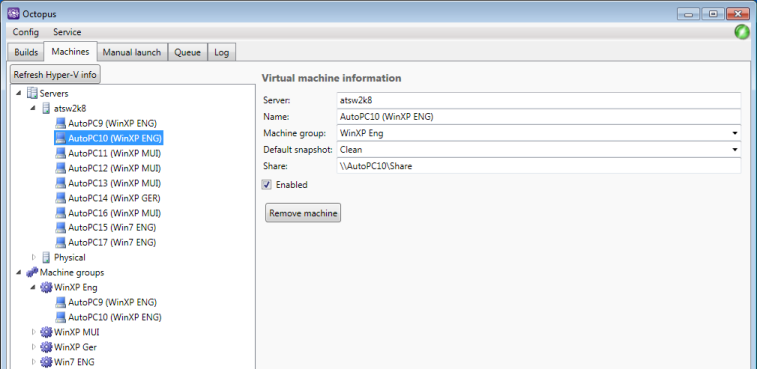

The Software Engineering Forum 2011 took place in Minsk on May 19-20. We gave a talk titled “A New Level of Test Automation”, or, in its longer version, “Automating automated testing through the integration of test tools”. In it, we covered three main topics:

- Levels of test automation in an organization.

- The main issues to pay attention to when automating testing (based on our own experience, the experience of colleagues, and survey results).

- A prototype solution for automated test management (based on Octopus, our in-house development).

Under the cut: the content of the talk and a link to the promotional version of Octopus. Long post.

1. Levels of test automation

Based on our own experience introducing automated tests, the experience of colleagues from other companies we talked to at QA conferences, and the data from our surveys, we identified three main stages that a company passes through on its way to test automation.


A. Initial stage (I)

Along with manual testing, companies are starting to use:

- scripts that copy the installation package of the product under test, install it and partially test it;

- tests written in one of the automated test development environments.

Stage Characteristics

- Actions associated with automated testing are performed manually (running scripts and tests, working with virtual machines, etc.).

- There is no clear organization of test storage (as a rule, tests are stored on the tester's machine and used only by that tester, so they are neither reusable nor adaptable to changes in the interfaces under test).

- Test runs are not systematized and their execution is not monitored (someone may run the tests, or may forget to).

- There is no flow of information between testers and developers about the test status of a build.

- As a rule, there is no full confidence in the tests. Nevertheless, tests and scripts free up a significant share of the tester's time.

This stage is typical primarily of companies with short-term projects, which simply do not have time to take automated testing to a new level. Organizations that are only beginning to introduce automated tests into their software quality control processes, and small firms without clearly described testing processes, are also at this stage.

B. Conscious stage (I + II)

In addition to the tests themselves, part of the testing process is also automated.

Stage Characteristics

- Centralized storage and reuse of tests (function libraries) appear.

- A naming convention for automated tests is introduced.

- Most actions are automated: running tests, starting / stopping virtual machines (in the case of using virtualization), copying the necessary configuration files to a test machine, etc.

- The quality of the tests themselves is improved.

- Test runs are systematized and launched automatically with each new product build.

- Handling of test results (creating / closing bugs) follows clearly defined rules.

This is one of the most common stages. It is typical for companies that have an established testing process and work on medium- and long-term projects, i.e. organizations that need to run regression testing of their product.

C. Advanced level (I + II + III)

The stage of “automating automated testing”. The tautology is intentional: it expresses the idea that all operations of the automated testing cycle are carried out without the tester's participation, from preparing the environment and running tests on the released build to registering errors in the bug tracking system (BTS) and creating reports. As a rule, this is achieved with scripts and configuration files; companies invest in developing an integrated automated test management system less often.

Stage Characteristics

- All operations associated with running autotests are “automated”: environment configuration, virtual machine startup, test execution, bug registration, report creation.

- Bugs are created in the BTS automatically (and, where appropriate, fixed ones are closed) according to all the rules adopted by the company: a responsible person is assigned and the specified fields are filled in.

- Various “gadgets” that increase testing efficiency: groups of tests that run simultaneously, grouping of virtual machines by certain criteria (OS, installed programs, localization, etc.).

- A single interface for parameterizing test execution (rarely found).
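Automatic bug registration "according to the company's rules" comes down to filling a per-project field template from each failed test. The Python sketch below illustrates this under assumptions. The template, the field values and the `FailedTest` / `build_bug_fields` names are all hypothetical. Sending the fields to an actual BTS is left out.

```python
from dataclasses import dataclass

# Hypothetical per-project template: BTS field -> text with {placeholders}.
BUG_TEMPLATE = {
    "Title": "[Autotest] {test_name} failed on build {build}",
    "Assigned to": "{owner}",
    "Found in": "{build}",
    "Severity": "3 - Medium",
    "Description": "Test {test_name} failed on {machine}:\n{message}",
}


@dataclass
class FailedTest:
    test_name: str
    build: str
    machine: str
    message: str


def build_bug_fields(failure: FailedTest, template: dict, owners: dict,
                     default_owner: str) -> dict:
    """Fill every template field for one failed test.

    The responsible person is looked up in a test -> owner map,
    falling back to a default owner (e.g. the QA lead).
    """
    values = {
        "test_name": failure.test_name,
        "build": failure.build,
        "machine": failure.machine,
        "message": failure.message,
        "owner": owners.get(failure.test_name, default_owner),
    }
    return {name: pattern.format(**values) for name, pattern in template.items()}


failure = FailedTest("Login_ValidUser", "1.4.217", "vm-xp-en", "Main window did not appear")
fields = build_bug_fields(failure, BUG_TEMPLATE, {"Login_ValidUser": "j.smith"}, "qa-lead")
print(fields["Title"])  # [Autotest] Login_ValidUser failed on build 1.4.217
```

The template lives in configuration, so changing a company rule means editing data, not code.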

This is the stage that companies strive for first of all if they develop complex, feature-rich software products, work on medium- and long-term projects, and acutely feel the lack of high-quality, timely regression testing.

About 12.5% of respondents to our survey of Habr users said that their company is at this stage.

Unfortunately, the survey does not show how automated test parameterization is done (through a single UI, config files, etc.). The idea to ask this question came after more than 100 answers had already been received, so there was no point in adding it. However, we included it in an English-language survey for members of QA communities on LinkedIn (see the chart below).

The classification above is based on our experience and does not claim to be the only correct one. I wonder how your view differs from ours?

A short digression, or difficulties with regression testing

It is no secret that regular regression testing of new builds is key to consistently high quality of the final product. But due to limited resources, regression testing is often neglected or performed only partially.

Five years ago, our company faced this problem. The number of bugs found on the customer's side exceeded all reasonable limits. The product is a complex desktop system for the automotive industry, consisting of many agents, modules and components. Every month the application gained new features, and the testers managed to check the new functionality and only part of the old. Despite close cooperation between developers and testers, the system “crashed” in places where no one expected it.

It became obvious that the testing processes had to be brought to a new level.

We began easing the workload with autotests, scripts and batch files. This allowed regression testing to be carried out faster and in greater volume. However, introducing automated testing brought many hidden problems that we had not anticipated. We briefly touched on some of them when describing the stages of automation; now we will look at them more closely.

2. Problems in the way of test automation

A. Incomplete automation

The expression "automated testing" is to some extent nominal. Often, the tester still performs many tasks manually: prepares the environment, uploads a new version of the product, copies configuration files, runs autotests on different OSes, registers defects in the BTS, etc. These tasks are simple but time-consuming, and they also increase the likelihood of human error.

Sometimes the time lost can be significant. For example, with data-driven tests every single result matters, so the number of bugs that need to be entered into the defect tracking system can be huge. On average, an experienced specialist needs a little more than 1 minute to create a bug in a BTS with all required fields filled in, and about 15 seconds to close one (*). These labor-intensive activities add little value, and the time spent on them could be used more effectively by putting the testers' intellectual potential to work, for example, on writing new tests.

(*) The measurements were carried out under the following conditions:

1. Defect tracking systems: MS TFS, Mantis, Bugzilla.

2. Participants: 2 testers with 4 years of experience.

During the experiment, 10 bugs were created with the following required fields:

MS TFS: Title, AssignTo, Iteration, Area, Tester, FoundIn, Severity.

Mantis: Category, Summary, Description, Platform, OS, Severity.

Bugzilla: Component, Version, Summary, Description, Severity, Assignee.

The time spent opening a BTS was also taken into account.
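The measured timings can be turned into a quick estimate of the manual overhead. This Python sketch assumes exactly 60 s per created bug (the measured value was a little more) and 15 s per closed bug, so the result is a lower bound. The bug counts in the example are invented for illustration.

```python
# Per-bug timings from the measurements above; "a little more than 1 minute"
# is rounded down to 60 s, so the estimate is a lower bound.
CREATE_BUG_S = 60
CLOSE_BUG_S = 15


def manual_bts_minutes(bugs_created: int, bugs_closed: int) -> float:
    """Lower-bound estimate of tester time spent on BTS bookkeeping, in minutes."""
    total_seconds = bugs_created * CREATE_BUG_S + bugs_closed * CLOSE_BUG_S
    return total_seconds / 60


# Hypothetical data-driven run: 200 new failures, 80 previously reported bugs now fixed.
print(manual_bts_minutes(200, 80))  # 200*60 + 80*15 = 13200 s, prints 220.0
```

In this example, a single run costs more than three and a half hours of pure bookkeeping.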

B. Lack of a convenient and flexible test management tool

Even companies at an advanced stage of test automation rarely have a common interface for managing all tasks related to automated testing. Most often, parameterization is done through config files (50% of respondents), which are often not validated. As a result, the number of errors grows and the overall effectiveness of automated testing drops.
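Validating a config file before a run is cheap compared with losing a night of tests to a typo. Here is a minimal Python sketch of such a check. It assumes JSON config files and a hypothetical set of required keys (`build_path`, `machine`, `timeout_s`, `tests`); a real system would check against its own schema.

```python
import json

# Hypothetical schema: required key -> expected JSON value type.
REQUIRED = {"build_path": str, "machine": str, "timeout_s": int, "tests": list}


def validate_config(text: str) -> list:
    """Return a list of problems found in a JSON config; empty means it looks usable."""
    try:
        cfg = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg} (line {exc.lineno})"]
    if not isinstance(cfg, dict):
        return ["top level must be an object"]
    problems = []
    for key, expected in REQUIRED.items():
        value = cfg.get(key)
        if key not in cfg:
            problems.append(f"missing key: {key}")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif not isinstance(value, expected) or isinstance(value, bool):
            problems.append(f"{key}: expected {expected.__name__}, got {type(value).__name__}")
    # Semantic checks on top of the type checks.
    timeout = cfg.get("timeout_s")
    if isinstance(timeout, int) and not isinstance(timeout, bool) and timeout <= 0:
        problems.append("timeout_s must be positive")
    if cfg.get("tests") == []:
        problems.append("tests must not be empty")
    return problems


bad = '{"build_path": "/builds/latest", "machine": "vm-xp-en", "timeout_s": "600", "tests": []}'
for problem in validate_config(bad):
    print(problem)  # reports the string-typed timeout and the empty test list
```

Run as a gate before the queue accepts a job, this stops a broken config from becoming a broken test run.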

LinkedIn user survey results:

C. Extensibility and Scalability of the Architecture

Often, when a company starts developing an automated test management system, it focuses on the tools it currently uses, without providing for extensibility and scalability of the system.

The disadvantages of this approach are obvious. If the company upgrades its BTS, decides to add a new type of virtualization server, or starts using new types of tests, it will have to invest significant resources in customizing the system and integrating it with the new tools.
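One common way to keep such changes cheap is to hide each external tool behind a small adapter interface. Supporting a new BTS then means writing one new class instead of changing the core. The Python sketch below shows the idea for bug trackers only. `BugTracker`, `register`, `make_tracker` and `InMemoryTracker` are hypothetical names, and a real adapter would call the tracker's own API.

```python
from abc import ABC, abstractmethod


class BugTracker(ABC):
    """Interface the pipeline core talks to; one adapter per BTS product."""

    @abstractmethod
    def create_bug(self, fields: dict) -> str: ...

    @abstractmethod
    def close_bug(self, bug_id: str) -> None: ...


_TRACKERS = {}  # adapter name (as written in the config) -> adapter class


def register(name: str):
    """Class decorator that makes an adapter selectable by name."""
    def wrap(cls):
        _TRACKERS[name] = cls
        return cls
    return wrap


def make_tracker(name: str, **settings) -> BugTracker:
    return _TRACKERS[name](**settings)


@register("inmemory")
class InMemoryTracker(BugTracker):
    """Stand-in adapter; a real one would call the tracker's own API here."""

    def __init__(self, prefix: str = "BUG"):
        self.prefix = prefix
        self.bugs = {}

    def create_bug(self, fields: dict) -> str:
        bug_id = f"{self.prefix}-{len(self.bugs) + 1}"
        self.bugs[bug_id] = dict(fields, State="Active")
        return bug_id

    def close_bug(self, bug_id: str) -> None:
        self.bugs[bug_id]["State"] = "Closed"


tracker = make_tracker("inmemory", prefix="OCT")
bug_id = tracker.create_bug({"Title": "Login_ValidUser failed"})
tracker.close_bug(bug_id)
```

With this layout, adding a tracker such as Jira would mean registering one more adapter class. The code that creates and closes bugs stays untouched.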

A similar situation arises when scalability is not built into the system architecture. As the product gains functionality, the number of automated tests grows, and virtualization resources have to be distributed rationally. Expanding the pool of virtual servers then increases the effort needed to support the test automation system and manage its individual elements.
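Distributing tests rationally across a pool of virtual machines is a scheduling problem. One possible approach, not necessarily the one used in Octopus, is the classic longest-processing-time-first greedy heuristic. Tests are sorted by expected duration, and each goes to the machine with the smallest accumulated load. The Python sketch below assumes the durations come from previous runs; the test and machine names are hypothetical.

```python
import heapq


def assign_tests(durations: dict, machines: list) -> dict:
    """Longest-processing-time-first: each test goes to the least-loaded machine."""
    if not machines:
        raise ValueError("need at least one machine")
    plan = {m: [] for m in machines}
    # Heap entries: (accumulated load, machine index for stable tie-breaking, name).
    heap = [(0.0, i, m) for i, m in enumerate(machines)]
    heapq.heapify(heap)
    # Longest tests first; the test name breaks ties so the plan is deterministic.
    for test in sorted(durations, key=lambda t: (-durations[t], t)):
        load, i, machine = heapq.heappop(heap)
        plan[machine].append(test)
        heapq.heappush(heap, (load + durations[test], i, machine))
    return plan


# Hypothetical durations (minutes) measured on previous runs.
plan = assign_tests({"install": 30, "ui_smoke": 20, "reports": 15, "import": 10, "export": 5},
                    ["vm1", "vm2"])
print(plan)  # {'vm1': ['install', 'import'], 'vm2': ['ui_smoke', 'reports', 'export']}
```

Here both machines end up with a total of 40 minutes. In general, the heuristic is not optimal, but it is known to stay within a factor of 4/3 of the best possible finishing time.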

D. Lack of cumulative reporting in a uniform format

As a rule, each type of test (for example, tests written in Visual Studio, HP QTP, etc.) reports results in its own (native) format, and a project manager or customer can view it only if they have the corresponding software installed. Some automated test development environments can export results, for example, to HTML reports that anyone can open. However, these reports all differ in format and structure, which makes the information harder to take in. In addition, after running the tests on virtual or physical machines, we get several batches of reports, each of which has to be viewed separately.

“Cumulative” here means that results accumulate in a single document as the tests run. Thus, a “cumulative report in a uniform format” is a single general report that is continuously updated as new test results become available, which keeps the information current.
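A minimal Python sketch of such a cumulative report follows. It assumes that each tool's native results have already been parsed into simple records (tool, test, machine, pass/fail, details). The class name and the HTML layout are illustrative only.

```python
import html
from dataclasses import dataclass, field


@dataclass
class CumulativeReport:
    """Accumulates normalized results from any tool and renders one HTML page."""
    build: str
    rows: list = field(default_factory=list)

    def add(self, tool: str, test: str, machine: str, passed: bool, details: str = "") -> None:
        self.rows.append((tool, test, machine, passed, details))

    def summary(self) -> tuple:
        passed = sum(1 for row in self.rows if row[3])
        return passed, len(self.rows) - passed

    def to_html(self) -> str:
        ok, failed = self.summary()
        lines = [
            f"<h1>Build {html.escape(self.build)}: {ok} passed, {failed} failed</h1>",
            "<table>",
            "<tr><th>Tool</th><th>Test</th><th>Machine</th><th>Result</th><th>Details</th></tr>",
        ]
        for tool, test, machine, passed, details in self.rows:
            cells = [tool, test, machine, "PASS" if passed else "FAIL", details]
            # Escape every cell: test names and messages may contain markup characters.
            lines.append("<tr>" + "".join(f"<td>{html.escape(c)}</td>" for c in cells) + "</tr>")
        lines.append("</table>")
        return "\n".join(lines)


report = CumulativeReport("1.4.217")
report.add("HP QTP", "Login_ValidUser", "vm-xp-en", True)
report.add("AutoIt", "Export <CSV>", "vm-7-ru", False, "file not created")
html_page = report.to_html()
```

Results are appended as they arrive, so the page can be regenerated after every finished test.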

An example of a common HTML report for all tests

E. Uninterrupted operation

A system that controls automated testing is essentially a pipeline: new builds go in, and results come out as registered bugs and reports. While developing such a system, we ran into the lack of “foolproofing”. If one of the tests contained an error, the system could execute it indefinitely, tying up a virtual or physical machine and preventing the tests waiting in the queue from running. This problem can be solved in different ways; we implemented a “timeout kill” feature (interrupting commands that run too long).
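In Python, the same "timeout kill" idea can be sketched with `subprocess.run` and its `timeout` argument. When the limit is exceeded, it kills the child process and raises `subprocess.TimeoutExpired`. This is an illustration, not the Octopus implementation. Only the direct child is killed, so tests that spawn their own processes would additionally need process-tree cleanup.

```python
import subprocess
import sys


def run_with_timeout(cmd: list, timeout_s: float) -> dict:
    """Run one test command and kill it if it runs longer than timeout_s."""
    try:
        # On timeout, subprocess.run kills the child, waits for it, then re-raises.
        done = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout_s)
    except subprocess.TimeoutExpired:
        # The machine is free again, so the next test in the queue can start.
        return {"status": "killed", "returncode": None}
    return {"status": "passed" if done.returncode == 0 else "failed",
            "returncode": done.returncode}


if __name__ == "__main__":
    hung_test = [sys.executable, "-c", "import time; time.sleep(60)"]
    print(run_with_timeout(hung_test, timeout_s=2))  # {'status': 'killed', 'returncode': None}
```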

F. Insufficient system logging

The lack of logging makes debugging harder. Often only some parts of the test automation system keep logs, for example, only the tests, while virtual machine events, bug creation, report generation, etc. go unrecorded. This makes the system opaque and its operations hard to track, and it reduces the efficiency of the process as a whole.
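A lightweight way to avoid such blind spots is to give every subsystem its own named logger and route all of them to one file with timestamps. Below is a Python sketch using the standard `logging` module. The `octopus.*` logger names and the log file name are hypothetical.

```python
import logging


def component_logger(component: str) -> logging.Logger:
    """Return the logger for one subsystem, e.g. "vm", "bts", "report"."""
    return logging.getLogger(f"octopus.{component}")


def configure_logging(path: str = "octopus.log") -> None:
    """Route records from every octopus.* logger to a single file."""
    handler = logging.FileHandler(path, encoding="utf-8")
    handler.setFormatter(logging.Formatter("%(asctime)s %(name)s %(levelname)s %(message)s"))
    parent = logging.getLogger("octopus")  # parent of all component loggers
    parent.setLevel(logging.INFO)
    parent.addHandler(handler)


if __name__ == "__main__":
    configure_logging()
    component_logger("vm").info("Reverted %s to snapshot %s", "vm-xp-en", "clean")
    component_logger("bts").info("Created bug %s for test %s", "OCT-17", "Login_ValidUser")
    component_logger("report").warning("No results from %s yet", "vm-7-ru")
```

Every line carries the component name, so a single file shows the whole history of a run, from VM startup to the final report.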

3. Solution for automated test management

Taking into account the pitfalls described above, which we learned about the hard way, we gradually developed a system that autonomously controls the entire automated testing cycle 24 hours a day, from preparing the environment and running tests to registering defects and creating reports.

It is worth emphasizing that this is not about creating yet another bug tracking system or scripting environment. The solution is an integrator that combines existing test tools into a single system with a common interface. By test tools we mean the various software used for testing and project management: the build system, version control system, bug tracking system, virtualization system and test development environment.

First, we studied how testers typically interact with each of these tools, and then the tools' APIs. Finally, we integrated them all under a common UI that makes it quick and convenient to configure the system to match the rules and processes existing in the company.

The main development of the management system, later named Octopus after its 8 main features, was completed 1.5-2 years ago. Since then, lack of resources for regression testing has stopped being a headache, and the quality of our software has improved significantly. The effect is as if we had hired 5 more testers.

The automated testing process is configured on 5 tabs: Builds, Machines, Manual Start, Queue, Log. Each tab provides full configuration of the corresponding elements, and the Log tab lets you monitor what is happening in every part of the system.

Screenshot examples

The “Builds” tab

The “Machines” tab

The “Queue” tab

The main features of the Octopus control system

1. Starts virtual machines (VMs) when virtualization is used for testing.

2. Prepares the test environment: copies configuration files and autotests to the test machine, installs the product.

3. Puts successful builds into the testing queue according to build priority.

4. Runs automated tests / test groups on a schedule or when a build completes successfully.

5. Tests on “clean” VMs, on specific snapshots, and on physical machines.

6. Registers detected errors in the BTS and fills in the fields specified by the tester (for example, Title, Assigned to, State, Description, Found in, Reviewer / Tester, Severity, etc.).

7. Closes fixed bugs, if allowed by the operator.

8. Generates a general HTML report for all executed tests.
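Feature 3, queuing successful builds by priority, maps naturally onto a priority queue. The Python sketch below makes two assumptions. A larger number means a higher priority, and builds of equal priority are tested in arrival order. The class and the build numbers are hypothetical.

```python
import heapq
import itertools
from typing import Optional


class BuildQueue:
    """Builds waiting for testing: highest priority first, FIFO within a priority."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker that preserves arrival order

    def push(self, build: str, priority: int, succeeded: bool = True) -> bool:
        if not succeeded:  # only successful builds enter the testing queue
            return False
        # heapq is a min-heap, so negate the priority to pop the largest first.
        heapq.heappush(self._heap, (-priority, next(self._arrival), build))
        return True

    def pop(self) -> Optional[str]:
        return heapq.heappop(self._heap)[2] if self._heap else None

    def __len__(self) -> int:
        return len(self._heap)


queue = BuildQueue()
queue.push("1.4.215", priority=1)
queue.push("1.4.216-hotfix", priority=5)
queue.push("1.4.217", priority=1)
queue.push("1.4.218", priority=1, succeeded=False)  # rejected: the build failed
```

With these pushes, the hotfix build is tested first, followed by 1.4.215 and 1.4.217 in the order they arrived.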

The tester loads the automated tests into the system's database and configures it once for a specific project. After that, the system tests each new product build on its own, around the clock.

This video (3 min) shows how the system automatically starts testing a freshly built build.

Octopus system structure

The solution consists of three modules:

The Automated Test Manager is the server component that controls the entire system.

Implementation technology: Windows service in C# on .NET Framework 3.5.

Resource consumption:

CPU: practically no load

RAM: ~50 MB

Network: fully utilized at the start and end of tasks, when the environment is copied to the virtual machine and the results are collected

The Automated Test Launch Agent is a module that runs tests against builds directly on a virtual or physical test machine, sets up the environment, and sends a file with the test results to the Automated Test Manager.

Implementation technology: console application in C++.

Resource consumption:

CPU: not used during test execution

RAM: ~7 MB
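The handoff from agent to manager can be as simple as a results file with a little metadata. This Python sketch is for illustration only. The real agent is a C++ console application, and its file format is not described here, so the JSON layout and the function names are assumptions.

```python
import json
import socket
from datetime import datetime, timezone


def write_results_file(path: str, build: str, results: list) -> None:
    """Agent side: save test results plus the metadata the manager needs."""
    payload = {
        "build": build,
        "machine": socket.gethostname(),
        "finished_at": datetime.now(timezone.utc).isoformat(),
        "results": results,
    }
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(payload, fh, indent=2)


def read_results_file(path: str) -> dict:
    """Manager side: load a results file and reject it if key fields are missing."""
    with open(path, encoding="utf-8") as fh:
        payload = json.load(fh)
    missing = {"build", "machine", "results"} - set(payload)
    if missing:
        raise ValueError(f"{path} lacks fields: {sorted(missing)}")
    return payload


if __name__ == "__main__":
    write_results_file("results.json", "1.4.217",
                       [{"test": "Login_ValidUser", "status": "failed", "duration_s": 42}])
    print(read_results_file("results.json")["results"][0]["status"])  # failed
```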

The Automated Testing Control Panel is the client component that provides the Octopus user interface. With it, you can fully configure test execution, manage resources (virtual machines), view the current test execution status and the event log, and manually start and stop tests when needed.

Implementation technology: desktop and web application in C# on Microsoft Silverlight.

Resource consumption:

Size: ~0.5 MB

RAM: ~30 MB

System requirements

OS: Windows XP / Vista / 7, Windows Server 2003/2008

CPU: 1.0 GHz

RAM: 512 MB

Hard Drive: 4 MB

Since the system was developed as an internal project, we initially integrated it with the testing products used in our company.

When it occurred to us that such a system might be useful to others, we expanded the list to include free software.

At the moment the list looks like this:

Virtualization systems: VMware Server 2.0 *, Hyper-V.

Build Systems: CruiseControl.NET *, Microsoft Team Foundation Server 2008/2010.

Version Control Systems: SVN *, Microsoft Team Foundation Server 2008/2010.

Bug tracking systems: Mantis Bug Tracker *, Bugzilla *, Microsoft Team Foundation Server 2008/2010.

Test development environments: AutoIt *, Microsoft Visual Studio, HP QTP.

(*) - free software.

The system can be customized for any commercial or free tool with an open API. In the near future, we plan to integrate the Jira bug tracking system.

Free promotional version

We made a free promotional version of Octopus based on free software, so that anyone interested can try it in action.

More information is available on this page: www.appsys.net/Octopus/Download/Ru

We look forward to your questions and comments.

Source: https://habr.com/ru/post/123215/
