How to insert a seal into a document so that the gods don't kill a kitten

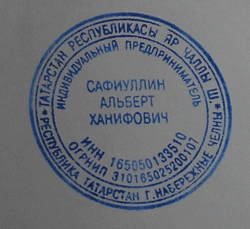

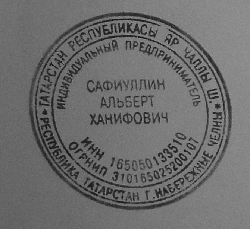

Elba users dreamed of embedding images of their seals and signatures in bills, acceptance certificates, invoices and other serious documents. Why not make the dreamers happy, we thought. Looking around, we saw that in such cases all the dirty work is usually dumped on the user (you know the kind of thing: "the picture must be 300 by 400 pixels, high-contrast, high-resolution and on a perfectly white background"). But judging by the experience of our team members who take shifts in the call center, even simply uploading an image from a camera plunges users into deep depression, and they have to be rescued in ungodly ways, such as "insert the picture into Word". So forcing users to clean up their stamps in Photoshop was out of the question: let them take pictures as best they can, and Elba will do the rest for them!

If you want to know what it takes to turn a photo shot on a phone or a point-and-shoot camera into a clean stamp and signature with a transparent background, read on.

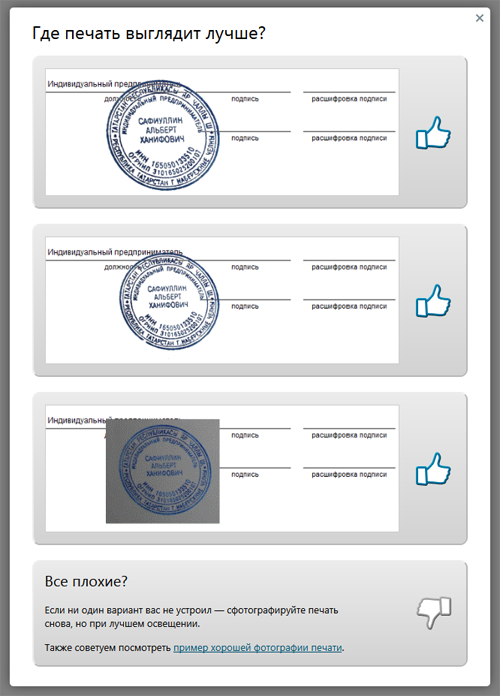

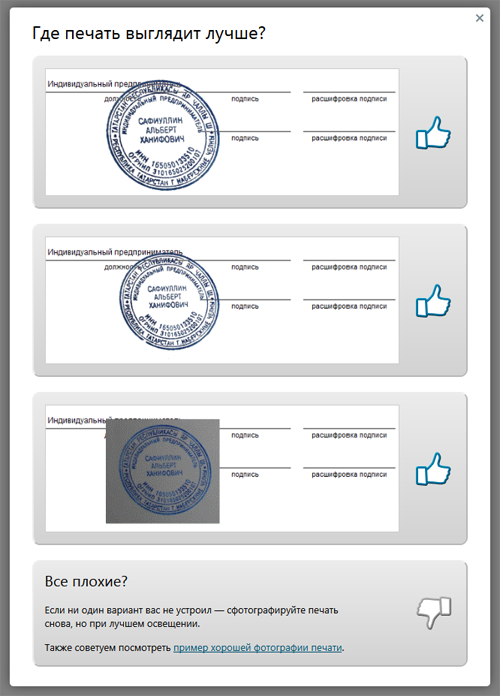

In fact, we built not one but three methods for processing seals and signatures. This was not by choice: some images clean up better one way, others another. We run all three at once and then let the user choose:

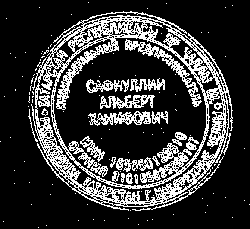

Method one: a scientific approach

Any picture can be viewed as a set of points, each with its own color. Seen this way, our task looks very simple: keep only the points that belong to the seal or signature.

The idea is this: we somehow transform the original image into a black-and-white one (background black, stamp white), then intersect the set of all white points (the mask) with the original image. The parts of the original image whose coordinates coincide with white points are considered the seal. In more detail:

- Take the image

- Convert it to grayscale

- Run edge detection

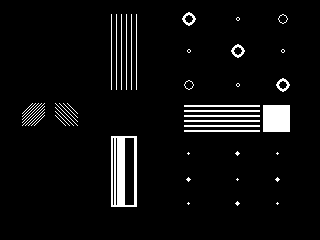

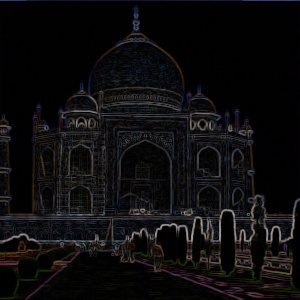

A word on what edges are and how we find them. Our picture contains areas of uniform color (lettering and circles), and the edges are the boundaries of these areas. Many graphics packages and libraries provide a standard Sobel filter, which highlights horizontal and vertical edges separately (and works on a grayscale image). Here is a clear example of Sobel edge detection:

And here are the edges found in our image:

- We now have the stamp's outline, but it is not uniform: besides the black and white areas (background and stamp), there are quite a few points whose color is merely close to the background or to the stamp. By declaring each such point either background or stamp, we immediately improve recognition quality. So we coarsen the picture:

- After all these transformations, we have located the stamp area fairly well. But there is junk all over the picture: "lonely" white dots. "Lonely" is the key word here, since they are always surrounded by a lot of black. Now we reduce the resolution of the image, turning each 20 × 20 square of points into one big point whose color is the average of all the points in that square. Isolated white points are inevitably swamped by the black around them. After that, we coarsen the picture again:

- As a result, all the junk in the form of lonely points has disappeared, and we have reliably identified the area that is guaranteed to contain the seal. We also still have the edges (found with Sobel, remember). All that remains is to intersect the edges with what we just got.

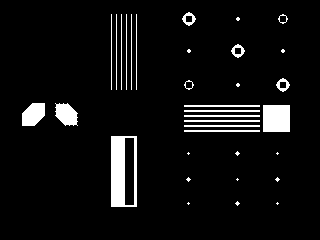
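The steps above can be sketched in plain Python. This is a minimal illustration, not Elba's code: it assumes the photo is already a grayscale image stored as a list of rows of ints 0..255, approximates edge strength as |Gx| + |Gy| from the 3x3 Sobel kernels, and uses hypothetical values for the edge threshold (100), block size (20, as in the text) and block threshold (32).

```python
def sobel_magnitude(img):
    """Approximate edge strength |Gx| + |Gy| with 3x3 Sobel kernels (borders stay 0)."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            out[y][x] = min(255, abs(gx) + abs(gy))
    return out


def coarsen(img, t):
    """Coarsen the picture: 255 (stamp) where value >= t, else 0 (background)."""
    return [[255 if v >= t else 0 for v in row] for row in img]


def block_area(binary, block=20, t=32):
    """Average every block x block square, coarsen the average, and paint the
    result back over the whole square, so lonely white dots disappear."""
    h, w = len(binary), len(binary[0])
    out = [[0] * w for _ in range(h)]
    for by in range(0, h, block):
        for bx in range(0, w, block):
            ys = range(by, min(by + block, h))
            xs = range(bx, min(bx + block, w))
            mean = sum(binary[y][x] for y in ys for x in xs) / (len(ys) * len(xs))
            value = 255 if mean >= t else 0
            for y in ys:
                for x in xs:
                    out[y][x] = value
    return out


def intersect(a, b):
    """Keep a pixel white only where both masks are white."""
    return [[255 if p and q else 0 for p, q in zip(ra, rb)]
            for ra, rb in zip(a, b)]


def stamp_mask(gray, edge_t=100, block=20, block_t=32):
    """Method-one mask: Sobel edges intersected with the coarse stamp area."""
    edges = coarsen(sobel_magnitude(gray), edge_t)
    area = block_area(edges, block, block_t)
    return intersect(edges, area)
```

On a white 60x60 test image with a dark 20x20 square and one dark speck, the square's border survives in the mask, while the speck's few edge pixels are averaged away with the rest of their 20x20 block.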

This is what happens if you apply it as a mask to the original stamp photo:

Everything far enough from the stamp's elements has been thrown out, which is certainly a success. But we have not removed the background details close to the stamp. This happened because the background near the stamp was not different enough in color from the stamp itself (the photo was poor-quality, unevenly lit, and so on), so our coarsening did not assign these areas to the background. An obvious step suggests itself: maximize the difference between the stamp and the nearby background. That is easy to do by increasing the contrast. To do this, we run the original photo through smoothing and HistogramEqualization:

As a result, the background far from the stamp has become the same color as the stamp, but we don't care: we have already learned to discard it, and here we only work with the background areas close to the stamp. The rest is a matter of technique with no new ideas: convert to grayscale, invert, coarsen.
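The contrast step can be sketched the same way. Assumptions: a grayscale list-of-rows image, a 3x3 mean filter standing in for the smoothing (the article does not say which filter was used), textbook histogram equalization via the cumulative histogram, and a hypothetical cut-off of 128 for the final coarsening.

```python
def box_blur(img):
    """3x3 mean filter; border pixels average only the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) // len(vals)
    return out


def equalize(img):
    """Histogram equalization: spread brightness values by their cumulative count."""
    hist = [0] * 256
    for row in img:
        for v in row:
            hist[v] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    total = running
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:  # a single brightness value: nothing to spread
        return [row[:] for row in img]
    lut = [max(0, round((c - cdf_min) * 255 / (total - cdf_min))) for c in cdf]
    return [[lut[v] for v in row] for row in img]


def near_stamp_mask(gray, t=128):
    """Smooth, equalize, invert (dark ink becomes bright) and coarsen."""
    boosted = equalize(box_blur(gray))
    return [[255 if 255 - v >= t else 0 for v in row] for row in boosted]
```

Equalization pushes the ink and the nearby paper to opposite ends of the brightness range, so a fixed cut-off separates them even when the original difference was small.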

So, we can already clean everything except the junk near the stamp, and we have seen what that result looks like. We have also just learned to clean the junk near the seal. Now we apply the new mask to our first result:

Not bad already. Of course, it still needs a little blurring, a contrast boost, a transparent background, and so on.
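Combining the two masks and making the background transparent might look like this. It is a sketch under assumptions: both masks are lists of rows with 0 for background and 255 for stamp, the photo is a list of rows of (r, g, b) tuples, and the output uses (r, g, b, a) tuples where alpha 0 means transparent. The extra blurring and contrast tweaks are left out.

```python
def combine(first, second):
    """AND of two masks: a pixel stays white only if both masks keep it."""
    return [[255 if a and b else 0 for a, b in zip(ra, rb)]
            for ra, rb in zip(first, second)]


def to_rgba(rgb, mask):
    """Copy the colour of kept pixels; make every other pixel fully transparent."""
    return [[(r, g, b, 255) if m else (0, 0, 0, 0)
             for (r, g, b), m in zip(row, mrow)]
            for row, mrow in zip(rgb, mask)]


# Usage: a blue ink pixel survives, a near-white paper pixel becomes transparent.
photo = [[(10, 20, 200), (250, 250, 250)]]
print(to_rgba(photo, combine([[255, 255]], [[255, 0]])))
```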

We did, however, run into a problem with high-resolution photos (the megapixel race, alas, has not spared phone owners either): after edge detection, wide stamp lines (10 and sometimes 100 pixels thick) fell apart into two separate lines, one along each side of the stroke.

To fix such minor annoyances, you can use morphological closing. In our case, closing fills in the background between paired strokes, but only if the strokes are not too far apart. Here is an example of how closing works, from the documentation of the AForge.Closing filter:

As you can see, closing cannot fill a gap more than a few pixels wide. And the size of the gap depends on the resolution at which the stamp was photographed.
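Closing is a dilation followed by an erosion with the same structuring element. The sketch below works on a binary list-of-rows mask (255 = stamp) with a square (2r+1) x (2r+1) element; the radius parameter is our illustration, not AForge's API, and pixels outside the image are simply ignored at the borders.

```python
def _window(img, y, x, r):
    """All pixel values in the square of radius r around (y, x), clipped to the image."""
    h, w = len(img), len(img[0])
    return [img[j][i]
            for j in range(max(0, y - r), min(h, y + r + 1))
            for i in range(max(0, x - r), min(w, x + r + 1))]


def dilate(img, r=1):
    """A pixel becomes white if any pixel in its window is white."""
    return [[255 if any(_window(img, y, x, r)) else 0 for x in range(len(img[0]))]
            for y in range(len(img))]


def erode(img, r=1):
    """A pixel stays white only if every pixel in its window is white."""
    return [[255 if all(_window(img, y, x, r)) else 0 for x in range(len(img[0]))]
            for y in range(len(img))]


def closing(img, r=1):
    """Morphological closing: fills dark gaps up to 2*r pixels wide between white strokes."""
    return erode(dilate(img, r), r)
```

With r = 1, vertical strokes at columns 2, 4, 7 and 11 merge into one band from column 2 to column 7, while the 3-pixel gap before column 11 stays open. This is exactly why the gap size, and hence the photo's resolution, matters.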

It would seem simple enough: just rescale the image to the resolution we need (the exact value to be found experimentally). The problem, however, is that people can (and love to) photograph a stamp with huge white margins around it.

After shrinking such an image to the "optimal size", we would get a tiny stamp in the corner of the photo.

In the end, we decided to keep it simple and run the algorithm twice. The first pass removes large debris (and possibly small parts of the seal) and tells us where the stamp is in the picture. Then we take the original picture again, cut out the region now known to contain the seal, scale it to the desired size, and run the background-cleaning algorithm once more.
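The two-pass idea can be sketched as follows. Everything here is an assumption for illustration: `clean` stands for whatever single-pass cleaning routine you use (it takes a grayscale list-of-rows image and returns a 0/255 mask of the same size), `target` is a hypothetical length in pixels for the longer side of the crop, `pad` is a hypothetical safety margin, and the rescaling is plain nearest-neighbour.

```python
def bounding_box(mask):
    """(top, left, bottom, right) of the white pixels, bottom/right exclusive; None if empty."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    return rows[0], cols[0], rows[-1] + 1, cols[-1] + 1


def crop(img, box, pad=0):
    """Cut out the box, widened by pad pixels on each side and clipped to the image."""
    top, left, bottom, right = box
    top, left = max(0, top - pad), max(0, left - pad)
    bottom, right = min(len(img), bottom + pad), min(len(img[0]), right + pad)
    return [row[left:right] for row in img[top:bottom]]


def resize_nearest(img, new_h, new_w):
    """Nearest-neighbour rescaling to new_h x new_w."""
    h, w = len(img), len(img[0])
    return [[img[y * h // new_h][x * w // new_w] for x in range(new_w)]
            for y in range(new_h)]


def two_pass(gray, clean, target=400, pad=10):
    """Pass 1 locates the stamp; pass 2 re-cleans just that region at a fixed scale."""
    first = clean(gray)
    box = bounding_box(first)
    if box is None:
        return first  # nothing found: keep the first-pass result
    region = crop(gray, box, pad)
    h, w = len(region), len(region[0])
    scale = target / max(h, w)
    region = resize_nearest(region, max(1, round(h * scale)), max(1, round(w * scale)))
    return clean(region)
```

Note that the second-pass result has the size of the rescaled crop, not of the original photo; the white margins are simply gone.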

It seemed the goal had been achieved, but when we started testing on stamps and signatures found on the Internet, we ran into a new problem. For most stamps our algorithm worked quite well, but signatures fared much worse: the contrast of some photos was so low that edge detection simply lost half the lines, and lowering the detection threshold is risky too, since we would pick up a lot of junk.

Method two: reinventing the wheel

We asked ourselves: why do we need edge detection and all those other bells and whistles? After all, a signature is a very simple thing: a few lines drawn with a dark pen on light paper.

At first glance, separating dark from light is no great science. Our first algorithm, codenamed "whoever is not with us is against us", was very simple: we go over all the points, and those brighter than mid-gray are declared "background" and destroyed. Everything darker stays as the "pen".
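For reference, the naive rule fits in a few lines. Assumed here: a grayscale list-of-rows image, mid-gray taken as 128, and `None` used to mark a destroyed (transparent) background pixel.

```python
def naive_split(gray, mid_gray=128):
    """Points brighter than mid-gray become background (None); darker ones stay as pen."""
    return [[None if v > mid_gray else v for v in row] for row in gray]


print(naive_split([[10, 200], [127, 128]]))
```

A single fixed cut-off like this only works when the whole photo is lit well enough for paper to stay above mid-gray and ink below it.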

We ran it on the first signature at hand: hooray, it processes beautifully!

We ran it on the second: a complete fail.

Our first thought was to write "Take more contrasty photos, folks" on the upload form and call it a day, but for some reason the interface designers did not approve. We had to switch our brains on. We reasoned that since the algorithm works on some photos but not on others, we just need to normalize the photos a little ourselves. We took a photo, went over all its points and built a simple histogram: for each of the 256 possible brightness values, we just counted the number of points with that brightness. We found the minimum brightness, then the maximum, chose a cut-off point "somewhere in the middle" and cut out the background.
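A sketch of this histogram step, assuming 8-bit grayscale rows and taking "somewhere in the middle" to be the exact midpoint between the darkest and brightest values present (the article does not say which point was actually chosen):

```python
def histogram(gray):
    """Count how many points have each of the 256 brightness values."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    return hist


def midpoint_split(gray):
    """Cut at the midpoint between the darkest and brightest values present."""
    hist = histogram(gray)
    darkest = next(v for v in range(256) if hist[v])
    brightest = next(v for v in range(255, -1, -1) if hist[v])
    cut = (darkest + brightest) // 2
    return cut, [[None if v > cut else v for v in row] for row in gray]
```

Unlike the fixed mid-gray rule, the cut now follows each photo's own brightness range, so a uniformly dim or uniformly washed-out photo is handled the same way as a well-exposed one.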

Hooray, we said, and happily went looking for examples of really bad signature photos, to collect as much proof as possible that it worked. Life, as always, turned out to be more interesting: on literally the second photo, a hard fail was waiting for us again! No matter how we chose that point "somewhere in the middle", either background remained in a corner or part of the signature disappeared.

Staring at the result in horror, we opened the original and started thinking.

There was no real mystery: the light simply fell so that the background at one edge of the photo was darker than the pen at the other (as it turned out, this happens quite often in real life). Obviously, in this situation the desired cut-off point simply does not exist.

Realizing that further tweaking of the parameters was pointless, we began to reason logically: "We look at the photo and the signature is perfectly visible. So the contrast is sufficient. The local contrast, at least."

At the word "local" we perked up and decided: since it is impossible to pick a single "pen is darker, background is lighter" point for the whole picture, we will try doing it for parts of the image!

We split the image into rectangles (experimentally, a 10x10 grid suited us) and applied the algorithm to each cell separately. That was fine, except that some cells contained nothing but background. These are easier, though: since there is nothing but background, the local contrast is extremely low, which means the brightest and darkest points in the histogram are very close.

Looks like it works.

We take a single cell, build its histogram, and look at its left edge (minimum brightness) and right edge (maximum brightness). The difference between them is the contrast. If the contrast is below a certain threshold (computed, at least in part, from the overall contrast of the image), we treat the entire cell as background and discard it. If the contrast is higher, we determine the cut-off point and cut off everything brighter.
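The per-cell procedure can be sketched like this. The 10x10 grid comes from the article; the rest are our assumptions: the minimum contrast is a hypothetical fraction `k` of the whole image's contrast, the cut-off inside a cell is the midpoint between its darkest and brightest points (the left and right edges of its histogram), and `None` marks discarded pixels.

```python
def local_split(gray, grid=10, k=0.15):
    """Threshold each grid cell on its own; low-contrast cells are all background."""
    h, w = len(gray), len(gray[0])
    flat = [v for row in gray for v in row]
    min_contrast = k * (max(flat) - min(flat))  # hypothetical rule based on overall contrast
    out = [row[:] for row in gray]
    for gy in range(grid):
        y0, y1 = gy * h // grid, (gy + 1) * h // grid
        for gx in range(grid):
            x0, x1 = gx * w // grid, (gx + 1) * w // grid
            cell = [gray[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            if not cell:
                continue  # image smaller than the grid in this direction
            darkest, brightest = min(cell), max(cell)
            if brightest - darkest < min_contrast:
                cut = -1  # background only: every pixel is "brighter" than -1
            else:
                cut = (darkest + brightest) // 2
            for y in range(y0, y1):
                for x in range(x0, x1):
                    if gray[y][x] > cut:
                        out[y][x] = None
    return out
```

As a check, take a synthetic 90x20 photo whose background brightens from 60 on the left to 238 on the right, with a one-pixel pen stroke 50 levels darker than the local background. No global cut can work, because the stroke on the right (188) is brighter than the paper on the left (60). The per-cell split, however, keeps exactly the stroke.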

Method three: the final one

Two options seemed too few, so we added a third: simple processing for "almost perfect" photos in which the whole background is white (well, almost white). For these, we knock out all pixels brighter than 95% of the maximum brightness and crop the margins.
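A sketch of this third method, assuming grayscale rows and `None` for knocked-out pixels. Cropping the margins is interpreted here as cutting the result down to the bounding box of whatever remains.

```python
def near_white_cleanup(gray, ratio=0.95):
    """Knock out pixels brighter than ratio * max brightness, then crop empty margins."""
    limit = ratio * max(v for row in gray for v in row)
    kept = [[None if v > limit else v for v in row] for row in gray]
    rows = [y for y, row in enumerate(kept) if any(v is not None for v in row)]
    if not rows:
        return []  # nothing but background
    cols = [x for x in range(len(kept[0]))
            if any(kept[y][x] is not None for y in rows)]
    return [kept[y][cols[0]:cols[-1] + 1] for y in range(rows[0], rows[-1] + 1)]
```

Because the limit is relative to the brightest pixel, a slightly grey but evenly lit sheet of paper is knocked out just as well as a pure white one.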

In the end, as we said at the beginning, the user picks one of the three options. If none of them works (which is extremely rare), we show instructions on how to press the camera's "masterpiece" button correctly.

You can try it yourself: even if you don't have a seal, you probably know how to sign ;)

Source: https://habr.com/ru/post/123089/
