EchoPrint - open music recognition system

You have probably heard of music identification systems such as TrackID, Shazam, MusicBrainz, or the online service Audiotag.info, which tell you a song's name from a recorded excerpt. All of them work quite well, but they share a drawback: closed source code and, as a result, limited scope. TrackID works only on Sony Ericsson phones; Shazam is also phone-only, albeit on a wider range of platforms; and with MusicBrainz it is not clear whether it works at all.

The folks at The Echo Nest decided that music recognition should be as accessible to the world as e-mail or DNS :), and released their brainchild entirely under the MIT License. And it is a serious piece of work, which is no surprise given that the company's founders hold doctorates from the MIT Media Lab.

What they released is not limited to recognizing music from a recorded excerpt. It also supports finding duplicate tracks, bulk recognition and tag filling for music collections, checking audio and video for specific content, synchronizing collections across different music services (iTunes <-> Last.fm <-> Spotify, for example), and much more.

How does it work?

In short: a code generator runs on the client, computes a unique fingerprint of the recorded part of the song, and sends it to the server for identification.

Client

The client, also known as echoprint-codegen, comes as a cross-platform library and a binary. A typical use case is to check 20 seconds of an mp3 file, starting at the 10th second:

echoprint-codegen ./recorded.mp3 10 20 | \
curl -F "query=@-" "http://developer.echonest.com/api/v4/song/identify?api_key=MY_API_KEY"

{"response": {"status": {"version": "4.2", "code": 0, "message": "Success"},
"songs": [{"tag": 0, "score": 66, "title": "Creep", "message": "OK (match type 6)",
"artist_id": "ARH6W4X1187B99274F", "artist_name": "Radiohead", "id": "SOPQLBY12A6310E992"}]}}

And using it from your own program is just as easy:

// pcm: const float* sample buffer; numSamples: uint; start_offset: int
Codegen *pCodegen = new Codegen(pcm, numSamples, start_offset);
string code = pCodegen->getCodeString();
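Since the constructor takes a buffer of floats, most programs first have to convert whatever their decoder produces. The following is only a sketch under assumptions: `monoWindow` is a hypothetical helper, not part of echoprint-codegen, and it assumes the decoder yields interleaved 16-bit PCM already at the sample rate the library expects (as far as I recall, the project README asks for mono 11025 Hz, so check it before relying on this). It downmixes to mono and keeps the same kind of window as the command line above: a start second and a duration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper (not part of echoprint-codegen): downmix interleaved
// 16-bit PCM to mono floats in [-1, 1] and keep only the requested window.
// No resampling is done; the input is assumed to be at the right rate already.
std::vector<float> monoWindow(const std::vector<int16_t>& interleaved,
                              int channels, int sampleRate,
                              int startSec, int durationSec) {
    assert(channels > 0 && sampleRate > 0 && startSec >= 0 && durationSec >= 0);

    const std::size_t ch = static_cast<std::size_t>(channels);
    const std::size_t rate = static_cast<std::size_t>(sampleRate);
    const std::size_t frames = interleaved.size() / ch;

    // Clamp the window to the available audio.
    const std::size_t first =
        std::min(frames, static_cast<std::size_t>(startSec) * rate);
    const std::size_t last =
        std::min(frames, first + static_cast<std::size_t>(durationSec) * rate);

    std::vector<float> mono;
    mono.reserve(last - first);
    for (std::size_t f = first; f < last; ++f) {
        float sum = 0.0f;
        for (std::size_t c = 0; c < ch; ++c) {
            sum += interleaved[f * ch + c];
        }
        // Average the channels and scale 16-bit integers to floats.
        mono.push_back(sum / (static_cast<float>(channels) * 32768.0f));
    }
    return mono;
}

// With the library linked in, the result could then be passed on as in the
// text (assuming start_offset is given in seconds, as on the command line):
//   Codegen *pCodegen = new Codegen(mono.data(), mono.size(), startSec);
```

Clamping the window instead of failing means a recording shorter than the requested duration simply yields fewer samples, which seems the friendlier behavior for a phone client.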

As you can see, hooking it up to your favorite language or project is not hard at all; imagination is the only limit. By the way, for the most active developers they even ran a contest with a $10,000 prize for the most interesting and innovative music application that makes full use of the Echo Nest API. And the API is quite extensive, linking tracks by every connection imaginable, from the artist's main geographic location and year of birth to a track's danceability.

The song's digital fingerprint is computed with the company's own algorithm, The Echo Nest Musical Fingerprint (ENMFP), which it has long used successfully in its other services, such as similar-song search, BPM detection, and more. The team itself says it has become expert at analyzing songs (not just sounds, but musical compositions).

You can learn more about the API on the developer portal.

Server

The server-side code is also publicly available on GitHub, so if you prefer, you can run your own server; Echo Nest has even shared several gigabytes of already processed data. The server is built on the Apache Solr search server, with Tokyo Tyrant as the database.

So far their server holds relatively little data, about 150,000 songs. According to the company, however, they are importing 7digital's multi-million-track collection, actively collaborating with MusicBrainz (which has even set up its own echoprint server to integrate with its data), and letting users add new songs.
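To give a feel for why a search server is a natural fit here, below is a toy sketch of inverted-index matching, the general technique search engines like Solr are built around. It is not the echoprint server's actual logic: `ToyIndex` and its methods are invented for illustration, and it assumes a fingerprint can be treated as a plain list of integer codes, which simplifies whatever the real code string contains.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>
#include <unordered_map>
#include <vector>

// Toy inverted index (illustrative only): fingerprint code -> tracks that
// contain that code.
class ToyIndex {
public:
    void add(const std::string& trackId, const std::vector<uint32_t>& codes) {
        assert(!trackId.empty());
        for (uint32_t code : codes) {
            postings_[code].push_back(trackId);
        }
    }

    // Returns the track that shares the most code occurrences with the
    // query, or an empty string if no code matches at all.
    std::string bestMatch(const std::vector<uint32_t>& query) const {
        std::map<std::string, int> hits;
        for (uint32_t code : query) {
            auto it = postings_.find(code);
            if (it == postings_.end()) continue;
            for (const std::string& id : it->second) {
                ++hits[id];
            }
        }
        std::string best;
        int bestHits = 0;
        for (const auto& entry : hits) {
            if (entry.second > bestHits) {
                best = entry.first;
                bestHits = entry.second;
            }
        }
        return best;
    }

private:
    std::unordered_map<uint32_t, std::vector<std::string>> postings_;
};
```

Looking up each query code touches only the tracks that contain it, so the cost depends on the query and the posting lists rather than on the size of the whole catalog. A real matcher would also have to account for timing between codes and reject weak matches, which this sketch ignores.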

Usage

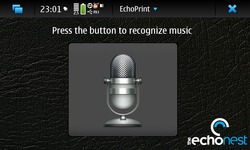

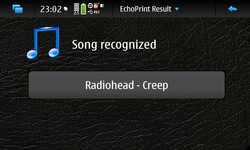

Of course, one of the main uses is still recognizing music you hear: you hear a good song, take out your phone, press a button, and find out who is singing. I quickly wrote an echoprint client for Maemo / MeeGo, and after testing it I can report the following:

- some artists are recognized very reliably, others only every other time

- 20 seconds is the minimum recording length; recording longer improves the chance of recognition

- volume has almost no effect on the result; the algorithm normalizes the sound well enough

- Metallica is recognized better than Beethoven :-D

- sometimes it gets it wrong :(

Moreover, they even claim that the algorithm can find alternate versions of a song, such as live performances or close covers.

Of course, it is still too early to call echoprint a Shazam killer, but that is just a matter of time. Once released as open source, projects like this start developing orders of magnitude faster.

Conclusions

Beyond simply reviewing this wonderful open-source project, the main thing I want to convey is that the company is building something genuinely important (not for everyone, of course). This is not just “another music processing algorithm”; it is a whole encyclopedia of music that lets you find, describe, and link every song ever released. Unlike Shazam, the Echo Nest database can hold information about obscure bands, not least because you can upload your own songs. In addition, one of its projects, code-named Rosetta Stone, aims to unify different identifier spaces, such as MusicBrainz IDs and Napster artist IDs.

All in all, it is a kind of musical Wikipedia which, thanks to its openness, promises to grow into something truly ambitious.

Source: https://habr.com/ru/post/122969/