Programs, data and their owners (final part)

In the first and second parts of this article, we examined the fundamental cause of computer viruses: auto-programmability (the ability of an algorithm to modify itself) in a large multi-user environment.

Viruses almost inevitably appear in any computer that meets two conditions:

1) executable code is not prohibited, at the hardware level, from writing to the executable code area;

2) the computer has many owners who independently control its I/O interfaces.
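The two conditions can be restated as a simple predicate. The Python sketch below is only an illustration: the `System` fields and the reading of "many owners" as "more than one independent owner" are our assumptions, not definitions from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    """A hypothetical description of a computing system (illustration only)."""
    name: str
    code_can_write_code: bool  # condition 1: code may write to the executable code area
    independent_owners: int    # condition 2: parties independently controlling the I/O


def virus_prone(system: System) -> bool:
    """True when both conditions hold.

    Assumption: "many owners" is read here as "more than one".
    """
    return system.code_can_write_code and system.independent_owners > 1


mcu = System("microcontroller, firmware in ROM", code_can_write_code=False, independent_owners=1)
laptop = System("Internet-connected laptop", code_can_write_code=True, independent_owners=1_000_000)

for s in (mcu, laptop):
    print(f"{s.name}: virus-prone = {virus_prone(s)}")
```

Removing either condition is enough to make the predicate false, which is the lever the rest of the article works with.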

By "computer" we mean an arbitrary computing system. We have already shown that the Internet is exactly such a system, one for which both conditions hold. They also hold for the vast majority of the Internet's subsystems, i.e. the system units connected to it, from household smartphones and laptops to corporate servers. Nominal owners lose exclusive control the moment they connect to the Network, sharing this privilege with millions of strangers whose actions can affect the executable code.

Assessment of computer reliability in terms of virus resistance.

Unfortunately, both the Internet as a whole and a typical Internet-connected computer occupy the bottom row of the antivirus security table (marked in red).

The situation would be completely different if people could write error-free programs. But no such technology exists on Earth yet, at least not for commercial software. We will discuss the complex of technological and social reasons that make software errors inevitable another time; for now, let's look at a table describing a world in which programs contain no errors.

Computer reliability in terms of virus resistance, for the hypothetical case in which all software is completely free of errors and virus resistance is built in at the development stage :)

If all operating systems, browsers, web servers and other programs were written perfectly and with security requirements in mind, an arbitrarily large von Neumann environment could exist while remaining immune to viruses. In ideal software, all connections between elements are thought out so carefully that, for any combination of interactions, it is mathematically guaranteed that no one except the code's nominal owner can change it. Any information security specialist understands that for large systems this is a utopia.

Nevertheless, careful programming that rules out the influence of some code elements on others is one of the most widely used methods of antivirus protection. It gives good results, especially when the code is compact, but not perfect ones. You often hear statements like "Certified UNIX systems are invulnerable." This is close to the truth, but it is not the truth. Such statements are not mathematically rigorous: they are unproven, and a rigorous proof is impractical. A formal proof that a large body of code is flawless is time-consuming and very expensive, especially given the interaction of this code with a wide variety of hardware. Only physical protection of the code against changes (read-only) on an appropriate medium gives absolute confidence in its immutability. Such a medium, by the way, can also be RAM, provided that the code itself cannot change it (for example, if the code is placed in memory by external means and writing to that memory is then blocked before the first execution cycle).
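The RAM variant of read-only protection (load by external means, then lock before the first cycle) can be modelled in a few lines. This Python sketch is a software model under stated assumptions: `LatchedRAM`, `external_load` and `lock` are hypothetical names, and in real hardware the latch would be a circuit the running code cannot reset; here the model simply exposes no unlock operation.

```python
class LatchedRAM:
    """RAM that an external loader fills and then write-locks before execution starts."""

    def __init__(self, size: int) -> None:
        self._cells = bytearray(size)
        self._locked = False

    def external_load(self, offset: int, image: bytes) -> None:
        # Performed by external means (e.g. a loader circuit) before the first cycle.
        if self._locked:
            raise PermissionError("memory is already locked")
        if offset < 0 or offset + len(image) > len(self._cells):
            raise ValueError("image does not fit into memory")
        self._cells[offset:offset + len(image)] = image

    def lock(self) -> None:
        # One-way latch: there is deliberately no method that clears it.
        self._locked = True

    def cpu_write(self, addr: int, value: int) -> None:
        # Any write issued by the executing code itself.
        if self._locked:
            raise PermissionError(f"write to {addr:#06x} blocked: code memory is locked")
        self._cells[addr] = value

    def cpu_read(self, addr: int) -> int:
        return self._cells[addr]


ram = LatchedRAM(16)
ram.external_load(0, bytes([0x01, 0x02, 0x03]))
ram.lock()                  # must happen before the first execution cycle
try:
    ram.cpu_write(1, 0xFF)  # the program tries to modify itself
except PermissionError as e:
    print(e)                # write to 0x0001 blocked: code memory is locked
print(ram.cpu_read(1))      # 2
```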

A competent implementation of critical-code protection in industrial operating systems sometimes achieves reliability close to that of physical read-only protection. But such systems cannot always be used, for a variety of technological and economic reasons. An information security specialist may face problems of a completely different kind: from designing a telecom operator's node to programming a PLC that controls a turbine; from developing a mission-critical database to auditing and putting in order an arbitrary corporate system. If, for example, such a system is based on Windows and the 1C:Enterprise product suite, then any references to UNIX invulnerability are useless. In practice, we have to deal with proprietary software whose vulnerabilities are hard to assess, and with chips whose instruction sets are not known for certain. In information technology, the initial conditions of almost every project are different. Therefore, to manage any project competently, a specialist should master techniques that are universal for computer science and cybernetics in general. One of them is fixing the program (hardware protection against changes).

In many cases, a program is fixed by emulating hardware protection. The emulation can be performed at different levels of the architecture and can be more or less complete, but, most importantly, it must not be controlled programmatically, i.e. by the very code it protects. Software-controlled emulation of protection (for example, against writing to certain memory areas or against accessing them) does not rid the computer of its auto-programmability, since ultimately the ability to modify the code is still determined by the code itself.

Having separate (program-controlled!) mechanisms of “hardware” protection for certain memory areas in the processor and chipset does not solve the problem completely. For example, in x86 processors, protected mode, system management mode, and hardware virtualization are all controlled by software. Equipping a computer with such a processor does not by itself make it immune to viruses: the software (BIOS, OS, other system-level software) remains fully responsible for managing the “hardware” protection correctly. The integrity of code on such a computer depends solely on how it is programmed. In other words, if code protection levels differ on the same hardware under two different operating systems (or even under two different configurations of the same OS), then we have a typical auto-programmable system, in which protection is implemented not in hardware but in software.
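The difference between software-controlled and externally controlled protection fits into a toy model. In this Python sketch (an illustration, not a model of any real processor; all names are hypothetical), the same write-protect bit guards the code area in both cases; the only difference is whether the protected code can reach the switch.

```python
class ProtectedMemory:
    """Code memory guarded by a write-protect bit (hypothetical model)."""

    def __init__(self, code: bytes, externally_locked: bool) -> None:
        self.code = bytearray(code)
        self.write_protect = True
        # True models a physical jumper or fuse that software cannot reach;
        # False models a protection bit that (privileged) code may toggle.
        self.externally_locked = externally_locked

    def set_write_protect(self, enabled: bool) -> None:
        # An operation available to running code, like writing a control register.
        if self.externally_locked:
            raise PermissionError("protection is controlled externally")
        self.write_protect = enabled

    def write_code(self, addr: int, value: int) -> None:
        if self.write_protect:
            raise PermissionError("code area is write-protected")
        self.code[addr] = value


def self_modifying_payload(mem: ProtectedMemory) -> bool:
    """Code that tries to lift the protection and patch itself; True on success."""
    try:
        mem.set_write_protect(False)
        mem.write_code(0, 0x90)
        return True
    except PermissionError:
        return False


soft = ProtectedMemory(b"\x00\x01\x02", externally_locked=False)
hard = ProtectedMemory(b"\x00\x01\x02", externally_locked=True)
print(self_modifying_payload(soft))  # True: the code switched its own protection off
print(self_modifying_payload(hard))  # False: the switch is out of the code's reach
```

In the first case the level of protection depends entirely on what the code does, which is exactly the auto-programmable situation described above.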

In a system without auto-programmability, the immutability of a program is ensured regardless of its content. If the program is replaced with any other, its level of protection remains the same and absolute, because it is guaranteed by the system design.

By the way, in response to some reader comments on the first part of the article, we point out that replacing a program means changing at least one bit in it. From a formal point of view, a 1-bit difference is enough to consider two programs different. The term “program replacement” does not necessarily mean replacing the entire code from beginning to end with a radical change in functionality :)
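The one-bit criterion is easy to check mechanically. The Python sketch below uses arbitrary sample bytes rather than a real executable: it flips a single bit, counts the differing bits, and shows that a SHA-256 digest, one common way to record a reference image of a program, changes as well.

```python
import hashlib


def differing_bits(a: bytes, b: bytes) -> int:
    """Count the bit positions in which two equal-length byte strings differ."""
    if len(a) != len(b):
        raise ValueError("images must have the same length")
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


original = bytes([0x10, 0x20, 0x30, 0x40])  # arbitrary bytes standing in for a program image
patched = bytearray(original)
patched[2] ^= 0b0000_0100                   # flip exactly one bit
patched = bytes(patched)

print(differing_bits(original, patched))    # 1
print(original == patched)                  # False: formally, a different program
print(hashlib.sha256(original).hexdigest() == hashlib.sha256(patched).hexdigest())  # False
```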

Both software and hardware protection of code against changes have their own advantages and disadvantages. For many practical information security problems, the advantages of hardware protection are decisive. The main ones are low cost, mathematically sound reliability, and intuitive clarity. Hardware protection does not require proving the integrity of the code: it guarantees that the code stays unchanged for the required period even if the code contains errors. But applying this method requires separating programs from data (moving the data out of the fixed memory area), which in some cases can be a significant drawback.

Besides protecting against viruses, fixing a program (code, an algorithm, or information objects in general) helps solve other information security problems, which we will discuss in future issues. Meanwhile, here are several examples of fixing objects at different levels of the physical and logical structure of a computer system. For each object, the immutability and non-execution flags are shown. We emphasize that these flags are set by means external to the protected objects. Depending on the specific task and the available capabilities, the flags can be implemented in hardware, in software, or conditionally (meaning, for example, that operations are not directly prohibited; instead, events that violate a flag are tracked). These examples should not be taken as direct instructions: they merely illustrate the general principles of virus protection and some particular cases of using fixed programs for this purpose.
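The two flags and the two ways of honoring them (a direct ban versus "conditional" tracking of violations) can be sketched as follows. This is a minimal Python model under our own assumptions: `InfoObject`, `ExternalGuard` and `Mode` are hypothetical names, and "external" here only means that the objects hold no reference to the guard and so have no way to change its mode.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    ENFORCE = "enforce"  # the forbidden operation is refused
    AUDIT = "audit"      # conditional flag: the operation proceeds, the violation is logged


@dataclass
class InfoObject:
    name: str
    content: bytearray
    immutable: bool = False
    no_exec: bool = False


@dataclass
class ExternalGuard:
    """Checks the flags on behalf of the objects it mediates access to."""
    mode: Mode
    violations: list = field(default_factory=list)

    def _violate(self, op: str, obj: InfoObject) -> None:
        self.violations.append((op, obj.name))
        if self.mode is Mode.ENFORCE:
            raise PermissionError(f"{op} on {obj.name} forbidden")

    def write(self, obj: InfoObject, offset: int, value: int) -> None:
        if obj.immutable:
            self._violate("write", obj)
        obj.content[offset] = value

    def execute(self, obj: InfoObject) -> str:
        if obj.no_exec:
            self._violate("exec", obj)
        return f"running {obj.name}"


program = InfoObject("program", bytearray(b"\x01\x02"), immutable=True)
data = InfoObject("data", bytearray(b"\x00\x00"), no_exec=True)

strict = ExternalGuard(Mode.ENFORCE)
strict.write(data, 0, 0x2A)         # allowed: data is mutable
print(strict.execute(program))      # running program
try:
    strict.write(program, 0, 0xFF)  # refused
except PermissionError as e:
    print(e)                        # write on program forbidden

audit = ExternalGuard(Mode.AUDIT)
audit.write(program, 0, 0xFF)       # performed, but recorded
print(audit.violations)             # [('write', 'program')]
```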

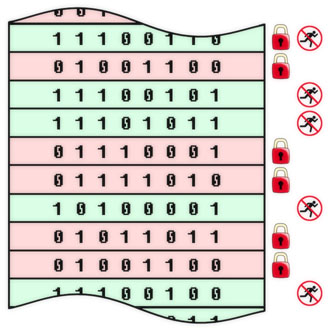

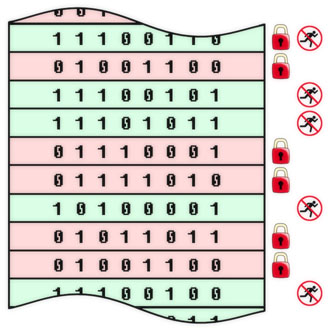

Antivirus protection at the level of individual bytes. It corresponds to the classic microcontroller scheme with the program in ROM and the data in RAM, but it can be implemented in other ways (for example, by storing the flags in additional bits of a shared memory).
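The "additional bits of a shared memory" variant can be sketched as tagged memory. In this Python model (hypothetical names and tag layout), each data byte has a companion tag byte holding the two flags; program bytes are loaded as immutable but executable, data bytes as writable but non-executable, which reproduces the ROM/RAM split inside a single memory.

```python
IMMUTABLE = 0b01  # tag bit: the byte may not be written
NO_EXEC = 0b10    # tag bit: the byte may not be fetched as an instruction


class TaggedMemory:
    """Shared memory in which every byte carries extra tag bits (hypothetical layout)."""

    def __init__(self, size: int) -> None:
        self.data = bytearray(size)
        self.tags = bytearray(size)  # the "additional bits", one tag per data byte

    def external_load(self, addr: int, image: bytes, tag: int) -> None:
        # Done by external means before execution; in the model, running code
        # only ever calls store() and fetch(), which never touch self.tags.
        for i, b in enumerate(image):
            self.data[addr + i] = b
            self.tags[addr + i] = tag

    def store(self, addr: int, value: int) -> None:
        if self.tags[addr] & IMMUTABLE:
            raise PermissionError(f"store to {addr}: byte is immutable")
        self.data[addr] = value

    def fetch(self, addr: int) -> int:
        if self.tags[addr] & NO_EXEC:
            raise PermissionError(f"fetch from {addr}: byte is not executable")
        return self.data[addr]


# Program bytes: immutable but executable (the "ROM" part).
# Data bytes: writable but never executable (the "RAM" part).
mem = TaggedMemory(8)
mem.external_load(0, b"\xA0\xA1\xA2\xA3", IMMUTABLE)
mem.external_load(4, b"\x00\x00\x00\x00", NO_EXEC)

mem.store(5, 0x42)    # fine: data may change
print(mem.fetch(1))   # 161: program bytes may be executed
```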

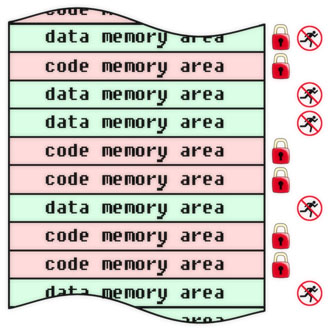

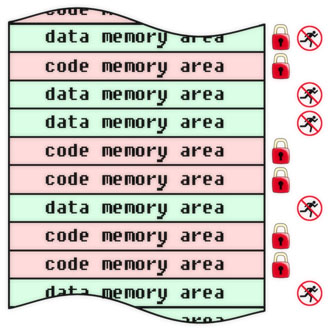

Antivirus protection at the level of logical modules. This level illustrates well the key principle of separating programs and data, regardless of the specific mechanism used to implement it.

Antivirus protection at the level of memory areas. The physical memory device does not matter: it can be RAM, flash memory, disk storage, or anything else.
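At the level of memory areas, the flags are attached to address ranges rather than to individual bytes, much as a memory protection unit does. The Python sketch below uses a hypothetical region map that is assumed to be fixed by external means; the base addresses and sizes are invented for illustration, and the same check applies whether the addresses refer to RAM, flash, or disk blocks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    base: int
    size: int
    immutable: bool
    no_exec: bool

    def contains(self, addr: int) -> bool:
        return self.base <= addr < self.base + self.size


# Hypothetical region map, set by external means (e.g. at manufacture);
# the addresses and sizes are arbitrary illustration values.
REGIONS = (
    Region(base=0x0000, size=0x4000, immutable=True, no_exec=False),  # program area
    Region(base=0x4000, size=0x4000, immutable=False, no_exec=True),  # data area
)


def check(addr: int, op: str) -> bool:
    """Return True if operation op ('write' or 'exec') is allowed at addr."""
    if op not in ("write", "exec"):
        raise ValueError(f"unknown operation {op!r}")
    for r in REGIONS:
        if r.contains(addr):
            return not r.immutable if op == "write" else not r.no_exec
    return False  # addresses outside every region are denied by default


print(check(0x0100, "exec"), check(0x0100, "write"))  # True False
print(check(0x4100, "write"), check(0x4100, "exec"))  # True False
```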

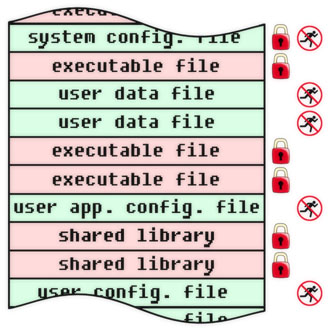

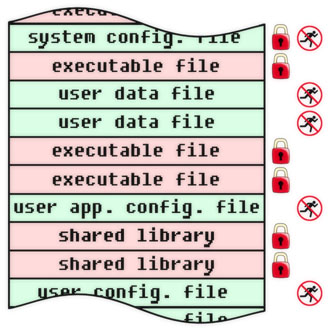

Antivirus protection at the file level. This level is very transparent and convenient to control.
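A file-level flag implemented "conditionally" (violations are tracked rather than prevented) can be sketched as an integrity baseline. This is a minimal Python sketch, assuming the baseline is stored somewhere the monitored code cannot write; read-only file attributes alone would be a software flag that privileged code can change. The directory and file names are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def file_digest(path: Path) -> str:
    """SHA-256 of a file's contents, read in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def take_baseline(root: Path) -> dict[str, str]:
    """Record a reference digest for every regular file under root."""
    return {p.relative_to(root).as_posix(): file_digest(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def find_violations(root: Path, baseline: dict[str, str]) -> dict[str, list[str]]:
    """Compare the current state of root against the baseline."""
    current = take_baseline(root)
    return {
        "modified": sorted(k for k in baseline.keys() & current.keys()
                           if baseline[k] != current[k]),
        "removed": sorted(baseline.keys() - current.keys()),
        "added": sorted(current.keys() - baseline.keys()),
    }


# Demonstration in a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "app.bin").write_bytes(b"\x01\x02\x03")
    (root / "lib.bin").write_bytes(b"\x04\x05")
    baseline = take_baseline(root)

    (root / "app.bin").write_bytes(b"\x01\x02\x07")  # one byte patched
    (root / "dropper.bin").write_bytes(b"\xff")      # a new file appears
    report = find_violations(root, baseline)
    print(report)
    # {'modified': ['app.bin'], 'removed': [], 'added': ['dropper.bin']}
```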

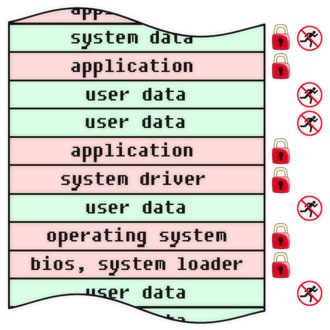

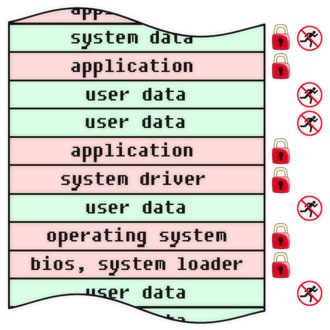

Antivirus protection at the level of functionally complete software products. Control at this level is the key to the long-term reliability of an organization’s computing infrastructure.
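Fixing a functionally complete product can reuse the file-level idea while treating the product as a single object. A minimal Python sketch, assuming the product is available as a mapping from relative paths to contents (the file names and byte contents below are placeholders): one reference digest covers every file, so a changed bit anywhere, or an added or removed file, is detected.

```python
import hashlib


def package_digest(files: dict[str, bytes]) -> str:
    """One digest for a whole product given as {relative path: content}.

    Each entry is framed as (path length, path, content length, content), so
    different file sets cannot yield the same byte stream by shifting boundaries.
    """
    h = hashlib.sha256()
    for name in sorted(files):  # order-independent: entries are sorted by path
        encoded = name.encode("utf-8")
        content = files[name]
        h.update(len(encoded).to_bytes(8, "big"))
        h.update(encoded)
        h.update(len(content).to_bytes(8, "big"))
        h.update(content)
    return h.hexdigest()


release = {
    "bin/app": b"placeholder executable",
    "lib/core.so": b"placeholder library",
    "share/help.txt": b"placeholder help text",
}
reference = package_digest(release)  # recorded once, kept out of the product's reach

tampered = {**release, "lib/core.so": b"placeholder librarY"}
print(package_digest(release) == reference)   # True
print(package_digest(tampered) == reference)  # False
```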

Note that an antivirus program based on heuristic analysis (analysis of the nature and behavior of information objects) is a special case of a system that monitors information objects at different levels of a computer and fixes some of them according to specified rules. It is a useful tool in the arsenal of an information security specialist, but not the only one.
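The general scheme "monitor objects, fix some of them according to rules" can be sketched as a tiny policy engine. In this Python sketch, deliberately simple static rules stand in for real heuristics (actual heuristic analyzers are far more elaborate); the rule set is our own assumption, although the magic numbers themselves are the standard ELF, MZ/PE and shebang headers.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Item:
    name: str
    content: bytes
    immutable: bool = False


Rule = tuple[str, Callable[[Item], bool]]

# Hypothetical rules: fix anything that looks like executable code.
RULES: list[Rule] = [
    ("ELF executable", lambda o: o.content.startswith(b"\x7fELF")),
    ("MZ/PE executable", lambda o: o.content.startswith(b"MZ")),
    ("script with shebang", lambda o: o.content.startswith(b"#!")),
]


def apply_rules(items: list[Item], rules: list[Rule]) -> list[str]:
    """Set the immutability flag on items matching a rule; return a report."""
    report = []
    for item in items:
        for desc, matches in rules:
            if matches(item):
                item.immutable = True
                report.append(f"{item.name}: fixed ({desc})")
                break  # one matching rule is enough
    return report


items = [
    Item("tool", b"\x7fELF\x02\x01"),
    Item("notes.txt", b"hello"),
    Item("run.sh", b"#!/bin/sh\n"),
]
for line in apply_rules(items, RULES):
    print(line)
# tool: fixed (ELF executable)
# run.sh: fixed (script with shebang)
```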

To summarize. We see three ways out of the crisis described (partly allegorically) in the first issue of this blog. The most obvious, reliable, and cost-effective way is to reduce the auto-programmability of computing systems. It is difficult but feasible, even for very small organizations and private users. It can protect them not only from the virus threat but also from the threat of their programs and data gradually passing into the hands of new owners over the next few years.

The second, very dangerous way is to reduce the number of owners, up to the point where the bulk of programs and data is concentrated in the hands of a small circle of people who make decisions on all significant issues. Unfortunately, this is the only realistic alternative to the first path.

The third way is to develop a commercially viable technology for creating error-free programs. The feasibility of such a technology is extremely doubtful.

If our readers see other ways out of the crisis, or do not consider these events a crisis at all, we will be glad to hear their opinion. You are welcome to join the discussion.

* * *

Read in the next issue:

What is a computer virus?

Source: https://habr.com/ru/post/122586/
