Is there a silver bullet vulnerability analysis

In the comments on my previous article, amarao asked me a very good question . He asked how exactly, using what rules, we are looking for vulnerabilities in software. I will give a part of this question:

With this question, amarao , voluntarily or involuntarily, draws attention to the problem of objectivity in assessing the vulnerability of software, whether this assessment depends, or does not depend, on the expert conducting it. The question is very important, and therefore often arises both among security specialists and our clients.

Indeed, if we are not able to objectively assess the safety of the products offered to us, how can we choose one of them that best suits our needs? All manufacturers, naturally, will swear that it is their product - the safest ever created. Moreover, each of them, perhaps, will believe in it, and, moreover, to believe with good reason.

It seems that software evaluation by independent companies - for example, certification - can solve this problem. But if there is no objective means of analysis, then how can we check the quality of the assessment itself? Indeed, the evaluation of a software product in this case is in itself a product, a service.

Despite the fact that these questions are discussed quite often, I did not see a definite answer to them. But during my work in this area I have developed certain ideas about the possibilities of modern techniques.

In this article I want to illustrate my next thought: today, there is no vulnerability search algorithm that would guarantee an objective security assessment. Therefore, the credibility of the product can be based only on the reputation of the experts who conducted its assessment, and on the experience of its operation (in fact, on the reputation of hackers who tried to overcome the defense).

All existing methods for finding vulnerabilities can be divided into two categories: formal and expert.

Formal methods for finding vulnerabilities in software are very closely related to formal development methods. They are based on the use of languages with a precisely defined meaning for the specification of the product being designed; mathematical methods for validating the resulting project; automated program code generation. During the validation check, an assessment of possible vulnerabilities also occurs.

Expert methods, as the name implies, are based on the knowledge and experience of evaluators. Despite the fact that, by definition, these methods can hardly be called completely objective, I will start with them.

Expert techniques are based on the fact that information systems are created using more or less well-known patterns. There are architecture patterns, design patterns, code. Developers use known means and methods of protection. Programs are written in well-known programming languages, run under common operating systems, use available libraries, access available services. And the use of each of these patterns opens up the possibility for the emergence of certain vulnerabilities.

So, for example, if the program is written in C, C ++, you can try to find in it an “exploitable” buffer overflow. If the query language to the program is rather complicated, it may be vulnerable to attack using API abuse methods. If the program uses the password to authenticate the user, there is a chance to pick it up. Programs working with DBMS are often vulnerable to SQL injection. This list, as you can guess, is huge.

A more complex system is decomposed into its component parts. Further, these parts and their interaction are analyzed.

So in a system consisting of the server and client parts, it is necessary to analyze the server, the client and the protocol of interaction between them. In this case, it may be useful to separate parts also decompose into components. For example, the server part often consists of a DBMS and an application server. In turn, the application server can be implemented in the form of several processes in the operating system; each process - to use certain libraries.

Actually, the technique is reduced to finding some representation of a program or system in the form of interacting parts. This presentation should allow, based on the historical information we have, to analyze the vulnerability of each part and the vulnerabilities associated with their interaction.

A good example of an expert approach is the method developed and used by Microsoft. The method is described, in particular, in the book “ThreatModeling” [ 1 ]. It uses information flow diagrams to analyze the program architecture, attack trees are used to present the results.

Thus, expert methods are based on the ability of a specialist to competently carry out program decomposition and on his knowledge of the vulnerabilities of various types of programs and protocols.

In my experience, “using mathematics” is sometimes perceived with reverence, almost like a magical effect. Mathematical, formal methods, in the understanding of many, are candidates for the title of "silver bullet", and the results obtained with their use are not perceived critically enough.

Of course, formal methods really allow you to create better software. They have already found their application in areas where increased requirements for reliability and / or safety are imposed on programs. Gradually, the areas of their use are expanding, perhaps those who are reading this article are already using at least elements of such methods, or will use them in the future. I think, over time, the use of formal means will allow both improving the quality of the product received and reducing the costs of its development and support * .

On the other hand, although formal methods provide excellent results, it is necessary to understand their limitations. Everyone who was engaged in the construction and study of mathematical models, will tell you that any model can give no more than what was originally incorporated into it.

Let's take as an example a very simple model of a very simple program that implements a very simple protocol.

')

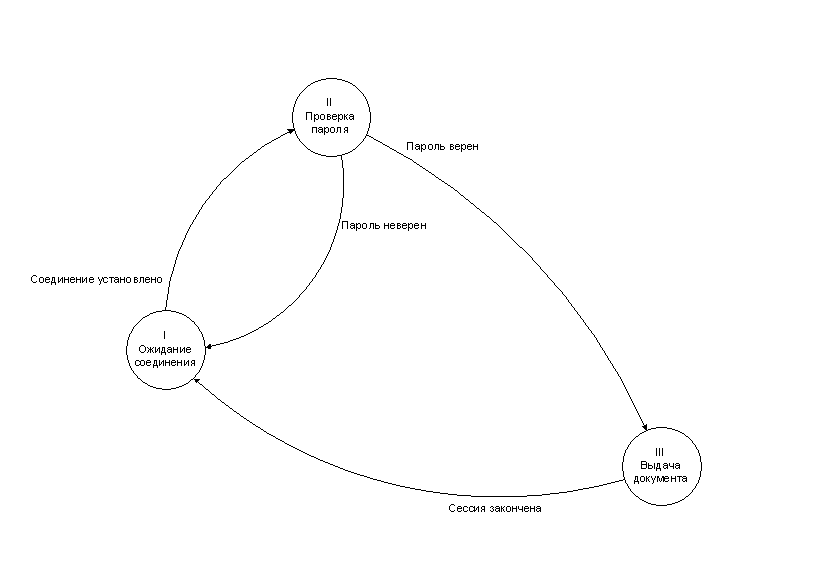

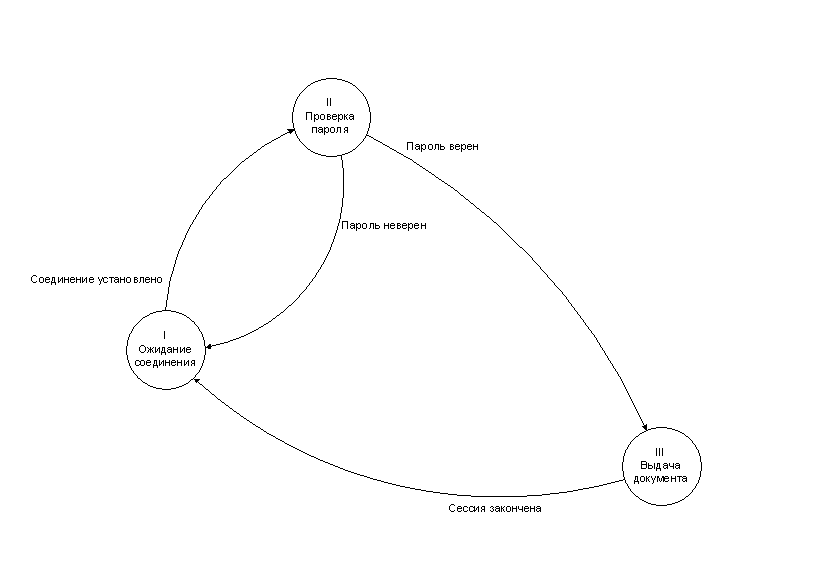

The program, after starting and initialization, should wait for the connection to be established by the user. After the connection is established, the program should receive a username and password from the user. If they turn out to be correct, the program can proceed to transmitting to the user certain information, a document. After the end of the transfer, the program should break the connection, and proceed to waiting for a new connection.

We want to make sure that using this program an unregistered user will not be able to access information.

One of the possible options to do this is to build an automaton model and investigate it.

The scheme of the automaton simulating this program is presented in the following figure.

In this model, only two routes are possible: I → II → III → I and I → II → I

Having considered all possible routes, we come to the conclusion that it is possible to get into the state in which the document is being read only after having successfully passed the authentication. This conclusion is a confirmation of the correctness of our project. Of course, for our simple system, this was obvious; in a more complicated situation, we might have found the “wrong” ways.

If we could find the routes that the system goes through to the document reading state without authentication, this would mean that we found a vulnerability. Moreover, since we would know which requests should be made in each state in order to make the appropriate transition, we would immediately know the method by which the system can be attacked.

It would seem, here it is - an algorithm for analyzing vulnerabilities. We created a model, studied it; At the exit, we got either attack methods or a conclusion about system security. But note that each model can help us find only certain types of vulnerabilities, in this case, these are errors in the sequence of messages the user exchanges with the program. And we are building this or that model, because we expect to find vulnerabilities of a certain type.

In asserting the safety of a program based on the study of a mathematical model, we rely on a number of assumptions about the properties of the simulated program, the environment in which it works.

Let's discuss some of the assumptions we made when building our simple model.

Probably our most obvious suggestion is that the user who submitted the correct password is registered. The model does not consider the problem of interception, selection, theft from the user of his password. In order to evaluate the program from this point of view, additional research would be needed.

There are less obvious assumptions. For example, the fact that the user receiving the document will be the same user who entered the name and password. This excludes from consideration all attacks related to session hijacking.

As we have seen, even when examining a very simple program, we are forced to rely on many assumptions. For more complex systems, this is even more true. Every time we make a conclusion about the security of a software product or system, we rely on a number of assumptions about the limited capabilities of the attacker.

Some of the assumptions can be verified. For example, in some cases we might argue that the session will not be intercepted. This looks quite plausible if the user works with the local keyboard and monitor, and the operating system supports the “trusted path”. Yes, I almost forgot, probably we still have to assume that the session is automatically terminated if the user gets up from the chair ...

Other assumptions cannot be verified. Some of them cannot be verified because we do not know the limits of the physical capabilities of the attacker. For example, its ability to intercept electromagnetic radiation from a computer. We can assume what means the attacker has, we may even have grounds for confidence in this knowledge. But we cannot be sure until the end. Perhaps the situation will change tomorrow in a significant way. It is possible that the attack means are already in relatively free access, but we still do not know about it.

Some logical assumptions also cannot be verified. An example of this assumption is the security of the RSA algorithm. Strict formal evidence of its indestructibility, to my knowledge, does not exist. This means that tomorrow a hacking technique may appear, and all systems based on the use of this algorithm will become unprotected. Moreover, we cannot be absolutely sure that no one yet knows how to attack this algorithm. Of course, there are good reasons to believe that RSA is now safe, but ask yourself the question: if you had a business, at a price of a couple of billions of dollars (which would make it an interesting target), would you put it completely dependent on RSA?

Interestingly, all these assumptions are not related to the fact that we know something — how to solve a particular problem — but to the fact that we do not know something . Every time when we have to check one or another of our statements, the question arises: we cannot solve this problem, and no one knows how to do this, or is the method of solution known, but do we not know it?

Anyone who remembers a school course of mathematics at least a little will easily notice that all mathematical models are based on axioms - unproved within the framework of the model itself. And the difference between a “good” and a “bad” model can only be in the reliability of the assumptions made. It is much more difficult to verify the statement “no one knows how to do this” than the statement “we can do it”. In this we have to trust the experts.

And we come to the paradoxical, it would seem, conclusion about the subjectivity of formal security models, about their dependence on the experience of an expert.

The absence of vulnerabilities cannot be demonstrated, in contrast to how, for example, the functionality of the program or its performance can be demonstrated. We have to trust the expert opinion.

Even if an expert does not know how to attack this system, this does not mean that it is safe. Even if no expert knows this, all the same, we cannot be completely sure that there are no such methods.

At the same time, only an expert - or an expert community - can determine which checks need to be done, what efforts are sufficient to make to search for vulnerabilities in the analyzed program. He can do this on the basis of his knowledge of the methods of attacks on such systems. And confidence in the results of the audit can only be based on the reputation of the experts who conducted it.

And I want to finish within the logic of the entire article.

Can I say that the Big Opening will not be made tomorrow, as a result of which, everything that was said in this article will turn out to be wrong? No I can not.

Am I sure that this Big Opening has not been made yet? I have reason to believe that this has not happened yet. But I can't be completely sure.

* If you are interested in formal approaches in general, without regarding security, then you may be interested in reading the book “Understanding formal methods” [ 2 ].

Another book is Computer Security. Art and Science ”[ 3 ] contains a lot of information about mathematical models in information security, including a separate chapter devoted to formal methods of software security analysis.

1. Frank Swiderski, Window Snyder “Threat Modeling”, Microsoft Press 2004, ISBN 978-0-7356-1991-3

2. Jean Francois Monin, Michal G. Hichey (editor) “Understanding Formal Methods”, Springer-Verlag 2003, ISBN 1-85233-247-6

3. Matt Bishop “Computer Security. Art and Science ”, Addison-Wesley 2003, ISBN 0-201-44099-7

By what algorithms, by what rules? Or is it all on a whim "and I know what mim is!"? In this case, the method does not describe in an even case anything somewhere - I thought, somewhere there is none.

With this question, amarao , voluntarily or involuntarily, draws attention to the problem of objectivity in assessing the vulnerability of software, whether this assessment depends, or does not depend, on the expert conducting it. The question is very important, and therefore often arises both among security specialists and our clients.

Indeed, if we are not able to objectively assess the safety of the products offered to us, how can we choose one of them that best suits our needs? All manufacturers, naturally, will swear that it is their product - the safest ever created. Moreover, each of them, perhaps, will believe in it, and, moreover, to believe with good reason.

It seems that software evaluation by independent companies - for example, certification - can solve this problem. But if there is no objective means of analysis, then how can we check the quality of the assessment itself? Indeed, the evaluation of a software product in this case is in itself a product, a service.

Despite the fact that these questions are discussed quite often, I did not see a definite answer to them. But during my work in this area I have developed certain ideas about the possibilities of modern techniques.

In this article I want to illustrate my next thought: today, there is no vulnerability search algorithm that would guarantee an objective security assessment. Therefore, the credibility of the product can be based only on the reputation of the experts who conducted its assessment, and on the experience of its operation (in fact, on the reputation of hackers who tried to overcome the defense).

All existing methods for finding vulnerabilities can be divided into two categories: formal and expert.

Formal methods for finding vulnerabilities in software are very closely related to formal development methods. They are based on the use of languages with a precisely defined meaning for the specification of the product being designed; mathematical methods for validating the resulting project; automated program code generation. During the validation check, an assessment of possible vulnerabilities also occurs.

Expert methods, as the name implies, are based on the knowledge and experience of evaluators. Despite the fact that, by definition, these methods can hardly be called completely objective, I will start with them.

Expert methods

Expert techniques are based on the fact that information systems are created using more or less well-known patterns. There are architecture patterns, design patterns, code. Developers use known means and methods of protection. Programs are written in well-known programming languages, run under common operating systems, use available libraries, access available services. And the use of each of these patterns opens up the possibility for the emergence of certain vulnerabilities.

So, for example, if the program is written in C, C ++, you can try to find in it an “exploitable” buffer overflow. If the query language to the program is rather complicated, it may be vulnerable to attack using API abuse methods. If the program uses the password to authenticate the user, there is a chance to pick it up. Programs working with DBMS are often vulnerable to SQL injection. This list, as you can guess, is huge.

A more complex system is decomposed into its component parts. Further, these parts and their interaction are analyzed.

So in a system consisting of the server and client parts, it is necessary to analyze the server, the client and the protocol of interaction between them. In this case, it may be useful to separate parts also decompose into components. For example, the server part often consists of a DBMS and an application server. In turn, the application server can be implemented in the form of several processes in the operating system; each process - to use certain libraries.

Actually, the technique is reduced to finding some representation of a program or system in the form of interacting parts. This presentation should allow, based on the historical information we have, to analyze the vulnerability of each part and the vulnerabilities associated with their interaction.

A good example of an expert approach is the method developed and used by Microsoft. The method is described, in particular, in the book “ThreatModeling” [ 1 ]. It uses information flow diagrams to analyze the program architecture, attack trees are used to present the results.

Thus, expert methods are based on the ability of a specialist to competently carry out program decomposition and on his knowledge of the vulnerabilities of various types of programs and protocols.

Formal methods

In my experience, “using mathematics” is sometimes perceived with reverence, almost like a magical effect. Mathematical, formal methods, in the understanding of many, are candidates for the title of "silver bullet", and the results obtained with their use are not perceived critically enough.

Of course, formal methods really allow you to create better software. They have already found their application in areas where increased requirements for reliability and / or safety are imposed on programs. Gradually, the areas of their use are expanding, perhaps those who are reading this article are already using at least elements of such methods, or will use them in the future. I think, over time, the use of formal means will allow both improving the quality of the product received and reducing the costs of its development and support * .

On the other hand, although formal methods provide excellent results, it is necessary to understand their limitations. Everyone who was engaged in the construction and study of mathematical models, will tell you that any model can give no more than what was originally incorporated into it.

Let's take as an example a very simple model of a very simple program that implements a very simple protocol.

')

Simple example

The program, after starting and initialization, should wait for the connection to be established by the user. After the connection is established, the program should receive a username and password from the user. If they turn out to be correct, the program can proceed to transmitting to the user certain information, a document. After the end of the transfer, the program should break the connection, and proceed to waiting for a new connection.

We want to make sure that using this program an unregistered user will not be able to access information.

One of the possible options to do this is to build an automaton model and investigate it.

The scheme of the automaton simulating this program is presented in the following figure.

In this model, only two routes are possible: I → II → III → I and I → II → I

Having considered all possible routes, we come to the conclusion that it is possible to get into the state in which the document is being read only after having successfully passed the authentication. This conclusion is a confirmation of the correctness of our project. Of course, for our simple system, this was obvious; in a more complicated situation, we might have found the “wrong” ways.

If we could find the routes that the system goes through to the document reading state without authentication, this would mean that we found a vulnerability. Moreover, since we would know which requests should be made in each state in order to make the appropriate transition, we would immediately know the method by which the system can be attacked.

It would seem, here it is - an algorithm for analyzing vulnerabilities. We created a model, studied it; At the exit, we got either attack methods or a conclusion about system security. But note that each model can help us find only certain types of vulnerabilities, in this case, these are errors in the sequence of messages the user exchanges with the program. And we are building this or that model, because we expect to find vulnerabilities of a certain type.

In asserting the safety of a program based on the study of a mathematical model, we rely on a number of assumptions about the properties of the simulated program, the environment in which it works.

Assumptions in the model

Let's discuss some of the assumptions we made when building our simple model.

Probably our most obvious suggestion is that the user who submitted the correct password is registered. The model does not consider the problem of interception, selection, theft from the user of his password. In order to evaluate the program from this point of view, additional research would be needed.

There are less obvious assumptions. For example, the fact that the user receiving the document will be the same user who entered the name and password. This excludes from consideration all attacks related to session hijacking.

As we have seen, even when examining a very simple program, we are forced to rely on many assumptions. For more complex systems, this is even more true. Every time we make a conclusion about the security of a software product or system, we rely on a number of assumptions about the limited capabilities of the attacker.

Some of the assumptions can be verified. For example, in some cases we might argue that the session will not be intercepted. This looks quite plausible if the user works with the local keyboard and monitor, and the operating system supports the “trusted path”. Yes, I almost forgot, probably we still have to assume that the session is automatically terminated if the user gets up from the chair ...

Other assumptions cannot be verified. Some of them cannot be verified because we do not know the limits of the physical capabilities of the attacker. For example, its ability to intercept electromagnetic radiation from a computer. We can assume what means the attacker has, we may even have grounds for confidence in this knowledge. But we cannot be sure until the end. Perhaps the situation will change tomorrow in a significant way. It is possible that the attack means are already in relatively free access, but we still do not know about it.

Some logical assumptions also cannot be verified. An example of this assumption is the security of the RSA algorithm. Strict formal evidence of its indestructibility, to my knowledge, does not exist. This means that tomorrow a hacking technique may appear, and all systems based on the use of this algorithm will become unprotected. Moreover, we cannot be absolutely sure that no one yet knows how to attack this algorithm. Of course, there are good reasons to believe that RSA is now safe, but ask yourself the question: if you had a business, at a price of a couple of billions of dollars (which would make it an interesting target), would you put it completely dependent on RSA?

Interestingly, all these assumptions are not related to the fact that we know something — how to solve a particular problem — but to the fact that we do not know something . Every time when we have to check one or another of our statements, the question arises: we cannot solve this problem, and no one knows how to do this, or is the method of solution known, but do we not know it?

Anyone who remembers a school course of mathematics at least a little will easily notice that all mathematical models are based on axioms - unproved within the framework of the model itself. And the difference between a “good” and a “bad” model can only be in the reliability of the assumptions made. It is much more difficult to verify the statement “no one knows how to do this” than the statement “we can do it”. In this we have to trust the experts.

And we come to the paradoxical, it would seem, conclusion about the subjectivity of formal security models, about their dependence on the experience of an expert.

findings

The absence of vulnerabilities cannot be demonstrated, in contrast to how, for example, the functionality of the program or its performance can be demonstrated. We have to trust the expert opinion.

Even if an expert does not know how to attack this system, this does not mean that it is safe. Even if no expert knows this, all the same, we cannot be completely sure that there are no such methods.

At the same time, only an expert - or an expert community - can determine which checks need to be done, what efforts are sufficient to make to search for vulnerabilities in the analyzed program. He can do this on the basis of his knowledge of the methods of attacks on such systems. And confidence in the results of the audit can only be based on the reputation of the experts who conducted it.

And I want to finish within the logic of the entire article.

Can I say that the Big Opening will not be made tomorrow, as a result of which, everything that was said in this article will turn out to be wrong? No I can not.

Am I sure that this Big Opening has not been made yet? I have reason to believe that this has not happened yet. But I can't be completely sure.

Note

* If you are interested in formal approaches in general, without regarding security, then you may be interested in reading the book “Understanding formal methods” [ 2 ].

Another book is Computer Security. Art and Science ”[ 3 ] contains a lot of information about mathematical models in information security, including a separate chapter devoted to formal methods of software security analysis.

Literature

1. Frank Swiderski, Window Snyder “Threat Modeling”, Microsoft Press 2004, ISBN 978-0-7356-1991-3

2. Jean Francois Monin, Michal G. Hichey (editor) “Understanding Formal Methods”, Springer-Verlag 2003, ISBN 1-85233-247-6

3. Matt Bishop “Computer Security. Art and Science ”, Addison-Wesley 2003, ISBN 0-201-44099-7

Source: https://habr.com/ru/post/122132/

All Articles