Competitive access to relational databases

The issues of concurrency in computer computing are very complex! The reasons for the great complexity are the huge amount of details that need to be considered when developing parallel programs. In programming, there are already a large number of details that create grounds for errors; concurrency adds more.

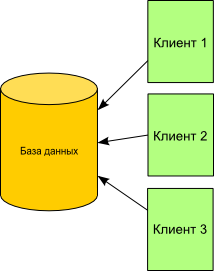

The issues of concurrency in computer computing are very complex! The reasons for the great complexity are the huge amount of details that need to be considered when developing parallel programs. In programming, there are already a large number of details that create grounds for errors; concurrency adds more.The issues of competitive access to relational databases are confronted practically by any developers of application software and not only by them. The result of this demand for this area is the presence of a large number of created architectural patterns. This allows you to successfully cope with the great complexity of developing such programs. Below we will talk about these recipes, as well as the mechanisms on which their implementation is based. The narration will be illustrated with examples of Java code, but most of the material is not language bound. The purpose of the article is to describe the problems of competitive access to relational databases, as an introduction to the subject, rather than a full coverage of the topic.

Competitive Access Issues

Consider the situation. In a certain accounting system, it is necessary to reflect the change in the remnants of the goods when carrying out documents. To make the discussion more substantive, I will provide it with examples working on PostgreSQL. Here is the database structure for our accounting system and test data.

')

CREATE TABLE stocks ( id integer PRIMARY KEY, name varchar(256) NOT NULL, quantity decimal(10,2) NOT NULL DEFAULT 0.0 ); CREATE TABLE documents ( id integer PRIMARY KEY, quantity decimal(10,2) NOT NULL DEFAULT 0.0, processed boolean NOT NULL DEFAULT false, stock integer REFERENCES stocks ); INSERT INTO stocks (id, name, quantity) VALUES (1, '', 56.4), (2, '', 26.8); INSERT INTO documents (id, quantity, stock) VALUES (1, 15.6, 1), (2, 26.1, 1); Suppose our document is responsible for writing off goods from the warehouse. The application code loads the document and residual data, performs calculations, and saves the data to the database. If this action is performed by one application, then everything is in order.

SELECT quantity, processed, stock FROM documents WHERE id = 1; quantity | processed | stock ----------+-----------+------- 15.60 | f | 1 SELECT name, quantity FROM stocks WHERE id = 1; name | quantity ------+---------- | 56.40 Loaded document data with ID 1 and the remainder corresponding to it. The new value of the remainder is calculated by writing off 56.40 - 15.60 = 40.80 and the data is saved back with a note about the processing of the document.

UPDATE stocks SET quantity = 40.80 WHERE id = 1; UPDATE documents SET processed = true WHERE id = 1; But single-user systems have a long history. Now it is impossible to imagine a business application to work with which users must line up. Therefore, let's consider the situation when two documents are processed simultaneously. As in the first case, applications read data from the database.

first application

SELECT quantity, processed, stock FROM documents WHERE id = 1; quantity | processed | stock ----------+-----------+------- 15.60 | f | 1 SELECT name, quantity FROM stocks WHERE id = 1; name | quantity ------+---------- | 56.40 second application

SELECT quantity, processed, stock FROM documents WHERE id = 2; quantity | processed | stock ----------+-----------+------- 26.10 | f | 1 SELECT name, quantity FROM stocks WHERE id = 1; name | quantity ------+---------- | 56.40 And then there is an obvious problem: the first application calculates 56.40 - 15.60 = 40.80, and the second 56.40 - 26.10 = 30.30. And any of these results will be written into the database. The one for which the last update request will be executed. This is clearly not the behavior that the user expects to see. Problems of this type are well known in parallel programming and are called race conditions (race conditions). This case is a compound action under the general name read-modify-write (read-modify-write).

If we checked the document for processing (the processed field), and in a real application we would have to do this, a situation could occur in which, despite the verification, the document could be processed twice. All the same condition of the race, but a different type of operation check-then-act (check-then-act). In addition, in the intervals between update requests, the database is in an inconsistent state, that is, the action on the document has already been executed, but the document is not marked as processed.

As a result, we have two of the most common problems that manifest themselves with incorrect competitive access to databases. This is a loss of changes occurring in the event of a race read-modify-write. And the inconsistent state of the data in the interval between update requests.

DBMS mechanisms

In order to discuss specific recipes for competitive access to relational databases, you need to be familiar with the lower-level mechanisms that DBMS and programming tools provide. These are the technical elements on which the implementation is based. The main such elements are transactions and locks. About them and will be discussed further.

Transactions and isolation levels

A transaction is a single sequence of operations for interacting with a database, which is perceived as a single whole, which has the ability to roll back or confirm the completion of operations. A transaction may be a means of resolving the problem of consistency of changes, as well as to prevent the situation of racing. The suitability of transactions to solve the first or both problems depends on the isolation level. Isolation is a property that determines how / when changes made by a single operation will be visible to a competitive operation.

The SQL standard defines four isolation levels: Read uncommitted, Read committed, Repeatable read, Serializable. All of them differ in the minimum acceptable insulation level. The basic properties of these levels should be clear from the title. Different DBMSs can implement support for isolation types in different ways. The main thing that each implementation does not allow isolation, less strict than prescribed for the level. For example, PostgreSQL supports only two levels of isolation, Read committed and Serializable, internally. Consider using these isolation levels to solve the problems described in the examples above.

Using the Read committed isolation level (used by default in PostgreSQL) allows us to solve the inconsistent data state problem. This is achieved due to the fact that all changes made within the transaction become visible to competitive transactions after the current one.

BEGIN; SELECT quantity, processed, stock FROM documents WHERE id = 1; SELECT name, quantity FROM stocks WHERE id = 1; -- UPDATE stocks SET quantity = 40.80 WHERE id = 1; UPDATE documents SET processed = true WHERE id = 1; COMMIT; But such a transaction does not solve the problem with the state of races in the competitive execution of operations. In such situations, another level of isolation can help - Serializable. This is a possible solution, but it is not the only one. The behavior of the implementation of the Serializable isolation level in PostgreSQL does not fully match the name. That is, all transactions with a Serializable isolation level are not performed sequentially. Instead, when committing a transaction, the conflict is checked when the data is changed and if the data has already been changed by a competitive transaction, the current transaction fails.

BEGIN ISOLATION LEVEL SERIALIZABLE; SELECT quantity, processed, stock FROM documents WHERE id = 1; SELECT name, quantity FROM stocks WHERE id = 1; -- UPDATE stocks SET quantity = 40.80 WHERE id = 1; UPDATE documents SET processed = true WHERE id = 1; COMMIT; The above transaction solves the problem with the race situation and data consistency. A distinctive feature of this approach is that the code performing this transaction learns about success only after the completion of the transaction. And as a result of failure, it will be necessary to repeat all actions and calculations until the transaction is completed successfully or the decision is made to refuse to perform the action. This behavior is unsatisfactory with a large competitive load, because a large amount of resources will be consumed to perform repetitions. This behavior is called optimistic concurrency control.

The examples above are based on the assumption that business logic is fully implemented in the application code, and the database acts only as a repository.

An important detail in the use of transactions affecting performance is their length. Transactions should not be too long; the faster they are made, the more efficient the system will be. This note may not be critical for code that edits a document in an application with a small number of users. But it is critical, for example, in a web service situation that interacts with a database and is used by a large number of clients.

Locks

In parallel programming, a blocking mechanism is used to control execution threads. Locks allow you to serialize access to specific areas. DBMS also supports locking mechanisms to control access to data. Consider the possibilities of this mechanism on the example of PostgreSQL.

PostgreSQL supports many types of locks. Their main distinguishing feature is the set of types of locks with which the current type of lock conflicts. Conflict means that the current lock cannot be captured together with the locks of any of the conflicting types. In addition, locks are divided into explicit and implicit. Explicit locks are those that are executed in the query using the lock keyword and query modifiers for update or for share, in other words, specified by the user. Implicit locks are those that are captured while executing various queries (select, update, insert, alter, and others). PostgerSQL also supports a separate type of lock called advisory lock.

Locks are captured from the time the request is executed to the end of the current transaction. For example, in our hypothetical accounting system, it was possible to capture an exclusive lock on the row of the stocks table corresponding to the balance with which the operation is performed and on the row of the documents table, thereby ensuring that only the current transaction has access to this data.

BEGIN; SELECT quantity, processed, stock FROM documents WHERE id = 1 FOR UPDATE; SELECT name, quantity FROM stocks WHERE id = 1 FOR UPDATE; -- UPDATE stocks SET quantity = 40.80 WHERE id = 1; UPDATE documents SET processed = true WHERE id = 1; COMMIT; In the code above, locks are captured on lines in the documents and stocks tables. In this case, the for update keywords are added to the select data request. This is an explicit blocking of the update string. Locking a whole table in this case is not effective. In general, it is necessary to block the table only for large-scale operations with data in it, but these are quite rare cases. Otherwise, you get a performance problem with competitive access, because all calls to it are serialized.

When attempting to lock a lock on an area, the lock on which has already been captured, blocking the request (by default) until the lock is released, or in the case of a dead lock, an error message is returned. It is possible to set the interval for returning control from a blocked request, or immediately receive a message about the impossibility of blocking the lock (nowait).

Types of competitive control

Competitive control is the rules by which parallel execution threads interact at competitive sites. Competitive controls should ensure the correctness of the calculated result. An additional goal is to get the result as quickly as possible in a particular case. Competitive control is usually divided into types according to the time of conflict handling: optimistic (optimistic), pessimistic (pessimistic) and partially optimistic (semi-optimistic).

Optimistic competitive control involves checking for a possible conflict when committing an action. For example, a user requests some data from the repository and modifies it, after which he tries to save his changes. If the current version of the data stored in the repository matches the version of the data on the basis of which the changes occurred, then there is no conflict and the data can be saved. In the opposite case, a conflict arises, which is processed either by repeating the actions or refusing them. Optimistic competitive control provides good performance with relatively little competition for competitive access.

Pessimistic competitive control involves checking for a possible conflict before performing an action. That is, competitive execution threads are serialized on the protected area. This provides greater performance with high competition for competitive access.

Partially optimistic is a mixed type in which both approaches are used simultaneously.

Architectural patterns

At this point, the reader should have a general idea of the problems with competitive access and the mechanisms for DBMS to solve them. This section focuses on architectural patterns that present a solution to the problems of competitive access to databases. Below is not a complete description, only a general idea and an example. For a full description refer to the links at the bottom of the article.

When working with a DBMS, the data is loaded into the application's memory, actions are performed on or over them, and the result of the actions, as a rule, should be saved back. All this time, it is necessary to store information about changes over the data in order to know what has changed. In addition, you must store information about the created and deleted objects. Of course, you can reflect all changes in the database as soon as they occur. In this case, the following problems arise: a system transaction takes a very long time, which leads to conflicts during competitive access, since locks over changes in the database are held for the entire transaction; interaction with the database is divided into many small parts, which is also ineffective. To solve data change tracking problems, the Unit of Work pattern is described. This pattern describes an object that tracks all changes and can apply them to the database in order to reconcile its state with the changes made.

public class UnitOfWork { private List<DomainObject> newObjects; private List<DomainObject> updatedObjects; private List<DomainObject> deletedObjects; /** * * @return */ public DomainObject create() { DomainObject domainObject = new DomainObject(); newObjects.add(domainObject); return domainObject; } /** * * @param domainObject */ public void update(DomainObject domainObject) { updatedObjects.add(domainObject); } /** * * @param domainObject */ public void remove(DomainObject domainObject) { deletedObjects.add(domainObject); } /** * */ public void commit() { // SQL INSERT insert(newObjects); // SQL UPDATE udpate(updatedObjects); // SQL DELETE delete(deletedObjects); } // insert, update, delete } Above is the simplest implementation of the Unit of Work pattern. DomainObject objects can be registered in the UnitOfWork object when they are acted upon. After completing a business transaction, the application calls the commit method on the UnitOfWork object.

A business transaction usually takes a long time. As a rule, it extends to several system transactions. The reason for this has already been mentioned above - problems with a long lock seizure. It follows from this that it is necessary to implement its own synchronization mechanism, which will work on top of several system transactions, since the DBMS synchronization mechanisms work only within one system transaction. To solve this problem, two patterns of Optimistic Offline Lock and Pessimistic Offline Lock are described, which implement optimistic and pessimistic types of competitive control.

Suppose the user opens the edit form, he works on the document for several minutes and saves the result. This is a typical example of a business transaction.

Consider the situation with optimistic competitive control. In case of detection of a conflict when saving the result of editing, the user will be notified with the changed data and a dialogue with the choice of further actions. The Optimistic Offline Lock pattern describes the change control mechanism, which, in general, relies on the data versioning mechanism. Each time a change is saved, a comparison is made of the version of the data in the database and the version based on which the changes were made. If these versions are equal, then the current version changes and the result is saved, otherwise a conflict occurs and it is processed either by repeating actions or canceling.

public class DomainObject { private Integer id; private Integer version; private Object data; // } public class UnitOfWork { // , public void update(List<DomainObject> updatedObjects) throws SQLException { PreparedStatement ps = connection.prepareStatement( "update domain_objects set data = ?, version = version + 1 " + "where id = ? and version = ?" ); for (DomainObject domainObject: updatedObjects) { ps.setObject(1, domainObject.getData()); ps.setInt(2, domainObject.getId()); ps.setInt(3, domainObject.getVersion()); if (ps.executeUpdate() == 0) { throw new RuntimeException(" "); } } } } The above code represents the implementation of the update method with the Optimistic Offline Lock pattern described above. In this implementation, the list of changed objects is saved with version checking. If the version has changed, then no entry in the table will be updated and in this example an exception is thrown. This exception corresponds to a conflict in which a record has already been updated by a competitive thread of execution.

In the case of pessimistic competitive control, the implementation of the Pessimistic Offline Lock pattern is presented below.

class DomainObject { private Integer id; private Boolean blocked; private Object data; // } public class UnitOfWork { // , public void update(List<DomainObject> updatedObjects) throws SQLException { PreparedStatement updateStatement = connection.prepareStatement( "update domain_objects set data = ?, blocked = false " + "where id = ? and blocked = true" ); for (DomainObject domainObject: updatedObjects) { updateStatement.setObject(1, domainObject.getData()); updateStatement.setInt(2, domainObject.getId()); updateStatement.executeUpdate(); } } public DomainObject get(Integer id) throws SQLException { PreparedStatement updateStatement = connection.prepareStatement( "update domain_objects set blocked = true " + "where id = ? and blocked = false" ); updateStatement.setInt(1, id); if (updateStatement.executeUpdate() == 1) { PreparedStatement selectStatement = connection.prepareStatement( "select * from domain_objects where id = ?" ); selectStatement.setInt(1, id); ResultSet result = selectStatement.executeQuery(); //result // DomainObject result return new DomainObject(); } else { throw new RuntimeException(" "); } } } In the presented code, when receiving data from the database, a lock is set on the blocked field. If it is already set, an exception is thrown, otherwise the result is returned. Then, when the object is updated, the lock is reset. This implementation will work with all levels of transaction isolation. Provided that the data will be saved after lock seizure.

The presented implementations only illustrate the description and are not intended for use in real-world applications!

Business Level Locking Policy

Blocking policies are rules that regulate competitive access to data. This paragraph deals with the policy of locking at the business level, and not at the level of implementing queries to the DBMS and platform synchronization mechanisms. It is necessary to take into account that the blocking policy must be related to business logic. And you can not leave the issue with competitive access for later. For example, in a system where the field of application indicates that a certain document must be edited individually, it is necessary that the business logic has knowledge of this requirement. , , , . , , . , , , . . .

(en)

Patterns of Enterprise Application Architecture (Martin Fowler, David Rice, Matthew Foemmel, Edward Hieatt, Robert Mee, Randy Stafford)

PostgreSQL Concurrency Control

Concurrency control

Isolation level

Source: https://habr.com/ru/post/121858/

All Articles