Site optimization. Technological foundation. Part 2

In the previous article, we described what needs to be done to ensure successful promotion of a website in search engines by meeting the basic requirements of search engine optimization.

Today I want to draw your attention to the technological features that must be observed when developing a site. Check your resources for compliance: it will help you, just as it helps us. We have tried to reduce everything to a set of tips that are easy and convenient to work with.

So, besides the factors of your site that are visible to the naked eye (structure, texts, design), there are a number of technical measures needed to configure the site properly for better indexing by search engines. We list the most important of them.


Defining the main host. Excluding mirrors

Determine the main address of the site, for example www.site.ru. Then configure the server so that a request to the address without www returns a 301 response and redirects to the main address of the site (with www).

You also need to keep synonyms of the main page (www.site.ru/index.php and similar addresses) out of the search index: although their content is identical, for a search engine they are different pages, and which of them it considers the most important only the search engine knows.
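Redirects like these are normally configured in the web server itself. Purely to illustrate the logic, here is a minimal Python sketch that decides whether a requested URL is a non-canonical synonym and, if so, returns a 301 response with the address on the main host. MAIN_HOST, BARE_HOST, INDEX_FILES and canonical_redirect are hypothetical names chosen for this example, and site.ru stands for your own domain.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit, urlunsplit

# Hypothetical settings: the main host and its bare-domain mirror.
MAIN_HOST = "www.site.ru"
BARE_HOST = "site.ru"
# Hypothetical list of index-file names that duplicate their directory URL.
INDEX_FILES = ("index.php", "index.html")


def canonical_redirect(url: str) -> Optional[Tuple[int, str]]:
    """Return (301, canonical_url) for a non-canonical synonym, or None if url is canonical."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # note: this sketch ignores any explicit port
    path = parts.path or "/"
    changed = False

    # Requests to the bare domain go to the main (www) host.
    if host == BARE_HOST:
        host = MAIN_HOST
        changed = True

    # /index.php, /katalog/index.php, ... collapse onto their directory URL.
    for name in INDEX_FILES:
        if path.endswith("/" + name):
            path = path[: -len(name)]
            changed = True
            break

    if not changed:
        return None
    return 301, urlunsplit((parts.scheme, host, path, parts.query, ""))


print(canonical_redirect("http://site.ru/index.php"))    # (301, 'http://www.site.ru/')
print(canonical_redirect("http://www.site.ru/katalog/"))  # None
```

Because every synonym is answered with a single permanent redirect straight to the main address, search robots see only one URL per page.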

Make sure you have no other domains that fully copy the contents of the main site. For each such domain, be sure to specify the main host in robots.txt with the Host directive (a Yandex extension), for example:

User-Agent: *
Host: www.trubmaster.ru

Correct server responses

The server responses most commonly used in website promotion are 301 (the page has moved permanently to another address) and 404 (no page exists at this address).

The 301 response is used to set the main host (see above), and also to redirect users and search robots from pages whose address has changed to the new address. For example, if you have redesigned the site and all pages received new addresses, the old addresses should return a 301 response pointing to the new ones. This minimizes, for example, the loss of the link weight that a page accumulated at its old address.

The 404 response should be returned for non-existent pages. Such a page can still display navigation links that suggest visiting other important sections of the site instead of the missing one. Returning the correct response ensures that non-existent pages do not get into the search engine index.
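A minimal sketch of this 301/404 logic, assuming the site keeps a set of existing addresses and a map from old addresses to new ones after a redesign. EXISTING_PAGES, MOVED_PAGES and response_for are hypothetical names; on a real site this is usually handled by the web server or CMS.

```python
from typing import Dict, Optional, Set, Tuple

# Hypothetical data: addresses that exist now, and old -> new addresses after a redesign.
EXISTING_PAGES: Set[str] = {"/", "/holodilniki/", "/holodilniki/zanussi"}
MOVED_PAGES: Dict[str, str] = {"/page.php?id=12313&brid=1536": "/holodilniki/zanussi"}


def response_for(target: str) -> Tuple[int, Optional[str]]:
    """Return (status code, Location header or None) for a requested path."""
    if target in EXISTING_PAGES:
        return 200, None
    if target in MOVED_PAGES:
        # Permanent move: point robots and users to the new address.
        return 301, MOVED_PAGES[target]
    # Everything else is a genuine 404, even if the body shows helpful links.
    return 404, None
```

The key point is that the helpful "page not found" screen must still be sent with status 404; if it goes out with status 200, search engines may treat it as an ordinary page and index it.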

Setting correct addresses (URLs)

Each page should have a unique URL. Modern search engines recognize the words contained in an address well, including Russian words transliterated into the Latin alphabet, and take them into account when ranking sites in search results. Compose URLs that make both the document's place in the site structure and its content clear. Such addresses are called human-readable URLs.

An example of a bad URL: www.site.ru/page.php?id=12313&brid=1536

An example of a good URL: www.site.ru/holodilniki/zanussi
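One way to produce such addresses automatically is to transliterate section names and join them with slashes. The sketch below assumes a simple Cyrillic-to-Latin table (one of several schemes in use; this one reproduces the holodilniki example above). TRANSLIT, slugify and page_url are hypothetical names.

```python
import re

# Assumed Cyrillic-to-Latin table (one common simplified scheme; others exist).
TRANSLIT = {
    "а": "a", "б": "b", "в": "v", "г": "g", "д": "d", "е": "e", "ё": "e",
    "ж": "zh", "з": "z", "и": "i", "й": "y", "к": "k", "л": "l", "м": "m",
    "н": "n", "о": "o", "п": "p", "р": "r", "с": "s", "т": "t", "у": "u",
    "ф": "f", "х": "h", "ц": "ts", "ч": "ch", "ш": "sh", "щ": "sch",
    "ъ": "", "ы": "y", "ь": "", "э": "e", "ю": "yu", "я": "ya",
}


def slugify(name: str) -> str:
    """Turn a section or product name into a lowercase Latin URL segment."""
    latin = "".join(TRANSLIT.get(ch, ch) for ch in name.lower())
    # Collapse every run of characters other than a-z and 0-9 into one hyphen.
    return re.sub(r"[^a-z0-9]+", "-", latin).strip("-")


def page_url(*sections: str) -> str:
    """Build a hierarchical address such as /holodilniki/zanussi."""
    return "/" + "/".join(slugify(s) for s in sections)


print(page_url("Холодильники", "Zanussi"))  # /holodilniki/zanussi
```

Building the path from the section hierarchy keeps the document's place in the site structure visible in the address itself.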

Robots.txt

The robots.txt file lets you tell search robots which sections of the site should not be indexed. Why is this important? Very often, especially on sites with product catalogs (online stores), some pages duplicate the same content. These can be pages produced by sorting or by applying various filters. You should also close from indexing the site's search results pages and the pages that appear after the visitor changes the number of items displayed per page.

Each such page often has its own unique URL, while the content generated on it duplicates information already published on the main catalog pages. As a result, on sites with a large number of catalog items, hundreds or thousands of completely unnecessary pages get into the index; they only “confuse” the search robot when it selects relevant pages and slow down its collection of information about all the pages of the site.

Finally, robots.txt should prohibit indexing of the various service folders and the folders of the content management system.
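Before publishing robots.txt, it is worth checking that its rules block exactly the URLs you intend. Python's standard urllib.robotparser module can do a quick check; the sketch below feeds it the Disallow rules from this section's example (Host is left out because it is a Yandex extension this parser does not use). robotparser implements basic prefix matching, so it will not necessarily behave exactly like the Yandex or Google parsers.

```python
from urllib.robotparser import RobotFileParser

# Rules from the example file, written without blank lines inside the group:
# a blank line ends the current record for this parser.
ROBOTS_TXT = """\
User-Agent: *
Disallow: /katalog/tv/cost-sort/
Disallow: /katalog/tv/name-sort/
Disallow: /katalog/tv/50/
Disallow: /admin/
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ("/katalog/tv/", "/katalog/tv/cost-sort/", "/admin/login", "/search/?q=tv"):
    allowed = parser.can_fetch("*", "http://www.site.ru" + path)
    print(path, "allowed" if allowed else "blocked")
```

Here the main catalog page stays open while its sorted copies, the admin area and the site search results are blocked.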

As a result, you should have a file like this:

User-Agent: *
Host: www.site.ru
Sitemap: http://www.site.ru/sitemap.xml
Disallow: /katalog/tv/cost-sort/
Disallow: /katalog/tv/name-sort/
Disallow: /katalog/tv/50/
Disallow: /admin/
Disallow: /search/

Sitemap

Another very useful tool that helps search engines index your site correctly is a sitemap.xml file. It lists every page of your site together with recommendations to the search robot on how to index it.

The principles of working with the Sitemap file are described in detail in the Yandex Help: help.yandex.ru/webmaster/?id=1007070.

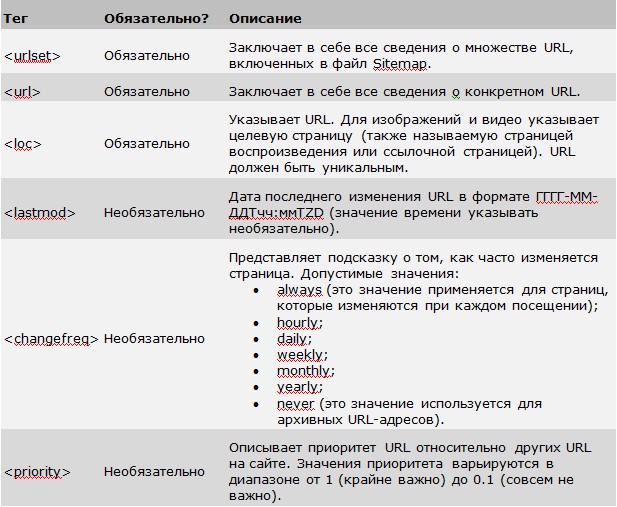

The table explains the tags required to list simple URLs in the Sitemap file.

A few small tips for working with this file:

- The link to the file (or files, if there are several) should be listed in robots.txt;

- The file should contain the actual date of each page's last update (for example, updated when the Save button is pressed; for the main page and catalog pages, an update of the catalog can additionally serve as a signal to change the date). The modification date must also be updated for pages whose content is generated automatically: for example, the news feed page changes whenever a news item is added or an existing one is edited;

- Only pages that actually exist should get into the file; exclude duplicates of the same page under different addresses (including “technical” ones) as well as repeated entries of identical URLs in different parts of the file.
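A sketch of generating the file along these lines, assuming the CMS can supply each page's absolute URL and last modification date (build_sitemap and the sample data are hypothetical). It writes the urlset/url/loc/lastmod elements of the Sitemaps protocol and skips URLs that were already added; ElementTree also escapes characters such as & in URLs, as the protocol requires.

```python
import xml.etree.ElementTree as ET
from datetime import date
from typing import Iterable, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Iterable[Tuple[str, date]]) -> str:
    """Return sitemap.xml text for (absolute URL, last modification date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    seen = set()
    for loc, lastmod in pages:
        if loc in seen:  # list every URL only once
            continue
        seen.add(loc)
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, < and >
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()  # YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical sample data; in practice the CMS would supply real pages and dates.
pages = [
    ("http://www.site.ru/", date(2011, 6, 1)),
    ("http://www.site.ru/holodilniki/zanussi", date(2011, 5, 20)),
    ("http://www.site.ru/", date(2011, 6, 1)),  # repeated entry, skipped
]
print(build_sitemap(pages))
```

Regenerating the file whenever a page is saved keeps the lastmod values honest, which is the point of the second tip above.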

HTML code

HTML is the hypertext markup language for web pages. The site's code is "written" in it, and it is this code that search robots ultimately read. Therefore, the quality of the code and what it conveys are very important aspects of on-site optimization.

HTML code can also be considered part of the technological foundation, but correct HTML coding is such a large and serious topic that it deserves a separate discussion. It should be noted, though, that many optimizers do not attach much importance to code. This is partly because search engines themselves try to tune their robots to handle any code, even the most inconsistent and chaotic, and especially the code of popular standard CMSs (Bitrix, Joomla, Drupal and others). However, practice shows that sites with well-crafted HTML code perform best in search results over the long term.

In general, the following requirements can be formulated for the HTML code:

- Compliance with HTML standards

- Compactness - to ensure minimum "weight" and increase the speed of indexing and loading

Compact code comes from a technologically sound design (without unnecessary “bells and whistles”) and competent HTML markup. Unfortunately, good and correct markup is still rare: only well-known, large studios consistently produce work of decent quality.

In this chapter, we give some general recommendations against which you can evaluate the code of your own site.

Layout method

The site's HTML code should be laid out with DIV blocks.

Table-based layout is not allowed. Tables may be used only for actual tabular data or in special cases.

The general structure of building a content page in HTML

Below is a general scheme of the HTML code for a typical site page. It shows the order in which the blocks should appear in the code when the page is laid out with DIVs. Each block is a specific “piece” of HTML code:

- Header

- Menu (marked up as an unordered list: UL, LI)

- Main content block (with an H1 heading, text in P tags and UL/LI lists)

- Secondary content blocks (with H2-H6 headings, text in P tags and UL/LI lists)

- Additional menus and supporting information blocks (advertisements, announcements of other sections, blocks of links to other resources, etc.)

- Site footer (copyright, counters)
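The block order above can be illustrated with a small Python function that assembles the BODY of a page. The id values (header, menu, content, secondary, aside, footer) and the function name render_body are hypothetical; the point is only the order of the blocks and the UL/LI menu.

```python
from html import escape
from typing import List, Tuple


def render_body(menu: List[Tuple[str, str]], h1: str, text: str, footer: str) -> str:
    """Emit the page blocks in the recommended order, from header to footer."""
    items = "".join(
        f'<li><a href="{escape(href)}">{escape(label)}</a></li>' for href, label in menu
    )
    blocks = [
        '<div id="header"></div>',                    # 1. header
        f'<div id="menu"><ul>{items}</ul></div>',     # 2. menu as UL/LI with links
        f'<div id="content"><h1>{escape(h1)}</h1>'    # 3. main content: H1 + P
        f"<p>{escape(text)}</p></div>",
        '<div id="secondary"></div>',                 # 4. secondary blocks (H2-H6)
        '<div id="aside"></div>',                     # 5. extra menus, ads, announcements
        f'<div id="footer">{escape(footer)}</div>',   # 6. footer: copyright, counters
    ]
    return "".join(blocks)
```

Styling and visual placement can then be handled entirely in CSS, so the order in the code can follow this scheme regardless of how the page looks.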

Compact code. CSS. JavaScript. Counters

When writing HTML code, make it as compact as possible and bring the actual text content as close as possible to the beginning of the code. With non-compact code, the search robot "eats" a large amount of unnecessary code before it reaches the really significant text. As a result:

- the quota allocated in the search engine's database for storing this page is used up;

- the share of useful keywords in the total indexed text of the page decreases.

To keep the code compact:

- use DIV-based layout;

- avoid unnecessary empty lines and extra spaces in the HTML code;

- minimize the use of styles and classes directly in the code; describe all basic and standard elements in separate CSS files;

- when referring to styles and classes, use identifier names that are as short as possible yet still understandable.

Move the following into separate files and include them as needed:

- all layout styles, as CSS files;

- all JavaScript scripts, as JS files.
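One rough way to see how much of a page is "useful" text is to compare the number of visible text characters with the total size of the HTML. This ratio is our own illustration, not a value search engines are known to compute; the sketch relies on Python's standard html.parser and ignores the contents of SCRIPT and STYLE elements. VisibleTextCounter and text_ratio are hypothetical names.

```python
from html.parser import HTMLParser


class VisibleTextCounter(HTMLParser):
    """Counts characters of visible text, ignoring SCRIPT and STYLE contents."""

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0
        self.text_chars = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            # Collapse runs of whitespace so indentation is not counted as text.
            self.text_chars += len(" ".join(data.split()))


def text_ratio(html_code: str) -> float:
    """Share of the page's characters that are visible text (0.0 to 1.0)."""
    if not html_code:
        return 0.0
    counter = VisibleTextCounter()
    counter.feed(html_code)
    counter.close()
    return counter.text_chars / len(html_code)
```

Moving inline styles and scripts into external CSS and JS files, and dropping needless wrapper DIVs, raises this ratio without touching the text itself.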

Text layout in HTML code

All text on the site can be divided into main content, menu blocks and auxiliary text (for example, advertising links, banners, a footer block with the company's copyright, and so on). The markup rules for main content and auxiliary text are the same; menu blocks have their own specifics.

Menu block design

All menu blocks must be marked up with list tags: UL, LI. The link is placed inside the LI tag. Visual differences between menus should be achieved by assigning different styles to the DIV tags that wrap the menu items.

Schematically:

<div><ul><li><a></a></li></ul></div>

Texts of the main content and auxiliary blocks

When marking up text, do not place it directly in the following tags:

- DIV (for example, <div>Here is the text</div>);

- FONT: this tag should be removed from the HTML code altogether.

Instead, use these tags:

- H1-H6 for headings;

- P, the main tag for almost all text;

- UL, OL, LI for lists;

- TH, TR, TD for table cells;

- PRE, an additional tag for preformatted text, only in exceptional cases.
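The two prohibitions above are easy to check automatically. Here is a minimal sketch using Python's standard html.parser; MarkupChecker and check_markup are hypothetical names, and the tag-stack handling is deliberately simple (it tolerates unclosed tags but is not a full HTML validator).

```python
from html.parser import HTMLParser
from typing import List

# Elements that never have a closing tag, so they are not pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class MarkupChecker(HTMLParser):
    """Flags FONT tags and text placed directly inside a DIV."""

    def __init__(self) -> None:
        super().__init__()
        self.stack: List[str] = []
        self.problems: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "font":
            self.problems.append(f"line {self.getpos()[0]}: FONT tag")
        if tag not in VOID_TAGS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        # Pop up to and including the matching open tag (tolerates unclosed tags).
        if tag in self.stack:
            while self.stack.pop() != tag:
                pass

    def handle_data(self, data):
        if data.strip() and self.stack and self.stack[-1] == "div":
            self.problems.append(
                f"line {self.getpos()[0]}: text directly in DIV: {data.strip()!r}")


def check_markup(html_code: str) -> List[str]:
    checker = MarkupChecker()
    checker.feed(html_code)
    checker.close()
    return checker.problems


print(check_markup('<div>Here is the text</div>\n<font color="red">Old</font>'))
```

Text wrapped in P (or another content tag) inside the DIV passes the check.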

Page meta descriptions

Each page of the site can be described with special tags (TITLE and the DESCRIPTION and KEYWORDS meta tags) that help identify it more accurately among web documents.

The TITLE tag is the most significant of all the tags applied to a site's text. The text entered in TITLE is visible in search results and as the window title in some browsers. Write a concise TITLE that describes the content of the page as accurately as possible. The TITLE template for standard site pages is: [Name of the current section]. [Name of the parent section]. [Unique suffix].

The unique suffix is text that lets the search engine distinguish your document from all other documents on the Internet. It usually consists of the company name plus 2-3 words describing its main activity.
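The TITLE template can be generated by the CMS from the section hierarchy. A minimal sketch; build_title and the SUFFIX value are hypothetical (the suffix reuses the shop name from the DESCRIPTION example in this section).

```python
from typing import Optional

# Hypothetical unique suffix: company name plus a few words about its main activity.
SUFFIX = "BestNasveRybalka.Ru fishing online store"


def build_title(section: str, parent: Optional[str], suffix: str = SUFFIX) -> str:
    """[Current section]. [Parent section]. [Unique suffix]; the parent is omitted if absent."""
    parts = [section, parent, suffix]
    return ". ".join(p.strip() for p in parts if p)


print(build_title("Wobblers", "Bait"))
```

For a top-level section the parent is simply left out, so the title still ends with the unique suffix.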

The DESCRIPTION and KEYWORDS tags have long since lost the influence they had in the late 1990s and early 2000s, but it is still recommended to use them and fill them in carefully.

In the KEYWORDS tag, list keywords that accurately describe the content of the page. Choose a unique set for each page and do not add anything superfluous; preferably limit yourself to 15-20 words. Do not "drag" a phrase that seems most important for your promotion from page to page; instead, pick the single page that suits that phrase best.

In the DESCRIPTION tag, write 3-5 coherent sentences about the content of the page, something like: "Catalog of fishing goods. List of wobblers in the Bait category. Fishing online store BestNasveRybalka.Ru". It is also important to write a unique description for each page that matches its content exactly. Keep in mind that the content of this tag is sometimes displayed as the page description in Google and Yandex search results.

Writing meta descriptions for sites with hundreds of pages is extremely tedious, and it is also desirable to unify these descriptions somehow. Here a CMS that lets you define templates for such meta descriptions comes to the rescue. Unfortunately, today not every CMS even lets you edit these meta tags easily, and only a few support templates. Therefore, if you hire third-party developers to build the site, it is advisable to discuss with them from the start exactly how the meta tags will be generated for your standard pages.
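A sketch of such a template together with a check for two of the rules above: unique descriptions, and no more than about 20 keywords per page. DESCRIPTION_TEMPLATE, build_description and check_meta are hypothetical names; the template simply mirrors the fishing-store example.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical template; a CMS would fill the fields from each page's own data.
DESCRIPTION_TEMPLATE = "Catalog of {catalog}. List of {items} in the {category} category. {shop}."


def build_description(catalog: str, items: str, category: str, shop: str) -> str:
    return DESCRIPTION_TEMPLATE.format(catalog=catalog, items=items, category=category, shop=shop)


def check_meta(pages: Dict[str, Dict[str, str]], max_keywords: int = 20) -> List[str]:
    """Report repeated descriptions and over-long keyword lists across pages."""
    problems = []
    counts = Counter(meta["description"] for meta in pages.values())
    for url, meta in pages.items():
        if counts[meta["description"]] > 1:
            problems.append(f"{url}: description is not unique")
        # Count words, treating commas as separators.
        words = meta["keywords"].replace(",", " ").split()
        if len(words) > max_keywords:
            problems.append(f"{url}: {len(words)} keywords (limit {max_keywords})")
    return problems
```

A template alone tends to produce near-identical descriptions, so a uniqueness check like this is a useful companion to it.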

We follow the above tips when developing websites with the web-canape technology, and both we and our customers are pleased with the results. We wish you the same!

Special thanks to our friend and partner, the author of these recommendations, Andrey Zaitsev (www.promo-icom.ru).

In the next and final article, we will cover additional promotion tools and useful services for webmasters. In the meantime, we look forward to comments and additions from experts.

P.S. The first part of the tips

Source: https://habr.com/ru/post/120505/
