Synchronization Notes: Deadlock

In these hard times, when processor power has stopped growing upward (clock frequency) and started growing outward (number of threads), synchronization has become a very serious problem. Having run into it in practice, I learned first-hand that the task is much harder than it seems at first glance, and how many hidden pitfalls it contains. While working on it, I came up with several interesting patterns that I want to share with the Habr community.

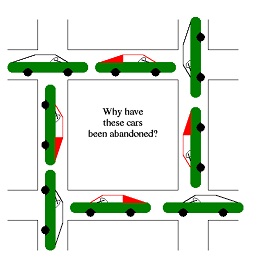

One of the most unpleasant problems in multithreaded programming is deadlock. Most often it happens when one thread has already locked resource A and then tries to lock resource B, while another thread has locked resource B and then tries to lock resource A. The saddest thing about this kind of bug is that it occurs very rarely, so catching it during testing is almost impossible. That means you have to rule out even the possibility of it occurring. How? Quite simply, with a pattern I have called SynchronizationManager. The pattern is intended for cases where access to a fairly large number of diverse resources has to be synchronized.
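The scenario above can be reproduced safely with a small sketch. It is only an illustration and not part of the pattern: the function name `demonstrateCircularWait` is made up, and `try_lock` is used instead of a blocking `lock` so that the program reports the circular wait instead of hanging.

```cpp
#include <atomic>
#include <functional>
#include <mutex>
#include <thread>

// Returns true if each thread held one resource while the other resource
// it wanted was held by the other thread (the classic deadlock condition).
bool demonstrateCircularWait() {
    std::mutex a, b;
    std::atomic<int> holding(0);  // threads that have taken their first lock
    std::atomic<int> done(0);     // threads that have tried their second lock
    bool gotSecond1 = true, gotSecond2 = true;

    auto worker = [&](std::mutex& first, std::mutex& second, bool& gotSecond) {
        std::lock_guard<std::mutex> hold(first);
        ++holding;
        while (holding.load() < 2) std::this_thread::yield();  // wait for the other thread
        gotSecond = second.try_lock();  // a blocking lock() here would never return
        if (gotSecond) second.unlock();
        ++done;
        while (done.load() < 2) std::this_thread::yield();  // keep 'first' until both have tried
    };

    std::thread t1(worker, std::ref(a), std::ref(b), std::ref(gotSecond1));  // A, then B
    std::thread t2(worker, std::ref(b), std::ref(a), std::ref(gotSecond2));  // B, then A
    t1.join();
    t2.join();
    return !gotSecond1 && !gotSecond2;
}
```

In real code the two threads rarely reach the critical point at the same moment, which is exactly why the bug is so hard to catch in testing; the counters here only force that unlucky timing.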

To begin with, every resource that needs to be shared must inherit from a special base class.

    class SynchPrim
    {
        enum { NOT_LOCKED = 0 };
    public:
        SynchPrim() : lockId_( NOT_LOCKED ) {}
        // A copy is a new, independent resource, so it starts out unlocked.
        SynchPrim( const SynchPrim& ) : lockId_( NOT_LOCKED ) {}
        // Assignment must not change the lock state of the target.
        SynchPrim& operator=( const SynchPrim& ) { return *this; }
    private:
        template< typename T > friend class SynchronizationManager;
        int getLockId() const { return lockId_; }
        int lockId_;  // id of the thread holding the resource, or NOT_LOCKED
    };

So far, everything is simple. But what if we cannot, or do not want to, add an extra base class to a type? No problem: just write a wrapper for it:

    template< typename T >
    class SynchPrimWrapper : public T, public SynchPrim {};

And use it when declaring the object:

    SynchPrimWrapper< Resource1 > resource1;

The main idea of the pattern is that all shared resources that may be needed at a given moment must be locked at the same time and released at the same time. A thread then never waits for one resource while holding another, so a deadlock cannot arise. If a thread tries to lock new resources without first releasing the ones it already holds, an assert fires (in debug builds).
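The same "take everything at once" idea also exists in the C++11 standard library as `std::lock`, which acquires several mutexes with a deadlock-avoidance algorithm. The sketch below uses that standard facility rather than SynchronizationManager; the `Account` type and both function names are invented for the illustration.

```cpp
#include <mutex>
#include <thread>

// Hypothetical shared resource for the illustration.
struct Account {
    explicit Account(int initial) : balance(initial) {}
    std::mutex guard;
    int balance;
};

// Locks both accounts in one call, so the order of the arguments
// cannot lead to the A-then-B versus B-then-A deadlock.
void transfer(Account& from, Account& to, int amount) {
    std::lock(from.guard, to.guard);
    // adopt_lock: the mutexes are already locked; the guards only release them.
    std::lock_guard<std::mutex> holdFrom(from.guard, std::adopt_lock);
    std::lock_guard<std::mutex> holdTo(to.guard, std::adopt_lock);
    from.balance -= amount;
    to.balance += amount;
}

// Two threads move money in opposite directions; returns the combined balance.
int runOpposingTransfers() {
    Account a(1000), b(1000);
    std::thread t1([&] { for (int i = 0; i < 10000; ++i) transfer(a, b, 1); });
    std::thread t2([&] { for (int i = 0; i < 10000; ++i) transfer(b, a, 1); });
    t1.join();
    t2.join();
    return a.balance + b.balance;
}
```

Unlike the pattern in this article, `std::lock` works on mutexes that each resource owns, and it has no debug check that a thread released everything before locking again.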

First, we create a list of the resources we are going to work with. This can be either a C array or a std::vector.

    SynchPrim* lock_list[] = { &resource1, &resource2, &resource3 };

After that, we lock them all in a single call:

    SynchronizationManager::getInstance().lock( lock_list+0, lock_list+3 );

By the way, the lock function also supports non-blocking locking. If you do not want the thread to wait until the resources become free, just pass false as the last parameter; lock then returns false if the resources could not be locked.

Once the resources are no longer needed, we release them by calling:

    SynchronizationManager::getInstance().unlock( lock_list+0, lock_list+3 );

And here is the class itself:

    #include <set>

    // TSynchronizator is a policy class that must provide lock(), unlock(),
    // wait(), signal(), getThreadId() and a nested ThreadType.
    template< class TSynchronizator >
    class SynchronizationManager : public Singelton< SynchronizationManager< TSynchronizator > >
    {
    public:
        /* needWait: true  - wait until all the resources are free,
                     false - return immediately if any of them is busy.
           Returns true if the resources were locked. */
        template< typename Iterator >
        bool lock( const Iterator& begin, const Iterator& end, bool needWait = true );

        /* Releases the resources in [begin, end). */
        template< typename Iterator >
        void unlock( const Iterator& begin, const Iterator& end );

    private:
        TSynchronizator synh_;
    #ifdef _DEBUG
        typedef typename TSynchronizator::ThreadType ThreadId;
        typedef std::set< ThreadId > LockedThreads;
        LockedThreads lthread_;  // threads that currently hold resources
    #endif
    };

The synchronization strategy can be implemented with any tools and on virtually any platform. As a rule, it is a mutex plus a condition variable.
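As an illustration of such a strategy, here is a minimal sketch built on the C++11 standard library. The class name `StdSynchronizator` and the way thread ids are generated are assumptions of this sketch, not something the article prescribes; the member names simply follow the calls the manager makes (`lock`, `unlock`, `wait`, `signal`, `getThreadId`, `ThreadType`).

```cpp
#include <atomic>
#include <condition_variable>
#include <mutex>

// Hypothetical policy class for SynchronizationManager.
class StdSynchronizator {
public:
    // Must fit into SynchPrim::lockId_, which is an int.
    typedef int ThreadType;

    void lock()   { mutex_.lock(); }
    void unlock() { mutex_.unlock(); }

    // Must be called with the mutex held. Atomically releases it,
    // sleeps until signal() is called, then re-acquires it.
    void wait() {
        std::unique_lock<std::mutex> held(mutex_, std::adopt_lock);
        cond_.wait(held);
        held.release();  // keep the mutex locked; the caller will unlock() it
    }

    // Wakes every waiter: each one may be waiting for a different set of
    // resources, so each has to re-check its own condition.
    void signal() { cond_.notify_all(); }

    // Small per-thread integer id. Ids start at 1 so that they never
    // collide with NOT_LOCKED (0).
    ThreadType getThreadId() const {
        static std::atomic<int> next(1);
        thread_local const int id = next.fetch_add(1);
        return id;
    }

private:
    std::mutex mutex_;
    std::condition_variable cond_;
};
```

Since the condition variable may also wake a thread spuriously, it matters that the manager calls `wait()` inside a loop that re-checks whether the resources are free.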

Implementation:

    #include <algorithm>
    #include <cassert>
    #include <functional>

    template< class TSynchronizator >
    template< typename Iterator >
    bool SynchronizationManager< TSynchronizator >::lock( const Iterator& begin, const Iterator& end, bool needWait )
    {
        synh_.lock();
        // ThreadId is only declared in debug builds, so the type is spelled out here.
        const typename TSynchronizator::ThreadType threadId = synh_.getThreadId();
        bool isFree = false;
    #ifdef _DEBUG
        // A thread must release everything it holds before locking again.
        assert( lthread_.find( threadId ) == lthread_.end() );
    #endif
        // The resources are free when none of them has a non-zero lock id.
        // Note: std::mem_fun was removed in C++17; newer code needs a lambda here.
        while( !( isFree = ( std::find_if( begin, end, std::mem_fun( &SynchPrim::getLockId ) ) == end ) ) && needWait )
            synh_.wait();
        if( isFree )
            for( Iterator it = begin; it != end; ++it )
                (*it)->lockId_ = threadId;
    #ifdef _DEBUG
        if( isFree )
            lthread_.insert( threadId );
    #endif
        synh_.unlock();
        return isFree;
    }

    template< class TSynchronizator >
    template< typename Iterator >
    void SynchronizationManager< TSynchronizator >::unlock( const Iterator& begin, const Iterator& end )
    {
        synh_.lock();
    #ifdef _DEBUG
        ThreadId threadId = synh_.getThreadId();
        assert( lthread_.find( threadId ) != lthread_.end() );
        lthread_.erase( threadId );
    #endif
        for( Iterator it = begin; it != end; ++it )
            (*it)->lockId_ = SynchPrim::NOT_LOCKED;
        synh_.signal();  // wake up threads waiting in lock()
        synh_.unlock();
    }

Naturally, this approach is not without drawbacks. It is not always possible to know in advance which resources we will need. In that case you have to release the resources already held and then lock them again together with the new one. That is overhead; whether it is worth paying to get rid of a potential deadlock is for you to decide. In addition, in its current form the pattern does not control access to resources that have not been locked, and it does not guarantee that resources are unlocked after use. I will describe how that can be done in the next article.
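One common way to guarantee unlocking is a scope guard that locks in its constructor and unlocks in its destructor. The sketch below is only one possible approach and not necessarily the one the follow-up article takes. `ScopedMultiLock` is a made-up name, and `CountingManager` is a stand-in that only mimics the `lock`/`unlock` interface so the example can run on its own.

```cpp
#include <iterator>

// Hypothetical RAII helper: early returns and exceptions cannot leak a lock.
template <class Manager, class Iterator>
class ScopedMultiLock {
public:
    ScopedMultiLock(Manager& manager, Iterator begin, Iterator end)
        : manager_(manager), begin_(begin), end_(end) {
        manager_.lock(begin_, end_);  // blocking form: waits until all are free
    }
    ~ScopedMultiLock() { manager_.unlock(begin_, end_); }

    ScopedMultiLock(const ScopedMultiLock&) = delete;
    ScopedMultiLock& operator=(const ScopedMultiLock&) = delete;

private:
    Manager& manager_;
    Iterator begin_, end_;
};

// Stand-in for SynchronizationManager: just counts the resources held.
struct CountingManager {
    int held = 0;
    template <class It>
    bool lock(const It& begin, const It& end, bool /*needWait*/ = true) {
        held += static_cast<int>(std::distance(begin, end));
        return true;
    }
    template <class It>
    void unlock(const It& begin, const It& end) {
        held -= static_cast<int>(std::distance(begin, end));
    }
};

// Throws while the guard is alive; returns how many resources are still held.
int heldAfterException(CountingManager& manager) {
    int resources[3] = {0, 0, 0};
    try {
        ScopedMultiLock<CountingManager, int*> guard(manager, resources, resources + 3);
        throw 1;  // simulated failure inside the critical section
    } catch (int) {
        // the guard's destructor has already released everything
    }
    return manager.held;
}
```

With the real manager, the guard would be constructed as `ScopedMultiLock<Manager, SynchPrim**> guard(manager, lock_list+0, lock_list+3);`, assuming a `lock_list` array like the one shown earlier.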

Source: https://habr.com/ru/post/119438/
