Immersion in HTML5 Test

(This is the second article in a series reviewing various browser tests. Earlier in the series: Immersion in ACID3.)

Judging by most references to HTML5Test (hereinafter h5t), most of its users care more about the final result (the total score), a number that can be cited and compared, than about what actually stands behind it.


Colloquially this kind of thing is called a "puzomerka" (Russian slang for a bragging meter), or, as Lenta.ru aptly put it about a year ago (see below), a contest of "whose HTML5 is longer".

Let's start with Lenta.ru, which wrote about the test a year ago, when it first appeared:

I also have in front of me issue #02 (125)/2011 of Computer Bild, which runs a "large-scale" browser comparison (a "mega-test on 18 pages"):

The "300 of 384 pages" are actually HTML5 Test points; what the remaining 84 "pages" stand for is not explained. :)

And here is Computerra writing about Mozilla Fennec:

Sadly, Habr is not much more analytical about such tests either. Here is just one recent mention, from a post about Opera's new parsing engine:

The catch is not only that Opera has long supported SVG, but also that Opera has no native MathML support at all (only partial support through CSS, following the MathML for CSS profile).

In practice, h5t is treated as a kind of black box that "checks how well a given browser supports the HTML5 standard", one that is widely cited and fully trusted:

Those a little more immersed in web development than a regular user or most journalists understand, of course, that the test does not just output a number. It is split into blocks, and the test page shows what each point is awarded for, that is, what exactly works and what does not according to the test. The obvious analogy is counting lit light bulbs:

And this is where it gets interesting. What does each bulb mean? Why are some bigger and others smaller? Why are not all of them lit? And, finally, how well do the bulbs serve the stated purpose of checking HTML5 support?

A remark: the test is constantly evolving. If you look at year-old references to it, you will find that both the maximum score and the set of checks were different. The current maximum of 400 points may also change in the future.

So let's go through everything in order.

A few more interesting details:

Unlike Acid3, h5t is not associated with any organization that develops or promotes web standards. Here is what the test author writes:

As far as I know, no browser vendor cites h5t in official communications as a measure of HTML5 support (well, except for the well-known trolling post by Mozilla evangelist Paul Rouget about IE9 vs. Fx4.0).

The test consists of:

The testing engine consists of several key functions (objects):

Each test suite is a separate function that holds the suite's name, the number of tests and the tests themselves.

The internals of individual tests vary, but the overall structure of a suite is clear from this example:

Each test is a separate method, t1 ... tN. When the suite is initialized, the browser calls all of these methods in turn.

Each method writes into the shared results object, which the testing engine passes in. It records whether the test passed, how many points it is worth, and additional metadata, from links to the specification to notes on partial or full passing.

More complex tests (for example, for web forms) use slightly more complex structures to group the results, but the essence remains the same.

Now that we understand in general terms how the test works, it's time to look at the inner workings of the tests themselves.

Let's start by looking at how the tested blocks are distributed across the test and converted into points, and how all of this relates to the HTML5 standard.

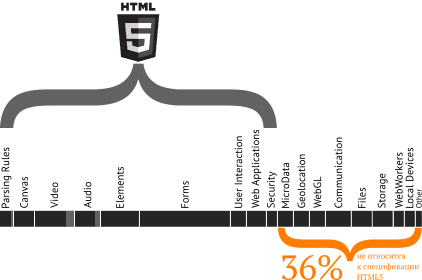

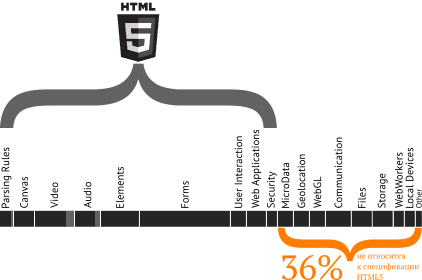

The first thing that catches the eye is that a significant part of the "checked" features is not, as of today, part of the HTML5 specification:

Some of them were removed from the HTML5 specification and split into separate standards (for example, HTML Microdata); others were never part of it (for example, the File API).

Some things have no direct relation to web standards at all: codecs, for whose support bonus points are awarded.

Finally, WebGL is a third-party specification that is not directly related to HTML5 (it uses Canvas to display its rendering output).

The second important point that is definitely worth paying attention to is the distribution of points between different specifications and within the HTML5 specification.

A huge number of points (90 out of 400) goes to the new form features. That is almost a quarter of the total, or a third of the points allocated to HTML5 itself. Web forms are, of course, an important part of the new specification, but today they can hardly be called the most in-demand part (more on this below).

Video gets roughly as many points as all of HTML5's new semantic elements combined, and Canvas gets significantly fewer points than Audio.

Some tests are worth 1 point, others up to 10. In effect, how points are distributed among tests is left entirely to the author's will and imagination.

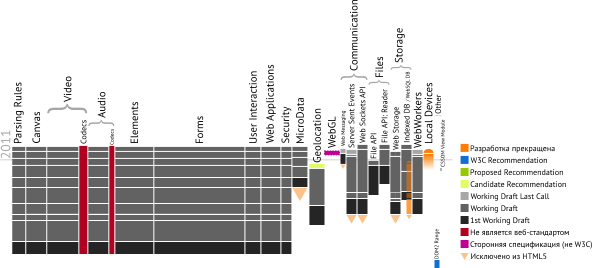

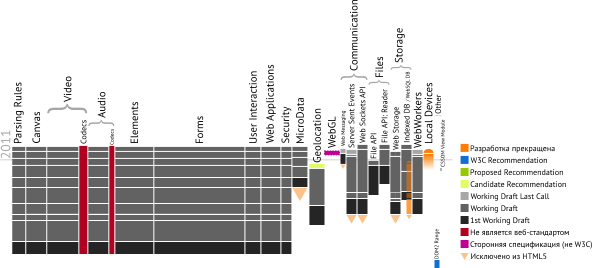

The third aspect to keep in mind is which specifications exactly are checked and what state they are currently in.

If you dig in, the first thing that surfaces is that most of these specifications are still drafts:

The second, no less important, point is how early and how volatile many of these specifications are. The picture above shows how often each specification was updated over the past three years. In some places, as mentioned above, things have changed so much that the test no longer reflects the actual state of web standards.

Here is what the test author writes:

Let's take a closer look at the content of each test.

The largest block of the whole test is of central interest. It consists of several parts, each checking a particular group of features.

This block checks some of the rules for parsing documents. In particular, it checks how various exotic and malformed markup is parsed according to the HTML5 specification.

1. Support for <!DOCTYPE html> 1 point

Verifies that "with this doctype, the browser works in Strict mode". Internally it checks that document.compatMode == "CSS1Compat". This property reports which mode the browser is currently in (Quirks vs. Strict).

In reality, a browser switches to Strict mode with practically any proper doctype, so this check is not directly related to HTML5. <!DOCTYPE html> enables Strict mode even in IE6, which certainly does not support HTML5.
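To see why this check says so little about HTML5, here is a minimal sketch of it. It assumes, as described above, that the test reduces to comparing document.compatMode with "CSS1Compat". The document objects are hypothetical stand-ins so the sketch runs outside a browser. An IE6-like stub in Strict mode earns the point just as a modern browser does.

```javascript
// Minimal sketch of the doctype check: the whole test reduces to one property.
// In a browser you would pass the real `document`; plain objects stand in for it here.
function passesDoctypeCheck(doc) {
  // "CSS1Compat" = Standards (Strict) mode, "BackCompat" = Quirks mode.
  return doc.compatMode === "CSS1Compat";
}

// Hypothetical stand-ins for documents in different browsers.
const html5BrowserDoc = { compatMode: "CSS1Compat" };
const ie6WithHtml5Doctype = { compatMode: "CSS1Compat" }; // IE6 also enters Strict mode
const pageWithoutDoctype = { compatMode: "BackCompat" };

console.log(passesDoctypeCheck(html5BrowserDoc));     // true
console.log(passesDoctypeCheck(ie6WithHtml5Doctype)); // true, although IE6 knows nothing of HTML5
console.log(passesDoctypeCheck(pageWithoutDoctype));  // false
```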

2. HTML5 Tokenizer 5 points

It checks various exotic ways of writing element names, attributes and contents: 19 checks in total of whether the contents of an HTML element are parsed correctly:

Although you are unlikely to meet most of these cases in real life, it is worth noting that the HTML5 specification does put a strong focus on document parsing and on handling such cases correctly.

(In fact, these tests are taken almost verbatim from Mozilla's HTML parser tests: http://hsivonen.iki.fi/test/moz/detect-html5-parser.html)

3. HTML5 Tree building 5 points

Continuing the theme of document parsing, these checks dynamically build various elements from test cases taken from the same source:

Note: this test and the previous one are the only ones in all of h5t that check the implementation details of a feature. They can claim to test the interoperability of document parsing.

(To repeat: unlike the rest of the tests, the parsing tests are borrowed from Henri Sivonen, known for his heavy involvement in developing the HTML5 parser for Firefox 4. In other words, these tests were written while Fx4 was being developed, so it is no surprise that Fx4 passes them perfectly. The key property of such a selection is that, while correct, it cannot claim anything close to full coverage of the functionality being checked. It is selective testing of the variations that the code's author found interesting.)

Whether the browser actually supports SVG or MathML is not really checked.

Unexpectedly, Opera fails this test, even though it historically has one of the best SVG implementations. Real MathML support is available only in Firefox. Yet Chrome passes the test without supporting MathML itself (you can verify this with Mozilla's test examples).

Note: formally, the contents of the svg and math elements, and the rules for parsing and rendering them, are defined by separate specifications. The HTML5 specification only says that a document must support embedding SVG content via the <svg> tag and MathML content via the <math> tag.
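A parser-level check of this kind can be sketched as follows. The sketch assumes that such a check inspects only the namespace the HTML5 parser assigns to the parsed element; it is not h5t's verbatim code. The stub parser is hypothetical and stands in for setting innerHTML on a <div> in a real browser. It shows how a browser without any MathML renderer can still pass.

```javascript
// Namespaces that an HTML5 parser assigns to inline <svg> and <math> content.
const SVG_NS = "http://www.w3.org/2000/svg";
const MATHML_NS = "http://www.w3.org/1998/Math/MathML";
const HTML_NS = "http://www.w3.org/1999/xhtml";

// Hypothetical parser-level check: parse a fragment and inspect only the
// namespace of its first element. In a browser, parseFirstChild could be
//   (markup) => { const d = document.createElement("div"); d.innerHTML = markup; return d.firstChild; }
function parsedIntoNamespace(parseFirstChild, markup, expectedNs) {
  const node = parseFirstChild(markup);
  return node != null && node.namespaceURI === expectedNs;
}

// Stub for a browser with an HTML5 parser but no MathML renderer:
// the parser still places <math> in the MathML namespace.
function stubParseFirstChild(markup) {
  if (markup.startsWith("<svg")) return { namespaceURI: SVG_NS };
  if (markup.startsWith("<math")) return { namespaceURI: MATHML_NS };
  return { namespaceURI: HTML_NS };
}

console.log(parsedIntoNamespace(stubParseFirstChild, "<math><mi>x</mi></math>", MATHML_NS)); // true
console.log(parsedIntoNamespace(stubParseFirstChild, "<p>x</p>", SVG_NS)); // false
```

Nothing in such a check draws anything, so a pass says only that the parser knows where the element belongs.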

This block checks Canvas support. As the description below shows, it only verifies that the browser supports the canvas tag and returns a proper context for JavaScript drawing.

Unfortunately, this block does not meaningfully check the level of canvas support or the compatibility of implementations.
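The gap between "a context exists" and "drawing works" can be illustrated with a minimal sketch. The first function is a presence-level check in the spirit of the block described above; its exact conditions are an assumption. The second draws one red pixel and reads it back with getImageData. The "hollow" document is hypothetical: every method exists but nothing is drawn.

```javascript
// Presence-level check: passes as soon as getContext("2d") returns an object with drawing methods.
function passesPresenceCheck(doc) {
  const canvas = doc.createElement("canvas");
  if (typeof canvas.getContext !== "function") return false;
  const ctx = canvas.getContext("2d");
  return ctx != null && typeof ctx.fillRect === "function";
}

// A slightly deeper check: draw one red pixel and read it back.
function drawsRedPixel(doc) {
  const canvas = doc.createElement("canvas");
  canvas.width = 1;
  canvas.height = 1;
  const ctx = canvas.getContext("2d");
  ctx.fillStyle = "#ff0000";
  ctx.fillRect(0, 0, 1, 1);
  const [r, g, b, a] = ctx.getImageData(0, 0, 1, 1).data; // RGBA bytes
  return r === 255 && g === 0 && b === 0 && a === 255;
}

// Hypothetical "hollow" implementation: all methods exist, nothing is drawn.
const hollowDoc = {
  createElement: () => ({
    getContext: () => ({
      fillStyle: "",
      fillRect() {},
      getImageData: (x, y, w, h) => ({ data: new Uint8ClampedArray(w * h * 4) }),
    }),
  }),
};

console.log(passesPresenceCheck(hollowDoc)); // true
console.log(drawsRedPixel(hollowDoc));       // false
```

In a real browser both functions can be called with `document`. Even the pixel check covers only a tiny fraction of the Canvas API, but it does test behaviour rather than mere existence.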

This block checks browser support for the new <video> tag. It also checks support for various codecs, which has nothing to do with the HTML5 specification but is of some interest.

This block does not check in any detail how well the video element, or the track element inside it, is supported.

Like the video block, this block checks support for the new <audio> tag, plus support for various audio codecs.

This block does not check the level of support for the audio element either.

Note: checking codecs in such a test is questionable for two reasons. The HTML5 specification does not require support for any particular codecs, and codec support is not always a (permanent) property of the browser. For example, IE9 with Google's WebM codecs installed gets 3 extra bonus points.
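Codec checks of this kind are usually built on the media element's canPlayType() method, which answers "", "maybe" or "probably" for a MIME type with codecs. The sketch below is hedged: the codec table is illustrative and not h5t's exact list, and it treats any non-empty answer as support (an assumption). The two stubs are hypothetical. They show one and the same browser reporting different codecs before and after a codec component is installed.

```javascript
// Illustrative codec table (not h5t's exact MIME strings).
const VIDEO_CODECS = {
  "Ogg Theora": 'video/ogg; codecs="theora"',
  "H.264": 'video/mp4; codecs="avc1.42E01E"',
  "WebM": 'video/webm; codecs="vp8, vorbis"',
};

// Names of codecs for which canPlayType() gives a non-empty answer ("maybe" or "probably").
function supportedCodecs(media, codecs) {
  return Object.entries(codecs)
    .filter(([, mimeType]) => media.canPlayType(mimeType) !== "")
    .map(([name]) => name);
}

// Hypothetical stubs: the same browser before and after installing a WebM component.
const ie9 = { canPlayType: (t) => (t.startsWith("video/mp4") ? "probably" : "") };
const ie9WithWebM = {
  canPlayType: (t) =>
    t.startsWith("video/mp4") ? "probably" : t.startsWith("video/webm") ? "maybe" : "",
};

console.log(supportedCodecs(ie9, VIDEO_CODECS));        // [ 'H.264' ]
console.log(supportedCodecs(ie9WithWebM, VIDEO_CODECS)); // [ 'H.264', 'WebM' ]
```

In a browser, `document.createElement("video")` would take the place of the stubs.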

This block checks support for various new elements and attributes.

Note: the last test has an interesting twist. Points for the mark element and for ruby/rt/rp are given for so-called "full support" of the element, which in the author's view means correct default style values. In fact:

Note: the internal check of element creation for details and summary is not quite correct. For details, the test should check that the created element is an HTMLDetailsElement rather than a plain HTMLElement (and currently all browsers would fail such a check). For summary, no special DOM interface is defined, so the test passes even if the browser creates an HTMLElement without knowing what kind of element it is. Moreover, a proper check should also take into account that summary only makes sense inside a details element.
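The stricter check suggested in the note can be sketched like this. The element created for a tag must be an instance of its dedicated interface, looked up on the global object. The Fake* classes and the two documents are hypothetical stand-ins so the sketch runs outside a browser; in a browser you would pass `window` and `document`.

```javascript
// Stricter check: the element created for a tag must be an instance of its
// dedicated DOM interface, not just a generic HTMLElement.
function hasDedicatedInterface(win, doc, tagName, interfaceName) {
  const Iface = win[interfaceName];
  if (typeof Iface !== "function") return false; // interface not exposed at all
  return doc.createElement(tagName) instanceof Iface;
}

// Hypothetical stand-ins for DOM interfaces and documents.
class FakeHTMLElement {}
class FakeHTMLDetailsElement extends FakeHTMLElement {}
const win = { HTMLElement: FakeHTMLElement, HTMLDetailsElement: FakeHTMLDetailsElement };

// Knows the tag name but creates a generic element (enough for a shallow check).
const genericDoc = { createElement: () => new FakeHTMLElement() };
// Creates the dedicated element type for <details>.
const properDoc = {
  createElement: (tag) => (tag === "details" ? new FakeHTMLDetailsElement() : new FakeHTMLElement()),
};

console.log(hasDedicatedInterface(win, genericDoc, "details", "HTMLDetailsElement")); // false
console.log(hasDedicatedInterface(win, properDoc, "details", "HTMLDetailsElement"));  // true
```

For summary no such interface exists. A stricter test there would have to look at behaviour inside a details element instead.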

Note: interestingly, the iPhone and Android formally support contentEditable (inherited from WebKit), but it does not actually work there, so they get no points for this test.

Note: unfortunately, as with the attributes above, whether these properties and methods actually work is not checked. Only their presence in the DOM is evaluated.

Note: web forms are interesting from many points of view. However, the attention this test pays to them is both too great and, overall, too superficial to reflect the real picture, even though it goes noticeably deeper than for the other HTML5 features.

As already mentioned, web forms are an important part of HTML5. Historically, HTML5 grew out of the Web Applications 1.0 project, which later absorbed the Web Forms 2.0 specification describing new form elements and extensions to existing ones. So web forms certainly deserve a lot of attention, as do the other features. Still, they have never been at the center of conversations about HTML5, unlike, say, canvas, the media elements, the semantic structural tags and the various APIs.

As for web forms, and especially the new input types, many points are still ambiguous and will need refinement, above all their appearance and user interaction:

1. Input element types 58 points

A series of tests checking whether an input element can be given a particular type, plus validation and additional features in some cases.

2. 20 points

3. 12 points

Note: the last check contains an outright bug, so it fails even in browsers that support these methods. In the beta version of the test, both event checks have been removed following specification changes, and their points have been redistributed to the selector checks above.
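Checking whether "an input element can be given a particular type" typically relies on a well-known trick. A browser that does not recognize a type falls back to "text" when the type property is read back. The sketch below shows that technique (not h5t's exact code) against a hypothetical stub document. It also shows its limit: a pass proves only that the type is recognized, not that the widget or validation behaves correctly.

```javascript
// Common input-type detection: an unknown type reads back as "text".
function supportsInputType(doc, type) {
  const input = doc.createElement("input");
  input.setAttribute("type", type);
  return input.type === type;
}

// Hypothetical stub document whose inputs know only the listed types,
// mimicking the fallback behaviour of the type property.
function makeStubDocument(knownTypes) {
  return {
    createElement() {
      const attributes = {};
      return {
        setAttribute(name, value) {
          attributes[name] = String(value);
        },
        get type() {
          const requested = (attributes.type || "text").toLowerCase();
          return knownTypes.includes(requested) ? requested : "text";
        },
      };
    },
  };
}

const oldBrowserDoc = makeStubDocument(["text", "password", "checkbox"]);
const formsBrowserDoc = makeStubDocument(["text", "email", "date", "range"]);

console.log(supportsInputType(oldBrowserDoc, "date"));   // false
console.log(supportsInputType(formsBrowserDoc, "date")); // true
```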

This block checks two features: Drag & Drop and the History API.

1. Drag & Drop 10 points

Tests support for the draggable attribute on elements.

Note: interestingly, D&D support already existed in the fourth versions of Netscape and Internet Explorer. In IE5 it was greatly extended, and later it was implemented in Safari as well. Later still, while working on HTML5, Ian Hickson reverse-engineered this D&D implementation and described it in the new standard.

The essence of Drag & Drop lies in special events that mark the individual stages of dragging an element, and in passing data along with the element through special objects. So D&D does exist in IE5+, but h5t does not know about it (unlike Modernizr), because it judges only by one specific new attribute that IE does not support.
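The difference between the two verdicts can be sketched in a few lines. The first function looks only at the draggable attribute, as described for h5t. The second also accepts the D&D event handler properties, roughly the approach Modernizr takes (a paraphrase, not its verbatim code). The element objects are hypothetical stand-ins for a <div> in different browsers.

```javascript
// Attribute-based verdict, as described for h5t above.
function hasDraggableAttribute(el) {
  return "draggable" in el;
}

// Event-based verdict, roughly Modernizr's approach:
// either the attribute or the D&D event handler properties count as support.
function supportsDragAndDrop(el) {
  return hasDraggableAttribute(el) || ("ondragstart" in el && "ondrop" in el);
}

// Hypothetical stand-ins for a <div> in different browsers.
const ieStyleDiv = { ondragstart: null, ondrop: null }; // D&D events, no draggable attribute
const html5Div = { draggable: false, ondragstart: null, ondrop: null };

console.log(hasDraggableAttribute(ieStyleDiv)); // false
console.log(supportsDragAndDrop(ieStyleDiv));   // true
console.log(supportsDragAndDrop(html5Div));     // true
```

In a browser, `document.createElement("div")` would replace the stubs.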

2. Session History 5 points

Checks for the history property and the pushState method in it.

Checks support for Application Cache (for offline applications) and special methods in the Navigator (for information about the data received).

Checks support for the new security-related attributes of the iframe element.

Note: when it comes to something as critical as security, merely checking that these attributes exist is of little value without checking how the browser actually handles them. They enable rather interesting scenarios: restricting the framed content and, respectively, blending it more closely with its environment.

Evaluates support for the HTML Microdata specification. The block consists of a single test that verifies:

Checks Geolocation support. The block consists of a single test that looks for the geolocation property in navigator.

Evaluates WebGL support. The block consists of a single test that checks whether the canvas element supports at least one of a long list of context names: 'webgl', 'ms-webgl', 'experimental-webgl', 'moz-webgl', 'opera-3d', 'webkit-3d', 'ms-3d', '3d'. :)
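Probing the context names listed above can be sketched as a loop that stops at the first name for which getContext() returns something. The try/catch is a defensive assumption: it treats an exception the same as "not supported". The two canvas objects are hypothetical stubs; in a browser you would pass `document.createElement("canvas")`.

```javascript
// Context names listed in the text above, probed in order.
const WEBGL_CONTEXT_NAMES = [
  "webgl", "ms-webgl", "experimental-webgl", "moz-webgl",
  "opera-3d", "webkit-3d", "ms-3d", "3d",
];

// Returns the first context name for which getContext() yields a context, or null.
function findWebGLContextName(canvas) {
  for (const name of WEBGL_CONTEXT_NAMES) {
    let ctx = null;
    try {
      ctx = canvas.getContext(name);
    } catch (e) {
      ctx = null; // treat an exception as "not supported" (defensive assumption)
    }
    if (ctx) return name;
  }
  return null;
}

// Hypothetical canvases: one that knows only a prefixed name, one with no WebGL.
const prefixedCanvas = { getContext: (n) => (n === "experimental-webgl" ? {} : null) };
const noWebGLCanvas = { getContext: () => null };

console.log(findWebGLContextName(prefixedCanvas)); // experimental-webgl
console.log(findWebGLContextName(noWebGLCanvas));  // null
```

Like the other checks, this confirms only that a context object is handed out, not that anything renders correctly.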

Checks support for a number of specifications that describe networking.

Note: as is well known, web sockets have had an unenviable history. The protocol changes periodically (sometimes incompatibly), and because of security threats, support for web sockets is sometimes switched off altogether. The test also ignores the experimental implementation of web sockets for IE.

Checks File API support (one of the least mature, that is, newest, specifications among all those checked).

Note: as with web sockets, these specifications are still far from the point where one could confidently say that their implementations belong in stable product releases. The test also ignores the experimental implementation of the File API for IE.

Checks support for a number of specifications for storing data on the client.

Note: the test ignores the experimental implementation of IndexedDB for IE.

Note: although further work on Web SQL Database has been discontinued, a browser that does not support IndexedDB can still earn an extra 5 points for Web SQL Database (Opera, for example).
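The fallback rule in the note above can be sketched as a small scoring function. This is a minimal sketch under stated assumptions. Only the 5 points for Web SQL Database come from the text; the IndexedDB value is hypothetical. The list of prefixed IndexedDB names is also an assumption about what such a check would look for. The global objects are hypothetical stand-ins for `window`.

```javascript
// Only the 5 Web SQL points come from the text; the IndexedDB value is hypothetical.
const INDEXED_DB_POINTS = 10; // hypothetical
const WEB_SQL_POINTS = 5;

// Fallback scoring: Web SQL Database counts only when no IndexedDB is found.
function databaseScore(win) {
  // Unprefixed and (assumed) vendor-prefixed IndexedDB entry points.
  const hasIndexedDB = ["indexedDB", "mozIndexedDB", "webkitIndexedDB"].some((name) => name in win);
  if (hasIndexedDB) return INDEXED_DB_POINTS;
  return "openDatabase" in win ? WEB_SQL_POINTS : 0;
}

// Hypothetical global objects.
const operaLike = { openDatabase() {} };
const firefox4Like = { mozIndexedDB: {} };
const bothApis = { webkitIndexedDB: {}, openDatabase() {} };

console.log(databaseScore(operaLike));    // 5
console.log(databaseScore(firefox4Like)); // 10
console.log(databaseScore(bothApis));     // 10 (Web SQL is not added on top)
```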

The Web Workers check comes down to testing for a Worker object in window.

Checks whether a <device> element can be created and whether it has a type property.

Note: a month and a half ago, this tag was removed both from the W3C specification and from the WHATWG specification that the author links to. The corresponding functionality is now to be provided through a dedicated API.

Checks several unrelated properties (I suspect just to round off the total score).

Note: this method belongs to DOM Range and should be counted under that specification.

Note: this method has been moved to CSSOM.

The first thing that comes to mind after this breakdown of the test is superficiality. The test sets itself the ambitious task of measuring HTML5 support in various browsers (at least, that is how it is perceived). Yet it does not dig into most of the tested technologies at all and limits itself to formally checking for the presence of certain properties and methods.

Arbitrary distribution of points. I wrote about this at the beginning of the review, but it bears repeating: points for various "achievements" are assigned at the author's sole discretion. As a result, one superficial check is worth 15 points, and a check for the presence of some property is worth 1-2. Meanwhile, 19 checks of the parsing algorithm share only 5 points, about 0.26 points per check.
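Using only the numbers quoted in the paragraph above, the imbalance can be made concrete. A single 15-point check is worth three times the whole set of 19 parsing checks, or as much as 57 parsing checks at their per-check rate:

```latex
\frac{5\ \text{points}}{19\ \text{checks}} \approx 0.26\ \text{points per check},
\qquad
\frac{15}{5/19} = \frac{15 \cdot 19}{5} = 57
```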

Closely related to arbitrariness is skewed emphasis. As noted at the beginning, web forms get a great deal of weight (even with a superficial approach), while the details of Canvas, Video, Audio and the various APIs get very little. A whole series of checks evaluates the parsing algorithm, yet single checks evaluate APIs that comprise 50+ methods and properties (Canvas) and many different nuances.

Bugs. There are bugs both in the checks themselves and in how they relate to the checked standards. Some standards or parts of them are no longer maintained, or have changed so much that the corresponding checks are no longer relevant.

The test includes things that are not directly related to web standards (codecs), as well as WebGL, a third-party (non-W3C) standard.

Selectivity of the checked standards: a third of what is checked (by points) is not part of the HTML5 standard today. Beyond that, neither the HTML5 specification nor the set of new Web Apps specifications is covered 100%, even superficially.

In other words, there is a lot that HTML5Test does not test at all...

Let's start by looking at what the author says about his creation:

So, HTML5 test does not check:

If you ask "can the level of HTML5 support in browsers be judged by HTML5Test?", the answer is more no than yes. There are many reasons for this, and the three key ones are superficiality, imbalance and a large share of unrelated material.

You can frame the question slightly differently: "Does HTML5Test reflect how HTML5 support in browsers improves over time?" Then, given that most individual tests are internally correct, the answer is more yes than no. This, however, does not rule out that support may improve in some direction or parameter without the test noticing, because of its selectivity.

Technically, HTML5Test is certainly of great interest as an example of a well-designed architecture for browser testing.

Judging by most references to HTML5Test (hereinafter h5t), most of its users care more about the final result (the total score), a number that can be cited and compared, than about what actually stands behind it.


Colloquially this kind of thing is called a "puzomerka" (Russian slang for a bragging meter), or, as Lenta.ru aptly put it about a year ago (see below), a contest of "whose HTML5 is longer".

Examples

Let's start with Lenta.ru, which wrote about the test a year ago, when it first appeared:

We checked browsers for compatibility with the latest web standard.

In March, the html5test.com website appeared on the Web. As its name implies, it checks whether a given browser is ready for the new and extremely promising HTML5 web standard.

I also have in front of me issue #02 (125)/2011 of Computer Bild, which runs a "large-scale" browser comparison (a "mega-test on 18 pages"):

Most web pages are written in the hypertext markup language HTML. To display a page correctly, the browser must read all the tags in its source code. This row shows how many of the 384 pages the browser displayed without errors.

The "300 of 384 pages" are actually HTML5 Test points; what the remaining 84 "pages" stand for is not explained. :)

And here is Computerra writing about Mozilla Fennec:

Fennec passes the HTML5 support test with a good score of 207 points, while Safari on iOS gets only 185.

Sadly, Habr is not much more analytical about such tests either. Here is just one recent mention, from a post about Opera's new parsing engine:

Ragnarök also scores 11 out of 11 (plus 2 bonus points) on the somewhat incomplete (and therefore misleading) html5test.com (the two bonus points are for inline SVG and MathML in HTML5)

The catch is not only that Opera has long supported SVG, but also that Opera has no native MathML support at all (only partial support through CSS, following the MathML for CSS profile).

Black box

In practice, h5t is treated as a kind of black box that "checks how well a given browser supports the HTML5 standard", one that is widely cited and fully trusted:

Those a little more immersed in web development than a regular user or most journalists understand, of course, that the test does not just output a number. It is split into blocks, and the test page shows what each point is awarded for, that is, what exactly works and what does not according to the test. The obvious analogy is counting lit light bulbs:

And this is where it gets interesting. What does each bulb mean? Why are some bigger and others smaller? Why are not all of them lit? And, finally, how well do the bulbs serve the stated purpose of checking HTML5 support?

A remark: the test is constantly evolving. If you look at year-old references to it, you will find that both the maximum score and the set of checks were different. The current maximum of 400 points may also change in the future.

So let's go through everything in order.

Who created h5t?

HTML5Test's author is Niels Leenheer. As Niels writes on his blog, he is a graphic designer and web developer who contributes from time to time to various open-source projects, including Geeklog, Nucleus CMS, Zenphoto, Mozilla, KHTML and WebKit. His contributions range from wallpapers and icons to individual patches (for details on each project, see http://rakaz.nl/code).

A few more interesting details:

- As is fashionable (and probably convenient) these days, the test is developed as a project on GitHub, where you can also find open and fixed bugs and disputed points of the test.

- On the test page, Niels thanks Henri Sivonen for permission to use his HTML5 parsing tests, as well as other contributors.

Relationship to organizations promoting web standards

Unlike Acid3, h5t is not associated with any organization that develops or promotes web standards. Here is what the test author writes:

Please note that the HTML5 test is not related to the W3C or the HTML5 working group.

As far as I know, no browser vendor cites h5t in official communications as a measure of HTML5 support (well, except for the well-known trolling post by Mozilla evangelist Paul Rouget about IE9 vs. Fx4.0).

How does h5t work?

The internal structure of the test can be examined directly in the page code and in the source code on GitHub. Unlike Acid3, h5t does not render any pretty pictures, only an overall score and per-test details.

The test consists of:

- Test pages (layouts and styles)

- An engine that runs the tests, collects the results and displays them on the page

- The test suites themselves, with the individual tests inside them.

Testing engine

The testing engine consists of several key functions (objects):

- startTest - starts the testing process.

An interesting detail: to gather statistics, html5test collects users' test results through the BrowserScope API.

- test - contains the list of test suites, runs each suite and collects the results. Simplified, the launch of testing looks like this:

```javascript
test.prototype = {
    // Two groups of suites: HTML5 itself and "Related specifications".
    suites: [
        { title: null, sections: [
            testParsing, testCanvas, testVideo, testAudio, testElements,
            testForm, testInteraction, testMicrodata, testOffline, testSecurity ] },
        { title: 'Related specifications', sections: [
            testGeolocation, testWebGL, testCommunication, testFiles, testStorage,
            testWorkers, testDevice, testOther ] }
    ],

    // e and t are handed to the results constructor as-is;
    // c is a callback that receives the filled-in results object.
    initialize: function (e, t, c) {
        var r = new results(e, t);

        // Declared index variables: undeclared for...in variables
        // would leak into the global scope.
        for (var g = 0; g < this.suites.length; g++) {
            r.createSuite(this.suites[g].title);

            for (var s = 0; s < this.suites[g].sections.length; s++) {
                // Each suite constructor writes its own results into r.
                new (this.suites[g].sections[s])(r);
            }
        }

        c(r);
    }
};
```

Each suite function receives a reference to the results object, into which it records its test results.

- results - builds the visible output from the collected results

- section, group and item - logical representations of test groupings (used when generating and displaying the results)

- auxiliary data and functions:

  - iOS and Android - flags needed by one of the tests (see below)

  - isEventSupported - checks whether the browser supports a given event

  - getRenderedStyle - retrieves the computed style of an element
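To make the engine loop easier to follow, here is a minimal, runnable sketch of the same idea: create each suite, then let every suite constructor write its items into one shared results object. Results, Section, runSuites, the fake global object and both demo suites are hypothetical simplifications, not h5t's actual code. The 10-point values for the Files items follow the testFiles example; the Workers value is illustrative.

```javascript
// Hypothetical, simplified stand-ins for h5t's results/section objects.
class Section {
  constructor(name) {
    this.name = name;
    this.items = [];
  }
  setItem(item) {
    this.items.push(item); // item: { title, passed, value }
  }
}

class Results {
  constructor() {
    this.suites = [];
  }
  createSuite(title) {
    this.suites.push({ title, sections: [] });
  }
  getSection(name) {
    // Sections are attached to the most recently created suite.
    const section = new Section(name);
    this.suites[this.suites.length - 1].sections.push(section);
    return section;
  }
  allItems() {
    return this.suites.flatMap((suite) => suite.sections.flatMap((s) => s.items));
  }
  score() {
    return this.allItems().reduce((sum, it) => sum + (it.passed ? it.value : 0), 0);
  }
  maximum() {
    return this.allItems().reduce((sum, it) => sum + it.value, 0);
  }
}

// Same shape as test.prototype.initialize: one suite after another,
// each suite constructor receiving the shared results object.
function runSuites(suites) {
  const results = new Results();
  for (const suite of suites) {
    results.createSuite(suite.title);
    for (const SuiteConstructor of suite.sections) {
      new SuiteConstructor(results);
    }
  }
  return results;
}

// Hypothetical global object and two tiny demo suites.
const fakeWindow = { FileReader() {}, Worker() {} };

function demoFiles(results) {
  const section = results.getSection("Files");
  section.setItem({ title: "FileReader API", passed: "FileReader" in fakeWindow, value: 10 });
  section.setItem({ title: "FileWriter API", passed: "FileWriter" in fakeWindow, value: 10 });
}

function demoWorkers(results) {
  const section = results.getSection("Workers");
  section.setItem({ title: "Web Workers", passed: "Worker" in fakeWindow, value: 10 });
}

const results = runSuites([{ title: "Related specifications", sections: [demoFiles, demoWorkers] }]);
console.log(results.score(), "of", results.maximum()); // 20 of 30
```

Keeping all state in one results object means the suites never need to know about each other. The display layer only has to walk suites, sections and items.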

Test suites

Each test suite is a separate function that holds the suite's name, the number of tests and the tests themselves.

The internals of individual tests vary, but the overall structure of a suite is clear from this example:

```javascript
// Each suite is a constructor that immediately runs all of its tests.
function testFiles (results) { this.initialize(results); }

testFiles.prototype = {
    name: 'Files',  // section title shown on the results page
    count: 2,       // number of test methods t1 ... tN

    initialize: function (results) {
        this.section = results.getSection(this.name);

        // Call t1, t2, ... by building the method names dynamically.
        for (var i = 1; i <= this.count; i++) { this['t' + i](); }
    },

    t1: function () {
        this.section.setItem({
            title: 'FileReader API',
            url: 'http://dev.w3.org/2006/webapi/FileAPI/#filereader-interface',
            passed: 'FileReader' in window,  // presence check only
            value: 10                         // points awarded if passed
        });
    },

    t2: function () {
        this.section.setItem({
            title: 'FileWriter API',
            url: 'http://www.w3.org/TR/file-writer-api/',
            passed: 'FileWriter' in window,
            value: 10
        });
    }
};
```

Each test is a separate method, t1 ... tN. When the suite is initialized, the browser calls all of these methods in turn.

Each method writes into the shared results object, which the testing engine passes in. It records whether the test passed, how many points it is worth, and additional metadata, from links to the specification to notes on partial or full passing.

More complex tests (for example, for web forms) use slightly more complex structures to group the results, but the essence remains the same.
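The testFiles pattern above can be run outside a browser if the global object is passed in as a parameter, a hypothetical change made only for this sketch. The `this["t" + i]()` dispatch stays the same. The usage at the end shows the consequence of presence-only checks: a global object whose FileReader does nothing at all still earns the full 10 points.

```javascript
// testFiles pattern with the global object injected (hypothetical change).
function TestFiles(items, win) {
  this.items = items;
  this.win = win;
  // Same dispatch as in h5t: build the method names t1 ... tN and call them.
  for (let i = 1; i <= this.count; i++) {
    this["t" + i]();
  }
}

TestFiles.prototype = {
  count: 2,
  t1() {
    this.items.push({ title: "FileReader API", passed: "FileReader" in this.win, value: 10 });
  },
  t2() {
    this.items.push({ title: "FileWriter API", passed: "FileWriter" in this.win, value: 10 });
  },
};

// Sum of the values of all passed items.
function score(items) {
  return items.reduce((sum, item) => sum + (item.passed ? item.value : 0), 0);
}

// An empty FileReader stub still "passes": the check sees only that the name exists.
const items = [];
new TestFiles(items, { FileReader: function () {} });
console.log(score(items)); // 10
```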

Now that we understand in general terms how the test works, it's time to look at the inner workings of the tests themselves.

What does h5t check?

The test page itself lists what h5t checks, with links (not always correct) to the relevant specifications or their parts, so there is little point in duplicating that list here. It is much more important to understand what happens inside and what lies behind each test function.

Let's start by looking at how the tested blocks are distributed across the test and converted into points, and how all of this relates to the HTML5 standard.

Composition

The first thing that catches the eye is that a significant part of the "checked" features is not, as of today, part of the HTML5 specification:

Some of them were removed from the HTML5 specification and split into separate standards (for example, HTML Microdata); others were never part of it (for example, the File API).

Some things have no direct relation to web standards at all: codecs, for whose support bonus points are awarded.

Finally, WebGL is a third-party specification that is not directly related to HTML5 (it uses Canvas to display its rendering output).

Proportions

The second important point that is definitely worth paying attention to is the distribution of points between different specifications and within the HTML5 specification.

A huge number of points (90 out of 400) goes to the new form features. That is almost a quarter of the total, or a third of the points allocated to HTML5 itself. Web forms are, of course, an important part of the new specification, but today they can hardly be called the most in-demand part (more on this below).

Video gets roughly as many points as all of HTML5's new semantic elements combined, and Canvas gets significantly fewer points than Audio.

Some tests are worth 1 point, others up to 10. In effect, how points are distributed among tests is left entirely to the author's will and imagination.

Statuses

The third aspect to keep in mind is which specifications exactly are checked and what state they are currently in.

If you dig in, the first thing that surfaces is that most of these specifications are still drafts:

- HTML5 - Working Draft (WD); Last Call (LC) is expected in May

- HTML Microdata - WD

- Geolocation - Candidate Recommendation (the only exception in the whole set)

- Web Messaging and Server-Sent Events - WD, Last Call

- Web Sockets API - WD (the volatility of the protocol is beyond the scope of this review)

- File API - WD, only recently past its first draft

- Web Storage - WD

- Indexed DB - WD

- Web SQL Database - work has been discontinued, but the test still awards points for it to browsers that do not support Indexed DB

- Web Workers - WD, Last Call

- Local Devices - not in the W3C specification; removed from the WHATWG specification a month and a half ago and replaced with an API

- DOM Range / Text Selection - a single property from a different specification

- CSSOM View Module / Scrolling - a single property moved to another specification (editor's draft)

The second, no less important, point is how early and how volatile many of these specifications are. The picture above shows how often each specification was updated over the past three years. In some places, as mentioned above, things have changed so much that the test no longer reflects the actual state of web standards.

Here is what the test author writes:

Please keep in mind that the tested specifications are still in the process of being developed and may change before becoming official.

Let's take a closer look at the content of each test.

HTML5 block

This is the largest block in the entire test and the one of central interest. It consists of several parts, each of which checks particular features.

Parsing 5 tests | 11 points | 2 bonus points

This block checks some of the document parsing rules, in particular how various exotic and malformed markup is parsed according to the HTML5 specification.

1. <!DOCTYPE html> support 1 point

Verifies that "with the doctype set, the browser works in Strict mode". Internally, it checks that document.compatMode == "CSS1Compat". This property reports the mode the browser is currently rendering in (Quirks vs. Strict).

In reality, the browser should switch to Strict mode with any correct doctype. So this check is not directly related to HTML5: with <!DOCTYPE html>, Strict mode is enabled even in IE6, which certainly does not support HTML5.
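In code, the whole check comes down to reading one property. The function below is a minimal sketch. The name isStandardsMode is hypothetical and not taken from h5t. It takes the document as a parameter so that it can also be exercised with a stand-in object outside a browser.

```javascript
// Minimal sketch of the doctype check (hypothetical function name).
// document.compatMode is "CSS1Compat" in standards mode and "BackCompat" in quirks mode,
// so any doctype that triggers standards mode passes, not only <!DOCTYPE html>.
function isStandardsMode(doc) {
  return doc.compatMode === "CSS1Compat";
}

// In a browser: isStandardsMode(document)
```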

2. HTML5 Tokenizer 5 points

The test checks various exotic ways of writing element names, attributes and content: a total of 19 checks that the inner content of an element is parsed correctly:

- "<div<div>"

- "<div foo<bar=''>"

- "<div foo=`bar`>"

- "<div \"foo=''>"

- "<a href='\nbar'></a>"

- "<!DOCTYPE html>"

- "\u000D"

- "&lang;&rang;"

- "&apos;"

- "&ImaginaryI;"

- "&Kopf;"

- "&notinva;"

- '<?import namespace="foo" implementation="#bar">'

- '<!--foo--bar-->'

- '<![CDATA[x]]>'

- "<textarea><!--</textarea>--></textarea>"

- "<textarea><!--</textarea>-->"

- "<style><!--</style>--></style>"

- "<style><!--</style>-->"

Although you are unlikely to run into most of these cases in practice, it is worth noting that the HTML5 specification really does pay close attention to document parsing and to handling such cases correctly.

(These tests are taken almost literally from Mozilla's HTML parser tests: http://hsivonen.iki.fi/test/moz/detect-html5-parser.html)

3. HTML5 Tree building 5 points

Continuing the topic of document parsing, this test checks how various elements are created dynamically, using tests from the same source:

- Tag inference / automatic creation of tags (it checks that a dynamically created html element contains head and body elements)

- Implied <colgroup> / an implied colgroup around a col element in a table

- List parsing

- Foster parenting support

- Adoption agency / parsing incorrect nesting such as "<i>A<b>B<p></i>C</b>D"

- HTML namespace

Note: this test and the previous one are the only tests in all of h5t that check implementation details of a feature and can claim to check the interoperability of document parsing.

(Let me repeat that, unlike the rest of h5t, the parsing tests are borrowed from Henri Sivonen, who is known for his close involvement in developing the HTML5 parser for Firefox 4. That is, these are tests devised during the development of Fx4, so it is not surprising that Fx4 passes them perfectly. The key property of such selections is that, while correct, they cannot claim full or even near-full coverage of the functionality being checked. In other words, this is selective testing of the variations that interested the author of the code.)

| 4. SVG in text/html | 1 bonus point | Checks that the browser recognizes SVG content inserted into the document by its namespace. |

| 5. MathML in text/html | 1 bonus point | Checks that the browser recognizes MathML content inserted into the document by its namespace. |

Surprisingly, Opera, which has historically had some of the best SVG support, fails this test. Real MathML support is available only in Firefox. Chrome passes this test without actually supporting MathML (you can verify this with Mozilla's test examples).

Note: formally, the content of the svg and math elements and the rules for parsing and rendering it are described by separate specifications. The HTML5 specification only says that a document must support embedding SVG content via the <svg> tag and MathML content via the <math> tag.

Canvas 3 tests | 20 points

This block checks Canvas support. As the descriptions below show, it only verifies that the browser supports the canvas tag and returns the correct context for scripting from JavaScript.

| 1. <canvas> element | 5 points | Checks that the created canvas element has a getContext method. |

| 2. 2d context | 10 points | Checks that the CanvasRenderingContext2D object is supported and that calling getContext("2d") returns an instance of it. |

| 3. Text support | 5 points | Verifies that the received context has a fillText method. |
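Here is a rough sketch of these three checks and their weights. It uses only the calls named in the table: createElement, getContext("2d") and fillText. The instanceof CanvasRenderingContext2D test is replaced with a simple non-null check, so the function can run against a stand-in document. The scoring function itself is hypothetical.

```javascript
// Sketch of the Canvas block: 5 + 10 + 5 points, all of them presence checks.
function scoreCanvas(doc) {
  const canvas = doc.createElement("canvas");
  let points = 0;
  if (typeof canvas.getContext !== "function") return points; // no <canvas> support
  points += 5; // 1. <canvas> element: getContext exists

  const ctx = canvas.getContext("2d");
  if (!ctx) return points;
  points += 10; // 2. 2d context (h5t additionally checks instanceof CanvasRenderingContext2D)

  if (typeof ctx.fillText === "function") points += 5; // 3. text support
  return points;
}
```

A context whose fillText draws nothing at all would still earn the full 20 points, because the result of drawing is never checked.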

Video 7 tests | 31 points | 8 bonus points

This block checks browser support for the new <video> tag. It also checks support for various codecs, which has nothing to do with the HTML5 specification but is of some interest.

| 1. <video> element | 20 points | Checks that the created video element supports the canPlayType method. |

| 2. track support | 10 points | Checks that the browser recognizes the track element. |

| 3. Poster support | 1 point | Checks that the video element supports posters. |

| 4. MPEG-4 support | 2 bonus points | Checks that the browser reports it can play video in the corresponding codec. |

| 5. H.264 support | 2 bonus points | Checks that the browser reports it can play video in the corresponding codec. |

| 6. Ogg Theora support | 2 bonus points | Checks that the browser reports it can play video in the corresponding codec. |

| 7. WebM support | 2 bonus points | Checks that the browser reports it can play video in the corresponding codec. |

Audio 6 tests | 20 points | 5 bonus points

Like the video block, this block checks support for the new <audio> tag. It also checks support for various audio codecs.

| 1. <audio> element | 20 points | Checks that the created audio element supports the canPlayType method. |

| 2. PCM support | 1 bonus point | Checks that the browser reports it can play audio in the corresponding codec. |

| 3. MP3 support | 1 bonus point | Checks that the browser reports it can play audio in the corresponding codec. |

| 4. AAC support | 1 bonus point | Checks that the browser reports it can play audio in the corresponding codec. |

| 5. Ogg Vorbis support | 1 bonus point | Checks that the browser reports it can play audio in the corresponding codec. |

| 6. WebM support | 1 bonus point | Checks that the browser reports it can play audio in the corresponding codec. |

Note: checking codecs in such tests is wrong for two reasons. First, the HTML5 specification does not require support for any particular codecs. Second, codec support is not always a (permanent) property of the browser. For example, IE9 with Google's WebM codecs installed gets an extra 3 bonus points.
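The codec checks rely on canPlayType, which returns "probably", "maybe" or an empty string. The sketch below awards the bonus points from the two tables for any non-empty answer. The MIME and codec strings are typical examples of the kind used for such checks, not necessarily the exact strings h5t uses. canPlay stands in for the browser's canPlayType, and the two "browsers" are hypothetical setups that differ only in WebM support. The 3-point difference mentioned above follows directly: 2 points for WebM video plus 1 for WebM audio.

```javascript
// Bonus points from the Video and Audio tables, keyed by an example MIME type.
// The MIME/codec strings are illustrative assumptions, not copied from h5t.
const codecBonus = [
  { mime: 'video/mp4; codecs="mp4v.20.8"', points: 2 },    // MPEG-4
  { mime: 'video/mp4; codecs="avc1.42E01E"', points: 2 },  // H.264
  { mime: 'video/ogg; codecs="theora"', points: 2 },       // Ogg Theora
  { mime: 'video/webm; codecs="vp8, vorbis"', points: 2 }, // WebM video
  { mime: 'audio/wav; codecs="1"', points: 1 },            // PCM
  { mime: "audio/mpeg", points: 1 },                       // MP3
  { mime: 'audio/mp4; codecs="mp4a.40.2"', points: 1 },    // AAC
  { mime: 'audio/ogg; codecs="vorbis"', points: 1 },       // Ogg Vorbis
  { mime: 'audio/webm; codecs="vorbis"', points: 1 },      // WebM audio
];

// canPlay mimics canPlayType: it returns "probably", "maybe" or "".
function bonusPoints(canPlay) {
  return codecBonus
    .filter(({ mime }) => canPlay(mime) !== "")
    .reduce((sum, { points }) => sum + points, 0);
}

// Two hypothetical setups of the same browser: without and with WebM codecs installed.
const baseSupport = ["video/mp4", "audio/mpeg", "audio/mp4"];
const withoutWebM = (mime) => (baseSupport.some((t) => mime.startsWith(t)) ? "maybe" : "");
const withWebM = (mime) => (mime.includes("webm") || withoutWebM(mime) !== "" ? "maybe" : "");
```

The score changes even though the browser itself has not changed at all. Only the codecs installed on the machine differ.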

Elements 8 tests | 38 points

This block checks support for various new elements and attributes.

| 1. Embedding custom non-visible data | 4 points | Checks for the dataset property on elements. |

| 2. Section elements | 7 points (1 point per element) | Checks that the browser can create the block elements section, nav, article, aside, hgroup, header and footer. |

| 3. Annotated blocks (for some reason called grouping) | 2 points (1 point per element) | Checks that the browser can create the block elements figure and figcaption. |

| 4. Text semantics | 6 points (1 point per element) | Checks that the browser can create text-level semantics elements:

|

- For mark, the specification describes the default background and text colors only as typical ones (they can reasonably differ from one UA to another)

- For ruby / rt / rp, the tested values are specified in the CSS3 Ruby module under development, but not in the HTML5 specification.

| 5. Interactive elements | 6 points (1 point each for details and summary, 2 each for command and menu) | Checks that the browser can create the corresponding elements: details, summary, command and menu. |

| 6. hidden attribute | 1 point | Checks that elements have a hidden attribute. |

| 7. contentEditable | 10 points | Checks that elements have a contentEditable attribute. |

| 8. Document API (for some reason called dynamic markup insertion) | 2 points (1 point each) | Checks that elements have the outerHTML property and the insertAdjacentHTML method. |

Forms 3 tests | 90 points

Note: web forms are a very interesting subject from many points of view. But the attention this test pays to them is excessive and, on the whole, superficial, even if noticeably deeper than for the other HTML5 elements. In particular, it is not enough to reflect the real picture.

As already mentioned, web forms are an important part of HTML5. Historically, HTML5 grew out of the Web Applications 1.0 project and later absorbed the Web Forms 2.0 specification, which described new form elements and extensions to existing ones. So web forms certainly deserve a lot of attention, as do other elements. Still, they have never been the focus of conversations about HTML5, unlike, say, canvas, the media elements, the semantic structural tags and the various APIs.

With web forms, and especially the new input types, many points are still ambiguous and will need work. Above all, this concerns their appearance and user interaction:

- The appearance of the elements is not described by the specification, so in practice it is left to browser developers. (There is a similar problem with audio/video controls, which differ in every browser.) Not describing the appearance in detail is the right decision: depending on the device (for example, on mobile devices), the input elements and their UI and UX may vary. At the same time, even on desktops the elements still do not look or behave uniformly.

- Styling: try giving a uniform style to controls, especially the new ones, whose styles are built into the browser code.

- Localization (see, for example, the date and time pickers) is a fundamental issue with no clear solution today. Yes, it is a task for browser vendors, but so far it has not been solved.

1. Input types 58 points

A series of tests that check whether an input element of a given type can be created, plus validation and extra features in some cases.

| search | 2 points | Type support |

| tel | 2 points | Type support |

| url | 2 points | Type support (1 point), validation (1 point) |

| email | 2 points | Type support (1 point), validation (1 point) |

| Dates and times | 24 points (4 points for each type) | Checked for datetime, date, month, week, time, datetime-local:

|

| number, range | 8 points (4 points for each type) | Checked

|

| color | 4 points | Checked

|

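| checkbox | 1 point | Checks that checkbox supports the indeterminate property. |

| select | 1 point | Checks that select supports the required attribute. |

| fieldset | 2 points | Checks that fieldset supports the elements property and the disabled attribute. |

| datalist | 2 points | Checks that the datalist element is supported and that input has the list attribute. |

| keygen | 2 points | Checks support for the keygen element and its challenge and keytype attributes. |

| output | 2 points | Checks support for the output element. |

| progress | 2 points | Checks support for the progress element. |

| meter | 2 points | Checks support for the meter element. |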
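A common way to detect support for an input type, used by libraries such as Modernizr, relies on the fact that a browser falls back to type "text" for values it does not recognize. The review does not show whether h5t does exactly this, so treat the following as a sketch of the general technique. The stand-in document at the end is hypothetical and only imitates that fallback.

```javascript
// Detect an input type by setting it and reading it back:
// a browser that does not know the type reports "text" instead.
function supportsInputType(doc, type) {
  const input = doc.createElement("input");
  input.setAttribute("type", type);
  return input.type === type;
}

// Hypothetical stand-in for a browser that knows only a few types.
function makeFakeDocument(knownTypes) {
  return {
    createElement() {
      let attr = "text";
      return {
        setAttribute(name, value) {
          if (name === "type") attr = value;
        },
        // Like the DOM property, fall back to "text" for unknown values.
        get type() {
          return knownTypes.includes(attr) ? attr : "text";
        },
      };
    },
  };
}
```

Such a check only shows that the type is recognized. It says nothing about whether the browser offers a usable widget, a uniform style or localization, which are exactly the problems listed above.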

2. 20 points

| pattern, required | 2 points (1 point each) | Checks for the pattern and required attributes on input. |

| 8 points |

| |

| | 4 points | Checks for the autocomplete, autofocus, placeholder and multiple attributes on input. |

| CSS selectors | 4 points (1 point each) | Checks support for the :valid, :invalid, :optional and :required pseudo-classes. |

| | 2 points (1 point each) | Checks support for the input and change events. |

| Validation | 8 points | Checks for the checkValidity method on input. |

| | 2 points (1 point per event) | Checks forminput and formchange event support. |

| Dispatching events | 2 points (1 point per method) | Checks for support for the dispatchFormInput and dispatchFormChange methods. |

User Interaction 2 tests | 15 points

This block includes the verification of two possibilities: Drag & Drop and the History API.

1. Drag & Drop 10 points

Tests support for the draggable attribute on elements.

Note: interestingly, D&D support was already available in the fourth versions of Netscape and Internet Explorer. In IE5 it was greatly expanded, and later it was also implemented in Safari. Later still, while working on HTML5, Ian Hickson reverse-engineered this D&D implementation and described it in the new standard.

The core of Drag & Drop is a set of special events that mark the stages of dragging an element, plus special objects for transferring data along with it. D&D as such exists in IE5+, but h5t does not know about it (unlike Modernizr), since it bases its verdict solely on a specific new attribute that IE does not support.
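The difference between the two approaches is easy to see in code. The first function reproduces what the review says h5t checks: the draggable attribute, visible as a property on elements. The second roughly follows the approach Modernizr used at the time, which also accepts the older drag-and-drop event handlers. Both take an element as a parameter. The stand-in elements are hypothetical.

```javascript
// h5t-style check: only the new draggable attribute counts.
function hasDraggableAttribute(el) {
  return "draggable" in el;
}

// Modernizr-style check: the new attribute OR the older drag events.
function hasDragAndDrop(el) {
  return "draggable" in el || ("ondragstart" in el && "ondrop" in el);
}

// Hypothetical elements: an old-IE-like one with drag events only, and a newer one.
const ieLikeDiv = { ondragstart: null, ondrop: null };
const modernDiv = { draggable: false, ondragstart: null, ondrop: null };
```

For the IE-like element, the first check reports no support, while the second recognizes D&D through its events.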

2. Session History 5 points

Checks for the history property and the pushState method in it.

Web Applications 3 tests | 19 points

Checks support for Application Cache (for offline applications) and for special methods in navigator (for registering handlers for protocols and content types).

| 1. Application Cache | 15 points | The presence of the applicationCache property in the window is checked. |

| 2. ProtocolHandler | 2 points | Checks for the presence of the registerProtocolHandler method in the navigator. |

| 3. ContentHandler | 2 points | Checks for the presence of the registerContentHandler method in the navigator. |

Security 2 tests | 10 points

Checks support for the new iframe attributes.

| 1. sandbox | 5 points | Checks if there is a sandbox attribute in the iframe. |

| 2. seamless | 5 points | Checks if there is a seamless attribute in the iframe. |

MicroData block 1 test | 15 points

Support for the HTML Microdata specification is evaluated. The block consists of a single test that checks:

- support for the itemValue and properties DOM properties

- getItems method support

Geolocation block 1 test | 15 points

Geolocation support is checked. The test includes one test that examines the presence of the geolocation property in the navigator.

WebGL block 1 test | 15 points

WebGL support is evaluated. Verification includes one test that verifies support for at least one of the many contexts for the canvas element: 'webgl', 'ms-webgl', 'experimental-webgl', 'moz-webgl', 'opera-3d', 'webkit-3d' , 'ms-3d', '3d'. :)
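The probing over context names can be sketched as a loop that tries each name in turn and stops at the first one that returns a context. The list is the one given above. The try/catch is a defensive assumption, not something the review states: some implementations may throw on an unknown name instead of returning null. The function name is hypothetical.

```javascript
// Context names probed by h5t, as listed in this review.
const WEBGL_CONTEXT_NAMES = [
  "webgl", "ms-webgl", "experimental-webgl", "moz-webgl",
  "opera-3d", "webkit-3d", "ms-3d", "3d",
];

// Returns the first context name for which getContext gives a non-null result,
// or null if none works. Any one of them is enough for all 15 points.
function findWebGLContextName(canvas) {
  for (const name of WEBGL_CONTEXT_NAMES) {
    try {
      if (canvas.getContext(name)) return name;
    } catch (err) {
      // Defensive: treat a throwing getContext like an unsupported name.
    }
  }
  return null;
}
```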

Communication block 3 tests | 25 points

Checks support for a number of specifications that describe networking.

| 1. Cross-Document Messaging | 5 points | Checks for the presence of the postMessage method in a window. |

| 2. Server-Sent Events | 10 points | Checks for the presence of an 'EventSource' object in a window. |

| 3. Web Sockets | 10 points | Checks for the presence of a 'WebSocket' object in a window. |

Files block 2 tests | 20 points

Checks File API support (one of the least mature specifications of all those checked).

| 1. FileReader API | 10 points | Checks for the presence of a 'FileReader' object in a window. |

| 2. FileWriter API | 10 points | Checks for the presence of a 'FileWriter' object in a window. |

Storage block 4 tests | 20 points

Checks support for a number of specifications for storing data on the client.

| 1. Session Storage | 5 points | Checks for sessionStorage in window. |

| 2. Local Storage | 5 points | Checks for the presence of localStorage in a window. |

| 3. IndexedDB | 10 points | Checks for the presence of an indexedDB object (or webkitIndexedDB, or mozIndexedDB, or moz_indexedDB) in a window. |

| 4. WebSQL Database | 0 (5) points | Checks for the presence of the openDatabase method in a window. |
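The scoring rule for this block can be summarized in a few lines. As described above, Web SQL Database counts only when IndexedDB is absent. This is a hypothetical reconstruction from the table, not h5t's source. win stands for the window object, and every check is a pure presence test.

```javascript
// Storage block scoring, reconstructed from the table above (maximum 20 points).
// Only the existence of a name on win matters, not whether the API works.
function scoreStorage(win) {
  let points = 0;
  if ("sessionStorage" in win) points += 5; // Session Storage
  if ("localStorage" in win) points += 5;   // Local Storage

  // IndexedDB, prefixed variants included.
  const idbNames = ["indexedDB", "webkitIndexedDB", "mozIndexedDB", "moz_indexedDB"];
  if (idbNames.some((name) => name in win)) {
    points += 10;
  } else if ("openDatabase" in win) {
    points += 5; // Web SQL Database: "0 (5)", counted only without IndexedDB
  }
  return points;
}
```

A browser with both IndexedDB and Web SQL gets the same 20 points as one with IndexedDB alone. Without IndexedDB, Web SQL can raise the total to at most 15.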

Web Workers block 1 test | 10 points

Checking Web Workers support comes down to checking for the Worker object in window.

Local Devices block 1 test | 20 points

Checking whether the <device> element can be created and whether it has a type property.

Note: a month and a half ago, this tag was removed both from the W3C specification and from the WHATWG specification that the author refers to. The corresponding functionality is now supposed to be provided through a dedicated API.

Other block 2 tests | 6 points

Several separate properties are checked. (I suspect this is to round off the total.)

| 1. Text Selection | 5 points | The presence of the getSelection method in the window is checked. |

| 2. Scrolling | 1 point | The presence of the scrollIntoView method on elements is checked. |

Conclusions on the content of the tests

The first thing that comes to mind when looking at the breakdown and the test descriptions is superficiality. The test sets itself the big task of checking the level of HTML5 support in various browsers (at least, that is how it is perceived). Yet it does not dig into most of the technologies it checks and limits itself to formally testing for the presence of certain properties and methods.
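The superficiality is easy to demonstrate. The sketch below applies several of the presence checks described above, with their point values from the tables, to a hypothetical window object in which every API is an empty function. The table and function names are illustrative, not h5t's code.

```javascript
// Several presence-only checks from the review: [item, name looked up on window, points].
const presenceChecks = [
  ["Application Cache", "applicationCache", 15],
  ["Cross-Document Messaging", "postMessage", 5],
  ["Server-Sent Events", "EventSource", 10],
  ["Web Sockets", "WebSocket", 10],
  ["FileReader API", "FileReader", 10],
  ["FileWriter API", "FileWriter", 10],
  ["Web Workers", "Worker", 10],
];

// Award the points of every check whose name exists on win.
function scorePresence(win) {
  return presenceChecks
    .filter(([, prop]) => prop in win)
    .reduce((sum, [, , points]) => sum + points, 0);
}

// Hypothetical "browser" where every API exists but does nothing.
const stubWindow = {};
for (const [, prop] of presenceChecks) stubWindow[prop] = function () {};
```

The stub window collects all 70 points these checks can award, although none of the APIs behind them would actually work.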

Arbitrary distribution of points. I already wrote about this at the beginning of the review, but it bears repeating: how many points each "achievement" earns is decided solely at the author's discretion. As a result, one superficial check is worth 15 points, presence checks for properties are worth 1-2 points, and 19 checks of the parsing algorithm together are worth about 5 points, or 0.26 points per check.

Closely related to this arbitrariness is skewed emphasis. As noted at the beginning, web forms get a very large share of the points (even with a superficial approach), while little weight goes to checking the details of Canvas, Video, Audio and the various APIs. A whole series of checks evaluates the parsing algorithm, while single checks evaluate entire APIs, including one (Canvas) with 50+ methods and properties and many different nuances.

Bugs. There are errors both in the checks themselves and with respect to the standards being checked: some of those standards (or parts of them) are no longer supported or have changed so much that the corresponding checks are no longer relevant.

The test includes things that are not directly related to web standards: codecs, as well as WebGL, which is a third-party (non-W3C) standard.

Selectivity of the checked standards. About a third (by points) of what is checked is not part of the HTML5 standard today. Beyond that, coverage is far from 100% (even superficially) both for the HTML5 specification itself and for the set of new Web Apps specifications.

In other words, there are a lot of things that HTML5Test doesn't test at all ...

What does h5t not test?

Let's start by looking at what the author says about his creation:

HTML5 test results are just an indicator of how well your browser supports the upcoming HTML5 standard and related specifications. It does not try to test all the new features of HTML5, nor does it check whether each detected feature actually works.

So, HTML5 test does not check:

- , , ;

- HTML5, , :

- (, role aria-*) , ,

- ;

- support for SVG and MathML beyond recognizing their namespace;

- a number of specifications that are often counted as "HTML5" in the broad sense, for example:

- Navigation Timing

- Progress Events

- RDFa API

- System Information API

- MediaCapture API

- Calendar API

- Clipboard API

- and a number of other equally interesting and raw specifications :)

Findings

Conclusions, as always, depend on your goals and on the questions you want answered. If the question is "can the level of HTML5 support in browsers be judged from the HTML5 test?", the answer is more likely no than yes. There are many reasons for this, and the three key ones are superficiality, imbalance and a lot of extraneous material.

If you frame the question a little differently, "does the HTML5 test reflect how HTML5 support in browsers is improving over time?", then, given that most of the tests are internally correct, the answer is more likely yes than no. This does not, however, rule out that support may improve (in one direction or another, or in some parameters) without the test noticing, because of its selectivity.

Technically, the HTML5 test is, of course, of great interest as an example of a well-built architecture for testing browsers.

Source: https://habr.com/ru/post/118641/
