On the verge of augmented reality: what developers should prepare for (part 3 of 3)

The third and final part of the transcript (see part 1 and part 2) of the talk on augmented reality.

This part covers image processing as applied to augmented reality:

- detection of markers and tags;

- multi-camera marker motion capture systems;

- structured lighting;

- Z-sensors (in particular, Kinect);

- using pose databases;

- purely optical motion capture systems.

And for dessert, a subjective view of the future of augmented reality, which will explain the picture with the dog.

Markers

Much more interesting and innovative is processing the video we get from cameras. The simplest approach is to use markers.


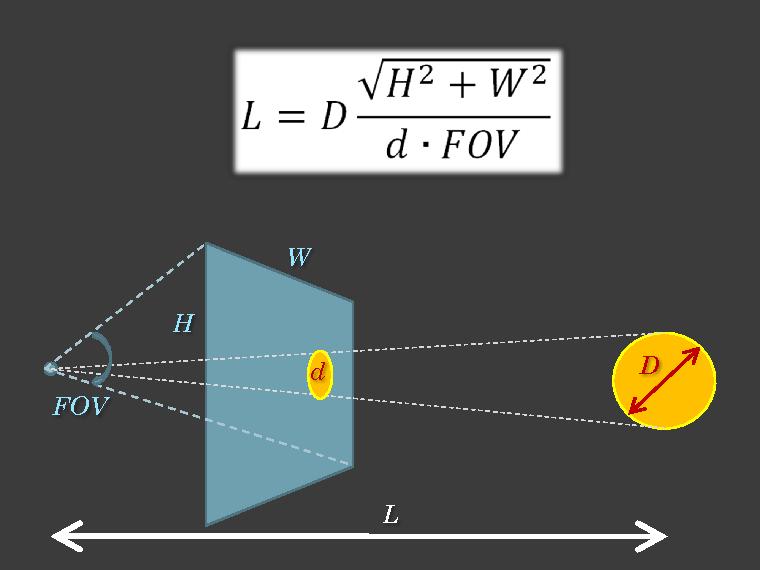

Take the Sony Move, for example: it has a glowing ball. This is a neat trick. If we know the actual diameter of the marker (D), the parameters of the camera, namely its field of view (FOV) and its resolution in width (W) and height (H), and the size in pixels of the marker's projection onto the image (d), we can estimate the distance to the real object (L), and more generally its position in the camera coordinate system.

All of this comes down to very simple formulas: with a 75° field of view, a 640 × 480 image and a 5 cm ball that appears 20 pixels wide, the ball is roughly a meter away. Sony Move tracking works on this elementary principle. The controller also has an accelerometer, a gyroscope and a magnetometer, which give us its orientation angles, but we still need to find out where it is in space.
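The estimate above can be sketched with a simple pinhole-camera model. This is a minimal sketch, not Sony's tracking code: it assumes the FOV is the horizontal field of view, the optical center is the image center, pixels are square and there is no lens distortion. The function names are hypothetical. By similar triangles d / f = D / L, so L = f · D / d, where f is the focal length in pixels.

```python
import math

def focal_length_px(fov_deg: float, width_px: int) -> float:
    """Focal length in pixels for a pinhole camera with horizontal FOV fov_deg."""
    return (width_px / 2) / math.tan(math.radians(fov_deg) / 2)

def marker_distance(diameter_m: float, diameter_px: float,
                    fov_deg: float, width_px: int) -> float:
    """Distance L to a ball of real diameter D that appears d pixels wide (L = f*D/d)."""
    return focal_length_px(fov_deg, width_px) * diameter_m / diameter_px

def marker_position(u: float, v: float, diameter_m: float, diameter_px: float,
                    fov_deg: float, width_px: int, height_px: int):
    """Approximate (X, Y, Z) of the marker center in camera coordinates.

    (u, v) is the pixel position of the marker's projection; the image center
    is assumed to be the optical center.
    """
    f = focal_length_px(fov_deg, width_px)
    z = f * diameter_m / diameter_px
    cx, cy = width_px / 2, height_px / 2
    return ((u - cx) * z / f, (v - cy) * z / f, z)

# 75 degree horizontal FOV, 640 px wide image, 5 cm ball seen as 20 px
print(round(marker_distance(0.05, 20, 75, 640), 2))  # 1.04
```

With these exact numbers the model gives about 1.04 m; a distance of about 1.5 m would correspond to a narrower horizontal field of view of roughly 56°. Either way, halving the marker's apparent size doubles the estimated distance.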

The camera is quite simple: the Sony PlayStation Eye, which, by the way, is a good camera. It is relatively inexpensive, yet it can shoot at a high frame rate with low (geometric) distortion.

Based on this, we can work out where things are and build exactly this kind of augmented reality. In this example, the guy gets hands drawn in place of the Sony Move controllers: from the markers we know where the controllers are, so we can scale each hand depending on whether it is closer or farther away, and from the orientation angles we know how to rotate it.

How high is the frame rate?

60 frames per second; it can even do 77, but that is a kind of extreme mode.

How to detect markers in an image

How do you find a marker in an image? The dumbest way is a threshold. You have a picture, which is just a signal, and you know that the markers are the brightest things in the color you need, so you simply cut them out with a threshold. In fact, most algorithms work this way. There are more sophisticated detectors, for example ones that fit a Gaussian to each blob, and the like, but they are quite expensive, so what you see running in real time is most likely a simple threshold, perhaps with some bells and whistles.
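The threshold approach can be sketched as follows: keep the pixels at or above a threshold, group touching pixels into blobs with a flood fill, and report each blob's centroid as the marker's (x, y) position. This is a minimal sketch on a grayscale image stored as a list of rows; the function name, the 4-connectivity choice and the `min_pixels` noise filter are assumptions for illustration, not any particular tracker's implementation.

```python
from collections import deque

def find_markers(image, threshold, min_pixels=1):
    """Return centroids (x, y) of connected bright blobs in a grayscale image.

    image is a list of rows of intensities; pixels >= threshold count as marker.
    Blobs smaller than min_pixels are discarded as noise.
    """
    h, w = len(image), len(image[0])
    seen = [[False] * w for _ in range(h)]
    centroids = []
    for y0 in range(h):
        for x0 in range(w):
            if seen[y0][x0] or image[y0][x0] < threshold:
                continue
            # Flood-fill one blob (4-connectivity), summing pixel coordinates.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            sum_x = sum_y = count = 0
            while queue:
                x, y = queue.popleft()
                sum_x += x
                sum_y += y
                count += 1
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < w and 0 <= ny < h and not seen[ny][nx]
                            and image[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if count >= min_pixels:
                centroids.append((sum_x / count, sum_y / count))
    return centroids
```

The centroid gives sub-pixel precision for free, which is one reason such a dumb detector works well enough in practice.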

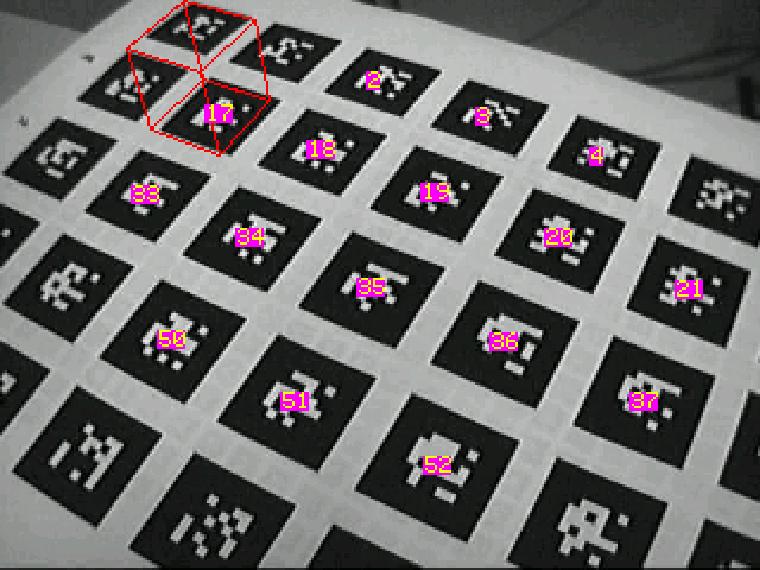

Augmented reality also often uses trickier markers, something like 2D barcodes, only simpler. Once they are detected, you can draw some figure on top of them:

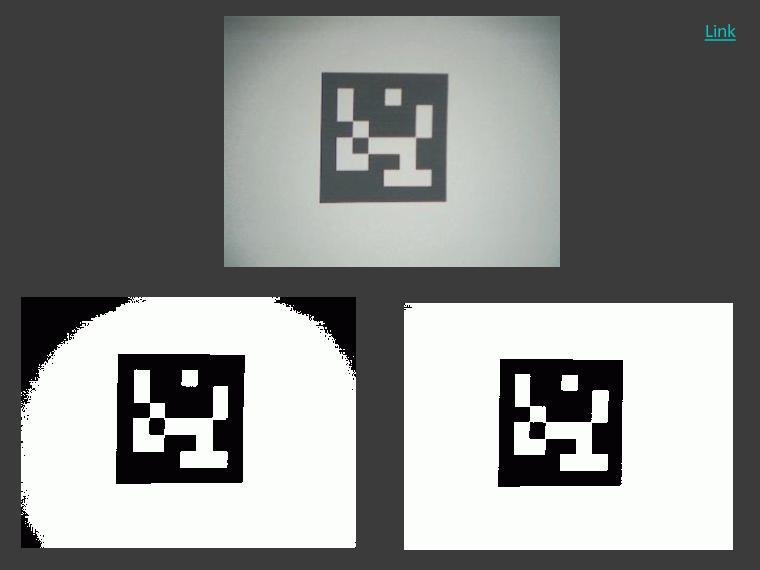

How is this done? You take the original picture, apply a threshold, clean up the contours, and then overlay a grid on the binarized picture, determine which cells are filled and which are not, and match the result against a pattern.
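The grid-reading step can be sketched like this. It assumes the marker has already been found and rectified to a square, binarized image (for example by warping its four detected corners to a square), and it assumes a hypothetical layout: a 6 × 6 grid with a one-cell black border around a 4 × 4 code. Each cell is read by majority vote, and the code is compared with known patterns in all four rotations, which also tells us how the marker is oriented. None of this is the format of a specific marker library.

```python
def read_marker_bits(binary, grid=6):
    """Sample a rectified, binarized square marker into a grid x grid bit matrix.

    binary: list of rows of 0/1 pixels whose size is divisible by grid.
    A cell reads as 1 if most of its pixels are 1 (white).
    """
    cell = len(binary) // grid
    bits = []
    for gy in range(grid):
        row = []
        for gx in range(grid):
            total = sum(binary[y][x]
                        for y in range(gy * cell, (gy + 1) * cell)
                        for x in range(gx * cell, (gx + 1) * cell))
            row.append(1 if total * 2 > cell * cell else 0)
        bits.append(row)
    return bits

def rotate(bits):
    """Rotate a square bit matrix 90 degrees clockwise."""
    return [list(r) for r in zip(*bits[::-1])]

def identify(bits, patterns):
    """Match the inner code against known patterns in all four rotations.

    Returns (marker_id, k), where k is the number of clockwise quarter turns
    that bring the observed code onto the stored pattern, or None.
    """
    if any(bits[0]) or any(bits[-1]) or any(r[0] or r[-1] for r in bits):
        return None  # the outer border must be solid black
    inner = [r[1:-1] for r in bits[1:-1]]
    for k in range(4):
        for marker_id, pattern in patterns.items():
            if inner == pattern:
                return marker_id, k
        inner = rotate(inner)
    return None
```

The code pattern must not be rotationally symmetric, otherwise the orientation would be ambiguous.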

All this image-processing magic is often a mixture of completely dumb algorithms; the main innovation lies in choosing the right mixture.

But there are also more sophisticated algorithms, and as computing power grows, they are becoming practical.

If you have N cameras and M markers, you can triangulate the position of each marker in space from how it projects onto each camera's image plane, and build something more complicated on top of that.
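One standard way to triangulate a marker seen by several calibrated cameras is to turn each detection into a 3D ray (from the camera's optical center through the marker's image point) and find the point with the smallest sum of squared distances to all rays. This is a minimal sketch of that least-squares ray intersection, assuming the camera centers and ray directions are already known in world coordinates; the talk does not say which method commercial systems use, and the function names are hypothetical.

```python
def solve3(A, b):
    """Solve the 3x3 system A x = b by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate geometry: parallel rays or too few cameras")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for k in range(col, 4):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * 3
    for r in (2, 1, 0):
        x[r] = (m[r][3] - sum(m[r][k] * x[k] for k in range(r + 1, 3))) / m[r][r]
    return x

def triangulate(centers, directions):
    """Least-squares point closest to several rays (one per camera).

    Solves sum_i P_i X = sum_i P_i c_i, where P_i = I - d_i d_i^T projects
    onto the plane perpendicular to ray i with unit direction d_i.
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for c, d in zip(centers, directions):
        norm = sum(v * v for v in d) ** 0.5
        d = [v / norm for v in d]
        for i in range(3):
            for j in range(3):
                p = (1.0 if i == j else 0.0) - d[i] * d[j]
                A[i][j] += p
                b[i] += p * c[j]
    return solve3(A, b)
```

With noisy detections the rays no longer meet exactly, and the least-squares point is a reasonable compromise; adding more cameras makes it more stable and helps when a marker is hidden from some of them.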

One of the dinosaurs that actively exploits this idea is VICON. It costs about a hundred thousand dollars. A person puts on a special suit covered with a bunch of markers, a large number of cameras are set up around them, and then, based on the positions of these markers, you can try to reconstruct the skeleton.

That is, you reconstruct how the human skeleton moves by tracking these markers. In the video, by the way, it is quite dark and you cannot see the markers themselves, because they are lit with infrared light and the cameras have infrared sensors.
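As a very small piece of that reconstruction, once three markers on adjacent body segments are triangulated (say shoulder, elbow and wrist), the joint angle follows from the dot product of the two segment vectors. This is a simplified illustration with a hypothetical function name; real systems fit a whole skeletal model to all markers at once, which is far more involved.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at joint b formed by 3D markers a-b-c (e.g. shoulder-elbow-wrist)."""
    u = [a[i] - b[i] for i in range(3)]
    v = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(u, v))
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    cos_t = max(-1.0, min(1.0, dot / (nu * nv)))  # clamp floating-point rounding
    return math.degrees(math.acos(cos_t))
```

A fully extended arm gives 180°, and a right-angle bend gives 90°.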

The cameras themselves, by the way, send only the marker coordinates to the computer, because with that many cameras, streaming full images would overwhelm even modern buses. So the dumbest algorithm, a threshold, is built into each camera on a special chip. It transmits, roughly speaking, the (x, y) coordinates of each marker's image, and the computer then crunches those.

Still, recovering the skeleton pose from the markers takes fairly tricky mathematics; it is not easy. VICON sells its system for a hundred thousand dollars, and among industrial marker systems it really has only one serious competitor, OptiTrack. OptiTrack systems start at around six thousand dollars, but a setup that works properly costs at least ten thousand, and it comes with a lot of restrictions, such as a single actor.

This is just to give you a sense of the market, which is still expensive.

To sum up: image processing is hard, so here is a way out: use markers. Apply a threshold and that's it, you already have a point, and points are something we can work with.

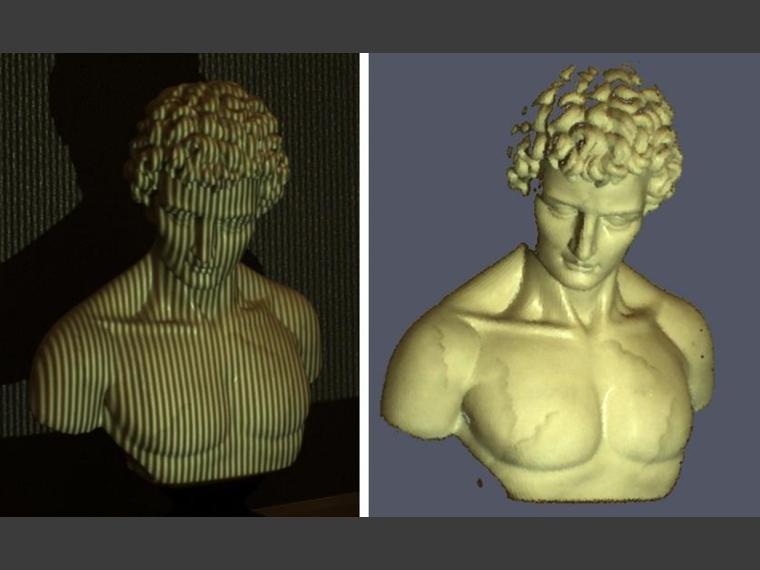

Structured light

The next trick for simplifying image processing is to project structured light onto the object. In this example the light is in the visible range, so ordinary visible-light cameras work. Shooting with several cameras, we get a structure in the image that is not hard to detect, and from how it shifts in one image relative to another we can again reconstruct a three-dimensional model by triangulation.
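For the simplest geometry, two rectified views (cameras side by side, image rows aligned), the triangulation reduces to one formula. If a pattern feature shifts by a disparity of d pixels between the views, the focal length is f pixels and the distance between the cameras (the baseline) is B, then by similar triangles the depth is Z = f · B / d. This is a minimal sketch under that rectified-stereo assumption; the function names and the numbers in the checks are hypothetical.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z of a feature seen in two rectified views: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

def depth_step(depth_m: float, focal_px: float, baseline_m: float,
               disparity_step_px: float = 1.0) -> float:
    """Approximate depth change caused by a disparity error: dZ ~ Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_step_px
```

Because depth is inversely proportional to disparity, a one-pixel matching error costs much more depth accuracy far away than close up, which is why such scanners work best at short range.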

About ten years ago I did a bit of this myself; I still have a scan of Lenin's face somewhere on my laptop. A scary thing.

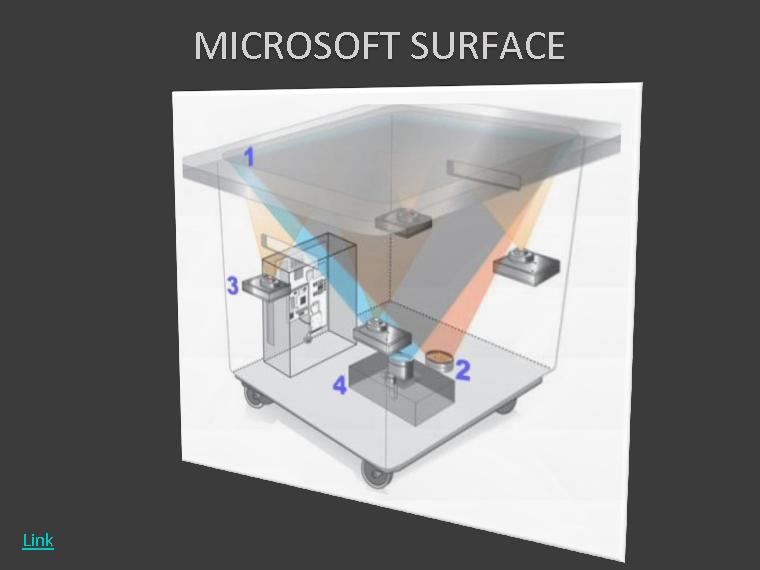

Microsoft Surface works on this principle. For now it is essentially a research and development product that has not reached consumers, and maybe it never will, because it is quite expensive 1).

Underneath the tabletop there are four or even more infrared cameras and structured infrared illumination, and from this it works out what has been placed on the Surface, which fingers are touching it, and so on. These are really cool, intuitive interfaces.

What I like most is that when you put a phone on it, it detects the phone and you can upload things to it. It is a cool application of augmented reality, very physical.

Z-cameras / Z-sensors

Next come Z-cameras, or Z-sensors. This of course means Microsoft Kinect, which I promised to tell you more about.

Kinect, formerly called Project Natal, traces its roots to the Z-sensor and Z-cam from 3DV, a company Microsoft bought.

And the progenitor of this sensor is right here, live. If you can see the laptop screen: as I walk up, here is the depth map, and this is the Z-sensor itself.

How exactly does this sensor work? It is essentially like a laser rangefinder. Light is sent out, reflects off the object and comes back after a tiny delay; differences in depth show up as differences of picoseconds, which they have somehow learned to measure. What is measured is essentially the time it takes the light to travel to the object and back.
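The time-of-flight arithmetic is short: light travels to the object and back, so the distance is L = c · t / 2. This sketch only shows the idealized relation and makes the scale of the delays concrete; real depth cameras typically measure the delay indirectly rather than by timing a single pulse, and that part is not modeled here. The function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second

def tof_distance(round_trip_s: float) -> float:
    """Distance to the object from the light's round-trip time: L = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2

def round_trip_time(distance_m: float) -> float:
    """Round-trip time of light for an object distance_m away."""
    return 2 * distance_m / SPEED_OF_LIGHT

# An object 1.5 m away returns the light after about 10 ns,
# while a 1 cm change in depth changes the delay by only about 67 ps.
print(round_trip_time(1.5) * 1e9)
print(round_trip_time(0.01) * 1e12)
```

This is why the hardware has to resolve picosecond-scale timing differences to get centimeter-level depth.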

I recorded this video at home; this is what the depth map looks like.

On top of that, it captures a regular RGB image, so you can analyze that further as well. As you can see, this is a lot of good data: depth, distance. It is much easier to work with than plain RGB, for example for recognizing gestures and the like.

But Kinect is different. It seems that even though Microsoft bought 3DV, Kinect is built on a different principle 2). It has two cameras that determine depth and one RGB camera 3). Apparently they project some kind of structured infrared illumination onto the object, capture it with the two cameras, and a rather complex chip computes the depth of different points by correlating these images with the projected pattern. In other words, they essentially changed the technology.
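The chip's actual algorithm is not described in the talk. A common textbook way to "correlate an image with the projected pattern" is block matching: take a small window of the known pattern around a point, slide it along the same row of the observed image, and keep the shift with the highest normalized cross-correlation (NCC). This is a one-dimensional sketch of that idea with hypothetical names and parameters; the shift it finds is what triangulation then turns into depth.

```python
import math

def ncc(a, b):
    """Normalized cross-correlation of two equal-length sequences, in [-1, 1]."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    return sum(x * y for x, y in zip(da, db)) / denom if denom else 0.0

def best_shift(reference, observed, x, window=5, max_shift=20):
    """Find how far the pattern around reference[x] moved in the observed row.

    Returns (shift, score) for the shift with the highest NCC. Assumes the
    reference window around x lies fully inside the reference row.
    """
    half = window // 2
    ref = reference[x - half:x + half + 1]
    best, best_score = None, -2.0
    for s in range(-max_shift, max_shift + 1):
        lo = x + s - half
        if lo < 0 or lo + window > len(observed):
            continue  # shifted window would fall outside the observed row
        score = ncc(ref, observed[lo:lo + window])
        if score > best_score:
            best, best_score = s, score
    return best, best_score
```

NCC is used instead of a raw difference because it ignores overall brightness and contrast, which vary with the surface the pattern lands on. The pattern itself should be non-repeating, otherwise several shifts match equally well.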

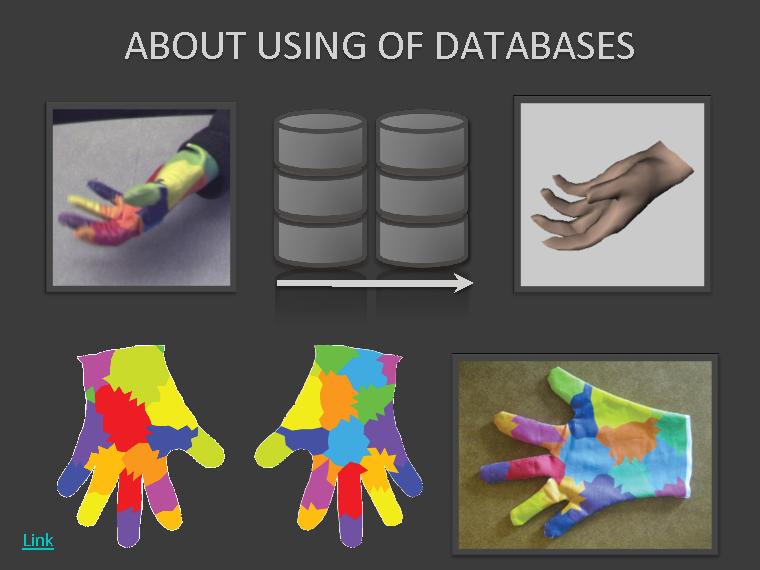

Another interesting thing about Kinect is that they used a database of poses for recognition. After all, you still have to recognize what pose the person is in at any given moment, and they need it in real time, because this is all for games. They used some kind of database, and it is all very complicated and very secret 4).

There is work at MIT that does something similar for the hand.

You put on a special colored glove (you can order one, or print the pattern on a white lycra glove), and then they approximately recover the pose of the hand:

- downscale the image;

- compute some of its features;

- look up the nearest match in the database;

- and once it is found, they have a ready-made pose.
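The four steps above can be sketched as a plain nearest-neighbor lookup. This is a minimal sketch, not the MIT system: it assumes grayscale images stored as lists of rows, uses a downscaled and normalized thumbnail as the "features", and compares them with Euclidean distance. The database is assumed to be a list of (descriptor, pose) pairs built offline; all names are hypothetical.

```python
import math

def downscale(image, factor):
    """Average-pool a grayscale image (list of rows) by an integer factor."""
    h, w = len(image) // factor, len(image[0]) // factor
    return [[sum(image[y * factor + dy][x * factor + dx]
                 for dy in range(factor) for dx in range(factor)) / factor ** 2
             for x in range(w)]
            for y in range(h)]

def descriptor(image, factor=4):
    """Downscale, flatten and normalize to unit length (reduces brightness effects)."""
    flat = [v for row in downscale(image, factor) for v in row]
    norm = math.sqrt(sum(v * v for v in flat)) or 1.0
    return [v / norm for v in flat]

def lookup_pose(image, database, factor=4):
    """Return the pose stored with the database entry nearest to the image."""
    query = descriptor(image, factor)
    _, pose = min(database,
                  key=lambda entry: sum((q - d) ** 2 for q, d in zip(query, entry[0])))
    return pose
```

A brute-force scan like this is fine for a small database; a large pose database would need an index structure or a more compact descriptor to stay real-time.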

This is how it works, and here is what you can use the hand tracking for. It is quite a fun piece of work. For now, a lot of this comes down to applying some trick: attach a marker, put something special on. Look at the application they came up with.

And then there is their gag that they have not washed their hands, so they wash them with the glove on. Well done, guys with a sense of humor.

True Optical: several cameras

Still, you can make the effort and do it purely optically, without tricks: set up a bunch of cameras and throw in more complex algorithms. There is a commercial system like this, again for motion capture of human movement, called Organic Motion.

It uses some clever algorithms: they slice the person up to recover his pose, so it all looks a little barbaric. Here is a video. This is Andrew Tschesnok, the author of the idea and the CEO; he came up and talked to us at the exhibition, a completely ordinary and sociable American guy.

Here he explains how his system works: there are lots and lots of cameras set up, and you can see a special background. Right in real time it captures him, and a character on the screen is animated, like this.

As for the price, such a system starts at 60 thousand dollars, turnkey, including the equipment.

We are also working in this niche: we also do markerless motion capture, but our goal is to make it truly accessible, with ordinary cameras (we use PlayStation cameras ourselves) and ordinary computers. If anyone is interested, I can show the program afterwards. We can't capture anything here because we didn't bring the setup, and besides, our processing is offline rather than real-time, so it is not as interesting to watch live.

Here is an example: one of our users made this action movie with our mocap.

People already use it, although it still needs a lot of polishing 5). But it is much better than sitting and animating all of this by hand. So that's that.

True Optical: one moving camera

And finally, to wrap up: truly optical again. We had many cameras; now imagine you have a single moving camera. Over time you see the same objects from different viewpoints, and from that you can recover information about those objects.

Here is a live program that tracks points in the image and, based on them, finds planes in the real three-dimensional world, on which you can then place characters. It is all quite fun, and this is a live demo. Moreover, all of it is available as source code. The only catch is that there are no prebuilt examples, so you have to complete a quest to get it all to build. I couldn't manage it under Windows, although it is said to be possible. Admittedly, I did not try for long, but if someone is very interested, you can play with it.
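The "find a plane to put characters on" step can be sketched with RANSAC. This assumes the tracking part has already produced 3D points (which is the hard part and is not shown), and it is not the code of the program in the demo: repeatedly pick three random points, build the plane through them, count how many points lie within a tolerance of it, and keep the plane with the most support. Names, tolerance and iteration count are hypothetical.

```python
import random

def plane_from_points(p, q, r):
    """Plane (unit normal n, offset d) with n . x + d = 0 through three points, or None."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    norm = sum(c * c for c in n) ** 0.5
    if norm < 1e-12:
        return None  # the three points are collinear
    n = [c / norm for c in n]
    return n, -sum(n[i] * p[i] for i in range(3))

def ransac_plane(points, iterations=200, tolerance=0.01, seed=0):
    """Find the plane supported by the most points.

    Returns (normal, offset, inlier_indices).
    """
    rng = random.Random(seed)
    best = (None, None, [])
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        inliers = [i for i, x in enumerate(points)
                   if abs(sum(n[k] * x[k] for k in range(3)) + d) < tolerance]
        if len(inliers) > len(best[2]):
            best = (n, d, inliers)
    return best
```

RANSAC suits this job because many tracked points belong to other objects; those outliers simply fail to support the dominant plane, such as a floor or a tabletop.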

Future

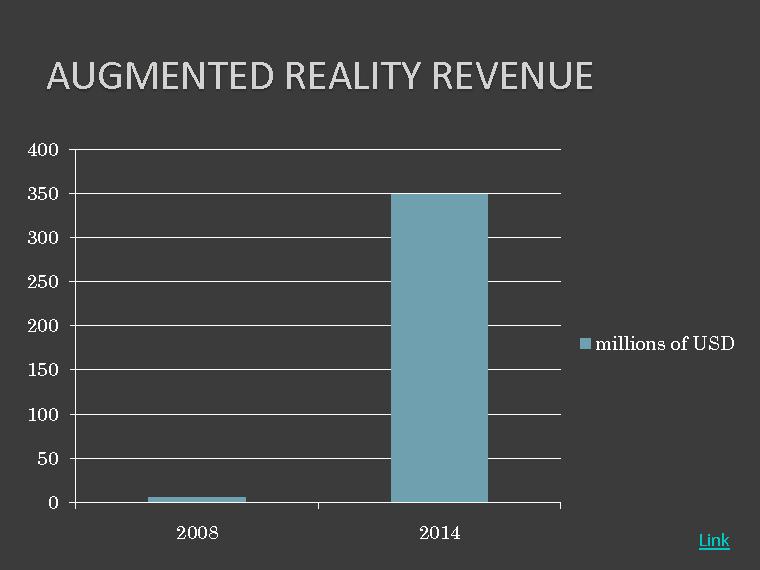

Now about the future. A presentation must always talk about money.

The forecasts predict a sharp increase in all kinds of money, although for now it is not that many millions of dollars, and the research is still rather toy-like.

But there are actually three problems in the way.

Robustness

The first is robustness, i.e. real reliability. Everything you see and try to use is actually buggy and rather unreliable.

Robustness is like a roly-poly toy: despite noise, despite something being imperfect, it keeps working. We don't have that yet; these technologies are still very fragile and must be used very carefully.

Quick response

The second is fast response. If we perform an action and see the response only after a delay, cognitive dissonance arises in our head. By the way, this is the main problem with Microsoft Kinect: there is a very noticeable lag between an action and its display in the game, so all its games are very casual, not hardcore 6). For hardcore games it is still not usable, whereas Sony Move is: it has almost no lag, somewhere around ten milliseconds.

Usefulness

And third, the usefulness of all this. So far many of these applications are about as useful as a fifth leg on a dog. For example, there is an Android app that measures the distance to an object on the floor from the device's height: you enter the height at which you are holding the device, point a crosshair in the camera view at a spot on the floor, and it shows you the distance. We can compute the tilt angle (we figured that out and mastered a Kalman or alpha-beta filter), plug in the height, and then by the law of sines or cosines, whichever you prefer, we get the actual distance.
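The app's internals are not known, so this is a hypothetical sketch of the described recipe. A noisy tilt angle from the sensors is smoothed with an alpha-beta filter (a simpler, fixed-gain relative of the Kalman filter), and then the floor distance comes from the right triangle formed by the device height and the line of sight, where tan(angle below horizontal) = height / distance. For a right triangle the law-of-sines or law-of-cosines route reduces to this tangent. The class name, the alpha and beta values and the sensor input are assumptions.

```python
import math

class AlphaBetaFilter:
    """Fixed-gain filter that smooths a measurement and tracks its rate of change."""

    def __init__(self, alpha: float, beta: float, x0: float = 0.0, v0: float = 0.0):
        self.alpha, self.beta = alpha, beta
        self.x, self.v = x0, v0

    def update(self, measurement: float, dt: float) -> float:
        predicted = self.x + self.v * dt           # predict assuming a constant rate
        residual = measurement - predicted         # how far the measurement disagrees
        self.x = predicted + self.alpha * residual
        self.v += self.beta / dt * residual
        return self.x

def floor_distance(height_m: float, depression_deg: float) -> float:
    """Horizontal distance to the floor point under the crosshair.

    depression_deg is the angle of the camera axis below the horizontal.
    """
    return height_m / math.tan(math.radians(depression_deg))
```

In use, each sensor reading would go through `update(angle, dt)` and the smoothed angle into `floor_distance`; a device held 1.5 m high and tilted 45° below the horizontal is aimed at a point 1.5 m away.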

Why anyone needs this, I don't know. So for now there are some problems here.

The future is now

As for what the future might look like, here is how BMW sees it; BMW is always a cool way to show the future.

The technician of the future. Someone brings his BMW to a garage run by Armenians who, as we know, just came from the village yesterday and have no idea how the car works, so they put on special glasses, and the glasses tell them: "Dude, you need to unscrew these two screws here." He says "Next step", the glasses make out his Armenian accent and show "now take this off". So you can take the car apart; whether you can put it all back together is unclear. Anyway, that is how BMW sees the future.

This is how the film AVATAR was shot (I have heard it said that watching how Avatar was shot is much more interesting than watching Avatar itself).

Here they are in special suits with special markers, and they also have black dots on their faces: facial animation has to be captured too, which I haven't mentioned yet. There are black dots on the face, and a camera hangs right here on a head-mounted bracket. So they capture the facial animation and the full-body movement at the same time.

And they rode real horses in place of the mythical creatures of Pandora. If you search YouTube for "AVATAR MOCAP", you will find a bunch of these videos, uploaded ten times over by different people and organizations. Take a close look: they put black dots on, and those are markers too. In Hollywood they don't overcomplicate things.

As I understand it, there was a lot of innovation there: lighting the face so that it stays bright, and they even learned to track eye movements. Here (the scene with the dragon at the cliff), do you think this was all drawn on a computer? It was rendered, yes, but the actors still had to fly.

I was also once shocked to learn how The Matrix was shot: it turned out the actors were hung on wires so they could run along the walls. So actors still have to sweat.

That's all, SEE YOU IN AR!

Notes

1) In the six months since the talk, Microsoft has announced a new version, Surface 2.0, which works on a new principle, PixelSense. Sales of the Samsung SUR40 device are expected to begin soon.

2) As soon as Kinect went on sale, people took it apart and found a PrimeSense chip inside. PrimeSense is also an Israeli company (like 3DV), but it did not sell out to Microsoft, so its chip is now also used in the ASUS Xtion.

3) In fact, there is one infrared camera, one RGB camera and an infrared laser projector for structured illumination. Hello again, structured light! This is what a room with a working Kinect looks like through infrared goggles.

4) Not long ago, Microsoft Research published a paper on this topic: Real-Time Human Pose Recognition in Parts from Single Depth Images. Interestingly, the official patent describes a slightly different approach.

5) Since then, they have released a version with Kinect support and made a lot of improvements.

6) Less than a month ago, Microsoft announced that a new version of its body-tracking algorithm with significantly lower latency is in the works.

Source: https://habr.com/ru/post/118243/
