Multi-camera video analytics

In our first post for the Habr community, we want to describe the most interesting area of work at the company “Synesis”: multi-camera video analysis, or more precisely, a multi-camera object tracking algorithm.

Our team does applied research in video analytics and develops high-speed machine vision algorithms that automatically classify situations from streaming video data. We plan to cover the most interesting results in our corporate blog, and we would be grateful for ideas and criticism.

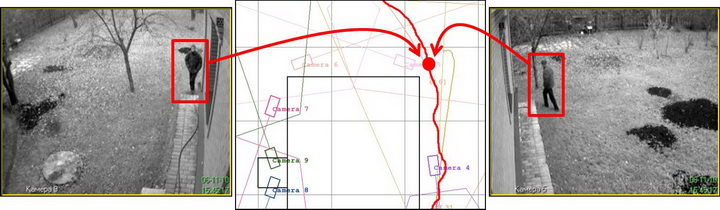

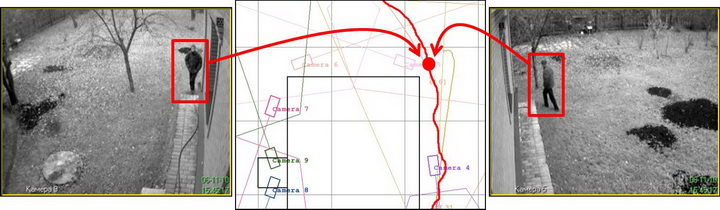

Tracking within the field of view of one camera

An integral component of almost any video analysis system is the tracking algorithm. Why does a smart video surveillance system need it? In general, object tracking is necessary for automatic recognition of situations, either by rules (for example, a person entered a controlled zone, stopped, or left an object behind) or without rules, in self-learning systems. A tracking failure almost always leads either to a missed alarm or to repeated triggering of the video analytics.

Habr has already covered object tracking in articles about the work of Zdenek Kalal and of Microsoft Research. “Single-camera” tracking is implemented, for example, in the MagicBox device.

The result of the “single-camera” tracking algorithm is a sequence of space-time coordinates for each object. The trajectories may have breaks where the object leaves the camera's field of view or is occluded by an obstacle.

Tracking across the fields of view of several cameras

The multi-camera tracking algorithm, the subject of this post, continuously compares object position data from different cameras, taking into account the cameras' relative positions and their registration to the terrain map. The algorithm builds a generalized trajectory of the object as it moves from camera to camera and projects this trajectory onto the map. The object may be observed by several cameras simultaneously or may be in a blind zone. The multi-camera trajectory makes it possible to implement geovisual search, automatic selection of the viewing angle, and other security features often shown in science fiction films.

Spatial Camera Calibration

Before the system goes into operation, the surveillance zone of each camera must be registered to the map of the controlled area. Our calibration algorithm uses four points whose coordinates the user specifies both on the camera frame and on the map.

We recommend choosing reference points on the terrain that are easy to identify visually from different angles, such as trees, corners of buildings, and fences. The algorithm computes the coordinate transformation matrix A by the method of least squares, where r denotes the coordinates on the camera frame and R the global coordinates on the map.

Thus, the transformation matrix A maps the two-dimensional coordinates of an object in the camera frame to its global coordinates on the map.
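The post does not state the form of A, so the following is only a minimal sketch of how such a calibration could be computed. It assumes, purely for illustration, an affine model (a 2x3 matrix acting on (x, y, 1)) fitted by least squares through the normal equations. The function names `solve_linear`, `fit_affine`, and `to_map` are hypothetical and not part of the Synesis software.

```python
def solve_linear(m, b):
    """Solve the square system m x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [rhs] for row, rhs in zip(m, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("calibration points are degenerate (e.g. collinear)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x


def fit_affine(frame_pts, map_pts):
    """Least-squares fit of a 2x3 matrix A such that (X, Y) ~= A (x, y, 1).

    Assumption: an affine model; the original article does not specify one.
    """
    rows = [(x, y, 1.0) for x, y in frame_pts]
    # Normal equations (M^T M) a = M^T t, solved once per map coordinate.
    mtm = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    a = []
    for k in range(2):
        mtt = [sum(r[i] * p[k] for r, p in zip(rows, map_pts)) for i in range(3)]
        a.append(solve_linear(mtm, mtt))
    return a


def to_map(a, point):
    """Apply the fitted matrix to one frame point (x, y)."""
    x, y = point
    return (a[0][0] * x + a[0][1] * y + a[0][2],
            a[1][0] * x + a[1][1] * y + a[1][2])


# Four hypothetical calibration points: pixels on the frame, meters on the map.
frame = [(0, 0), (100, 0), (100, 50), (0, 50)]
world = [(5, 20), (15, 20), (15, 10), (5, 10)]
A = fit_affine(frame, world)
print(to_map(A, (50, 25)))
```

An affine model has six unknowns, so four point pairs leave the system overdetermined and least squares applies naturally. A camera viewing a ground plane in perspective is described more accurately by a projective transformation (homography), which four point pairs determine exactly; which of the two the original algorithm uses is not stated.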

Step 1: Preprocessing

The input to the multi-camera tracking algorithm is the stream of space-time coordinates of moving objects recorded by the different cameras. Since the “single-camera” video analysis is not synchronized in time across cameras, the raw coordinates are first brought to a common time scale by linear interpolation. The coordinates are then converted to the global coordinate system using the matrix A.
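The resampling part of this step can be sketched as follows. This is an illustration under assumptions rather than the authors' code: a track is taken to be a time-sorted list of `(t, x, y)` tuples, the common time grid is chosen by the caller, and the name `resample` is hypothetical.

```python
from bisect import bisect_right


def resample(track, times):
    """Linearly interpolate a track [(t, x, y), ...] (sorted by t) at the given times.

    Times outside the observed interval yield None, because the camera
    did not see the object then.
    """
    ts = [p[0] for p in track]
    out = []
    for t in times:
        if not ts or t < ts[0] or t > ts[-1]:
            out.append(None)
            continue
        j = min(bisect_right(ts, t), len(ts) - 1)  # right neighbour of t
        i = max(j - 1, 0)                          # left neighbour of t
        t0, x0, y0 = track[i]
        t1, x1, y1 = track[j]
        w = 0.0 if t1 == t0 else (t - t0) / (t1 - t0)
        out.append((x0 + w * (x1 - x0), y0 + w * (y1 - y0)))
    return out


# One camera reports samples at irregular times; bring them to a 0.5 s grid.
track = [(0.0, 0.0, 0.0), (0.4, 4.0, 2.0), (1.0, 10.0, 2.0)]
print(resample(track, [0.0, 0.5, 1.0, 1.5]))
```

Returning `None` outside the observed interval keeps the gaps explicit, so a later step can decide whether to predict the position or to treat the object as unobserved.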

The illustration of the object trajectories after projection onto the map shows the coverage areas of nine cameras, five of which registered the object's movement. The “single-camera” trajectories are drawn in the same color as the corresponding cameras and their coverage areas.

Step 2: Preselection of objects in the camera overlap area

The second step is a rough comparison of the global coordinates of observed objects that may have been seen by several cameras but correspond to one physical object. To do this, we compute the distance on the map between the observed objects at the current point in time. If the distance is below a chosen threshold, for example 1 meter, we mark the objects for the next processing step.

If a camera has no data for the time point under consideration (the object is outside that camera's range), the object's location is predicted, on the assumption that the object's speed does not change while it is out of the camera's sight.

The result of step 2 is a list of observed objects and their “single-camera” trajectories that may correspond to a single physical object.
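A minimal sketch of this preselection might look like the code below. The data layout (a dictionary keyed by `(camera_id, object_id)`), the function names `predict` and `candidate_pairs`, and the rule that two objects from the same camera are never paired are all assumptions made for the example; only the distance threshold and the constant-velocity prediction come from the description above.

```python
import math


def predict(last_two, t):
    """Extrapolate a map position at time t from the last two samples (t, X, Y),
    assuming the velocity stays constant while the object is out of sight."""
    (t0, x0, y0), (t1, x1, y1) = last_two
    vx = (x1 - x0) / (t1 - t0)
    vy = (y1 - y0) / (t1 - t0)
    return (x1 + vx * (t - t1), y1 + vy * (t - t1))


def candidate_pairs(positions, threshold_m=1.0):
    """Return pairs of objects from different cameras that lie closer than threshold_m.

    positions: {(camera_id, object_id): (X, Y)} in map coordinates at one time instant.
    """
    keys = sorted(positions)
    pairs = []
    for i, a in enumerate(keys):
        for b in keys[i + 1:]:
            if a[0] == b[0]:
                continue  # assumption: one camera never reports the same object twice
            if math.dist(positions[a], positions[b]) < threshold_m:
                pairs.append((a, b))
    return pairs


positions = {
    ("cam1", 7): (10.0, 5.0),
    ("cam2", 3): (10.4, 5.3),   # 0.5 m from cam1's object 7
    ("cam2", 4): (20.0, 5.0),   # far away
}
print(candidate_pairs(positions))
print(predict(((0.0, 0.0, 0.0), (1.0, 2.0, 1.0)), 1.5))
```

The pairwise loop is quadratic in the number of objects, which is acceptable for a rough prefilter over a handful of cameras; the pairs it returns are only candidates and still have to pass the trajectory comparison.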

Step 3: Comparison of "single-camera" trajectories

In the third step, we calculate the Pearson correlation coefficient between the corresponding coordinates of two “single-camera” trajectories. If the coefficient falls within the chosen significance interval, we assume that the two trajectories belong to the same object.
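The comparison could be sketched like this. The post does not say how the two coordinates are combined or what the significance interval is, so the example assumes that the X series and the Y series are correlated separately and that both coefficients must reach a hypothetical threshold `r_min`. The names `pearson` and `same_object` are likewise illustrative.

```python
import math


def pearson(u, v):
    """Pearson correlation coefficient of two equally long numeric sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    cov = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    su = math.sqrt(sum((a - mu) ** 2 for a in u))
    sv = math.sqrt(sum((b - mv) ** 2 for b in v))
    if su == 0 or sv == 0:
        return 0.0  # undefined for a constant series; treated here as "no evidence"
    return cov / (su * sv)


def same_object(track_a, track_b, r_min=0.9):
    """Decide whether two tracks [(X, Y), ...] sampled at the same times match.

    Assumption: both the X and the Y correlation must be at least r_min.
    """
    xa, ya = zip(*track_a)
    xb, yb = zip(*track_b)
    return pearson(xa, xb) >= r_min and pearson(ya, yb) >= r_min


cam1 = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]
cam2 = [(0.1, 0.0), (1.1, 0.9), (2.0, 2.1), (3.1, 3.0)]   # same path, small noise
other = [(3.0, 0.0), (2.0, 1.0), (1.0, 2.0), (0.0, 3.0)]  # moving the opposite way in X
print(same_object(cam1, cam2), same_object(cam1, other))
```

Correlation measures whether two trajectories change together, not whether they are close, which is why it complements the distance prefilter of step 2. One limitation of this particular sketch is that an object moving along a single map axis has an almost constant second coordinate, for which the coefficient is dominated by noise.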

Step 4: Merging trajectories

In the fourth step, we merge the “single-camera” trajectories into “multi-camera” ones. In the overlap area identified in step 3, we compute the average trajectory of the object. If the camera coverage areas do not overlap, the two trajectories are “stitched” together: the coordinates in the blind zone are interpolated from the boundary coordinates observed by each camera.
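Both cases can be sketched in a few lines. The example assumes that the tracks have already been resampled to a shared time grid and stored as dictionaries `{t: (X, Y)}`, and that the blind zone is filled by linear interpolation, which the post does not specify. The names `merge_tracks` and `fill_gap` are hypothetical.

```python
def merge_tracks(a, b):
    """Merge two tracks {t: (X, Y)} on a shared time grid.

    Where both cameras saw the object, the positions are averaged;
    elsewhere the single available observation is kept.
    """
    merged = {}
    for t in sorted(set(a) | set(b)):
        if t in a and t in b:
            merged[t] = ((a[t][0] + b[t][0]) / 2, (a[t][1] + b[t][1]) / 2)
        else:
            merged[t] = a[t] if t in a else b[t]
    return merged


def fill_gap(p_exit, p_entry, times):
    """Interpolate positions across a blind zone.

    p_exit is the last sample (t, X, Y) seen by one camera, p_entry the first
    sample seen by the next. Assumption: straight-line motion in between.
    """
    t0, x0, y0 = p_exit
    t1, x1, y1 = p_entry
    return {t: (x0 + (x1 - x0) * (t - t0) / (t1 - t0),
                y0 + (y1 - y0) * (t - t0) / (t1 - t0)) for t in times}


cam1 = {0: (0.0, 0.0), 1: (1.0, 0.0), 2: (2.0, 0.2)}
cam2 = {2: (2.2, 0.0), 3: (3.0, 0.0)}
print(merge_tracks(cam1, cam2))                          # t = 2 is averaged
print(fill_gap((4, 4.0, 0.0), (8, 8.0, 2.0), [5, 6, 7]))  # blind zone from t = 4 to t = 8
```

A plain average treats both cameras as equally reliable. Weighting each observation, for example by the object's distance from the camera, would be a natural refinement, but nothing in the post indicates that the original algorithm does this.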

The result is a generalized trajectory of the object on the map, built by multi-camera video analytics.

Conclusion

From a practical point of view, the multi-camera tracking algorithm we developed makes it possible to:

- improve target detection accuracy and reduce the number of false alarms by correlating the video analytics metadata of adjacent television and thermal imaging cameras;

- compare the images of a tracked target observed simultaneously by television and thermal imaging cameras;

- eliminate repeated video analytics triggers when a target moves from the observation area of one camera into that of another;

- display the complete trajectory of a person's movement on the map of the protected site, based on the video analysis results from all cameras at once;

- apply rules to the multi-camera trajectory on the map for more accurate recognition of human behavior and events;

- automatically select the best viewing angle on a person as they move from camera to camera.

In the course of the research, we set up an experimental multi-camera tracking zone with 9 cameras and obtained a generalized trajectory of a target from the data of several cameras. Evaluating the effectiveness and accuracy of the algorithm is a task for future research.

Additional Information

See also the following publications on the Synesis website:

- Algorithms of target tracking in extended object security systems

- Algorithm of multi-camera tracking using data from a video camera and a thermal imager

- Tracking objects occluded by moving and stationary obstacles

- The future of video surveillance systems: multi-camera tracking

Source: https://habr.com/ru/post/117746/
