Accelerating Visual Studio, Part II: Compilation Experiments

For the past 10 years my main working tool has been Microsoft Visual Studio. It is a great IDE (at least for C++ and C#), and I know of nothing better for Windows desktop development. Nevertheless, one of its drawbacks is well known: performance drops on large solutions. I am currently working on a solution of 19 projects (about 4,000 files, 350,000 lines of code), and compilation and other overhead eat up a fair amount of time. That is why I set out to conduct a large-scale study of ways to speed up Visual Studio, separating myths from reality.

I'll say right away that in the end I managed to reduce the compilation time of the solution from 4 minutes 24 seconds to less than one minute. Details below.

On your marks!

At first I was going to build a separate test machine with a "clean" OS and only one installed program, Visual Studio. But then I decided that testing a spherical horse in a vacuum would not be entirely fair. One way or another, a programmer's computer will have a browser, antivirus, file manager, archiver, text editor, etc. installed, which means the Studio has to work alongside all of them. So I tested it in exactly that mode, on a regular developer's computer (although, for the sake of a clean experiment, I reinstalled the operating system and installed the latest versions of the programs I use for work).


I put together the set of experiments from advice on Stack Overflow, RSDN, the MSDN forums and Google search results, plus a few ideas of my own.

The metric is the time it takes to fully build the solution. Before each experiment, the entire project folder is deleted, the code is checked out again from the repository, and Visual Studio is restarted. Each experiment is repeated three times, and the result is the average. After each experiment, the changes are rolled back.
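The timing part of this procedure is easy to script. A minimal sketch, assuming Python 3 on the build machine: it runs a build command several times and returns the mean wall-clock time. The `devenv MySolution.sln /Rebuild Debug` command line in the docstring uses a hypothetical solution name, and the clean checkout and Visual Studio restart between runs are not covered.

```python
import statistics
import subprocess
import sys
import time


def average_build_time(command, runs=3):
    """Run `command` `runs` times and return the mean wall-clock time in seconds.

    `command` is an argument list, e.g. (hypothetical solution name):
    ["devenv", "MySolution.sln", "/Rebuild", "Debug"]
    """
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        # check=True raises CalledProcessError if the build fails,
        # so a broken build is never reported as a fast one.
        subprocess.run(command, check=True)
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations)


if __name__ == "__main__":
    # Stand-in command so the sketch runs anywhere; replace it with a real build.
    print(f"{average_build_time([sys.executable, '-c', 'pass']):.2f} s")
```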

If anyone is interested in the hardware configuration of my computer, here it is:

+ hard drive WD 500 GB, 7200 RPM, 16 MB cache

+ Windows 7 Professional 32-bit with all available updates

+ Visual Studio 2010 Professional with Service Pack 1

In Windows, the maximum performance power plan is enabled, and Aero and all animations are disabled.

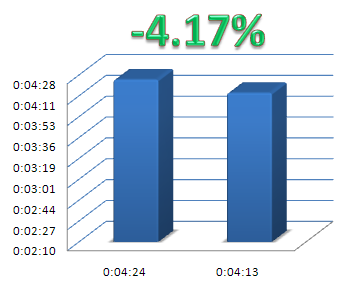

Initial compilation time of my solution: 4 minutes 24 seconds
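Every result below is reported relative to this 4:24 baseline, as a relative change: (t - 264 s) / 264 s × 100%. A few lines of Python reproduce the percentages; the helper names are my own.

```python
def to_seconds(mm_ss):
    """Convert a "minutes:seconds" string such as "4:24" to seconds."""
    minutes, seconds = mm_ss.split(":")
    return int(minutes) * 60 + int(seconds)


def percent_change(result, baseline="4:24"):
    """Relative change of `result` against `baseline`, in percent.

    Negative values mean the build got faster.
    """
    base = to_seconds(baseline)
    return round((to_seconds(result) - base) / base * 100, 2)


print(percent_change("4:13"))  # temporary files on RamDrive → -4.17
print(percent_change("2:38"))  # /MP → -40.15
```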

Go!

Temporary files on RamDrive

It is believed that the slowest operations during compilation are those involving disk access. This time can be reduced by placing temporary files on a RamDrive.

Result: 4 minutes 13 seconds, or -4.17% compile time.

Conclusion: there is a performance boost, albeit a small one. If you have enough RAM, this tip is worth applying.
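One way to send a build's temporary files to a RAM drive without changing system-wide settings is to override the temp-directory variables for the build process only. A sketch, assuming the RAM drive is mounted as `R:` (hypothetical path) and that the build tools honor the standard `TEMP` and `TMP` variables; the projects' intermediate (obj) directories are set separately in the project settings and are not covered here.

```python
import os
import subprocess


def env_with_temp_dir(temp_dir, base_env=None):
    """Return a copy of the environment with TEMP and TMP pointing at temp_dir."""
    env = dict(os.environ if base_env is None else base_env)
    env["TEMP"] = temp_dir
    env["TMP"] = temp_dir
    return env


def build_with_temp_dir(command, temp_dir=r"R:\Temp"):
    """Run a build command with its temp files redirected.

    The default R:\\Temp is a hypothetical RAM drive location.
    """
    os.makedirs(temp_dir, exist_ok=True)
    subprocess.run(command, env=env_with_temp_dir(temp_dir), check=True)
```

Because only the child process sees the modified environment, the rest of the system keeps using the regular temp folder.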

The whole project on RamDrive

If moving temporary files to RamDrive sped up compilation a little, we can probably do even better by moving the entire solution there (it is, after all, more than 4,000 files).

Result: 3 minutes 47 seconds, or -14.02% compile time.

Conclusion: at first this experiment seemed a bit silly to me, since keeping source code in RAM is not the best idea (what if the power goes out?). But uninterruptible power supplies exist, as do RamDrive versions such as the one from QSoft (which automatically duplicates modified files from the RamDrive to the hard disk), and together they convinced me that this option is viable. You just need enough RAM (and, ideally, a 64-bit OS).
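The QSoft feature mentioned above copies modified files from the RAM drive back to the hard disk. The sketch below is not QSoft's implementation, only a simplified one-way mirror under the assumption that both roots are ordinary directories: it copies every file that is missing on the disk side or has a newer modification time on the RAM drive side. Deletions are not propagated.

```python
import os
import shutil


def mirror_modified(src_root, dst_root):
    """Copy files under src_root that are new or newer than their copy in dst_root.

    Returns the list of copied paths, relative to src_root.
    """
    copied = []
    for dirpath, _dirnames, filenames in os.walk(src_root):
        rel_dir = os.path.relpath(dirpath, src_root)
        target_dir = os.path.join(dst_root, rel_dir)
        os.makedirs(target_dir, exist_ok=True)
        for name in filenames:
            src = os.path.join(dirpath, name)
            dst = os.path.join(target_dir, name)
            if not os.path.exists(dst) or os.path.getmtime(src) > os.path.getmtime(dst):
                # copy2 also copies the modification time, so an unchanged
                # file is not copied again on the next pass.
                shutil.copy2(src, dst)
                copied.append(os.path.normpath(os.path.join(rel_dir, name)))
    return copied
```

Run periodically, for example `mirror_modified(r"R:\MySolution", r"D:\Backup\MySolution")` (both paths hypothetical), it keeps a disk copy that lags the RAM drive by at most one interval.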

The whole project on a flash drive

Continuing the topic of file access speed, and given that USB drives handle random access more efficiently than hard disks, let's put the entire project on a USB flash drive and compile it there.

Result: 20 minutes 5 seconds, or +356.44% compile time.

Conclusion: the most disastrous experiment. A flash drive cannot cope with the flood of I/O operations that happen during compilation.

Enabling the ReadyBoost feature in Windows

Microsoft promotes this feature specifically for improving performance when working with a large number of relatively small data blocks, which is exactly our case. Let's try it.

Result: 4 minutes 17 seconds, or -2.65% compile time.

Conclusion: a perfectly reasonable way to speed things up. Apart from having to plug in a flash drive once and configure ReadyBoost, it has no drawbacks, and it does give some performance boost.

Changing the number of projects compiled in parallel

On installation, Visual Studio sets this number to the total number of processor cores in your PC. Still, we can try changing it (this is done in the VS options for C++ projects) and measure the effect. My computer has a 4-core processor, so the initial value was four.

Result:

6 projects compiled in parallel: 4 minutes 34 seconds, or +3.79% compile time

2 projects compiled in parallel: 4 minutes 21 seconds, or -1.14% compile time

Conclusion: from the start I expected that increasing the number of projects compiled in parallel would yield no gain (and that was the case). But why reducing it to two gave a small improvement is not entirely clear to me. Perhaps it is just statistical noise, or perhaps when compiling 4 projects at once, Studio loses time waiting on their dependencies, which happens less often with just two. If anyone has thoughts on this, please share them in the comments.
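The dependency-waiting hypothesis can be explored with a toy model. A sketch under strong simplifying assumptions: each project has a fixed build time, a project starts only after all of its dependencies have finished, and free build slots take ready projects in list order. The project names and durations are made up. The model ignores disk and CPU contention, so it can show slots sitting idle on a dependency chain, but it cannot reproduce the small 2-versus-4 speed-up, which (if real) would come from exactly that contention.

```python
import heapq


def simulate_build(durations, deps, workers):
    """Simulate building projects on `workers` parallel slots.

    durations: {project: build time}; deps: {project: [projects it depends on]}.
    Returns (total elapsed time, total time the slots spent idle).
    Assumes the dependency graph is acyclic.
    """
    remaining = {p: set(deps.get(p, ())) for p in durations}
    ready = [p for p in durations if not remaining[p]]
    running = []  # min-heap of (finish time, project)
    now = 0.0
    busy = 0.0
    done = 0
    while done < len(durations):
        # Fill free slots with projects whose dependencies are all built.
        while ready and len(running) < workers:
            p = ready.pop(0)
            heapq.heappush(running, (now + durations[p], p))
            busy += durations[p]
        # Advance time to the next project that finishes.
        now, finished = heapq.heappop(running)
        done += 1
        for q in durations:
            if finished in remaining[q]:
                remaining[q].discard(finished)
                if not remaining[q]:
                    ready.append(q)
    return now, workers * now - busy


# Hypothetical solution: two libraries depend on a core library, the app on both.
durations = {"core": 4, "ui": 2, "net": 2, "app": 1}
deps = {"ui": ["core"], "net": ["core"], "app": ["ui", "net"]}
for n in (1, 2, 4):
    print(n, simulate_build(durations, deps, n))
```

With four slots the elapsed time is the same as with two, because the chain core → ui/net → app leaves no more than two projects buildable at once; the extra slots only add idle time.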

Disabling build output in the Output window

Less text output during compilation should mean a faster build.

Result: 4 minutes 22 seconds, or -0.76% compile time.

Conclusion: the gain is so small it is not even worth commenting on. It may be real, or it may be random.

Emptying the Recycle Bin

I read this tip on Stack Overflow. The argument was that compilation creates and deletes many small files, and file deletion in Windows is slower when the Recycle Bin is full. Since I had carried out all the previous experiments with an empty Recycle Bin, I had to do the reverse experiment: put 5,000 files totaling 2 GB into it.

Result: 4 minutes 23 seconds, a difference of about 0.38% from the baseline.

Conclusion: the compile time is unchanged. The theory does not hold up.

Compiler switch /MP

The /MP switch also enables parallel compilation, but of files within each project rather than of projects within the solution.

Result: 2 minutes 38 seconds, or -40.15% compile time.

Conclusion: this is one of the most significant gains of all the experiments. Of course, the gain is this large mainly because my processor has four cores, but such processors (and ones with even more cores) will soon be the norm in any computer, so it makes sense to turn the option on. When you enable it, the compiler honestly warns that it is incompatible with the /Gm (Enable Minimal Rebuild) switch, which is alarming at first: you start to think that from now on any change to any file will recompile the whole solution. Nothing of the sort! After you change one code file, only that file is recompiled, just as before, not the entire solution. All that /Gm affects is which algorithm is used to track dependencies between code files and header files in a project (more details). Both algorithms work well, and the significant speed-up from enabling /MP far outweighs the downside of disabling /Gm.
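If you drive cl.exe directly (for example from a custom build script) rather than through the project property pages, the switch change looks like this. A minimal sketch with a helper name of my own: it adds /MP (optionally /MP<n>, where n caps the number of compiler processes; without n, cl uses the number of processors) and, because the two switches are incompatible, replaces any /Gm with /Gm-. Source file names in the test are hypothetical.

```python
def with_multiprocess_compile(cl_args, processes=None):
    """Return cl.exe arguments with /MP enabled and minimal rebuild disabled.

    Any existing /MP or /Gm switch (either / or - prefix) is removed first,
    then /MP[n] and /Gm- are prepended. All other arguments keep their order.
    """
    args = [a for a in cl_args if not a.startswith(("/Gm", "-Gm", "/MP", "-MP"))]
    mp = "/MP" if processes is None else f"/MP{processes}"
    return [mp, "/Gm-"] + args
```

Usage would be along the lines of `subprocess.run(["cl"] + with_multiprocess_compile(["/c", "/Gm", "a.cpp", "b.cpp"], 4), check=True)`, run from a Visual Studio command prompt.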

Removing the solution folder from the Windows search index

It is believed that changes to files in folders indexed by Windows Search increase compilation time.

Result: 4 minutes 24 seconds, no change in compile time.

Conclusion: either Windows indexing is implemented well enough not to slow down other programs' disk access, or its impact is minimal, or I was simply lucky and compilation never coincided with indexing.

Unity builds

I described this mechanism in the previous article.

Result: 3 minutes 24 seconds, or -22.73% compile time.

Conclusion: compile time drops significantly. I have already written about all the advantages and disadvantages of this technique, so you can decide for yourself whether to use it.
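A unity build compiles one .cpp file that #includes a group of the project's other .cpp files, so shared headers are parsed once per group instead of once per file. A minimal generator sketch; the function names and file names are hypothetical, and the original .cpp files must then be excluded from the build so they are not compiled twice.

```python
def unity_source(sources):
    """Return the text of a unity .cpp that #includes every listed source file."""
    lines = ["// Generated unity build file, do not edit by hand."]
    lines += [f'#include "{src}"' for src in sources]
    return "\n".join(lines) + "\n"


def write_unity_file(path, sources):
    """Write the unity file to `path` (e.g. a hypothetical "unity_core.cpp")."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(unity_source(sources))
```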

Closing unnecessary programs

Programs running alongside Studio consume memory and CPU time, so closing them can speed Studio up. I closed Skype, QIP, Dropbox, GTalk, DownloadMaster and the MySQL server.

Result: 4 minutes 15 seconds, or -3.41% compile time.

Conclusion: while compiling, you will have to do without other programs. No jokes and no porn while it compiles. Giving up all other programs completely is hardly possible for a developer, but you can create .bat files that switch all the extras off and on again, and use them now and then.
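Such .bat files can be generated from a list of programs. A sketch with hypothetical process image names and install paths: the "off" file uses `taskkill /IM <image> /F` (which force-closes the process without letting it save anything), and the "on" file uses `start "" "<path>"`, where the empty quoted string is the window title that `start` expects before a quoted program path.

```python
def kill_bat(image_names):
    """Text of a .bat file that force-closes the given processes with taskkill."""
    lines = ["@echo off"]
    lines += [f'taskkill /IM "{name}" /F' for name in image_names]
    return "\r\n".join(lines) + "\r\n"


def start_bat(program_paths):
    """Text of a .bat file that starts the given programs again."""
    lines = ["@echo off"]
    # The first quoted argument of `start` is the window title, hence the "".
    lines += [f'start "" "{path}"' for path in program_paths]
    return "\r\n".join(lines) + "\r\n"
```

For example, `kill_bat(["Skype.exe", "Dropbox.exe"])` written to `extras_off.bat` and a matching `start_bat([...])` written to `extras_on.bat` (all names hypothetical).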

Disabling the antivirus

If you have an antivirus installed on your system, it is worth checking how much it slows down the build.

Result: 3 minutes 32 seconds, or -19.70% compile time.

Conclusion: a surprising result. For some reason I was sure that all these *.cpp, *.h and *.obj files were completely ignored by the antivirus and that only the compiled executables would get its attention, which would not slow things down much. Yet the fact remains: almost a minute saved.

Defragmenting the hard drive

File operations are faster on a defragmented disk, and compilation involves a huge number of file operations. I deliberately left this experiment for last, because defragmentation cannot be undone and I wanted to keep the experiments as independent as possible.

Result: 4 minutes 8 seconds, or -6.06% compile time.

Conclusion: practice agrees with theory. Schedule defragmentation in the Task Scheduler, and run it often.
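Scheduling can also be done from the command line with `schtasks`. A sketch that builds the argument list for a weekly defragmentation task; the task name, day and time are hypothetical defaults, the command must be run from an elevated prompt, and it is worth checking first whether your Windows installation already has a defragmentation task scheduled.

```python
def defrag_task_command(drive="C:", day="SUN", start_time="03:00",
                        task_name="WeeklyDefrag"):
    """Arguments for schtasks.exe that schedule a weekly defrag of `drive`.

    Pass the result to subprocess.run(..., check=True) on Windows.
    """
    return [
        "schtasks", "/Create",
        "/TN", task_name,            # task name as shown in Task Scheduler
        "/TR", f"defrag {drive}",    # command the task runs
        "/SC", "WEEKLY", "/D", day,  # once a week, on the given day
        "/ST", start_time,           # start time, HH:MM
        "/RU", "SYSTEM",             # run without a logged-on user
    ]
```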

Approaches that would probably help, but that I could not try

Switch to 64-bit Windows

This would presumably give some performance gain, but porting our project to x64 is, for project-specific reasons, not a high priority and has not been done yet. So there is nothing to test.

Upgrading the processor or memory, or replacing the HDD with an SSD or RAID

I must say my test machine is not bad at all, and a planned upgrade is still a long way off. We work with what we have. According to reviews on the Internet, installing an SSD has the greatest impact on compile time.

Moving rarely changed projects into a separate solution

This has already been done. If your project does not do this yet, be sure to.

Xoreax IncrediBuild or equivalents

Distributing compilation across computers is a fairly radical step. It requires buying special software, serious configuration and some reworking of the build process. But in very large projects it may be the only viable option. The Xoreax IncrediBuild website has data on productivity gains, customer stories and a lot of

That's all I wanted to say about speeding up the compilation of solutions in Visual Studio. In the next article I will give some tips on speeding up the IDE itself.

Source: https://habr.com/ru/post/117670/
