Fast multiplication of polynomials using Fourier transform is just

Good evening.

This post is dedicated to the fast Fourier transform. Direct and inverse transformations (in complex numbers) will be considered. In the next part, I plan to consider their applications in some problems of Olympiad programming (in particular, one task about the “similarity” of strings), and also to tell about the implementation of the conversion in integers.

An FFT is an algorithm that calculates values of a polynomial of degree n = 2 k at some n points in O ( n ⋅log n ) time (the “naive” method performs the same task in O ( n 2 ) time). At the same time, you can perform the inverse transform. Since adding, subtracting and multiplying arrays of numbers is much easier than polynomials (especially multiplying), FFT is often used to speed up calculations with polynomials and long numbers.

First, let's define what a polynomial is:

P ( x ) = a 0 + x a 1 + x 2 a 2 + x 3 a 3 + ... + x n - 1 a n - 1

If you are familiar with complex numbers, you can skip this clause, otherwise, here is a brief definition:

x = a + i b , where i 2 = - 1

Here a is called the real ( Real ) part, and b - the imaginary ( Imaginary ). In these numbers, as you can easily see, you can extract the root from negative (and indeed any) numbers - this is very convenient when working with polynomials - as follows from the main theorem of the algebra, each polynomial of degree n has exactly n complex roots (taking into account the multiplicity ).

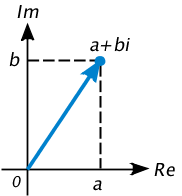

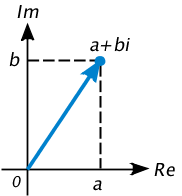

It is also very convenient to represent them as points on a plane:

Another remarkable property of complex numbers is that they can be represented as x = (cosα + i sinα) r , where α is the polar angle of the "number" (called the argument ), and r is the distance from zero to it ( module ). And when multiplying two numbers:

a = (cosα + i ⋅sinα) r a

b = (cosβ + i ⋅sinβ) r b

a b = (cosα + i ⋅sinα) (cosβ + i ⋅sinβ) r a r b

a b = (cosα⋅cosβ-sinα⋅sinβ + i (sinα⋅cosβ + cosβ⋅sinα)) r a r b

a b = (cos (α + β) + i ⋅ sin (α + β)) r a r b

Their modules are multiplied, and the arguments are added.

Now let's understand how the complex roots of the nth degree of 1 look like. Let x n = 1 , then its module is obviously equal to one, and n ⋅ arg x = 2 π k , where k is an integer. This means that after n multiplications of a number by itself (that is, raising to the nth power), its argument will become “multiple” by 2 π (360 degrees).

Recall the formula of a number, if the argument and the module are known, we get:

α = 2 π⋅ x / n , where 0 x

ω i = cosα + i ⋅sinα

Those. if we draw, we just get the points on the circle at regular intervals:

Please note three things that we will actively use (without them, nothing happens):

ω a ⋅ ω b = ω ( a + b ) mod n

ω 0 + ω 1 + ω 2 + ... + ω n - 1 = 0

ω 0 n / 2 = ω 2 n / 2 = ω 4 n / 2 = ... = 1 (for even n )

Because of these properties, it is at these points that we will consider the value of the polynomial. Of course, the results will not necessarily be real, so the program will need to work with complex numbers.

The proof is very simple: let φ = ω 0 + ω 1 + .... Multiply both sides by ω 1 (! = 1). Because ω i ⋅ω 1 = ω i + 1 , then φ⋅ω 1 = ω 1 + ω 2 + ... + ω n - 1 + ω 0 . The sum does not change from the permutation of the terms, therefore φ = φ⋅ω 1 , respectively φ (ω 1 - 1 ) = 0 . Because ω 1 ! = 1, then φ = 0 .

We assume that our polynomial has degree n = 2 k . If not, we add the highest coefficients with zeros to the nearest power of two.

The basic idea of FFT is very simple:

Let be:

A ( x ) = a 0 + x a 2 + x 2 a 4 + ... + x n / 2 - 1 a n - 2 (even coefficients P )

B ( x ) = a 1 + x a 3 + x 2 a 5 + ... + x n / 2 - 1 a n - 1 (odd coefficients P ).

Then P ( x ) = A ( x 2 ) + x ⋅ B ( x 2 ).

Now apply the principle of "divide and conquer": to calculate the values of P at n points (ω 0 , ω 1 , ... ), count the values of A and B recursively at n / 2 points (ω 0 , ω 2 , ... ) . Now the value of P (ω i ) is quite simple to restore:

P (ω i ) = A (ω 2 i ) + ω i ⋅ B (ω 2 i )

If we denote for ξ i = ω 2 i the points at which we consider the values of a polynomial of degree n / 2 , the formula will change:

P (ω i ) = A (ξ i ) + ω i ⋅ B (ξ i )

It can already be driven into the program, not forgetting that i takes values from 0 to n - 1 , and ξ i is defined only from 0 to n / 2 - 1 . Conclusion - it will be necessary to take i modulo n / 2 .

The running time is expressed by the recurrent formula T ( n ) = O ( n ) + 2 T ( n / 2 ). This is a fairly well-known relation and it is revealed in O ( n ⋅log 2 n ) (roughly speaking, the recursion depth is log 2 n levels, at each level, O ( n ) operations are performed in total for all calls).

Here is an example of an inefficient recursive FFT implementation:

You can add I / O and verify that your implementation is correct. For the polynomial P ( x ) = 4 + 3 x + 2 x 2 + x 3 + 0 x 4 + 0 x 5 + 0 x 6 + 0 x 7, the values should be as follows:

P ( w 0 ) = ( 1 0. 0 0 0 , 0. 0 0 0 )

P ( w 1 ) = ( 5. 4 1 4 , 4. 8 2 8 )

P ( w 2 ) = ( 2. 0 0 0 , 2. 0 0 0 )

P ( w 3 ) = ( 2. 5 8 6 , 0. 8 2 8 )

P ( w 4 ) = ( 2. 0 0 0 , 0. 0 0 0 )

P ( w 5 ) = ( 2. 5 8 6 , - 0. 8 2 8 )

P ( w 6 ) = ( 2. 0 0 0 , - 2. 0 0 0 )

P ( w 7 ) = ( 5. 4 1 4 , - 4. 8 2 8 )

If so, you can note the time of the recursive and naive method on large tests.

I have a polynomial of degree 2 12, this implementation runs 62 ms, naive - 1800 ms. The difference is obvious.

In order to make the procedure non-recursive, you have to think. The easiest way, it seems to me, is to draw an analogy with MergeSort (merge sort) and draw a picture that shows all the recursive calls:

As we see, you can make one array, fill it initially with the values fft ( a 0 ), fft ( a 4 ), fft ( a 2 ), .... As it is easy to understand, the numbers a i are the numbers “ 0 , 1 , 2 , 3 , ... ” “expanded” in the binary representation. For example, 1 1 0 = 0 0 1 2 , 4 1 0 = 1 0 0 2 or 6 = 1 1 0 2 , 3 = 0 1 1 2 . This can be understood as follows: when descending to the lower level of recursion, we have one more low-order bit (from the end). And with “normal” numbering, the bits are determined from the beginning. Therefore, you need to "expand" the number. This can be done “head on” for O ( n ⋅log 2 n ), or it can be dynamically programmed for O ( n ) using the following algorithm:

Now we come up with an algorithm that allows us to get a step higher from the “step”. Store all values from the previous step, we will be in the same array. As can be clearly seen in the figure, it is necessary to process the data in blocks of k , and at the beginning k = 1 , and then with each step it doubles. We process two blocks of length k and get one block of length 2 k at the output. Let's take an example to analyze how it was done recursively, recall the formula from the beginning of the article and repeat:

The arguments of the procedure for merging two blocks will be two vectors (naturally, by reference, the original and the result), the number of the starting element of the first block (the second comes right after) and the length of the blocks. It could of course be done with iterators — for more STL, but we will still carry this procedure inside the core for brevity.

And the basic conversion procedure:

At the polynomial of degree 2 1 6, the recursion works 640 ms, without recursion - 500. There is an improvement, but the program can be made even faster. We use the property that ω i = -ω i + n / 2 . This means that you can not count twice the root and a i ⋅ ω j - sine, cosine and multiplication of complex numbers are very costly operations.

The transition with this optimization is called “butterfly transformation”. The program began to work 260 ms. To consolidate success, let's assume all the roots of 1 and write them into an array:

Now the speed is 78 ms. Optimization of 8 times compared with the first implementation!

At the moment, the entire conversion code takes about 55 lines. Not a hundred, but this is quite a lot - it can be shorter. First, get rid of a heap of unnecessary variables and operations in fft_merge :

Now you can move the cycle from fft_merge to the main procedure (you can also remove p2 , since p2 = p1 + len - for me this also gave a small time gain. What is curious, if you remove p1 = pdest , then I personally have a time gain killed) :

As you can see, the conversion itself takes not so much - 17 lines. All the rest is the prerequisite of roots and reversal of numbers. If you are ready to save the code in exchange for the running time ( O ( n ⋅log 2 n ) instead of O ( n )), you can replace the 13 reversal lines with the following six:

As a result, the code now looks like this:

Obtaining the values of a polynomial in points is, of course, good, but the Fourier transform can do more — construct a polynomial by these values, moreover, in the same time! It turns out that if we apply the Fourier transform to an array of values as to the coefficients of a polynomial, then divide the result by n and invert the segment from 1 to n - 1 (numbering from 0 ), then we obtain the coefficients of the original polynomial.

The code here is extremely simple - everything is already written. I think you can handle it.

Suppose we apply the inverse transformation to the polynomial P ( x ) with coefficients v i (the original polynomial had coefficients a i ):

v i = a 0 + ω i a 1 + ω 2 i a 2 + ω 3 i a + ...

Let's look at the result of the conversion:

b i = v 0 + ω i v 1 + ω 2 i v 2 + ω 3 i v 3 + ...

Substitute the values of v j (remember that ω a ω b = ω a + b m o d n :

Now let's prove one remarkable fact: for x ≠ 0 , ω 0 + ω x + ω 2 x + ... + ω ( n - 1 ) x = 0 .

It is proved analogously to the fact that the sum of the roots is zero: we denote by φ the sum, multiply both sides by ω x and see what happens.

Now apply this fact to the calculation of the value of b i . Note that all lines except one, which contains a n - i , will be reset.

In this way:

b i = a n - i ⋅ (ω 0 + ω 0 + ω 0 + ω 0 + ... )

')

b i = a n - i ⋅ n

Q.E.D.

Generally speaking, I already talked a little about the application at the beginning of the article. In particular, now the multiplication of polynomials can be performed as follows:

It is easy to see that the running time of this program is O ( n ⋅log 2 n ) and the most time consuming operations are Fourier transforms. It can also be noted that if we need to calculate a more complex expression with two polynomials, then still we can perform only three transformations - addition and subtraction will also work in linear time. Unfortunately, the division is not so simple, because the polynomial may accidentally take the value 0 at any of the points. UPD2: do not forget that the degree of the product of two polynomials of degree n will be equal to 2n , so when entering you should add “extra” zero leading coefficients.

If you represent a number in decimal (or more) number system, as a polynomial with coefficients - numbers, the multiplication of long numbers can also be done very quickly.

And finally, the task I will discuss in the following post: you have two lines of the same length of the order of 1 0 5 from the letters A, T, G, C. It is required to find such a cyclic shift of one of the lines so that the maximum number of characters matches. Obviously a naive solution for O ( n 2 ), but there is a solution with the help of FFT.

Good luck!

UPD: Laid out the code entirely on pastebin

This post is dedicated to the fast Fourier transform. Direct and inverse transformations (in complex numbers) will be considered. In the next part, I plan to consider their applications in some problems of Olympiad programming (in particular, one task about the “similarity” of strings), and also to tell about the implementation of the conversion in integers.

An FFT is an algorithm that calculates values of a polynomial of degree n = 2 k at some n points in O ( n ⋅log n ) time (the “naive” method performs the same task in O ( n 2 ) time). At the same time, you can perform the inverse transform. Since adding, subtracting and multiplying arrays of numbers is much easier than polynomials (especially multiplying), FFT is often used to speed up calculations with polynomials and long numbers.

Definitions and applications

First, let's define what a polynomial is:

P ( x ) = a 0 + x a 1 + x 2 a 2 + x 3 a 3 + ... + x n - 1 a n - 1

Complex numbers

If you are familiar with complex numbers, you can skip this clause, otherwise, here is a brief definition:

x = a + i b , where i 2 = - 1

Here a is called the real ( Real ) part, and b - the imaginary ( Imaginary ). In these numbers, as you can easily see, you can extract the root from negative (and indeed any) numbers - this is very convenient when working with polynomials - as follows from the main theorem of the algebra, each polynomial of degree n has exactly n complex roots (taking into account the multiplicity ).

It is also very convenient to represent them as points on a plane:

Another remarkable property of complex numbers is that they can be represented as x = (cosα + i sinα) r , where α is the polar angle of the "number" (called the argument ), and r is the distance from zero to it ( module ). And when multiplying two numbers:

a = (cosα + i ⋅sinα) r a

b = (cosβ + i ⋅sinβ) r b

a b = (cosα + i ⋅sinα) (cosβ + i ⋅sinβ) r a r b

a b = (cosα⋅cosβ-sinα⋅sinβ + i (sinα⋅cosβ + cosβ⋅sinα)) r a r b

a b = (cos (α + β) + i ⋅ sin (α + β)) r a r b

Their modules are multiplied, and the arguments are added.

Complex roots of 1

Now let's understand how the complex roots of the nth degree of 1 look like. Let x n = 1 , then its module is obviously equal to one, and n ⋅ arg x = 2 π k , where k is an integer. This means that after n multiplications of a number by itself (that is, raising to the nth power), its argument will become “multiple” by 2 π (360 degrees).

Recall the formula of a number, if the argument and the module are known, we get:

α = 2 π⋅ x / n , where 0 x

ω i = cosα + i ⋅sinα

Those. if we draw, we just get the points on the circle at regular intervals:

Please note three things that we will actively use (without them, nothing happens):

ω a ⋅ ω b = ω ( a + b ) mod n

ω 0 + ω 1 + ω 2 + ... + ω n - 1 = 0

ω 0 n / 2 = ω 2 n / 2 = ω 4 n / 2 = ... = 1 (for even n )

Because of these properties, it is at these points that we will consider the value of the polynomial. Of course, the results will not necessarily be real, so the program will need to work with complex numbers.

Why the sum of the roots is zero

The proof is very simple: let φ = ω 0 + ω 1 + .... Multiply both sides by ω 1 (! = 1). Because ω i ⋅ω 1 = ω i + 1 , then φ⋅ω 1 = ω 1 + ω 2 + ... + ω n - 1 + ω 0 . The sum does not change from the permutation of the terms, therefore φ = φ⋅ω 1 , respectively φ (ω 1 - 1 ) = 0 . Because ω 1 ! = 1, then φ = 0 .

How does it work

We assume that our polynomial has degree n = 2 k . If not, we add the highest coefficients with zeros to the nearest power of two.

The basic idea of FFT is very simple:

Let be:

A ( x ) = a 0 + x a 2 + x 2 a 4 + ... + x n / 2 - 1 a n - 2 (even coefficients P )

B ( x ) = a 1 + x a 3 + x 2 a 5 + ... + x n / 2 - 1 a n - 1 (odd coefficients P ).

Then P ( x ) = A ( x 2 ) + x ⋅ B ( x 2 ).

Now apply the principle of "divide and conquer": to calculate the values of P at n points (ω 0 , ω 1 , ... ), count the values of A and B recursively at n / 2 points (ω 0 , ω 2 , ... ) . Now the value of P (ω i ) is quite simple to restore:

P (ω i ) = A (ω 2 i ) + ω i ⋅ B (ω 2 i )

If we denote for ξ i = ω 2 i the points at which we consider the values of a polynomial of degree n / 2 , the formula will change:

P (ω i ) = A (ξ i ) + ω i ⋅ B (ξ i )

It can already be driven into the program, not forgetting that i takes values from 0 to n - 1 , and ξ i is defined only from 0 to n / 2 - 1 . Conclusion - it will be necessary to take i modulo n / 2 .

The running time is expressed by the recurrent formula T ( n ) = O ( n ) + 2 T ( n / 2 ). This is a fairly well-known relation and it is revealed in O ( n ⋅log 2 n ) (roughly speaking, the recursion depth is log 2 n levels, at each level, O ( n ) operations are performed in total for all calls).

Write something

Here is an example of an inefficient recursive FFT implementation:

Slow FFT

#include <vector> #include <complex> using namespace std; typedef complex<double> cd; // STL- . double, sin cos typedef vector<cd> vcd; vcd fft(const vcd &as) { // 1 int n = as.size(); // - ? if (n == 1) return vcd(1, as[0]); vcd w(n); // for (int i = 0; i < n; i++) { double alpha = 2 * M_PI * i / n; w[i] = cd(cos(alpha), sin(alpha)); } // A B vcd A(n / 2), B(n / 2); for (int i = 0; i < n / 2; i++) { A[i] = as[i * 2]; B[i] = as[i * 2 + 1]; } vcd Av = fft(A); vcd Bv = fft(B); vcd res(n); for (int i = 0; i < n; i++) res[i] = Av[i % (n / 2)] + w[i] * Bv[i % (n / 2)]; return res; } You can add I / O and verify that your implementation is correct. For the polynomial P ( x ) = 4 + 3 x + 2 x 2 + x 3 + 0 x 4 + 0 x 5 + 0 x 6 + 0 x 7, the values should be as follows:

P ( w 0 ) = ( 1 0. 0 0 0 , 0. 0 0 0 )

P ( w 1 ) = ( 5. 4 1 4 , 4. 8 2 8 )

P ( w 2 ) = ( 2. 0 0 0 , 2. 0 0 0 )

P ( w 3 ) = ( 2. 5 8 6 , 0. 8 2 8 )

P ( w 4 ) = ( 2. 0 0 0 , 0. 0 0 0 )

P ( w 5 ) = ( 2. 5 8 6 , - 0. 8 2 8 )

P ( w 6 ) = ( 2. 0 0 0 , - 2. 0 0 0 )

P ( w 7 ) = ( 5. 4 1 4 , - 4. 8 2 8 )

If so, you can note the time of the recursive and naive method on large tests.

I have a polynomial of degree 2 12, this implementation runs 62 ms, naive - 1800 ms. The difference is obvious.

Getting rid of recursion

In order to make the procedure non-recursive, you have to think. The easiest way, it seems to me, is to draw an analogy with MergeSort (merge sort) and draw a picture that shows all the recursive calls:

As we see, you can make one array, fill it initially with the values fft ( a 0 ), fft ( a 4 ), fft ( a 2 ), .... As it is easy to understand, the numbers a i are the numbers “ 0 , 1 , 2 , 3 , ... ” “expanded” in the binary representation. For example, 1 1 0 = 0 0 1 2 , 4 1 0 = 1 0 0 2 or 6 = 1 1 0 2 , 3 = 0 1 1 2 . This can be understood as follows: when descending to the lower level of recursion, we have one more low-order bit (from the end). And with “normal” numbering, the bits are determined from the beginning. Therefore, you need to "expand" the number. This can be done “head on” for O ( n ⋅log 2 n ), or it can be dynamically programmed for O ( n ) using the following algorithm:

- Run through the cycle from 0 to n - 1

- We will store and dynamically recalculate the number of the highest single bit of the number. It changes only when the current number is a power of two: it increases by 1.

- When we know the most significant bit of a number, it’s easy to invert the whole number: “cut off” the most significant bit (XOR), turn over the remainder (already calculated value) and add the “cut off” unit

Now we come up with an algorithm that allows us to get a step higher from the “step”. Store all values from the previous step, we will be in the same array. As can be clearly seen in the figure, it is necessary to process the data in blocks of k , and at the beginning k = 1 , and then with each step it doubles. We process two blocks of length k and get one block of length 2 k at the output. Let's take an example to analyze how it was done recursively, recall the formula from the beginning of the article and repeat:

The arguments of the procedure for merging two blocks will be two vectors (naturally, by reference, the original and the result), the number of the starting element of the first block (the second comes right after) and the length of the blocks. It could of course be done with iterators — for more STL, but we will still carry this procedure inside the core for brevity.

Block combination

void fft_merge(const vcd &src, vcd &dest, int start, int len) { int p1 = start; // int en1 = start + len; // int p2 = start + len; // int en2 = star + len * 2; // int pdest = start; // int nlen = len * 2; // for (int i = 0; i < nlen; i++) { double alpha = 2 * M_PI * i / nlen; cd w = cd(cos(alpha), sin(alpha)); // dest[pdest] = src[p1] + w * src[p2]; if (++p1 >= en1) p1 = start; if (++p2 >= en2) p2 = start + len; } } And the basic conversion procedure:

vcd fft(const vcd &as) { int n = as.size(); int k = 0; // n while ((1 << k) < n) k++; vi rev(n); rev[0] = 0; int high1 = -1; for (int i = 1; i < n; i++) { if ((i & (i - 1)) == 0) // . i , i-1 . high1++; rev[i] = rev[i ^ (1 << high1)]; // rev[i] |= (1 << (k - high1 - 1)); // } vcd cur(n); for (int i = 0; i < n; i++) cur[i] = as[rev[i]]; for (int len = 1; len < n; len <<= 1) { vcd ncur(n); for (int i = 0; i < n; i += len * 2) fft_merge(cur, ncur, i, len); cur.swap(ncur); } return cur; } Optimization

At the polynomial of degree 2 1 6, the recursion works 640 ms, without recursion - 500. There is an improvement, but the program can be made even faster. We use the property that ω i = -ω i + n / 2 . This means that you can not count twice the root and a i ⋅ ω j - sine, cosine and multiplication of complex numbers are very costly operations.

fft_merge ()

for (int i = 0; i < len; i++) { double alpha = 2 * M_PI * i / nlen; cd w = cd(cos(alpha), sin(alpha)); // cd val = w * src[p2]; dest[pdest] = src[p1] + val; dest[pdest + len] = src[p1] - val; pdest++; if (++p1 >= en1) p1 = start; if (++p2 >= en2) p2 = start + len; } The transition with this optimization is called “butterfly transformation”. The program began to work 260 ms. To consolidate success, let's assume all the roots of 1 and write them into an array:

fft_merge ()

int rstep = roots.size() / nlen; // for (int i = 0; i < len; i++) { cd w = roots[i * rstep]; cd val = w * src[p2]; fft ()

roots = vcd(n); for (int i = 0; i < n; i++) { double alpha = 2 * M_PI * i / n; roots[i] = cd(cos(alpha), sin(alpha)); } Now the speed is 78 ms. Optimization of 8 times compared with the first implementation!

Code optimization

At the moment, the entire conversion code takes about 55 lines. Not a hundred, but this is quite a lot - it can be shorter. First, get rid of a heap of unnecessary variables and operations in fft_merge :

void fft_merge(const vcd &src, vcd &dest, int start, int len) { int p1 = start; //int en1 = start + len; // , . int p2 = start + len; //int en2 = start + len * 2; // int pdest = start; //int nlen = len * 2; // //int rstep = roots.size() / nlen; int rstep = roots.size() / (len * 2); for (int i = 0; i < len; i++) { //cd w = roots[i * rstep]; // //cd val = w * src[p2]; cd val = roots[i * rstep] * src[p2]; dest[pdest] = src[p1] + val; dest[pdest + len] = src[p1] - val; pdest++, p1++, p2++; //if (++p1 >= en1) p1 = start; // 2len, len, //if (++p2 >= en2) p2 = start + len; // } } Now you can move the cycle from fft_merge to the main procedure (you can also remove p2 , since p2 = p1 + len - for me this also gave a small time gain. What is curious, if you remove p1 = pdest , then I personally have a time gain killed) :

fft ()

for (int len = 1; len < n; len <<= 1) { vcd ncur(n); int rstep = roots.size() / (len * 2); for (int pdest = 0; pdest < n;) { int p1 = pdest; for (int i = 0; i < len; i++) { cd val = roots[i * rstep] * cur[p1 + len]; ncur[pdest] = cur[p1] + val; ncur[pdest + len] = cur[p1] - val; pdest++, p1++; } pdest += len; } cur.swap(ncur); } As you can see, the conversion itself takes not so much - 17 lines. All the rest is the prerequisite of roots and reversal of numbers. If you are ready to save the code in exchange for the running time ( O ( n ⋅log 2 n ) instead of O ( n )), you can replace the 13 reversal lines with the following six:

At the beginning of the fft () procedure

vcd cur(n); for (int i = 0; i < n; i++) { int ri = 0; for (int i2 = 0; i2 < k; i2++) // ri = (ri << 1) | !!(i & (1 << i2)); // cur[i] = as[ri]; } As a result, the code now looks like this:

vcd fft(const vcd &as) { int n = as.size(); int k = 0; // n while ((1 << k) < n) k++; vector<int> rev(n); rev[0] = 0; int high1 = -1; for (int i = 1; i < n; i++) { if ((i & (i - 1)) == 0) // . i , i-1 . high1++; rev[i] = rev[i ^ (1 << high1)]; // rev[i] |= (1 << (k - high1 - 1)); // } vcd roots(n); for (int i = 0; i < n; i++) { double alpha = 2 * M_PI * i / n; roots[i] = cd(cos(alpha), sin(alpha)); } vcd cur(n); for (int i = 0; i < n; i++) cur[i] = as[rev[i]]; for (int len = 1; len < n; len <<= 1) { vcd ncur(n); int rstep = roots.size() / (len * 2); for (int pdest = 0; pdest < n;) { int p1 = pdest; for (int i = 0; i < len; i++) { cd val = roots[i * rstep] * cur[p1 + len]; ncur[pdest] = cur[p1] + val; ncur[pdest + len] = cur[p1] - val; pdest++, p1++; } pdest += len; } cur.swap(ncur); } return cur; } Inverse transform

Obtaining the values of a polynomial in points is, of course, good, but the Fourier transform can do more — construct a polynomial by these values, moreover, in the same time! It turns out that if we apply the Fourier transform to an array of values as to the coefficients of a polynomial, then divide the result by n and invert the segment from 1 to n - 1 (numbering from 0 ), then we obtain the coefficients of the original polynomial.

The code here is extremely simple - everything is already written. I think you can handle it.

Evidence

Suppose we apply the inverse transformation to the polynomial P ( x ) with coefficients v i (the original polynomial had coefficients a i ):

v i = a 0 + ω i a 1 + ω 2 i a 2 + ω 3 i a + ...

Let's look at the result of the conversion:

b i = v 0 + ω i v 1 + ω 2 i v 2 + ω 3 i v 3 + ...

Substitute the values of v j (remember that ω a ω b = ω a + b m o d n :

Now let's prove one remarkable fact: for x ≠ 0 , ω 0 + ω x + ω 2 x + ... + ω ( n - 1 ) x = 0 .

It is proved analogously to the fact that the sum of the roots is zero: we denote by φ the sum, multiply both sides by ω x and see what happens.

Now apply this fact to the calculation of the value of b i . Note that all lines except one, which contains a n - i , will be reset.

In this way:

b i = a n - i ⋅ (ω 0 + ω 0 + ω 0 + ω 0 + ... )

')

b i = a n - i ⋅ n

Q.E.D.

Application

Generally speaking, I already talked a little about the application at the beginning of the article. In particular, now the multiplication of polynomials can be performed as follows:

Fast polynomial multiplication

vcd a, b; // // vcd a_vals = fft(a); vcd b_vals = fft(b); vcd c_vals(a_vals.size()); for (int i = 0; i < a_vals.size(); i++) c_vals[i] = a_vals[i] * b_vals[i]; vcd c = fft_rev(c_vals); // It is easy to see that the running time of this program is O ( n ⋅log 2 n ) and the most time consuming operations are Fourier transforms. It can also be noted that if we need to calculate a more complex expression with two polynomials, then still we can perform only three transformations - addition and subtraction will also work in linear time. Unfortunately, the division is not so simple, because the polynomial may accidentally take the value 0 at any of the points. UPD2: do not forget that the degree of the product of two polynomials of degree n will be equal to 2n , so when entering you should add “extra” zero leading coefficients.

If you represent a number in decimal (or more) number system, as a polynomial with coefficients - numbers, the multiplication of long numbers can also be done very quickly.

And finally, the task I will discuss in the following post: you have two lines of the same length of the order of 1 0 5 from the letters A, T, G, C. It is required to find such a cyclic shift of one of the lines so that the maximum number of characters matches. Obviously a naive solution for O ( n 2 ), but there is a solution with the help of FFT.

Good luck!

UPD: Laid out the code entirely on pastebin

Source: https://habr.com/ru/post/113642/

All Articles