RAID-4 / RAID-DP - turning disadvantages into advantages

When I wrote in my last post that Snapshots were the main feature of NetApp systems when they first appeared, I lied a little (and partly just forgot), because at that time they had at least one more feature that markedly set them apart from “traditional” storage systems: the use of “RAID type 4”.

This is all the more interesting because no one else uses this type of RAID in disk storage systems.

Why was this type chosen, what are its advantages, and why does no one else use this type of RAID today?

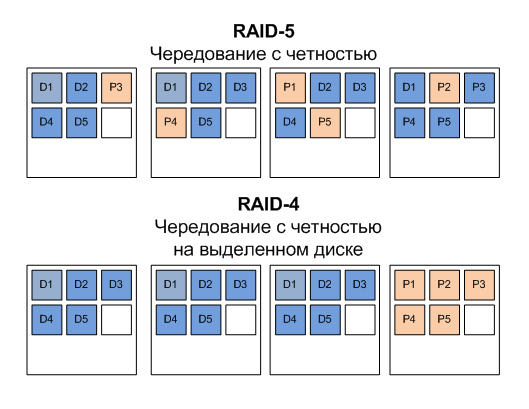

For those unfamiliar with RAID theory, a reminder. Of the six standard RAID types described in the scientific paper that introduced them, RAID type 2 (protected by a Hamming code) was never used “in the wild” and remained an amusing theoretical exercise. RAID-1 (and its variant RAID-10, sometimes built as RAID-0+1) protects data by automatic synchronous mirroring across a pair (or several pairs) of physical disks. RAID-3, 4, and 5 (RAID type 6 was added later) are the so-called "RAID with interleaving and parity". They differ from one another in how parity data is organized and stored, and in how data is interleaved. In the well-known RAID-5, data is interleaved across the disks together with the parity information, so parity does not occupy a dedicated disk.

In RAID-3 and 4, a separate disk is dedicated to parity information. (The difference between types 3 and 4 is the size of the interleaved unit: a sector in type 3, and a block, that is, a group of sectors, in type 4.)

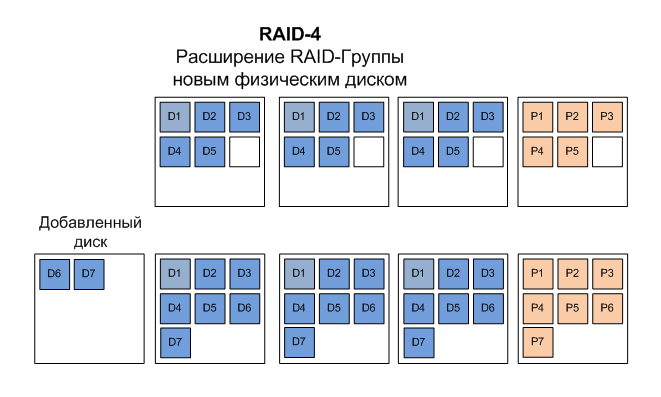

RAID-4, that is, “block-wise RAID with parity on a dedicated disk,” has one important advantage. It can be grown simply by adding physical disks, with no need to rebuild the entire array, as is the case with RAID-5, for example. If you use five disks for data (and one for parity) in RAID-4, you can increase its capacity by simply adding one, two, or more disks, and the capacity of the array immediately grows by the capacity of the added disks. No lengthy rebuild, during which the array is unavailable, is needed as with RAID-5. This is an obvious advantage, and a very convenient one in practice.
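Why adding a disk does not invalidate the existing parity can be seen from a small sketch. It assumes plain XOR parity and that the new disk arrives filled with zeros; the block contents and the helper name `xor_parity` are made up for illustration and are not taken from any NetApp code.

```python
from functools import reduce

def xor_parity(blocks):
    """XOR equal-sized byte blocks together to get the parity block."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# One stripe across three hypothetical data disks (4-byte blocks for brevity).
stripe = [b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xaa\xbb\xcc\xdd"]
parity_before = xor_parity(stripe)

# Grow the array: the new disk is assumed to be zero-filled.
stripe_grown = stripe + [bytes(4)]
parity_after = xor_parity(stripe_grown)

# XOR with zero changes nothing, so the dedicated parity disk is still valid.
print(parity_before == parity_after)  # True
```

With rotating parity, as in RAID-5, the layout of data and parity blocks itself depends on the number of disks, which is why growing such an array means redistributing its contents.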


Why, then, not use RAID-4 everywhere instead of RAID-5?

It has one problem, but a very serious one: write performance.

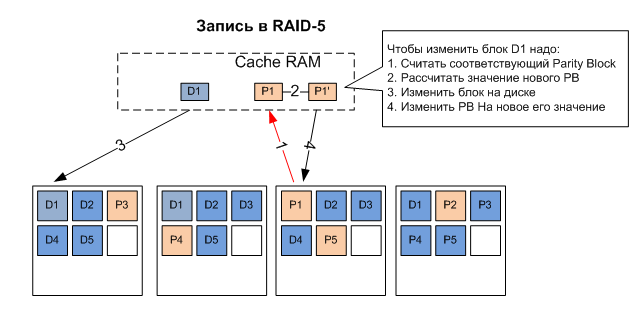

The point is that every block write to the data disks is accompanied by a write that updates the parity block on the parity disk. This means that no matter how many disks we add to the array to parallelize I/O across, everything still comes down to a single disk: the parity disk. In effect, the performance of RAID-4 in its "classic" form is limited by the performance of the parity disk. Until that disk has written the updated parity blocks, all the other disks holding data spin idle, waiting for the operation to complete.
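The bottleneck is easy to see by counting writes per disk. The sketch below is a toy model, not a description of any real controller: it assumes each update touches one data block and one parity block, and the rotating layout used for comparison is just one simple way to spread parity across disks.

```python
from collections import Counter

def writes_per_disk(block_numbers, data_disks, rotate_parity):
    """Count physical writes per disk for single-block updates.

    Disks are numbered 0..data_disks. With rotate_parity=False the last
    disk is the dedicated parity disk (RAID-4 style); otherwise the parity
    position shifts by one disk per stripe (a simple RAID-5 style layout).
    """
    total_disks = data_disks + 1
    counts = Counter()
    for block in block_numbers:
        stripe, offset = divmod(block, data_disks)
        if rotate_parity:
            parity_disk = (total_disks - 1 - stripe) % total_disks
        else:
            parity_disk = total_disks - 1
        # The data blocks of a stripe occupy the remaining disks, in order.
        data_slots = [d for d in range(total_disks) if d != parity_disk]
        counts[data_slots[offset]] += 1   # the data block itself
        counts[parity_disk] += 1          # the accompanying parity update
    return counts

updates = list(range(0, 600, 7))          # scattered single-block updates
raid4 = writes_per_disk(updates, data_disks=5, rotate_parity=False)
raid5 = writes_per_disk(updates, data_disks=5, rotate_parity=True)

print("updates:", len(updates))
print("busiest disk, dedicated parity:", max(raid4.values()))
print("busiest disk, rotating parity: ", max(raid5.values()))
```

In the dedicated-parity case the busiest disk receives exactly one write per update, however many data disks there are; with rotating parity the same load is spread over all disks.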

This problem was partially solved in RAID-5, where parity blocks are distributed across the disks just like data blocks, which relieves the parity-disk bottleneck. That does not mean, however, that RAID-5 is free of drawbacks. Quite the opposite: it is the worst of all the RAID types in wide use today, suffering from both unreliability and poor performance, as I wrote in the article “Why is RAID-5 a mustdie?”.

Focusing on performance specifically (for reliability, see the article mentioned above), it should be noted that RAID-5 has one very serious “birth defect”, structurally inherent in this whole family of RAID types (3, 4, 5, and 6, “interleaved with parity”): low performance on random writes. This matters a great deal in real life, since random writes make up a significant share of overall storage traffic (reaching 30-50%), and a drop in write performance directly affects the performance of the storage system as a whole.

This problem afflicts, I repeat, all “classic” storage systems that use these RAID types (3, 4, 5, and 6).

All except NetApp.

How did NetApp manage to solve both the parity-disk “bottleneck” and the poor random-write performance at once?
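To make the random-write penalty concrete, here is a minimal sketch of an in-place update of a single block in a parity RAID. It follows the usual textbook accounting (two reads and two writes per small update); the function names and the tiny two-byte "blocks" are illustrative assumptions.

```python
def xor_blocks(a, b):
    """Byte-wise XOR of two equal-sized blocks."""
    return bytes(x ^ y for x, y in zip(a, b))

def small_write(disks, data_idx, parity_idx, new_data):
    """Update one block in place and return the list of disk operations."""
    ops = []
    old_data = disks[data_idx]
    ops.append("read old data")
    old_parity = disks[parity_idx]
    ops.append("read old parity")
    # New parity = old parity with the old data removed and the new data added.
    new_parity = xor_blocks(xor_blocks(old_parity, old_data), new_data)
    disks[data_idx] = new_data
    ops.append("write new data")
    disks[parity_idx] = new_parity
    ops.append("write new parity")
    return ops

# Three data blocks plus their parity block (index 3).
disks = [b"\x01\x01", b"\x02\x02", b"\x04\x04", b"\x07\x07"]
ops = small_write(disks, data_idx=1, parity_idx=3, new_data=b"\xff\xff")

print(len(ops), "disk operations for one logical write")  # 4 disk operations ...
print(disks[3].hex())                                     # fafa
```

One logical write turns into several physical operations, and two of them must wait for a read of the same location to finish first.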

Here we again have to recall how WAFL is organized, which I have written about before.

As NetApp engineer Kostadis Roussos put it: “Almost every file system today sits on top of RAID. But only WAFL and RAID on NetApp know enough about each other to exploit each other's strengths and compensate for each other's weaknesses.” This is true, because for WAFL the RAID level is just one more logical level of data layout inside the file system itself. Let me remind you that NetApp does not use hardware RAID controllers, preferring to build RAID with its own file system (ZFS later chose a similar approach with its RAID-Z).

What does this mutual use of opportunities consist of?

As you remember from my post about WAFL, it is designed so that data, once written, is never overwritten in place. When the contents of an already written file must be changed, space is allocated from the pool of free blocks, the modified block is written there, and then the pointer that referred to the old block is switched to the new block, which was empty and now holds the new contents.
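The "write elsewhere, then switch the pointer" idea can be sketched as a toy model. This is only an illustration of the principle described above; the class `ToyWriteAnywhereFile` and its fields are hypothetical and do not reflect WAFL's actual on-disk structures.

```python
class ToyWriteAnywhereFile:
    """A file as a list of pointers into a pool of physical blocks."""

    def __init__(self):
        self.storage = {}    # physical block number -> contents
        self.pointers = []   # logical block index -> physical block number
        self.next_free = 0   # next unused physical block (simplistic allocator)

    def _allocate(self, data):
        pbn = self.next_free
        self.next_free += 1
        self.storage[pbn] = data
        return pbn

    def append(self, data):
        self.pointers.append(self._allocate(data))

    def overwrite(self, logical_index, data):
        """Write the new contents to a free block, then repoint."""
        old_pbn = self.pointers[logical_index]
        self.pointers[logical_index] = self._allocate(data)
        return old_pbn       # the old block itself is left untouched

    def read(self, logical_index):
        return self.storage[self.pointers[logical_index]]

f = ToyWriteAnywhereFile()
f.append(b"block A")
f.append(b"block B")
old = f.overwrite(0, b"block A, version 2")

print(f.read(0))        # b'block A, version 2'
print(f.storage[old])   # b'block A'
print(f.pointers)       # [2, 1]
```

Note that new physical blocks are handed out one after another, regardless of which logical block is being changed. That is the property the write path relies on.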

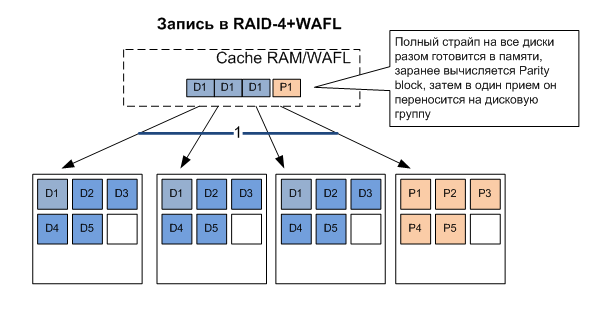

This write strategy lets us turn random writes into sequential ones. And if so, we can write as efficiently as possible: in a “full stripe”, prepared in advance by gathering all the “overwrites” aimed at different parts of the disks into one area convenient for writing. That is, instead of rewriting individual blocks, we write a ready-made “stripe” of data in one pass, to all the RAID disks at once, including the parity precomputed for the whole stripe, which goes to its own disk. Instead of three read-write operations, one.

It is no secret that sequential operations are much faster and more convenient for the system than random ones.

Writing a “full stripe”, that is, across all the interleaved RAID disks at once, is the most desirable write pattern for this type of RAID. This is why RAID controllers keep increasing their cache memory, which on high-end systems reaches very impressive sizes (and costs). The larger the write cache in a traditional array, and the longer a data block arriving from the server sits in it, the better the chance that such blocks will eventually add up to a “full stripe” in the cache, which can then be flushed to the disks as efficiently as possible.

That is why the write-back caches of RAID controllers tend to flush data to disk as rarely as possible, and why they absolutely require battery backup to preserve the data blocks waiting their turn in the cache.
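A rough sketch of the full-stripe approach, under stated assumptions: XOR parity, five data disks, and the textbook cost of two reads plus two writes for each in-place block update. The helper names and the ten tiny sample blocks are invented for the example.

```python
from functools import reduce

DATA_DISKS = 5
IOS_PER_IN_PLACE_UPDATE = 4   # textbook accounting: 2 reads + 2 writes

def parity_of(blocks):
    """XOR parity over the data blocks of one stripe."""
    return bytes(reduce(lambda a, b: a ^ b, col) for col in zip(*blocks))

def build_full_stripes(pending_blocks, data_disks):
    """Group pending modified blocks into complete stripes with their parity."""
    stripes = []
    for i in range(0, len(pending_blocks) - data_disks + 1, data_disks):
        data = pending_blocks[i:i + data_disks]
        stripes.append((data, parity_of(data)))   # parity computed in memory
    return stripes

# Ten modified blocks that would otherwise land in ten scattered places.
pending = [bytes([n]) * 4 for n in range(1, 11)]
stripes = build_full_stripes(pending, DATA_DISKS)

in_place_ios = len(pending) * IOS_PER_IN_PLACE_UPDATE
full_stripe_ios = len(stripes) * (DATA_DISKS + 1)   # data + parity, no reads

print(in_place_ios, full_stripe_ios)  # 40 12
```

Because the parity is computed in memory from data that is already at hand, nothing has to be read back from the disks, and the parity disk receives one write per stripe rather than one per block.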

As disk capacities grew, NetApp, like everyone else, faced the need to make RAID data storage more reliable. For this reason, since 2005, RAID-DP, NetApp's implementation of RAID-6, has been the recommended, preferred, and generally “default” configuration. Here NetApp also stands out, because RAID-DP, which like RAID-6 protects against data loss when two disks fail at once (for example, a failure during the rebuild of a previously failed disk), does not degrade performance compared with RAID-5 or RAID-10, unlike RAID-6.

The reasons are the same. For "other vendors", the situation with RAID-6 is even worse than with RAID-5. It is believed that RAID-6 performance is 10-15% lower than RAID-5 and 25-35% lower than RAID-10. Now the read-and-rewrite of parity has to be carried out not for one parity block but for two, computed over two different groups of blocks.

RAID-DP, however, does not need this, for the same reason as before: random writes to arbitrary places in the array are transformed by WAFL into sequential writes to pre-allocated space, and such writes are much faster and cheaper.

That the use of RAID-4 (and its variant RAID-DP, the analog of RAID-6) on NetApp systems does not lead to any objective performance degradation is confirmed by authoritative performance tests of SAN disk systems (Storage Performance Council, SPC-1 / SPC-1E) and NAS (SPECsfs2008), which NetApp runs on RAID-4 (and, since 2005, on RAID-DP).

Moreover, NetApp systems with RAID-DP compete on equal terms with RAID-10 systems, that is, they show performance significantly higher than is usual for “RAID with interleaving and parity”, while retaining high space efficiency. As you know, RAID-10 leaves only 50% of the purchased capacity usable for data, whereas RAID-DP, with higher reliability and speed comparable to RAID-10, makes more than 87% of the capacity available for data at a standard group size.
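The space arithmetic can be checked in a few lines. The group size of 16 disks (14 data plus 2 parity) is an assumption made here for illustration; the text above only says "a standard group size".

```python
def usable_fraction(total_disks, redundancy_disks):
    """Share of raw capacity left for data, assuming equal-sized disks."""
    return (total_disks - redundancy_disks) / total_disks

raid_dp = usable_fraction(16, 2)   # assumed group: 14 data + 2 parity disks
raid_10 = usable_fraction(16, 8)   # every disk has a mirror copy

print(f"RAID-DP: {raid_dp:.1%}, RAID-10: {raid_10:.1%}")
# RAID-DP: 87.5%, RAID-10: 50.0%
```

Smaller groups give a lower figure (for example, 6 data plus 2 parity disks leave 75%), so the result depends on the group size actually configured.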

Thus, the ingenious combination of two features, RAID-4 and the WAFL write mode, made it possible to take advantage of both while getting rid of their disadvantages. And the further development of RAID-4 into RAID-DP, which protects against double disk failure, has increased the reliability of data storage without sacrificing the traditionally high performance. And this is no small thing. Suffice it to say that RAID-6 (similar to RAID-DP, with an equivalently high level of protection), because of its poor write performance, is neither used in practice nor recommended for primary data by any other storage vendor.

How do you become rich, strong, and healthy all at once (and pay nothing for it)? How do you ensure a high degree of protection against failure without sacrificing either performance or disk space?

NetApp knows the answer.

Contact its partner companies ;)

UPD: starring in the article's cover photo is a NetApp FAS2020A from the farm of Habr user foboss.

Source: https://habr.com/ru/post/113427/
